No More Silos: Integrating Databases and Apache Kafka

A presentation at UKOUG 2018 in Liverpool, UK by Robin Moffatt

@rmoff #ukoug_tech18 Integrating Databases and Apache Kafka Robin Moffatt, Developer Advocate @ Confluent

Photo by Emily Morter on Unsplash

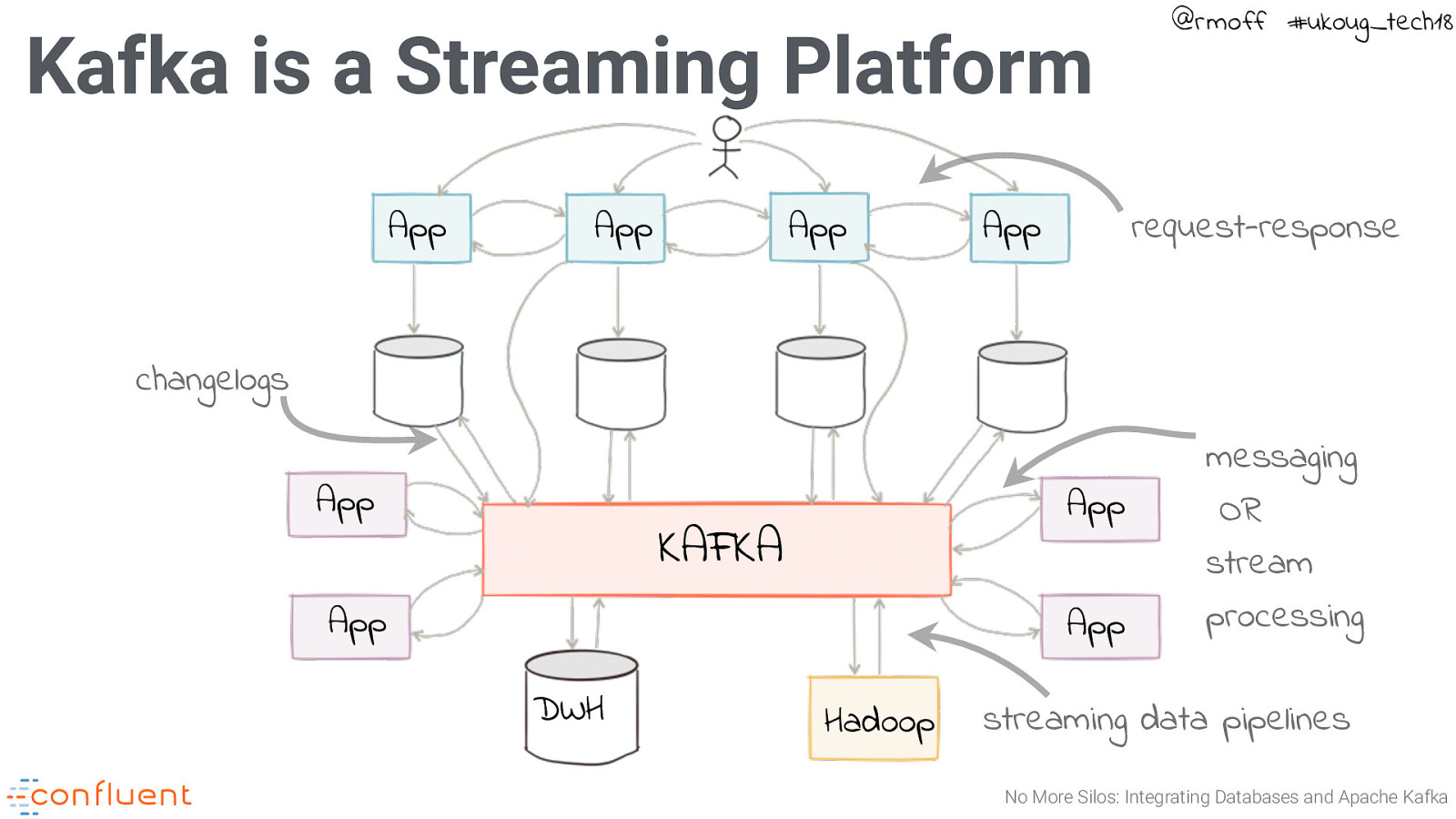

Kafka is a Streaming Platform

(Diagram labels: App, request-response, changelogs, KAFKA, messaging OR stream processing, streaming data pipelines, DWH, Hadoop)

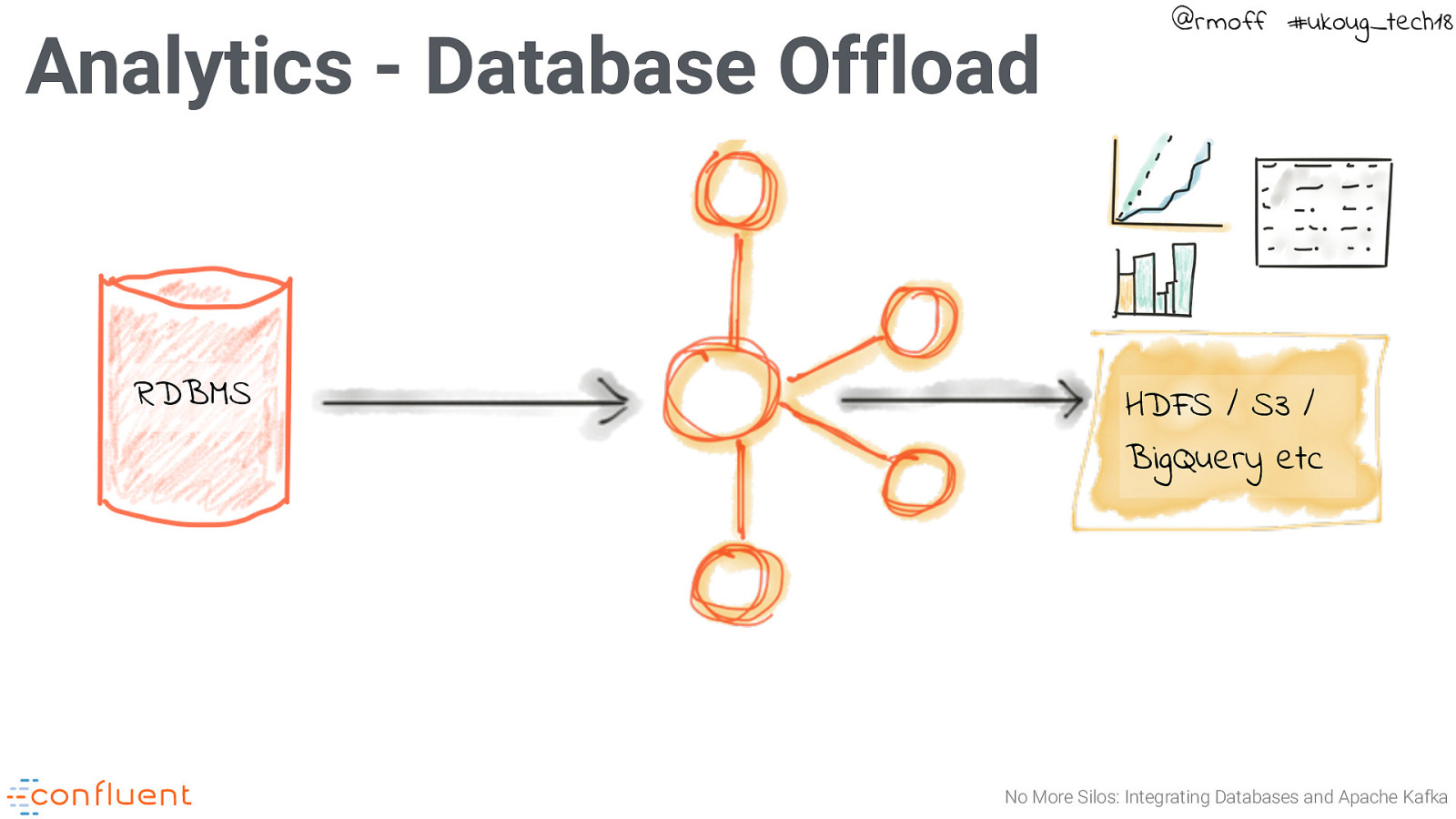

Analytics - Database Offload

(Diagram labels: RDBMS, HDFS / S3 / BigQuery etc)

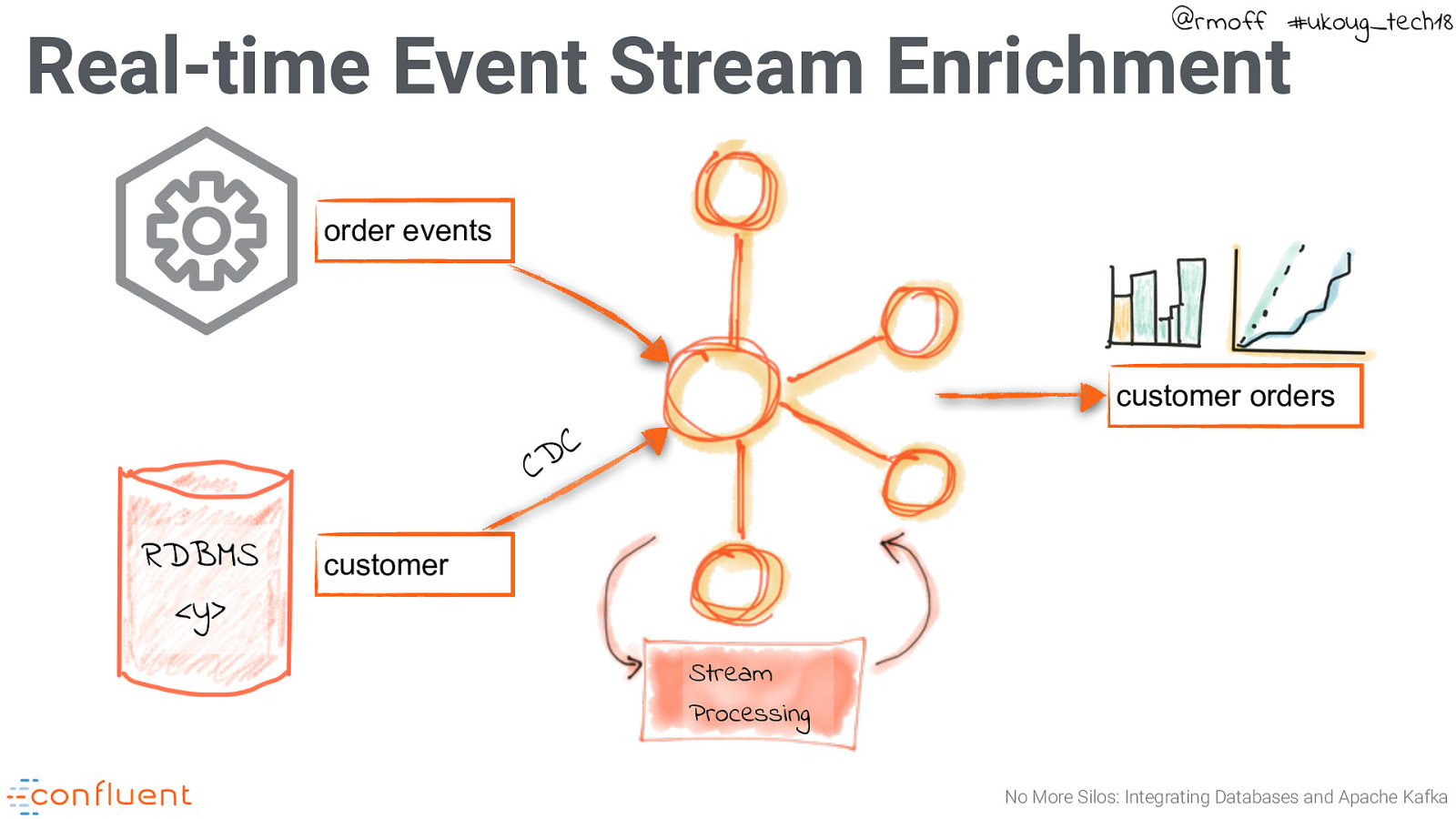

Real-time Event Stream Enrichment

(Diagram labels: order events, RDBMS, CDC, customer, Stream Processing, customer orders)

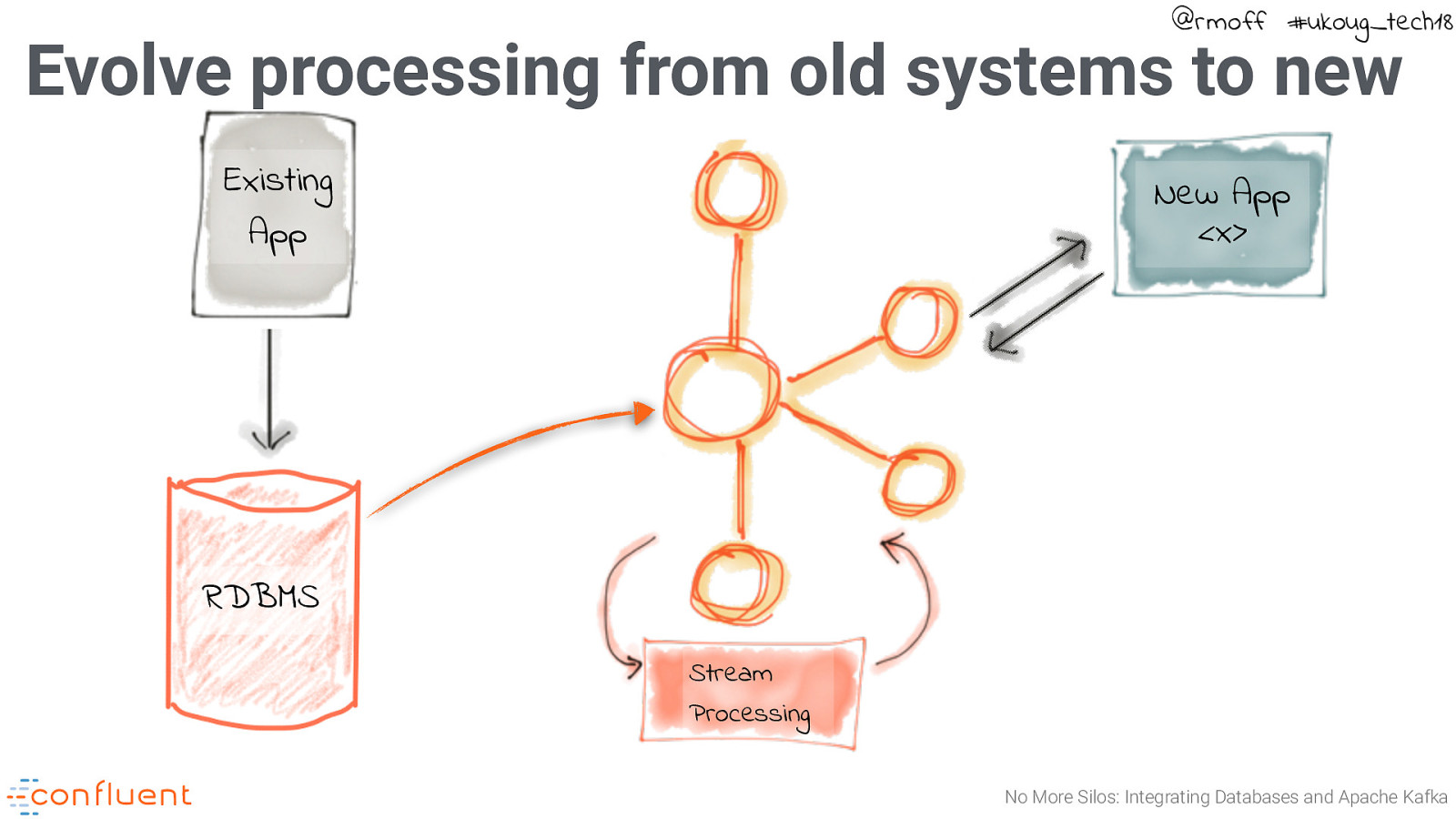

Evolve processing from old systems to new

(Diagram labels: Existing App, RDBMS, Stream Processing, New App)

“But streaming… I’ve just got data in a database… right?”

“Bold claim: all your data is event streams”

A Customer Experience

A Sale

A Sensor Reading

An Application Log Entry

Databases

Do you think that’s a table you are querying?

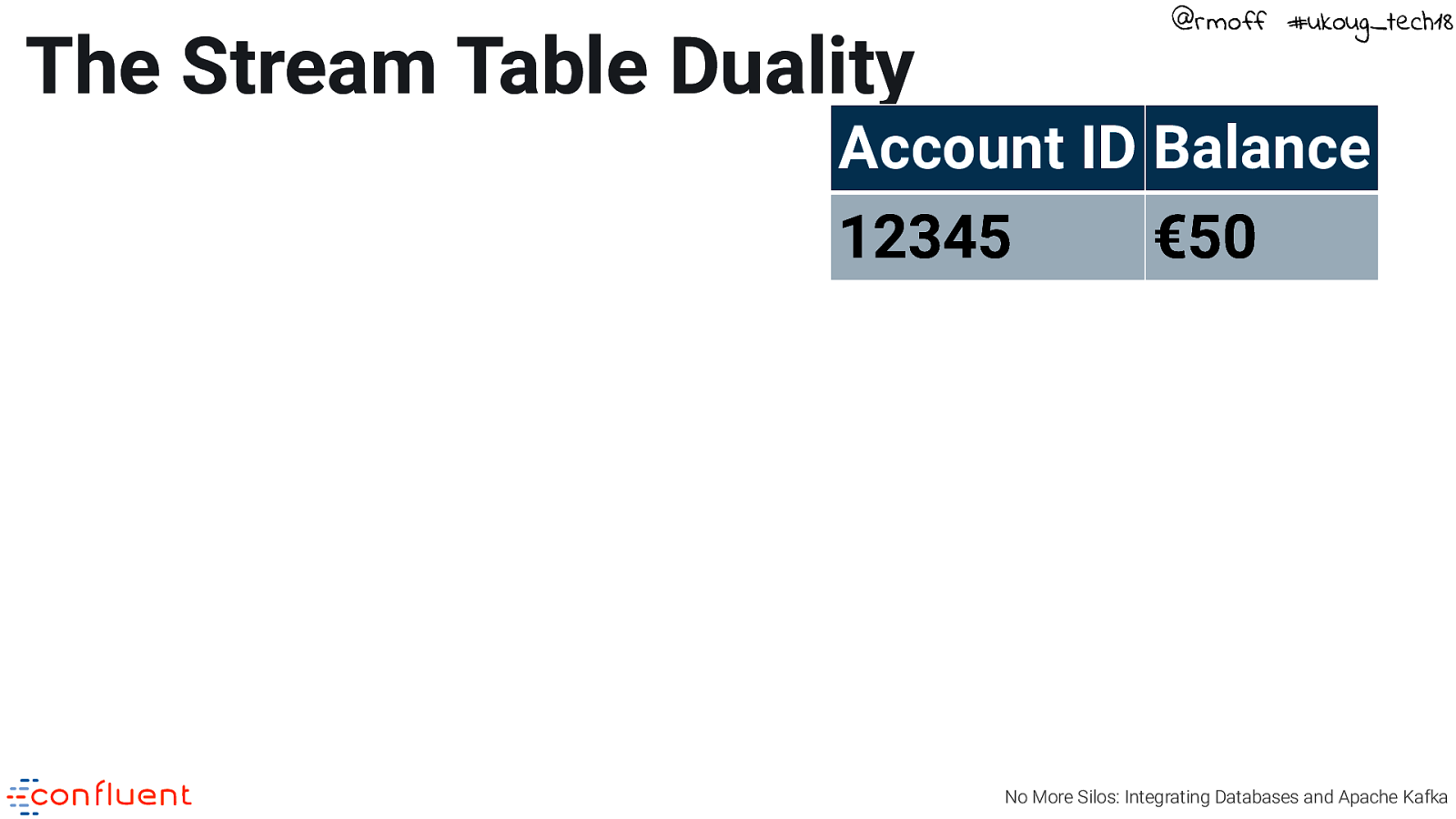

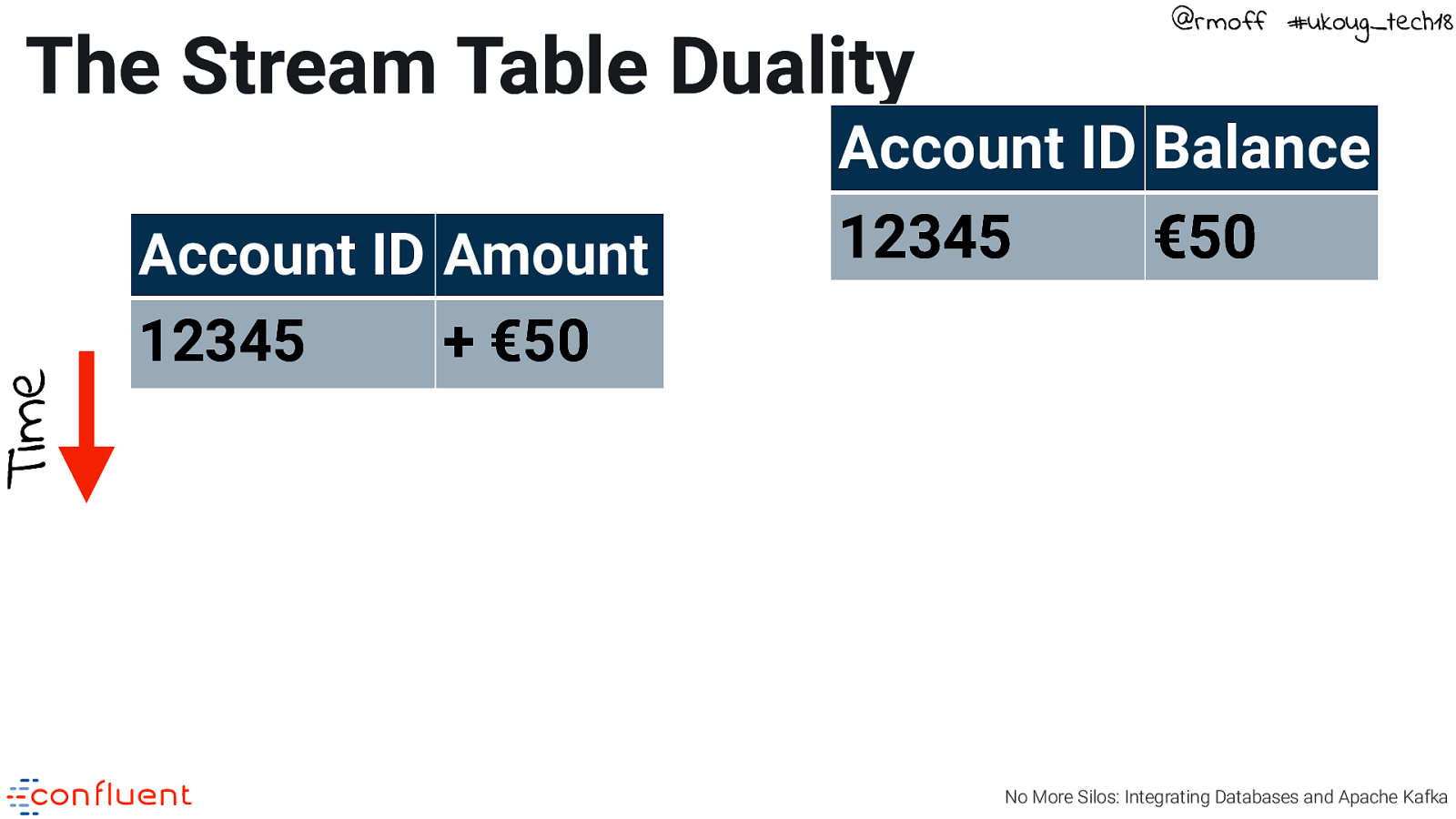

The Stream Table Duality

| Account ID | Balance |
|---|---|
| 12345 | €50 |

The Stream Table Duality

Stream (ordered by time):

| Account ID | Amount |
|---|---|
| 12345 | + €50 |

Table:

| Account ID | Balance |
|---|---|
| 12345 | €50 |

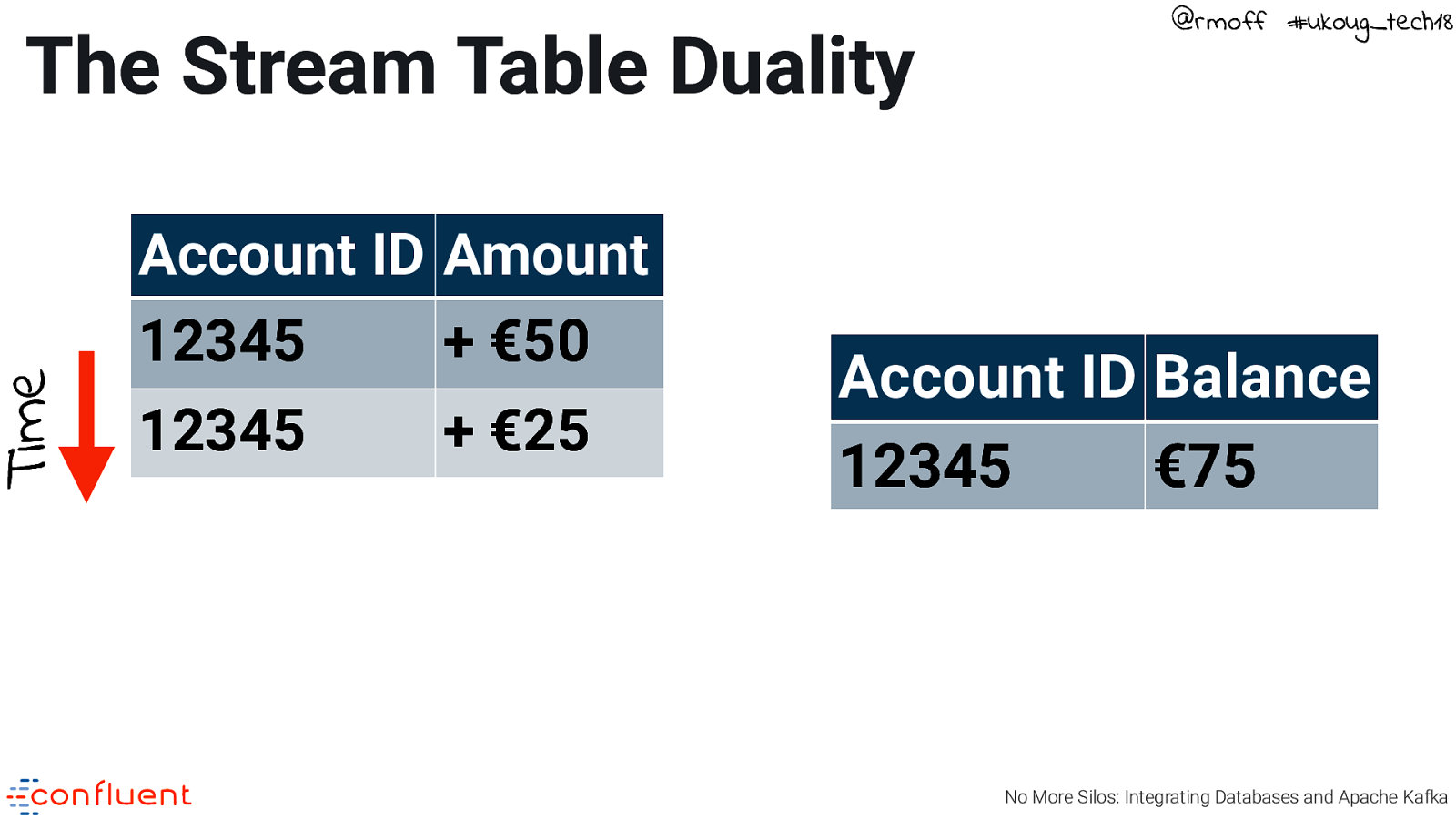

The Stream Table Duality

Stream (ordered by time):

| Account ID | Amount |
|---|---|
| 12345 | + €50 |
| 12345 | + €25 |

Table:

| Account ID | Balance |
|---|---|
| 12345 | €75 |

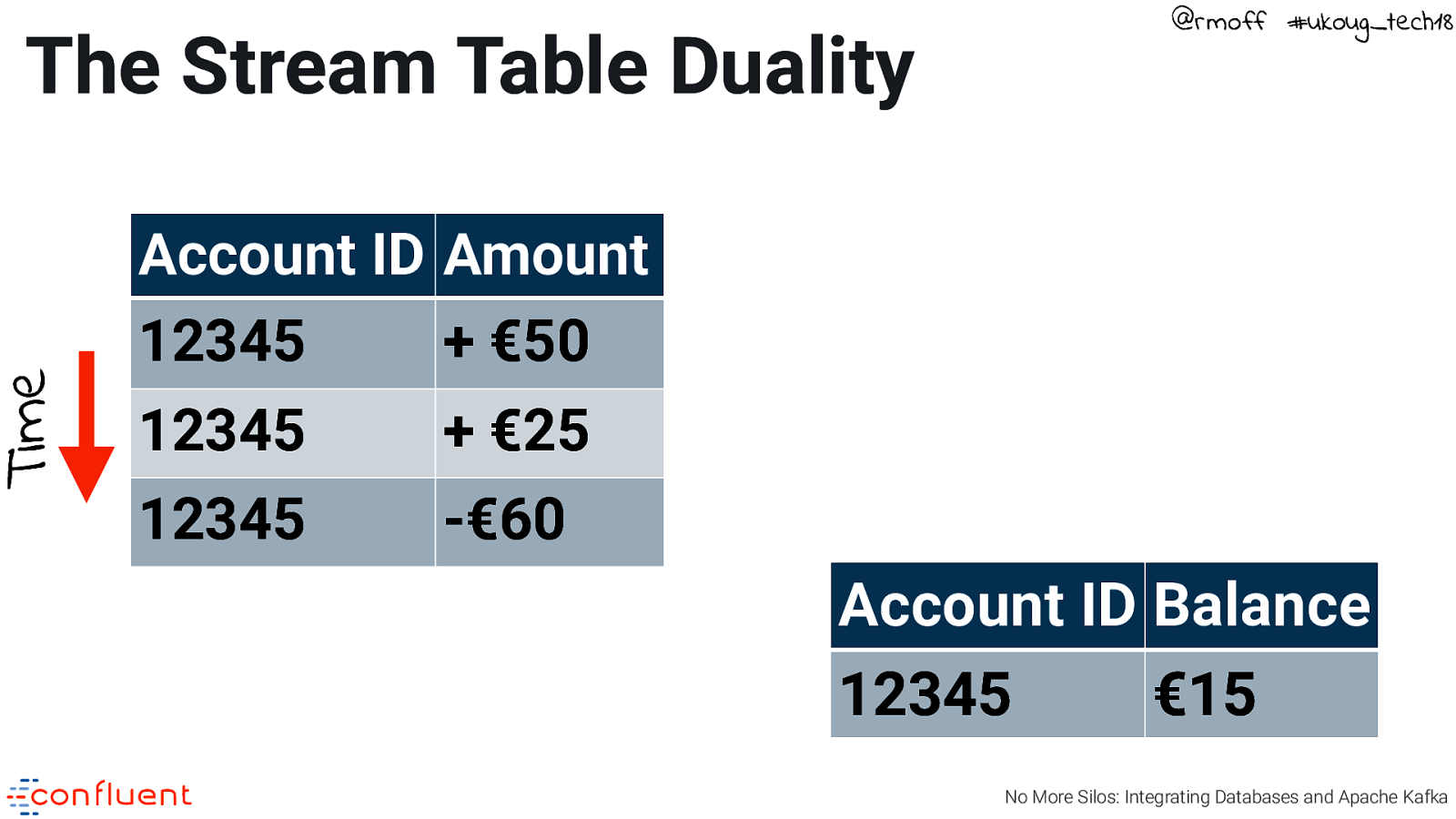

The Stream Table Duality

Stream (ordered by time):

| Account ID | Amount |
|---|---|
| 12345 | + €50 |
| 12345 | + €25 |
| 12345 | - €60 |

Table:

| Account ID | Balance |
|---|---|
| 12345 | €15 |

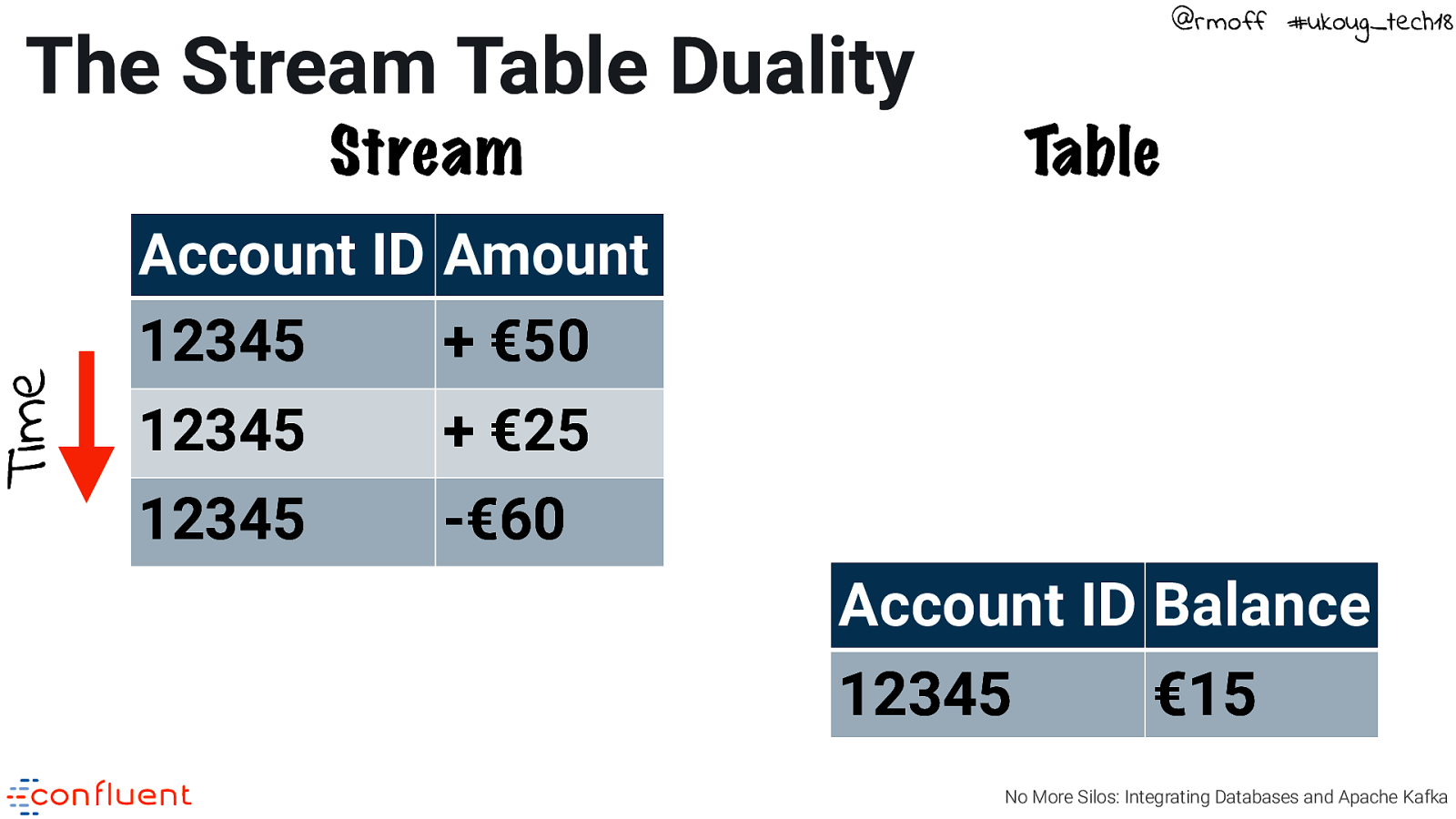

The Stream Table Duality

Stream (ordered by time):

| Account ID | Amount |
|---|---|
| 12345 | + €50 |
| 12345 | + €25 |
| 12345 | - €60 |

Table:

| Account ID | Balance |
|---|---|
| 12345 | €15 |
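The duality on these slides can be sketched in a few lines of Python. This is only an illustration, not how Kafka or KSQL implement it: the stream is an append-only list of changes, and the table is what you get by folding those changes in order. The event amounts are chosen to reproduce the balances shown on the slides (€50, €75, €15); the function name `materialise` is made up for this sketch.

```python
from collections import defaultdict

# The stream: an append-only sequence of (account_id, amount) events in time order.
# Amounts are assumed so that the running balance matches the slides: 50, 75, 15.
events = [
    ("12345", 50),
    ("12345", 25),
    ("12345", -60),
]


def materialise(stream):
    """Fold a stream of balance changes into a table of current balances.

    Returns the final table and a snapshot of the table after each event.
    """
    table = defaultdict(int)
    snapshots = []
    for account_id, amount in stream:
        table[account_id] += amount
        snapshots.append(dict(table))  # copy of the table state at this point in time
    return dict(table), snapshots


table, snapshots = materialise(events)
print(table)
for snapshot in snapshots:
    print(snapshot)
```

Replaying the same stream always rebuilds the same table, which is the sense in which the table is a view of the stream at a point in time.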

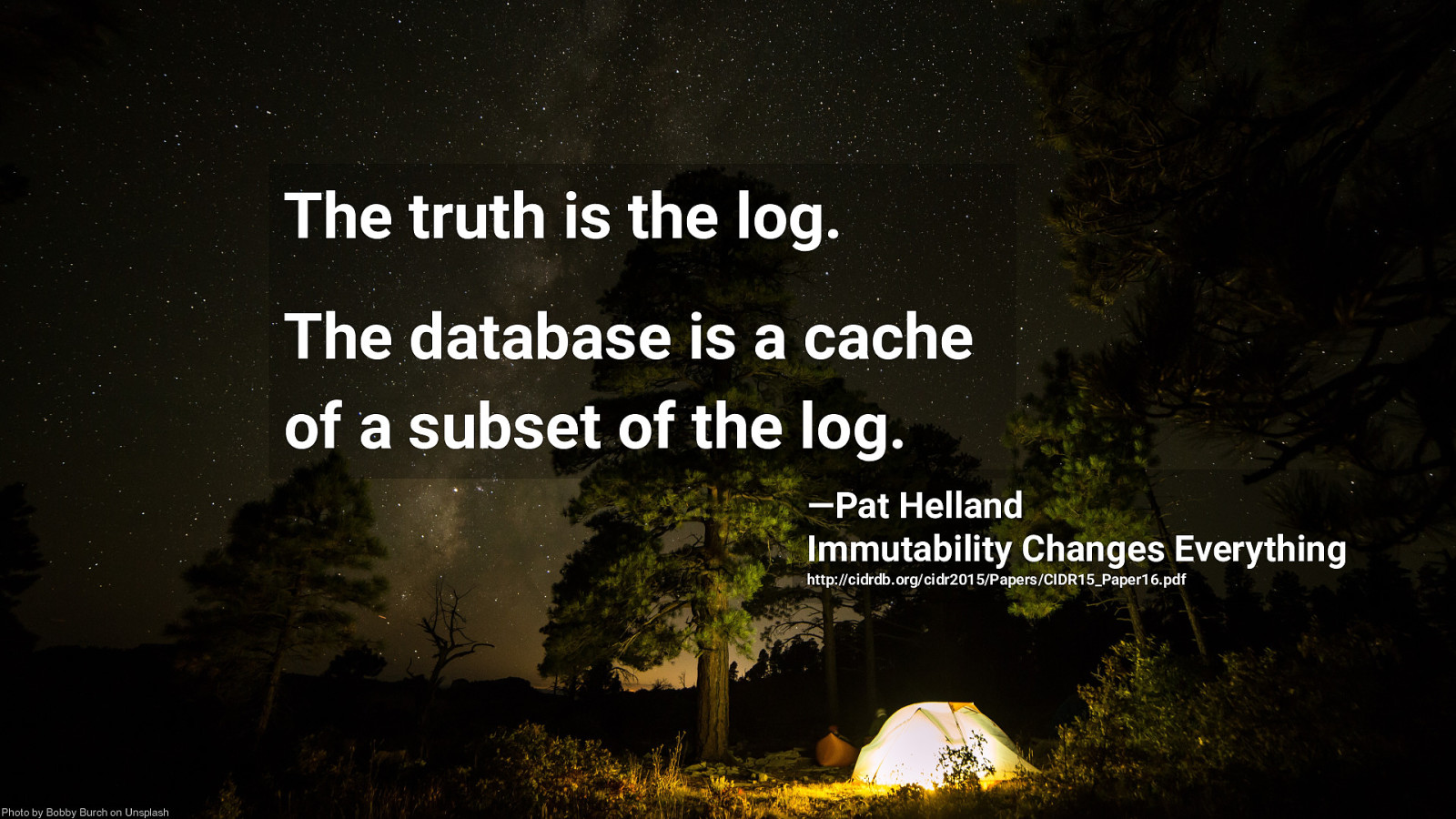

“The truth is the log. The database is a cache of a subset of the log.”

Pat Helland, Immutability Changes Everything: http://cidrdb.org/cidr2015/Papers/CIDR15_Paper16.pdf

Photo by Bobby Burch on Unsplash

KSQL is the Streaming SQL Engine for Apache Kafka

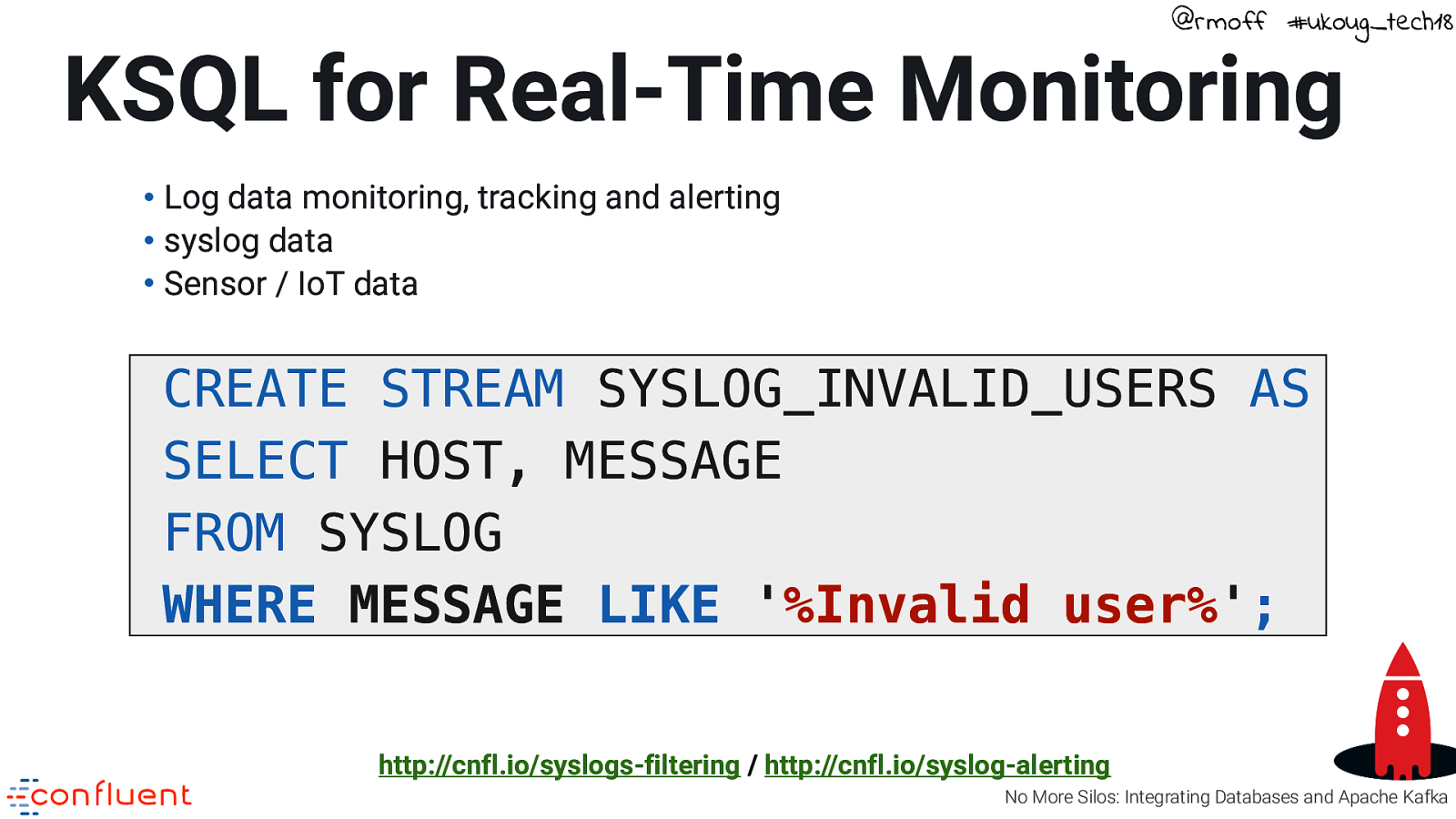

KSQL for Real-Time Monitoring

- Log data monitoring, tracking and alerting
- syslog data
- Sensor / IoT data

Example:

    CREATE STREAM SYSLOG_INVALID_USERS AS
      SELECT HOST, MESSAGE
      FROM SYSLOG
      WHERE MESSAGE LIKE '%Invalid user%';

http://cnfl.io/syslogs-filtering / http://cnfl.io/syslog-alerting

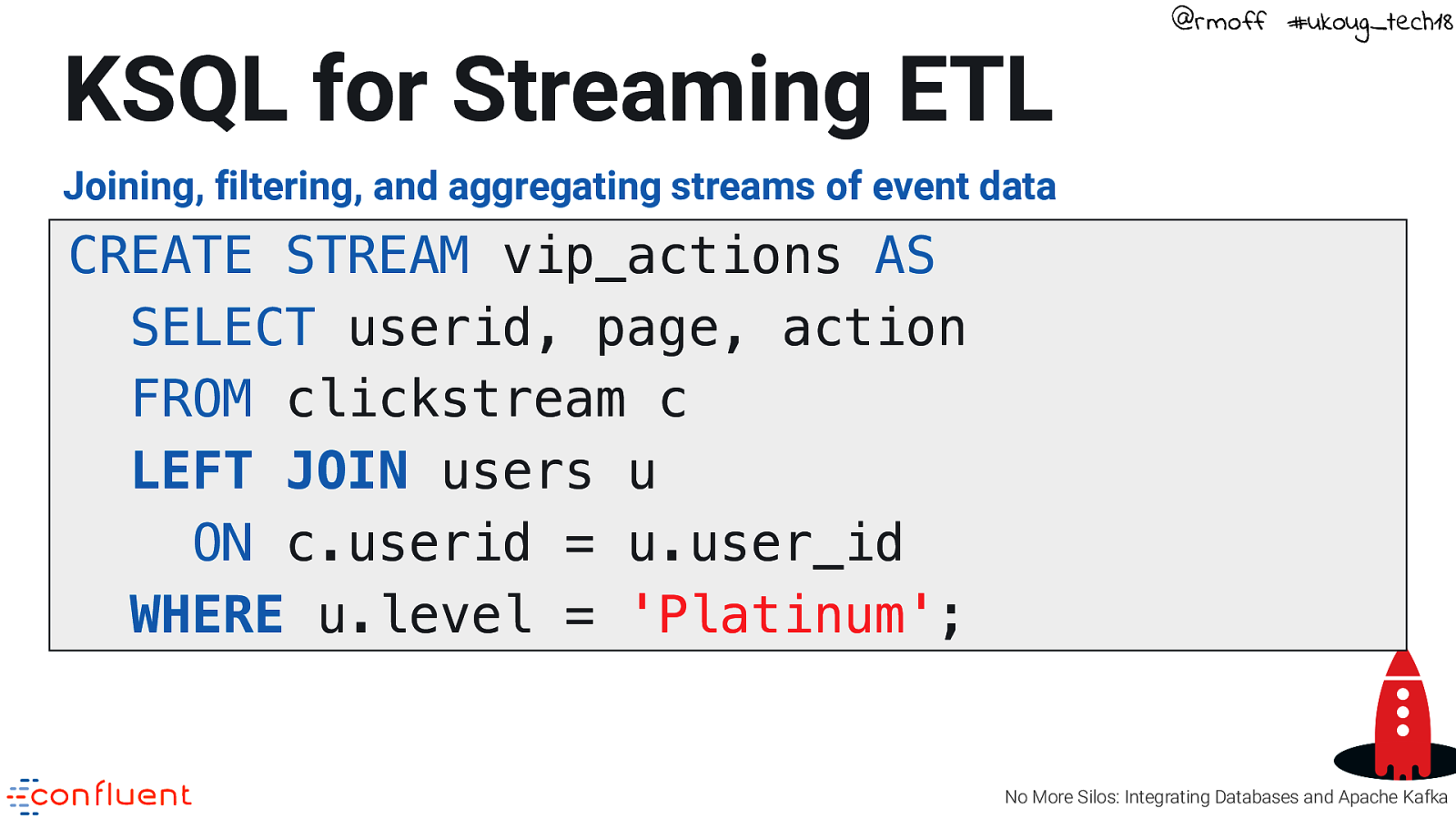

KSQL for Streaming ETL

Joining, filtering, and aggregating streams of event data

    CREATE STREAM vip_actions AS
      SELECT userid, page, action
      FROM clickstream c
      LEFT JOIN users u ON c.userid = u.user_id
      WHERE u.level = 'Platinum';
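To make the join on this slide concrete, here is a rough Python sketch of the same idea: each click event is looked up against a table of users as it arrives, and only events for Platinum users are emitted. It is not KSQL and does not talk to Kafka; the sample users and click events are invented for illustration.

```python
# Table: latest known state per user, keyed by user_id (hypothetical sample data).
users = {
    1: {"user_id": 1, "level": "Platinum"},
    2: {"user_id": 2, "level": "Gold"},
}

# Stream: click events in arrival order (hypothetical sample data).
clickstream = [
    {"userid": 1, "page": "/home", "action": "view"},
    {"userid": 2, "page": "/cart", "action": "add"},
    {"userid": 3, "page": "/home", "action": "view"},  # no matching user row
    {"userid": 1, "page": "/checkout", "action": "buy"},
]


def vip_actions(events, user_table):
    """Yield click events belonging to Platinum users, one event at a time."""
    for event in events:
        user = user_table.get(event["userid"])  # LEFT JOIN: user may be None
        # The WHERE clause on the user's level drops unmatched and non-Platinum rows.
        if user is not None and user["level"] == "Platinum":
            yield {
                "userid": event["userid"],
                "page": event["page"],
                "action": event["action"],
            }


result = list(vip_actions(clickstream, users))
print(result)
```

Because the generator handles one event at a time, the same logic applies whether the input is a finished list or a stream that never ends.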

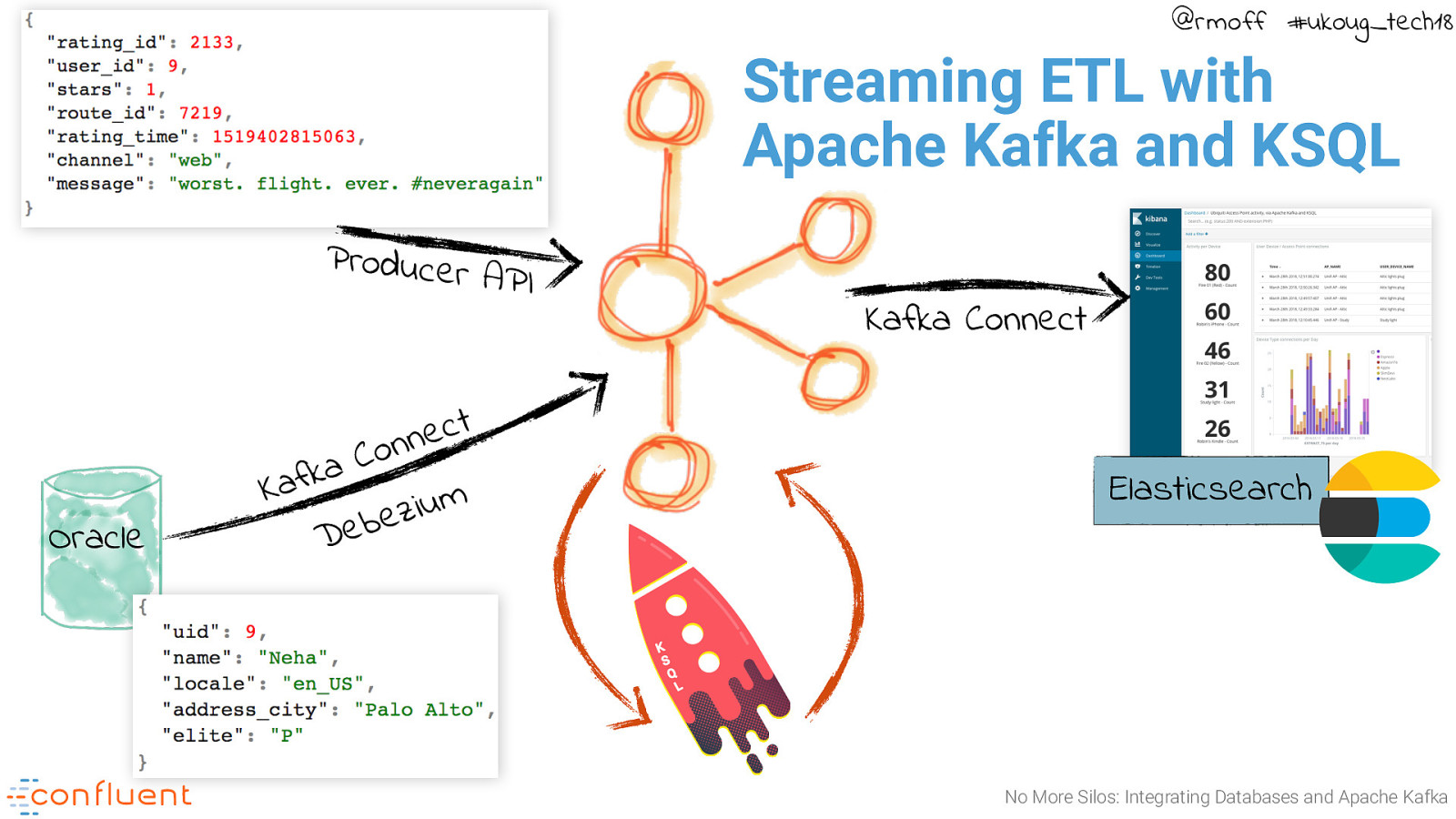

Streaming ETL with Apache Kafka and KSQL

(Diagram labels: Producer API, Oracle, Debezium, Kafka Connect, Elasticsearch)

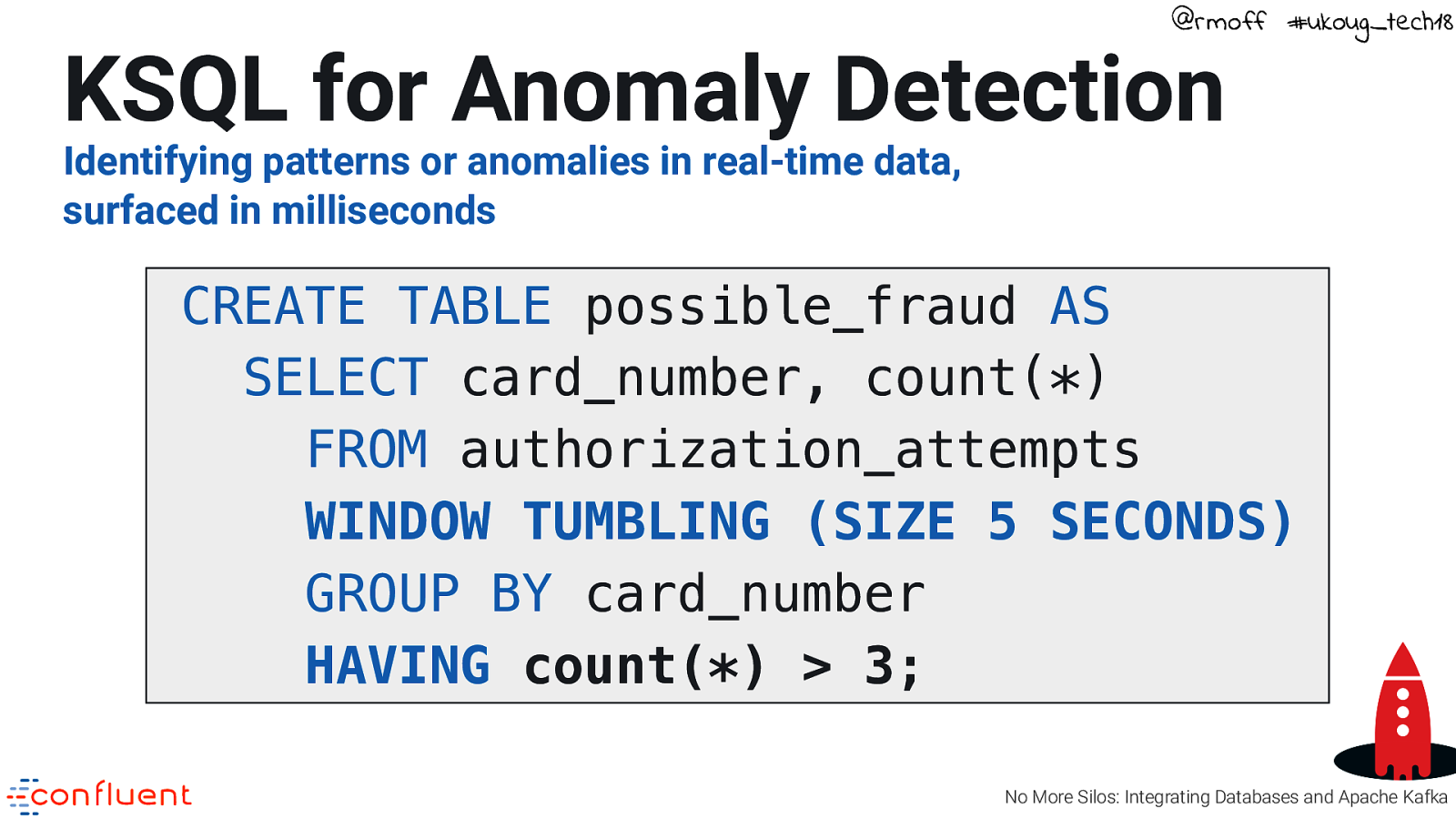

KSQL for Anomaly Detection

Identifying patterns or anomalies in real-time data, surfaced in milliseconds

    CREATE TABLE possible_fraud AS
      SELECT card_number, count(*)
      FROM authorization_attempts
      WINDOW TUMBLING (SIZE 5 SECONDS)
      GROUP BY card_number
      HAVING count(*) > 3;
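The windowed aggregation on this slide can be approximated in plain Python to show what a tumbling window does: every event falls into exactly one fixed-size, non-overlapping window, and counts are kept per card per window. This is a batch sketch over an in-memory list, not a streaming engine, and the timestamps and card numbers are made up.

```python
from collections import Counter

WINDOW_SIZE = 5  # seconds, matching SIZE 5 SECONDS on the slide

# (timestamp in seconds, card_number); hypothetical sample data.
attempts = [
    (0, "A"), (1, "A"), (2, "A"), (4, "A"),  # four attempts in window [0, 5)
    (3, "B"),
    (6, "A"), (7, "B"), (8, "B"),            # window [5, 10)
]


def possible_fraud(events, window_size=WINDOW_SIZE, threshold=3):
    """Count attempts per (card, window) and keep those above the threshold."""
    counts = Counter()
    for ts, card in events:
        # Tumbling windows: each timestamp maps to exactly one window start.
        window_start = (ts // window_size) * window_size
        counts[(card, window_start)] += 1
    return {key: n for key, n in counts.items() if n > threshold}


flagged = possible_fraud(attempts)
print(flagged)
```

Card "A" is flagged only for the window starting at 0; its single attempt at second 6 lands in the next window and is counted separately.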

Photo by Vadim Sherbakov on Unsplash

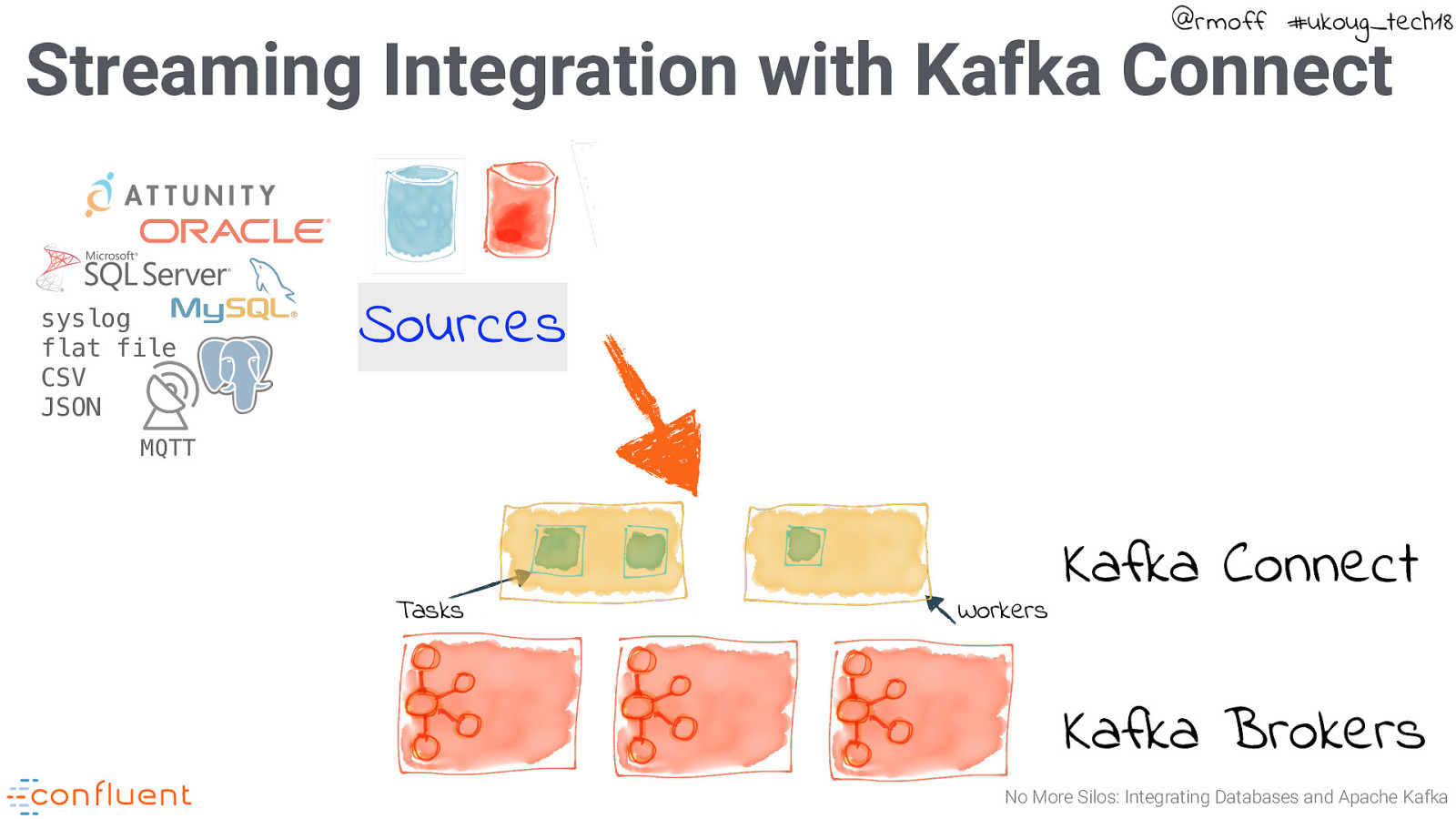

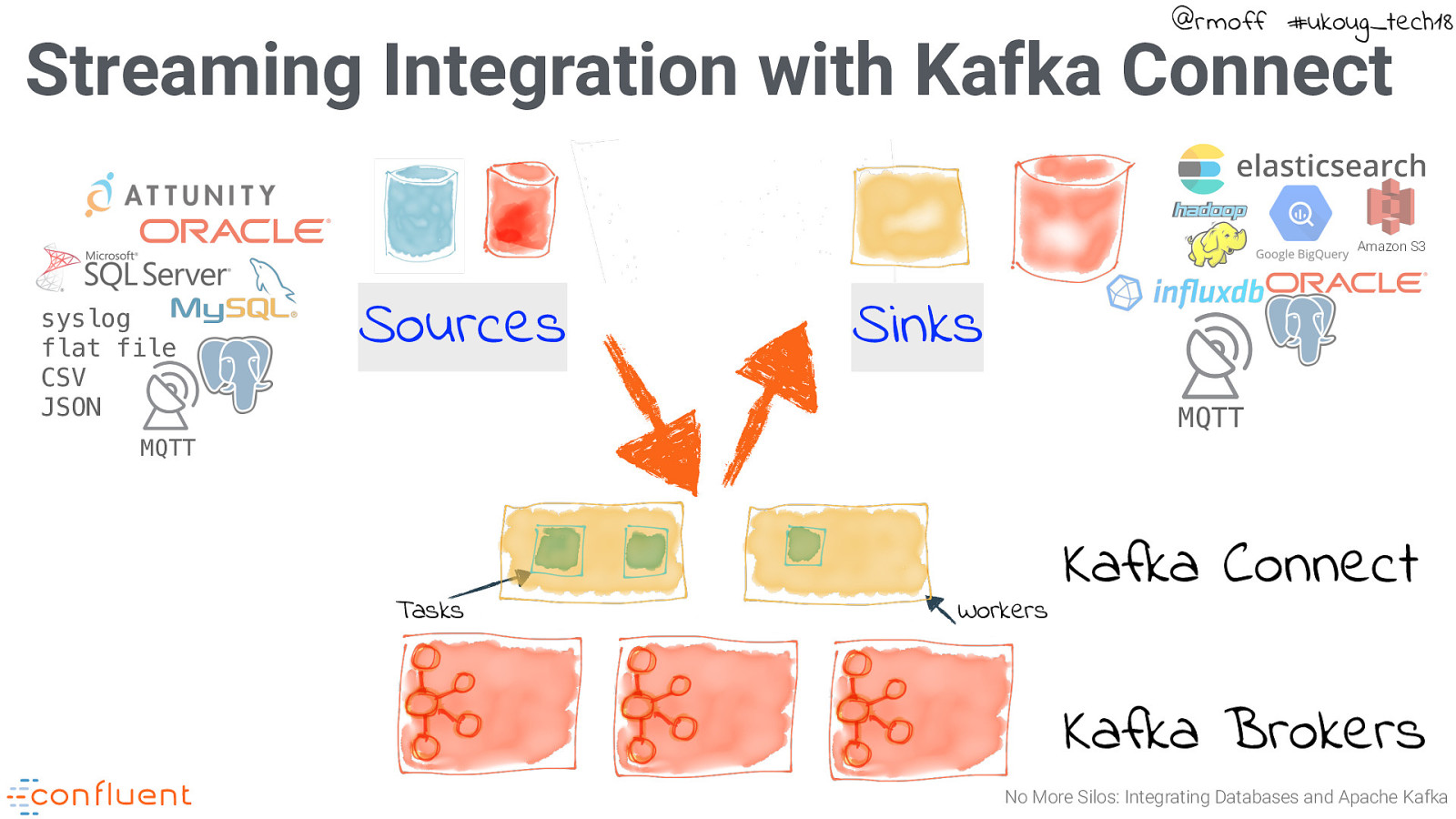

Streaming Integration with Kafka Connect

(Diagram labels: Sources: syslog, flat file, CSV, JSON, MQTT; Kafka Connect: Tasks, Workers; Kafka Brokers)

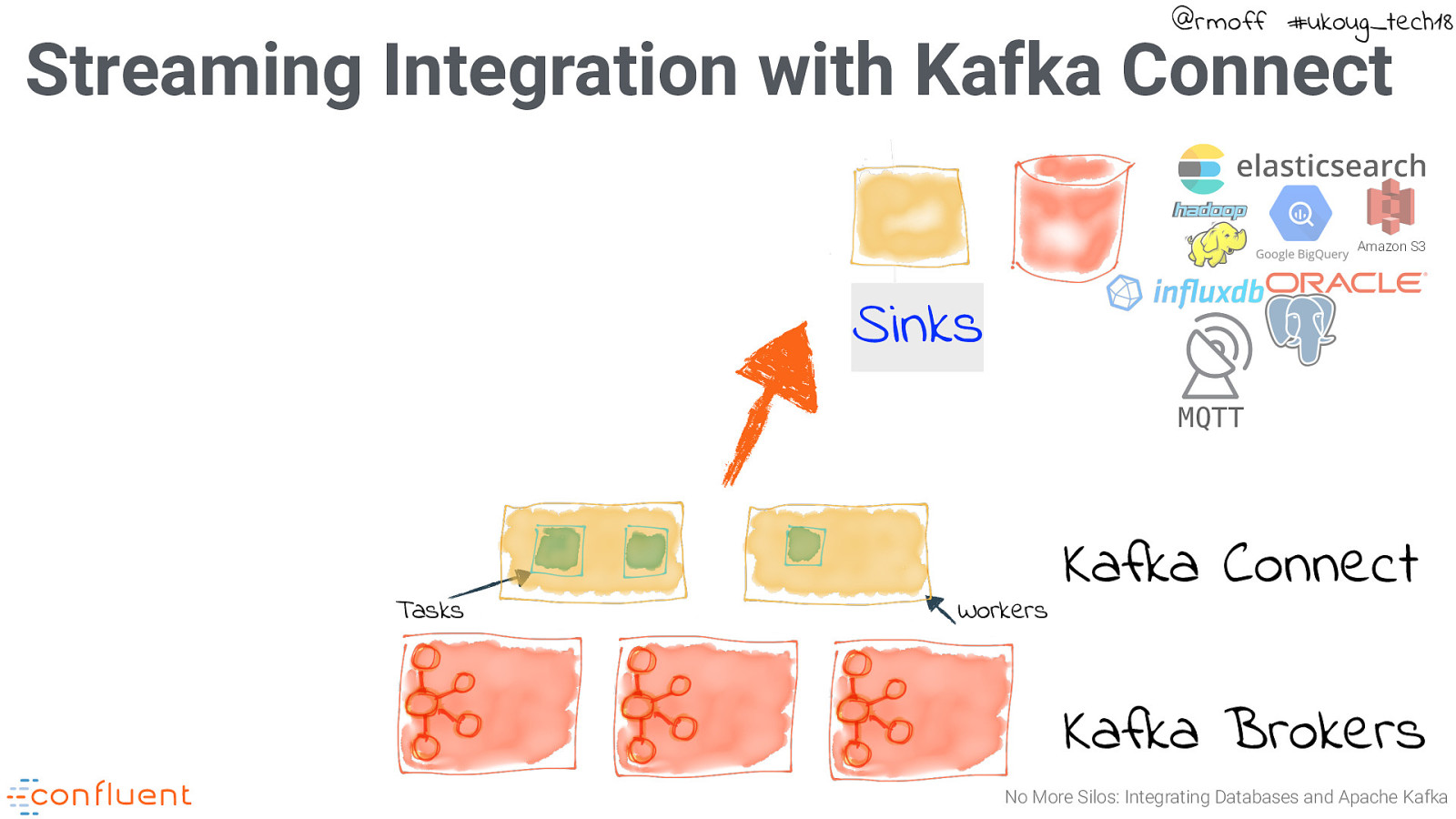

Streaming Integration with Kafka Connect

(Diagram labels: Kafka Brokers; Kafka Connect: Tasks, Workers; Sinks: Amazon S3, MQTT)

Streaming Integration with Kafka Connect

(Diagram labels: Sources: syslog, flat file, CSV, JSON, MQTT; Kafka Connect: Tasks, Workers; Kafka Brokers; Sinks: Amazon S3, MQTT)

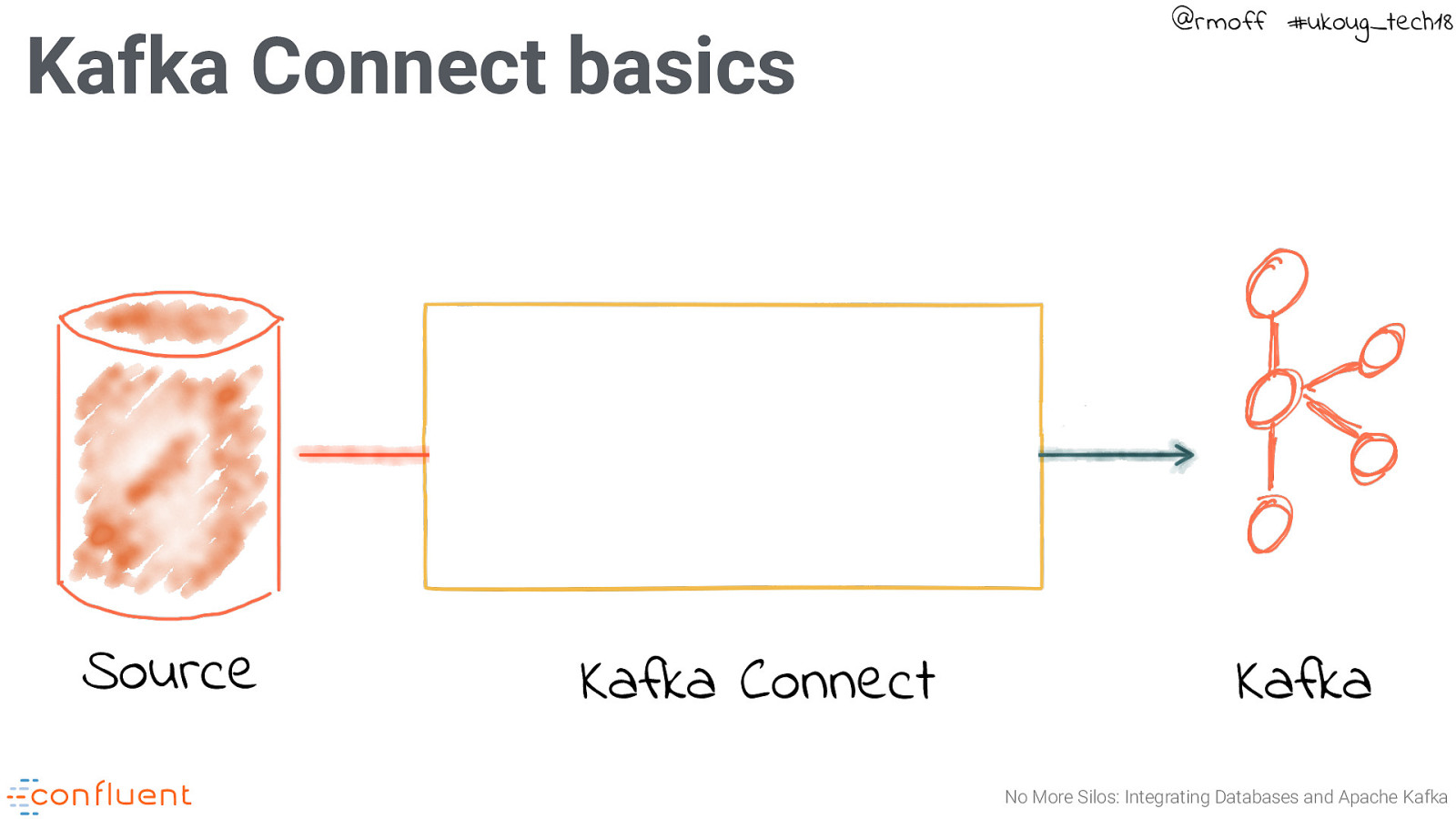

Kafka Connect basics

(Diagram labels: Source, Kafka Connect, Kafka)

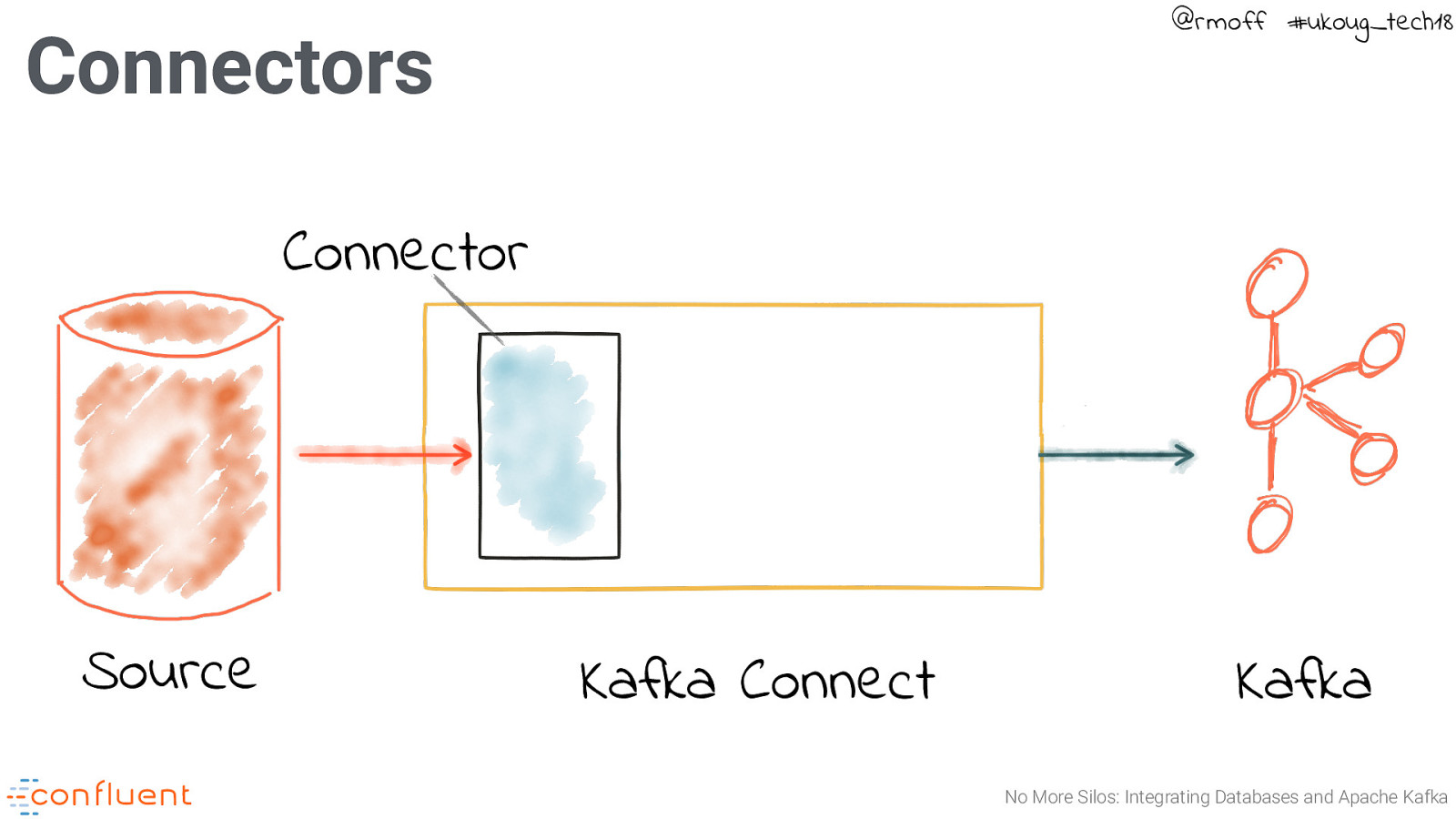

Connectors

(Diagram labels: Source, Kafka Connect containing Connector, Kafka)

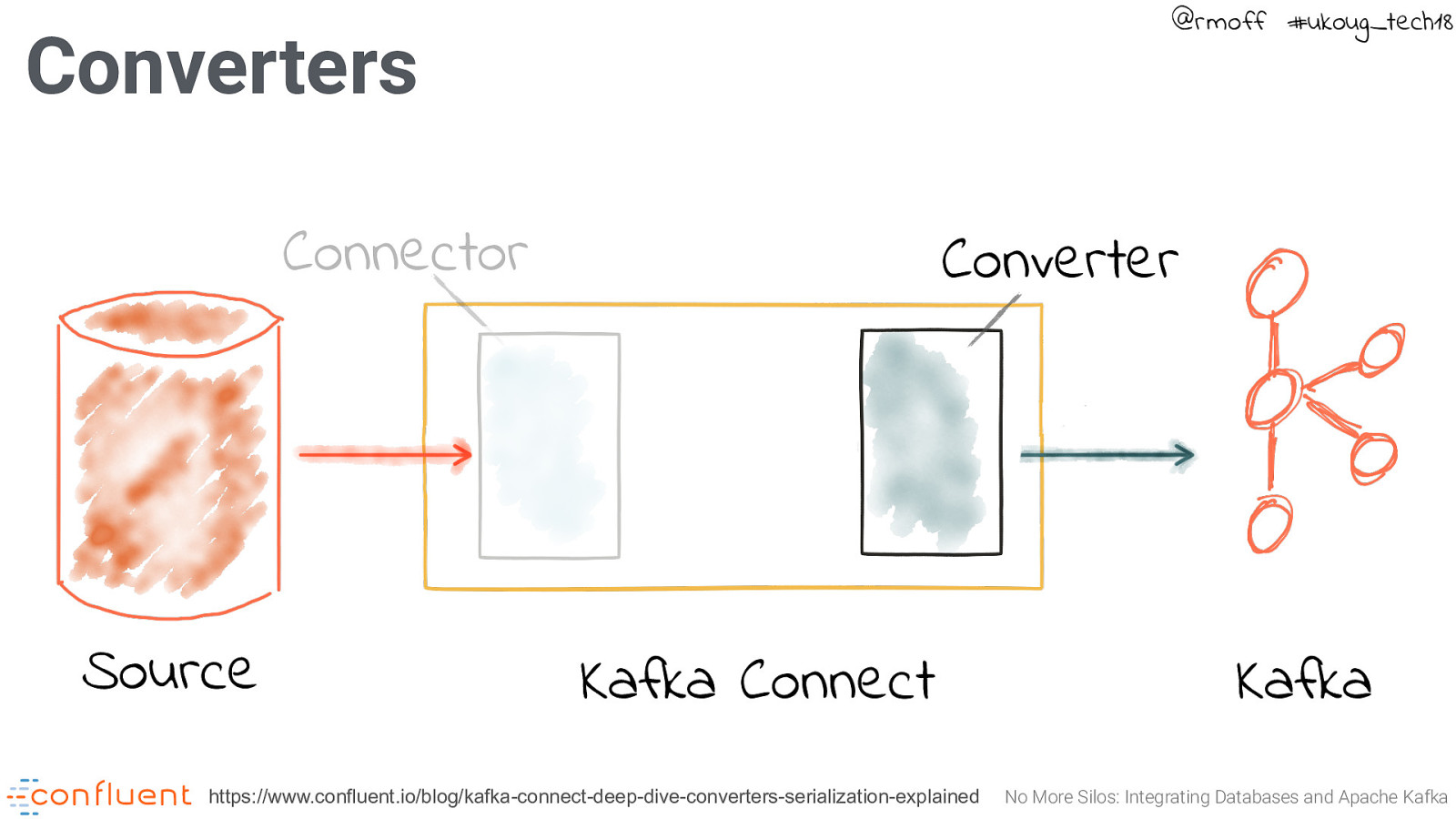

Converters

(Diagram labels: Source, Kafka Connect containing Connector and Converter, Kafka)

https://www.confluent.io/blog/kafka-connect-deep-dive-converters-serialization-explained

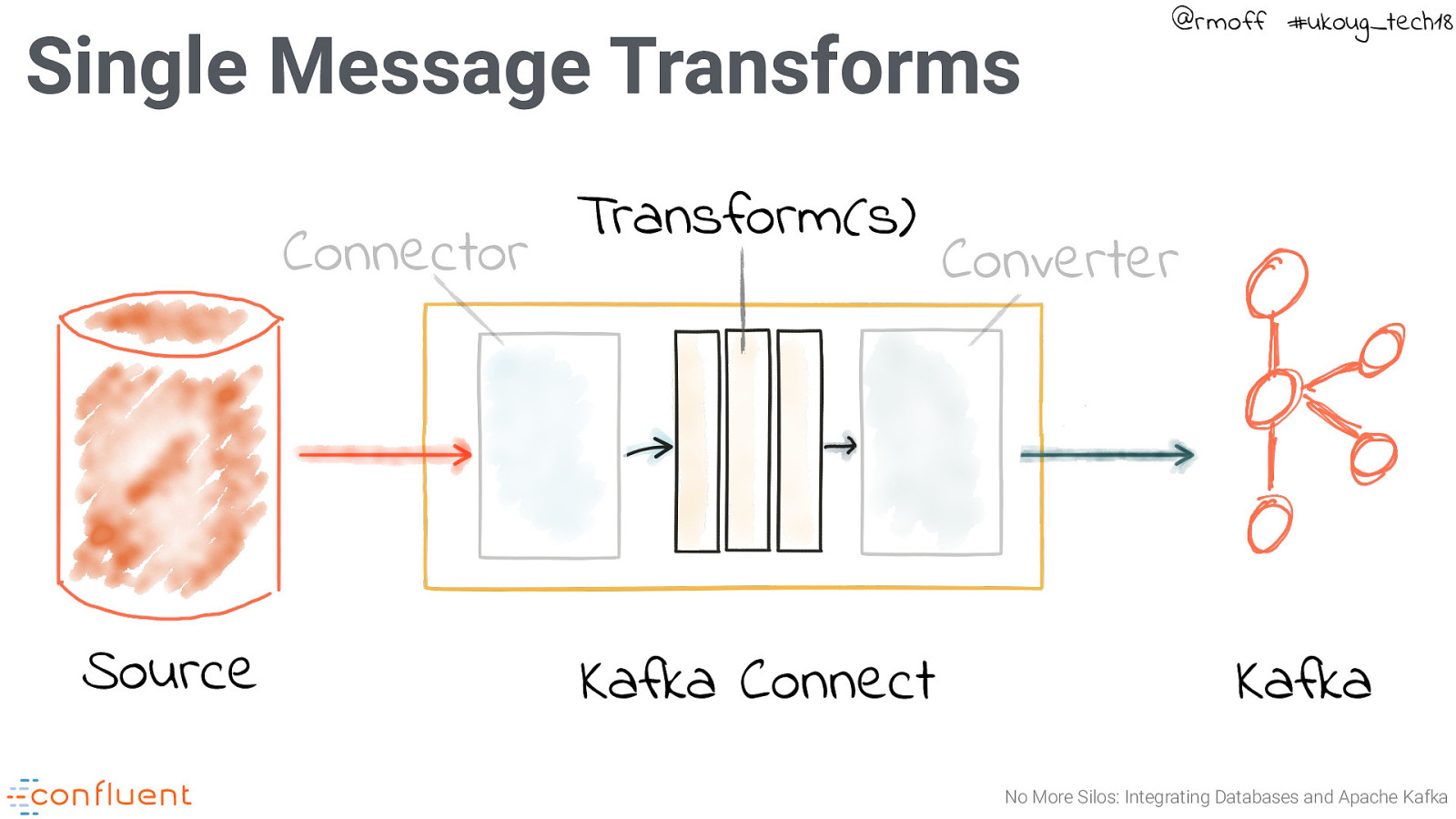

Single Message Transforms

(Diagram labels: Source, Kafka Connect containing Connector, Transform(s) and Converter, Kafka)
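The three stages named on these slides (connector, transforms, converter) can be pictured as a small pipeline. The sketch below is plain Python and is not the Kafka Connect API, which is a Java framework driven by configuration; every function name and the sample records are hypothetical. It only shows the order of responsibilities: the connector reads records from the source, each transform changes one record at a time, and the converter serialises the result into bytes for Kafka.

```python
import json


def source_connector():
    """Stand-in for a source connector: reads records from a source system."""
    yield {"id": 1, "name": "alice", "password": "secret"}
    yield {"id": 2, "name": "bob", "password": "hunter2"}


def drop_field(field):
    """Build a transform that removes one field from each record."""
    def transform(record):
        return {k: v for k, v in record.items() if k != field}
    return transform


def add_static_field(field, value):
    """Build a transform that adds a constant field to each record."""
    def transform(record):
        return {**record, field: value}
    return transform


def json_converter(record):
    """Stand-in for a converter: serialise a record to bytes."""
    return json.dumps(record, sort_keys=True).encode("utf-8")


def run_pipeline(source, transforms, converter):
    """Apply each transform in order to every record, then serialise it."""
    for record in source:
        for transform in transforms:
            record = transform(record)
        yield converter(record)


messages = list(
    run_pipeline(
        source_connector(),
        [drop_field("password"), add_static_field("source", "demo_db")],
        json_converter,
    )
)
print(messages)
```

Keeping serialisation in a separate final step means the same connector and transforms could be reused with a different wire format by swapping only the converter function.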

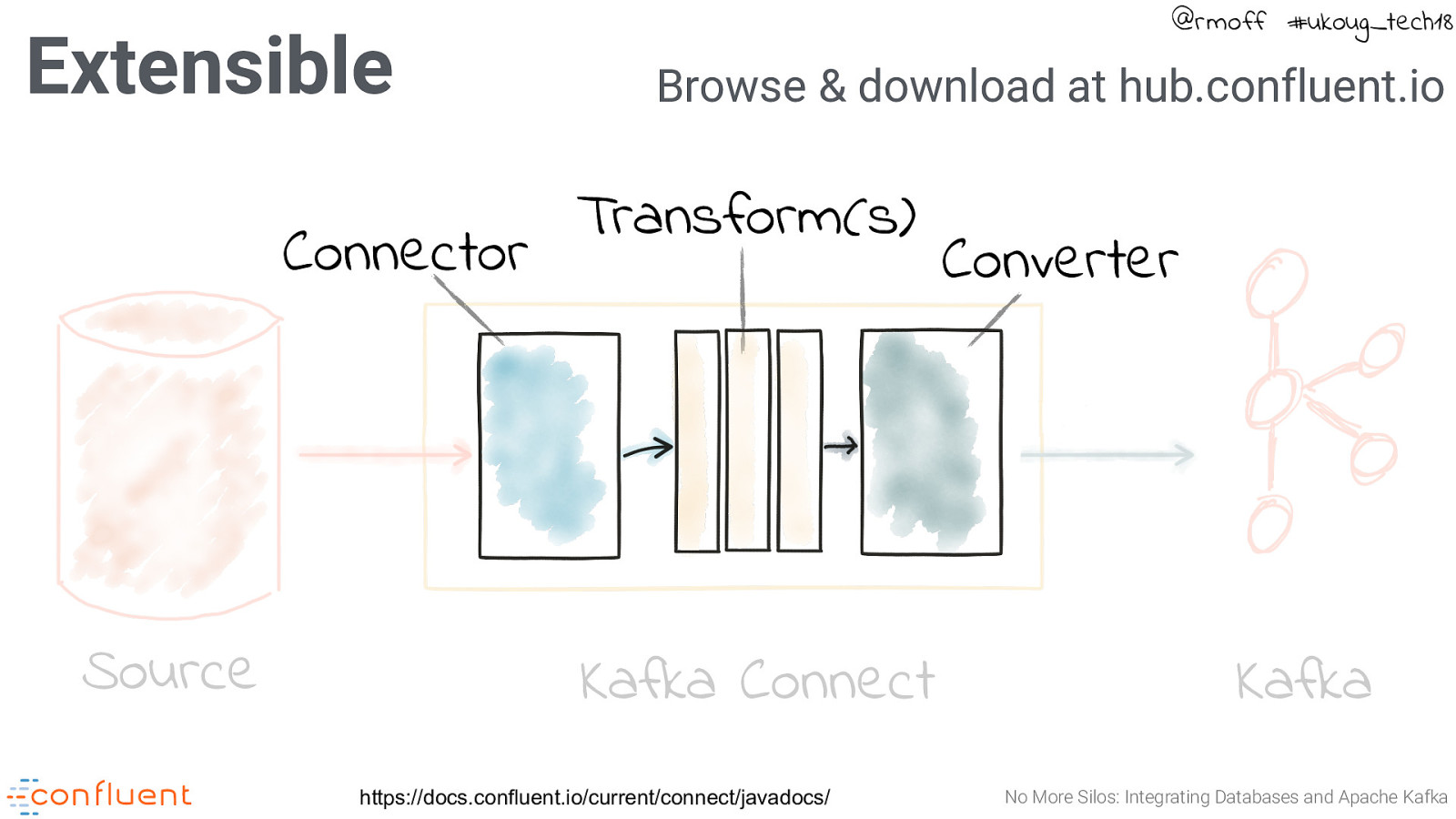

Extensible

(Diagram labels: Source, Kafka Connect containing Connector, Transform(s) and Converter, Kafka)

Browse & download at hub.confluent.io

https://docs.confluent.io/current/connect/javadocs/

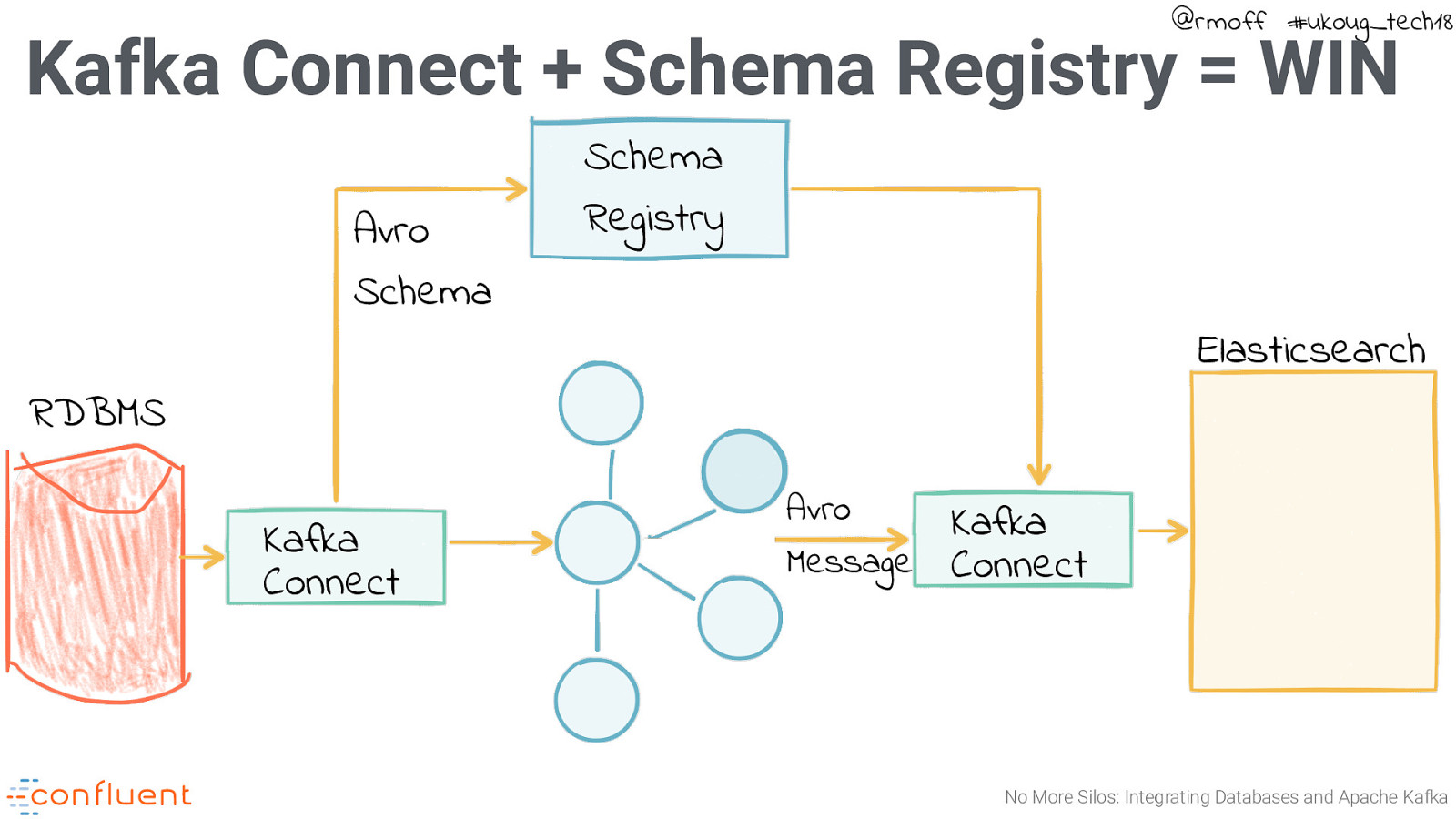

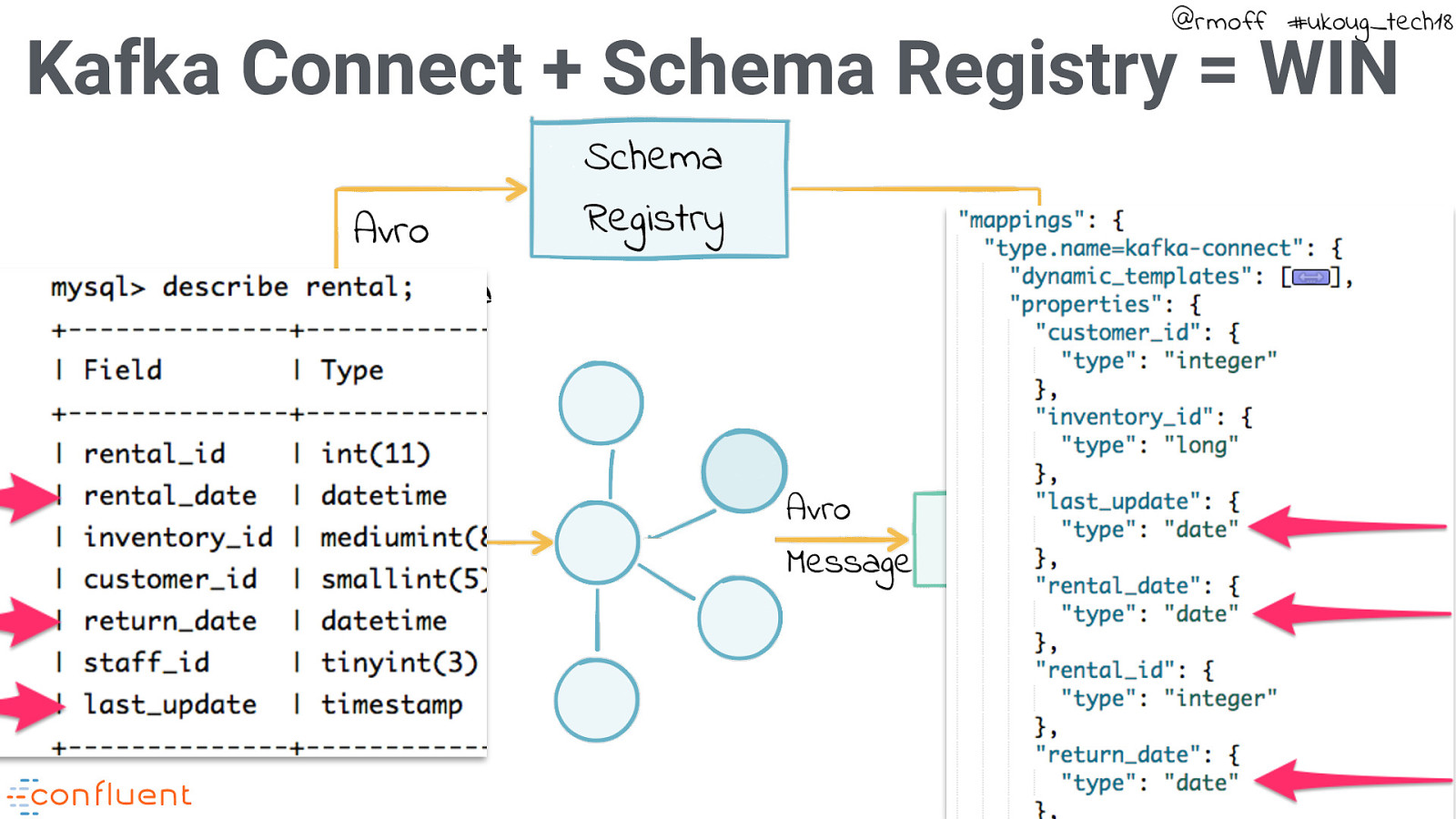

Kafka Connect + Schema Registry = WIN

(Diagram labels: RDBMS, Kafka Connect, Avro Message, Avro Schema, Schema Registry, Kafka Connect, Elasticsearch)

Change-Data-Capture (CDC)

- CDC is a generic term referring to capturing changing data, typically from an RDBMS.
- Two general approaches:
  - Query-based CDC
  - Log-based CDC

There are other options, including hacks with triggers, Flashback etc., but these are system- and/or technology-specific.

Query-based CDC

- Use a database query to try to identify new and changed rows: `SELECT * FROM my_table WHERE col > <value of col last time we polled>`
- Implemented with the open source Kafka Connect JDBC connector
- Can import based on table names, schema, or bespoke SQL query
- Incremental ingest driven through incrementing ID column and/or timestamp column
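The polling query on this slide can be tried out with Python's built-in `sqlite3` module standing in for the source database. This is a minimal sketch of the incrementing-ID approach only; it is not how the Kafka Connect JDBC connector is implemented, and the table layout and the `poll` helper are assumptions made for the example.

```python
import sqlite3

# In-memory database standing in for the source RDBMS.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE my_table (id INTEGER PRIMARY KEY, name TEXT)")


def poll(conn, last_id):
    """Return rows with id greater than last_id, plus the new high-water mark."""
    rows = conn.execute(
        "SELECT id, name FROM my_table WHERE id > ? ORDER BY id", (last_id,)
    ).fetchall()
    if rows:
        last_id = rows[-1][0]  # remember the highest id seen so far
    return rows, last_id


# Two rows arrive before the first poll.
conn.executemany("INSERT INTO my_table (name) VALUES (?)", [("a",), ("b",)])
first, offset = poll(conn, 0)

# One more row arrives before the second poll.
conn.execute("INSERT INTO my_table (name) VALUES (?)", ("c",))
second, offset = poll(conn, offset)

# Nothing new: the third poll returns no rows.
third, offset = poll(conn, offset)

print(first, second, third, offset)
```

The stored offset is what makes the ingest incremental: each poll asks only for rows beyond the last value it saw.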

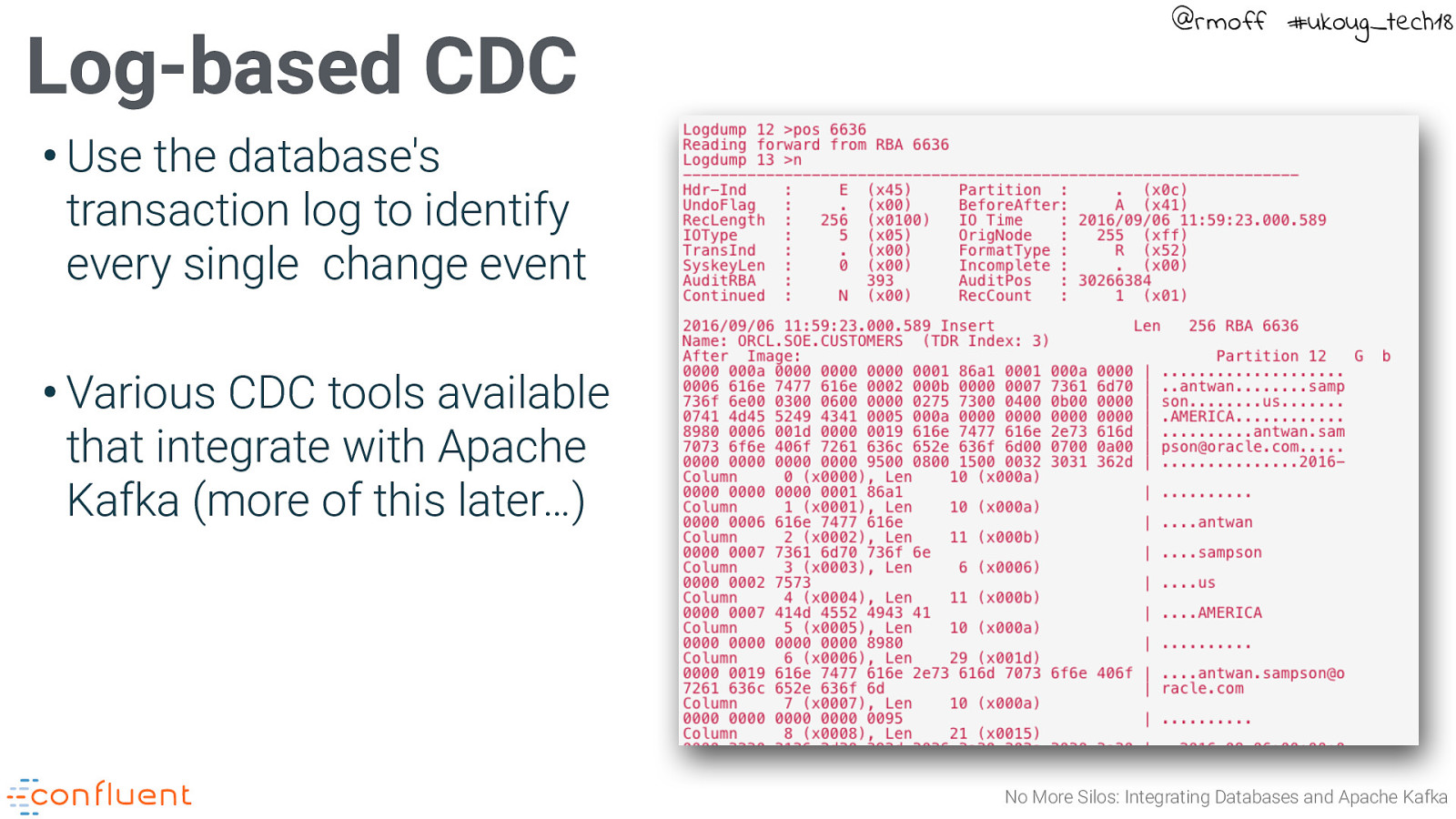

Log-based CDC

- Use the database’s transaction log to identify every single change event
- Various CDC tools available that integrate with Apache Kafka (more of this later…)

Demo Time!

“Which one should I use?”

Photo by Tyler Nix on Unsplash

It Depends!

Photo by Trevor Cole on Unsplash

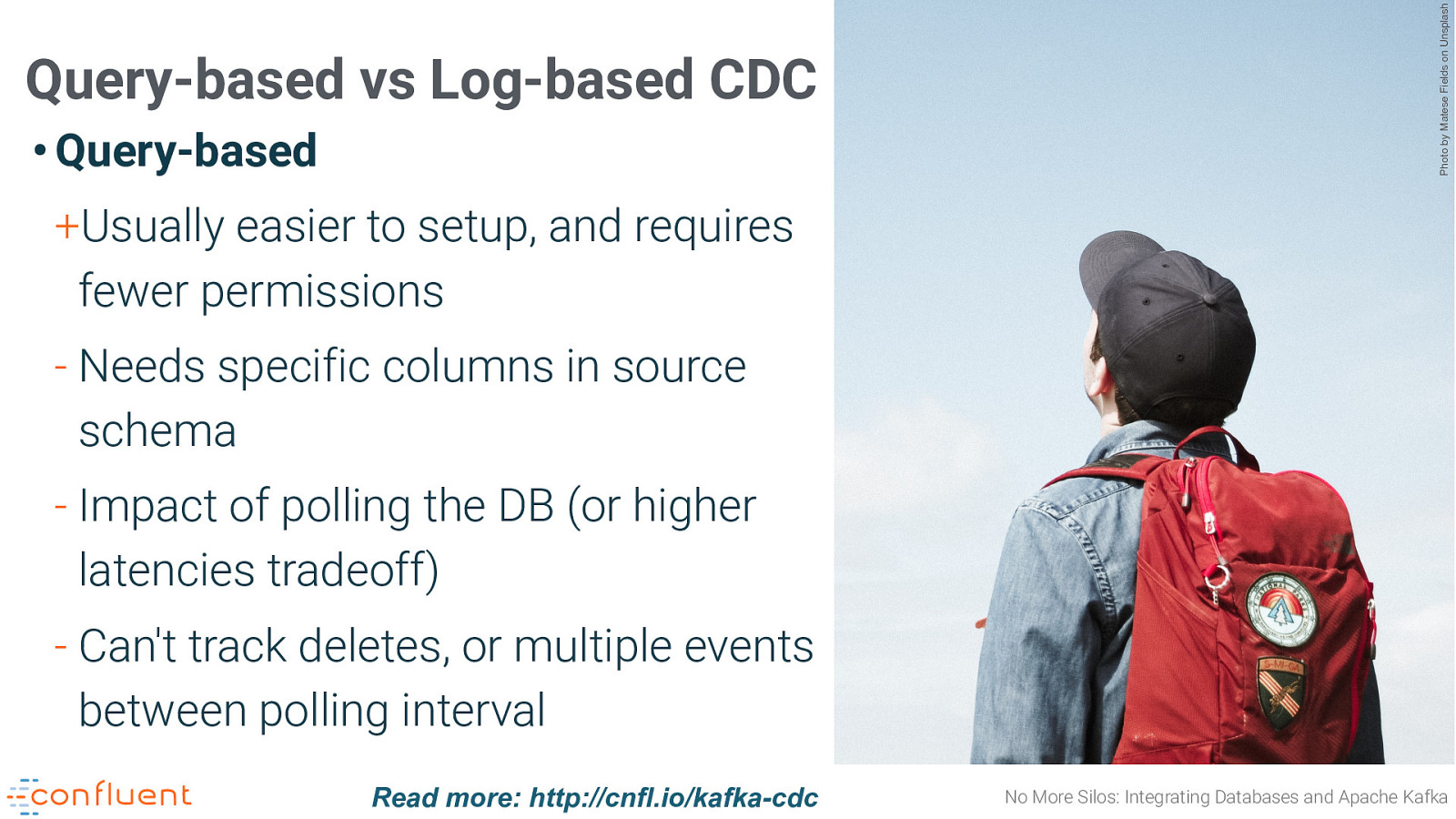

Query-based vs Log-based CDC

Query-based:

- (+) Usually easier to set up, and requires fewer permissions
- (-) Needs specific columns in source schema
- (-) Impact of polling the DB (or higher latencies tradeoff)
- (-) Can’t track deletes, or multiple events within one polling interval

Read more: http://cnfl.io/kafka-cdc

Photo by Matese Fields on Unsplash
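The last drawback on this slide is easy to reproduce. The sketch below uses Python's built-in `sqlite3` module and a hand-maintained integer `updated_at` column as the timestamp; the table, the values, and the `poll` helper are all invented for illustration. Between the two polls, one row is updated twice and another is deleted, yet the second poll returns a single row.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER, updated_at INTEGER)"
)


def poll(conn, last_ts):
    """Return rows changed since last_ts and the new high-water mark."""
    rows = conn.execute(
        "SELECT id, balance, updated_at FROM accounts "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_ts,),
    ).fetchall()
    new_ts = max([last_ts] + [row[2] for row in rows])
    return rows, new_ts


# Two accounts exist at the first poll.
conn.execute("INSERT INTO accounts VALUES (1, 50, 1)")
conn.execute("INSERT INTO accounts VALUES (2, 10, 2)")
first, last_ts = poll(conn, 0)

# Before the next poll: account 1 changes twice and account 2 is deleted.
conn.execute("UPDATE accounts SET balance = 75, updated_at = 3 WHERE id = 1")
conn.execute("UPDATE accounts SET balance = 15, updated_at = 4 WHERE id = 1")
conn.execute("DELETE FROM accounts WHERE id = 2")
second, last_ts = poll(conn, last_ts)

print(first)
print(second)
```

The intermediate balance of 75 and the delete of account 2 never appear in any poll result, because a query can only see the rows that exist at the moment it runs.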

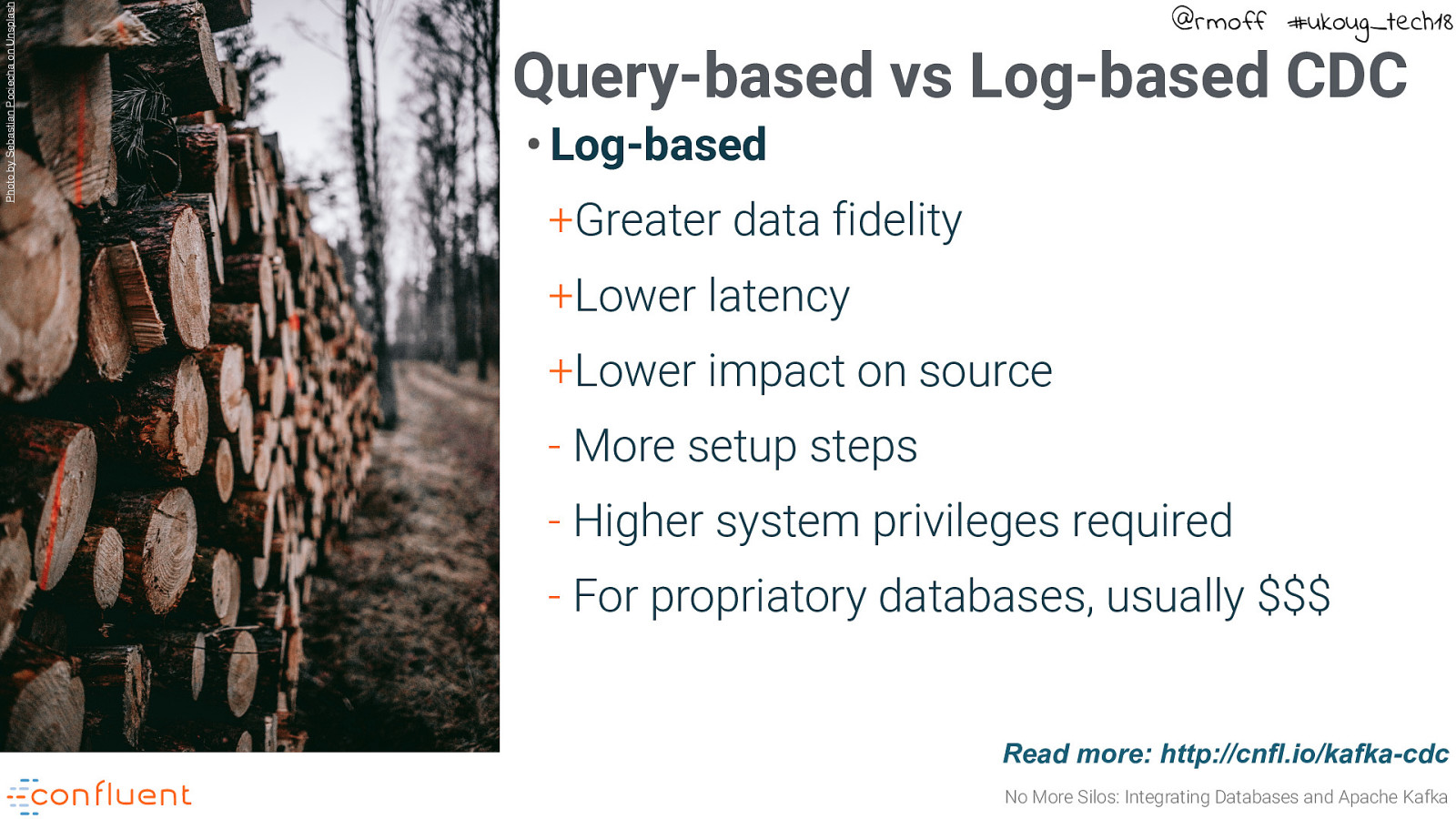

Query-based vs Log-based CDC

Log-based:

- (+) Greater data fidelity
- (+) Lower latency
- (+) Lower impact on source
- (-) More setup steps
- (-) Higher system privileges required
- (-) For proprietary databases, usually $$$

Read more: http://cnfl.io/kafka-cdc

Photo by Sebastian Pociecha on Unsplash

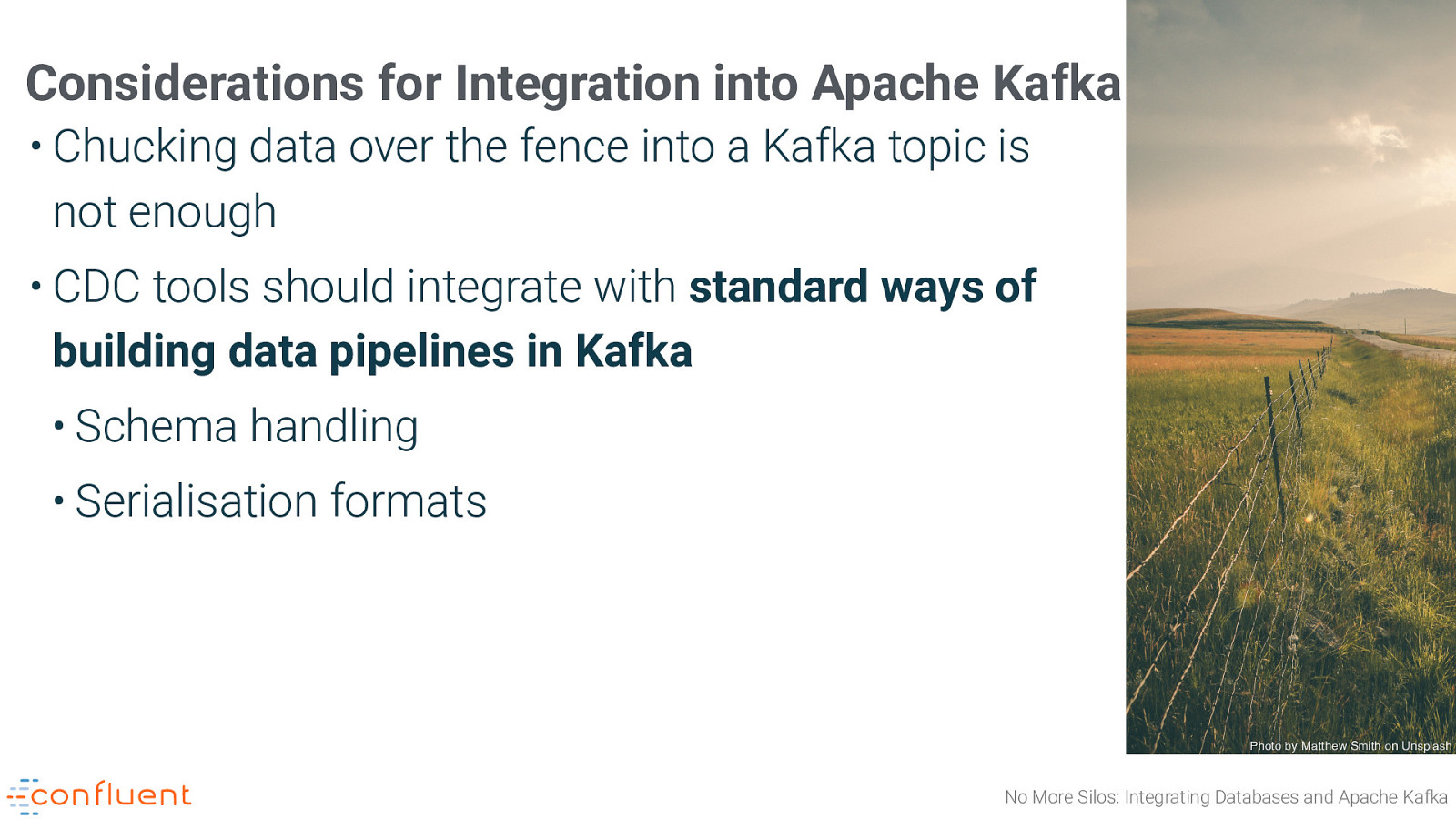

Considerations for Integration into Apache Kafka

- Chucking data over the fence into a Kafka topic is not enough
- CDC tools should integrate with standard ways of building data pipelines in Kafka
  - Schema handling
  - Serialisation formats

Photo by Matthew Smith on Unsplash

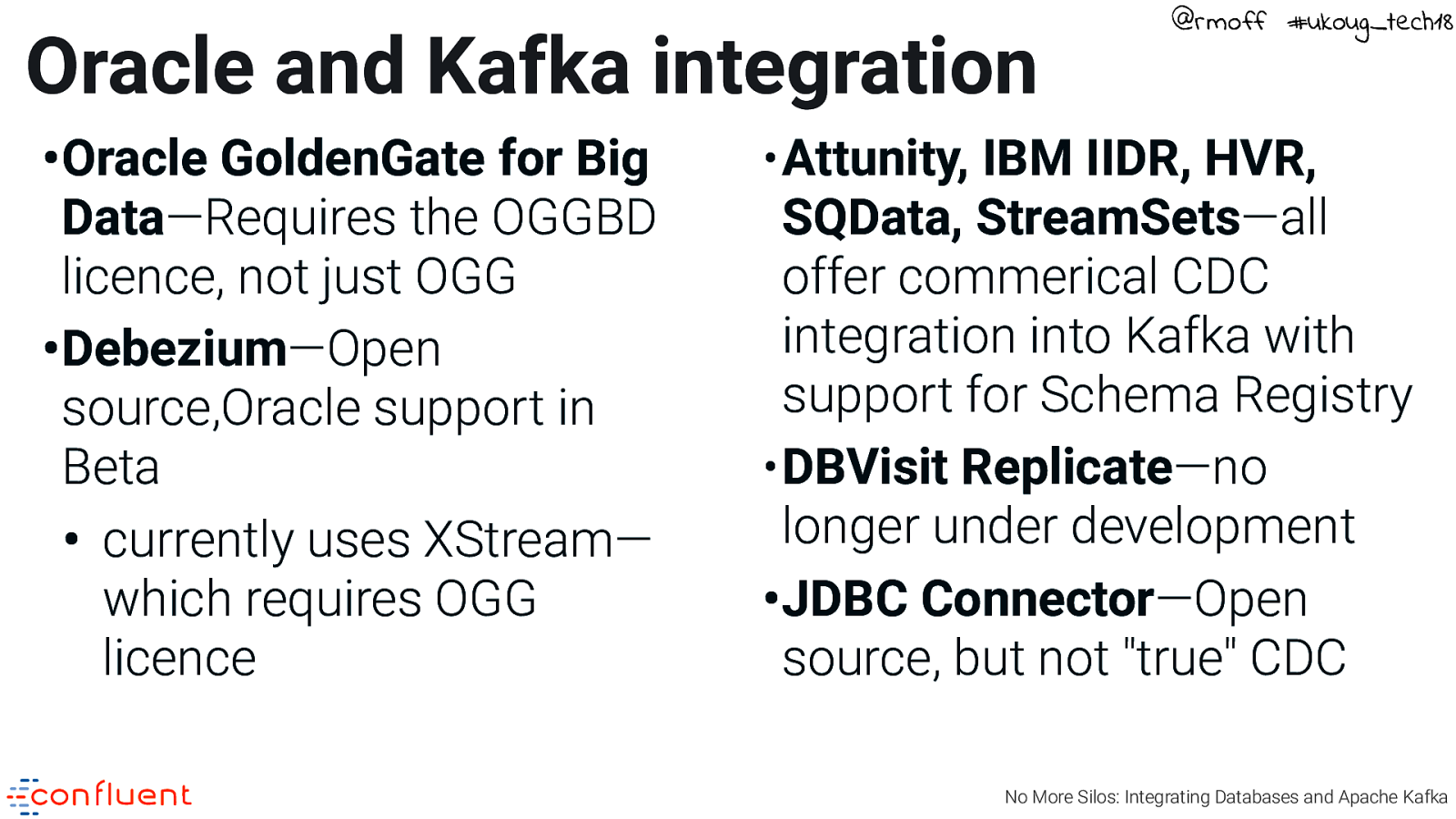

Oracle and Kafka integration

- Oracle GoldenGate for Big Data: requires the OGGBD licence, not just OGG
- Debezium: open source, Oracle support in beta
  - Currently uses XStream, which requires an OGG licence
- Attunity, IBM IIDR, HVR, SQData, StreamSets: all offer commercial CDC integration into Kafka with support for Schema Registry
- DBVisit Replicate: no longer under development
- JDBC Connector: open source, but not “true” CDC

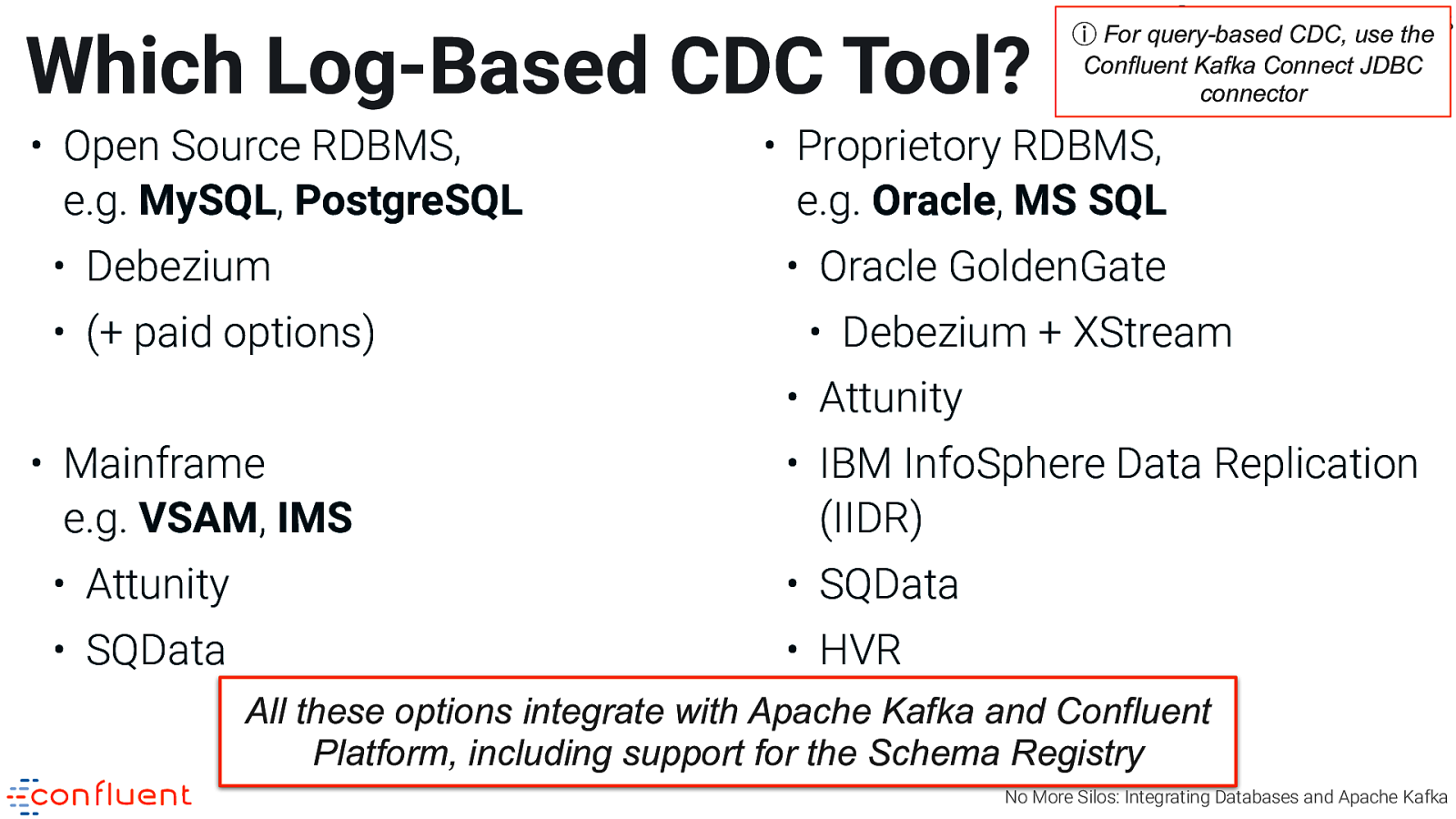

Which Log-Based CDC Tool?

- Open Source RDBMS, e.g. MySQL, PostgreSQL
  - Debezium
  - (+ paid options)
- Proprietary RDBMS, e.g. Oracle, MS SQL
  - Oracle GoldenGate
  - Debezium + XStream
  - Attunity
  - SQData
  - HVR
- Mainframe, e.g. VSAM, IMS
  - IBM InfoSphere Data Replication (IIDR)
  - Attunity
  - SQData

ⓘ For query-based CDC, use the Confluent Kafka Connect JDBC connector

All these options integrate with Apache Kafka and Confluent Platform, including support for the Schema Registry

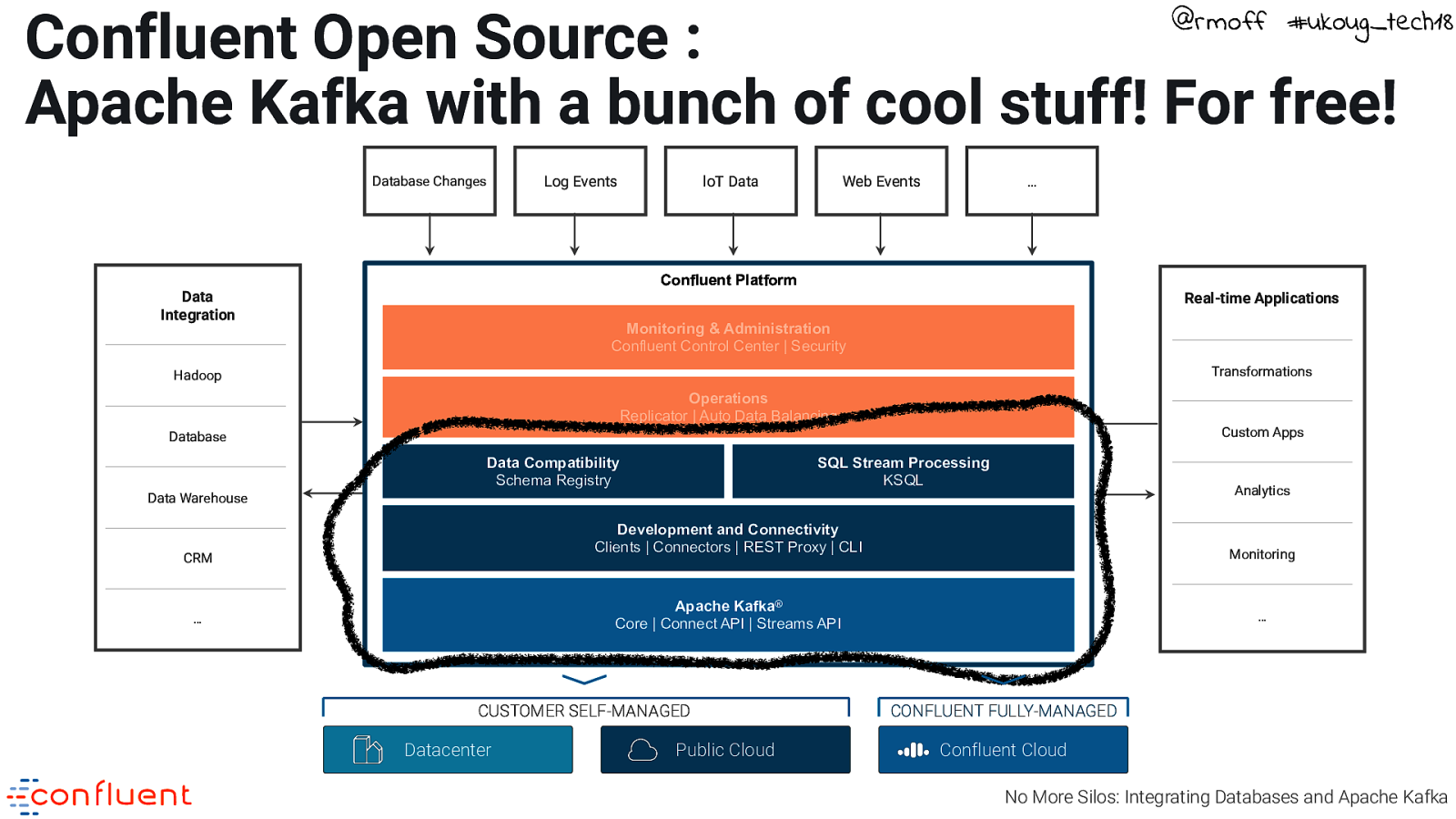

Confluent Open Source: Apache Kafka with a bunch of cool stuff! For free!

(Diagram labels, Confluent Platform:

- Surrounding systems: Log Events, Database Changes, IoT Data, Web Events, Data Integration, Real-time Applications, Transformations, Hadoop, Custom Apps, Database, Analytics, Data Warehouse, CRM, Monitoring
- Monitoring & Administration: Confluent Control Center, Security
- Operations: Replicator, Auto Data Balancing
- Data Compatibility: Schema Registry
- SQL Stream Processing: KSQL
- Development and Connectivity: Clients, Connectors, REST Proxy, CLI
- Apache Kafka®: Core, Connect API, Streams API
- Customer self-managed: Datacenter, Public Cloud
- Confluent fully-managed: Confluent Cloud)

Free Books!

https://www.confluent.io/apache-kafka-stream-processing-book-bundle

- https://www.confluent.io/download/
- http://cnfl.io/kafka-cdc
- http://cnfl.io/slack
- @rmoff

#EOF

Companies new and old are all recognising the importance of a low-latency, scalable, fault-tolerant data backbone, in the form of the Apache Kafka® streaming platform. With Kafka, developers can integrate multiple sources and systems, which enables low-latency analytics, event-driven architectures and the population of multiple downstream systems.

In this talk we’ll look at one of the most common integration requirements - connecting databases to Kafka. We’ll consider the concept that all data is a stream of events, including that residing within a database. We’ll look at why we’d want to stream data from a database, including driving applications in Kafka from events upstream. We’ll discuss the different methods for connecting databases to Kafka, and the pros and cons of each. Techniques including Change-Data-Capture (CDC) and Kafka Connect will be covered, as well as an exploration of the power of KSQL for performing transformations such as joins on the inbound data.

Attendees of this talk will learn:

- That all data is event streams; databases are just a materialised view of a stream of events.
- The best ways to integrate databases with Kafka.
- Anti-patterns of which to be aware.
- The power of KSQL for transforming streams of data in Kafka.