Apache Kafka and ksqlDB in Action: Let’s Build a Streaming Data Pipeline! @rmoff #ljcjug

Slide 1

Slide 2

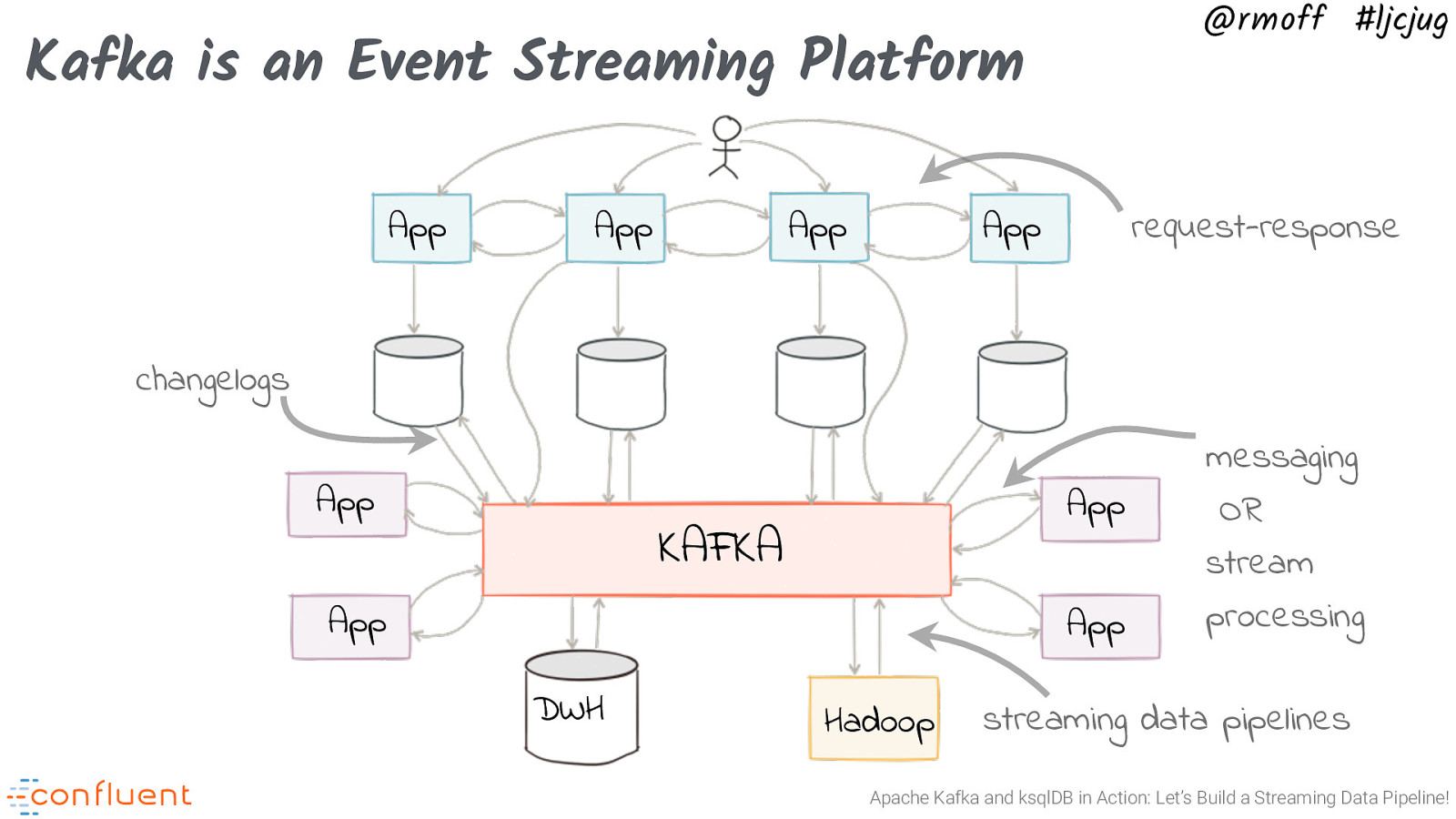

Kafka is an Event Streaming Platform

Diagram labels: App; KAFKA; DWH; Hadoop; request-response; changelogs; messaging OR stream processing; streaming data pipelines.

Slide 3

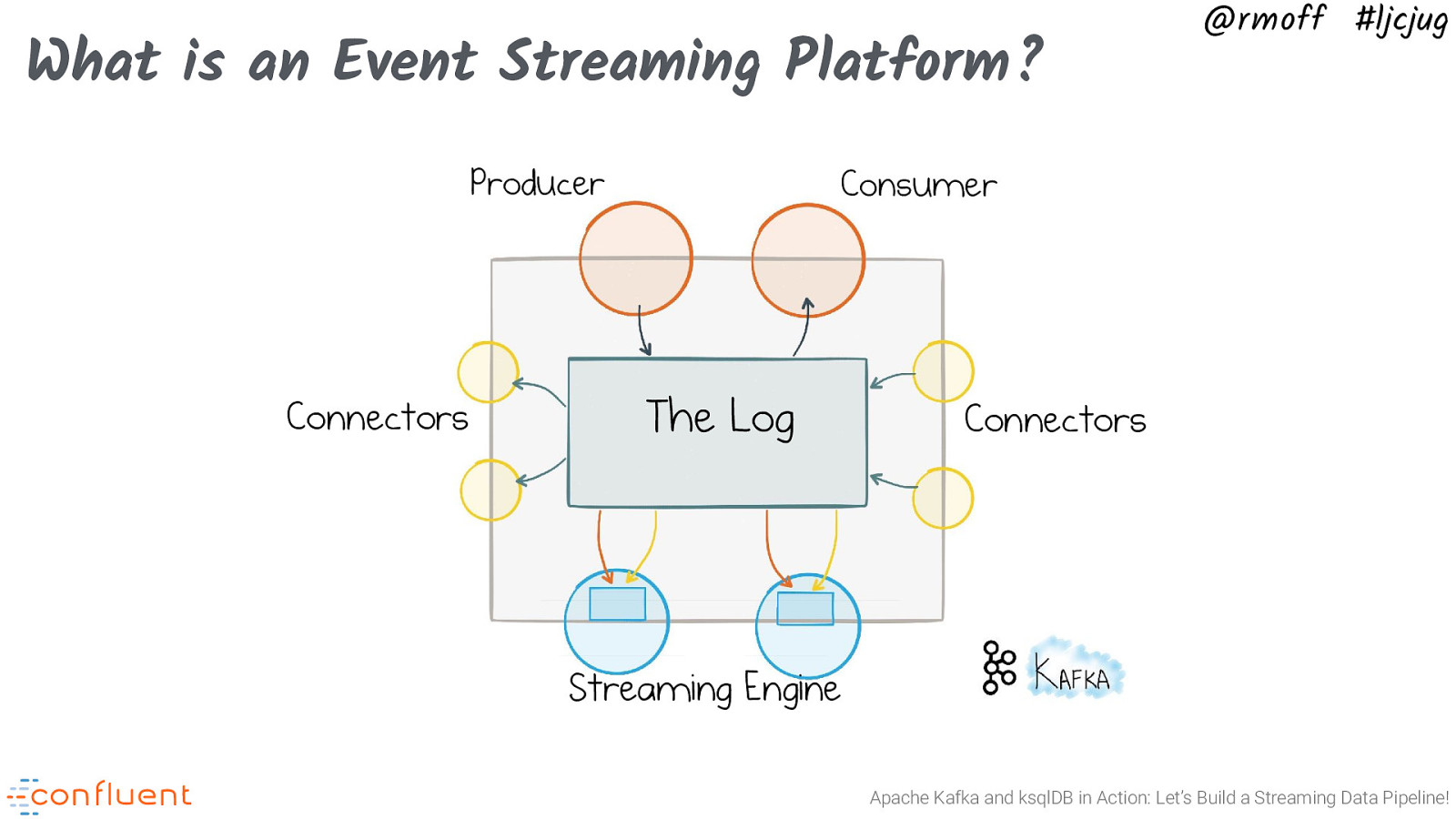

What is an Event Streaming Platform?

Diagram labels: Producer; Connectors; The Log; Streaming Engine; Connectors; Consumer.

Slide 4

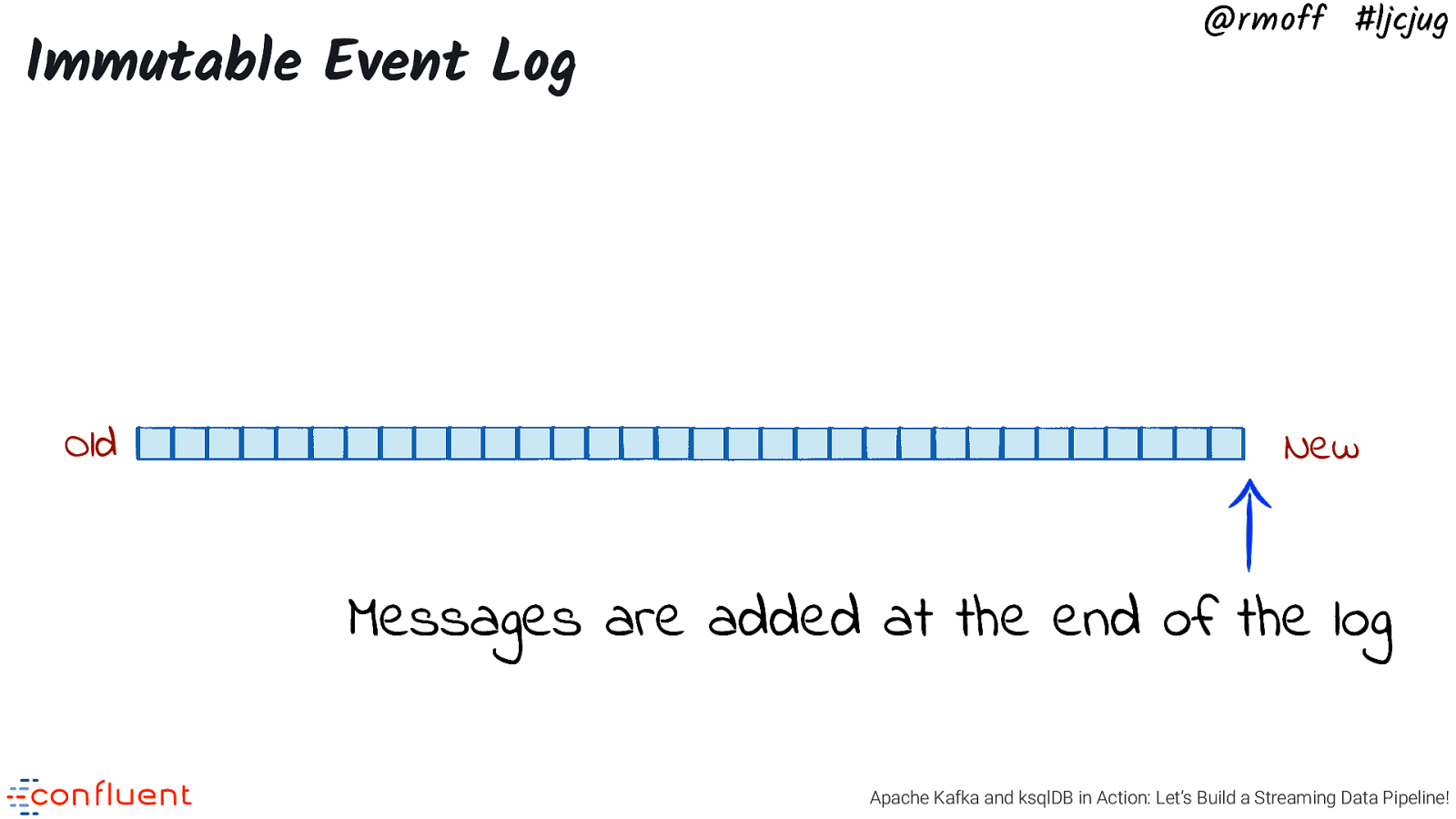

Immutable Event Log

Old → New. Messages are added at the end of the log.
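As a rough illustration of the append-only idea on this slide, here is a minimal in-memory sketch. The `EventLog` class and its method names are hypothetical and are not part of any Kafka client; the sketch only shows that records are added at the end and are addressed by their offset.

```python
class EventLog:
    """Hypothetical append-only log: records are only ever added at the end."""

    def __init__(self):
        self._records = []

    def append(self, record):
        # New records always go at the end; the returned offset is their position.
        self._records.append(record)
        return len(self._records) - 1

    def read(self, offset):
        # Reading does not remove or modify the record.
        return self._records[offset]

    def __len__(self):
        return len(self._records)


log = EventLog()
first_offset = log.append("order created")
second_offset = log.append("order shipped")
```

Reading offset 0 again after the second append still returns the first record, which is the property the slide calls immutability.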

Slide 5

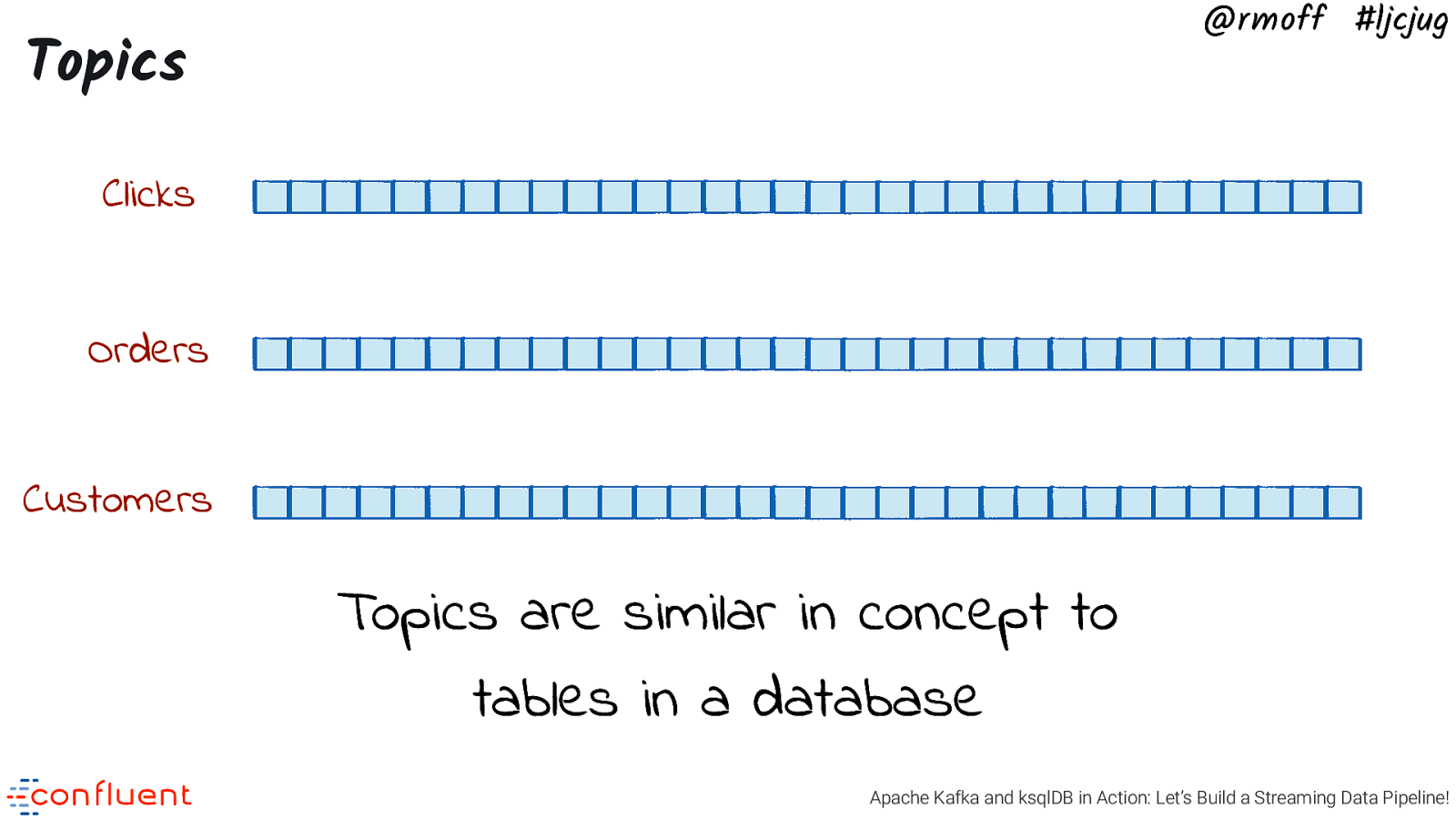

Topics

Clicks, Orders, Customers

Topics are similar in concept to tables in a database.

Slide 6

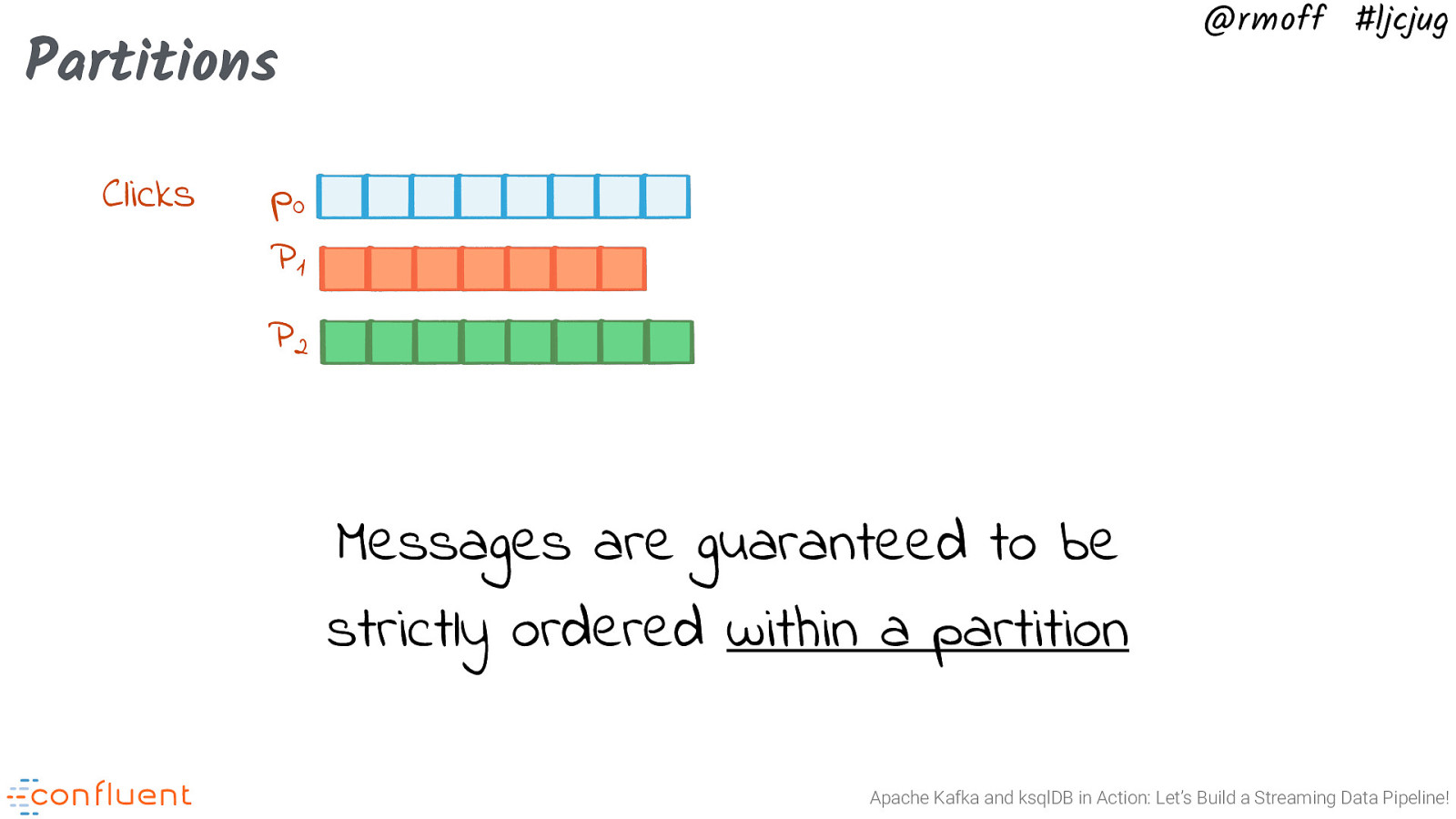

Partitions

Clicks: P0, P1, P2

Messages are guaranteed to be strictly ordered within a partition.

Slide 7

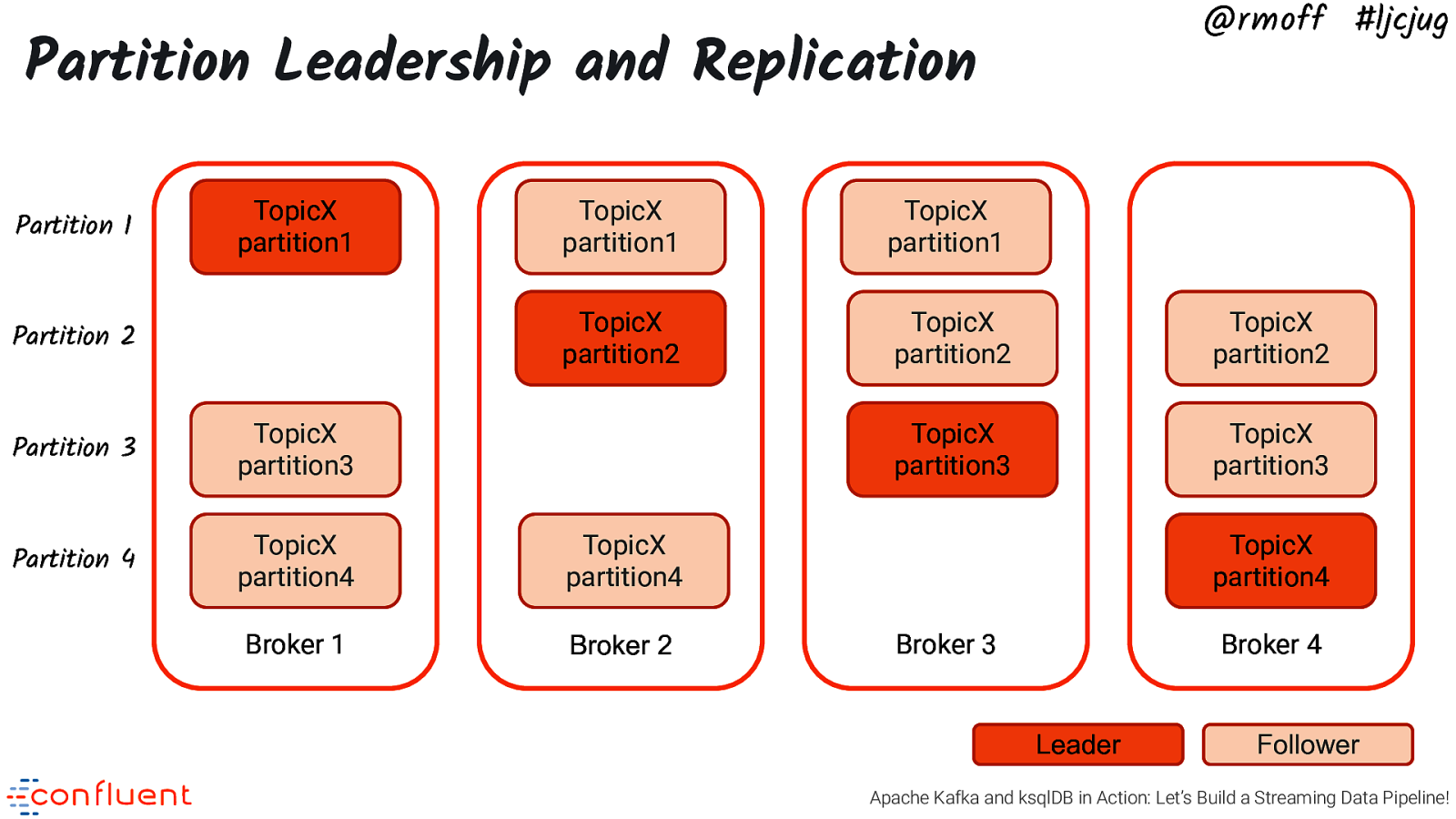

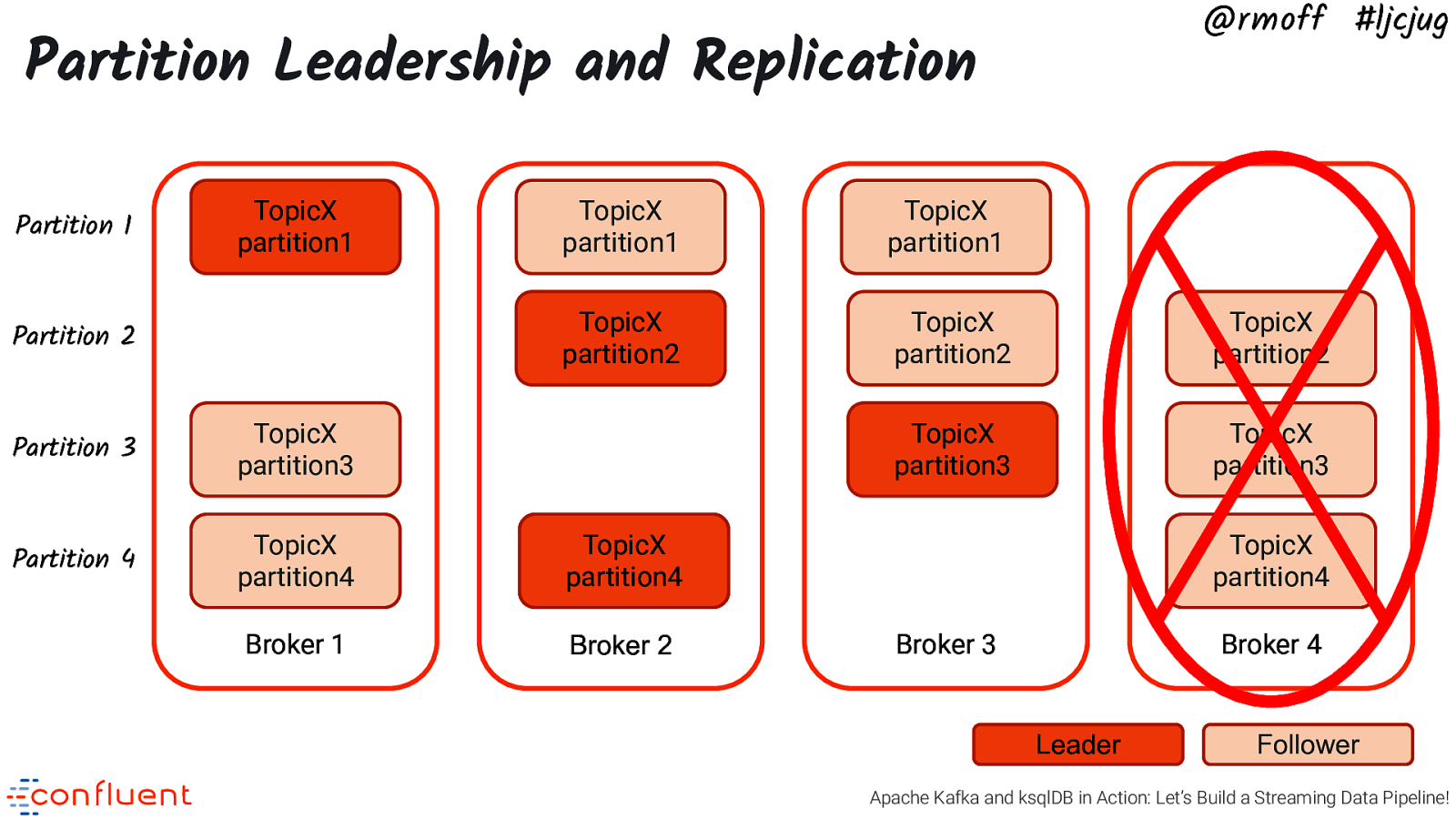

Partition Leadership and Replication

Diagram: TopicX has four partitions (partition1 to partition4) spread across Broker 1 to Broker 4. Each partition appears on three brokers, and each copy is marked as either Leader or Follower.

Slide 8

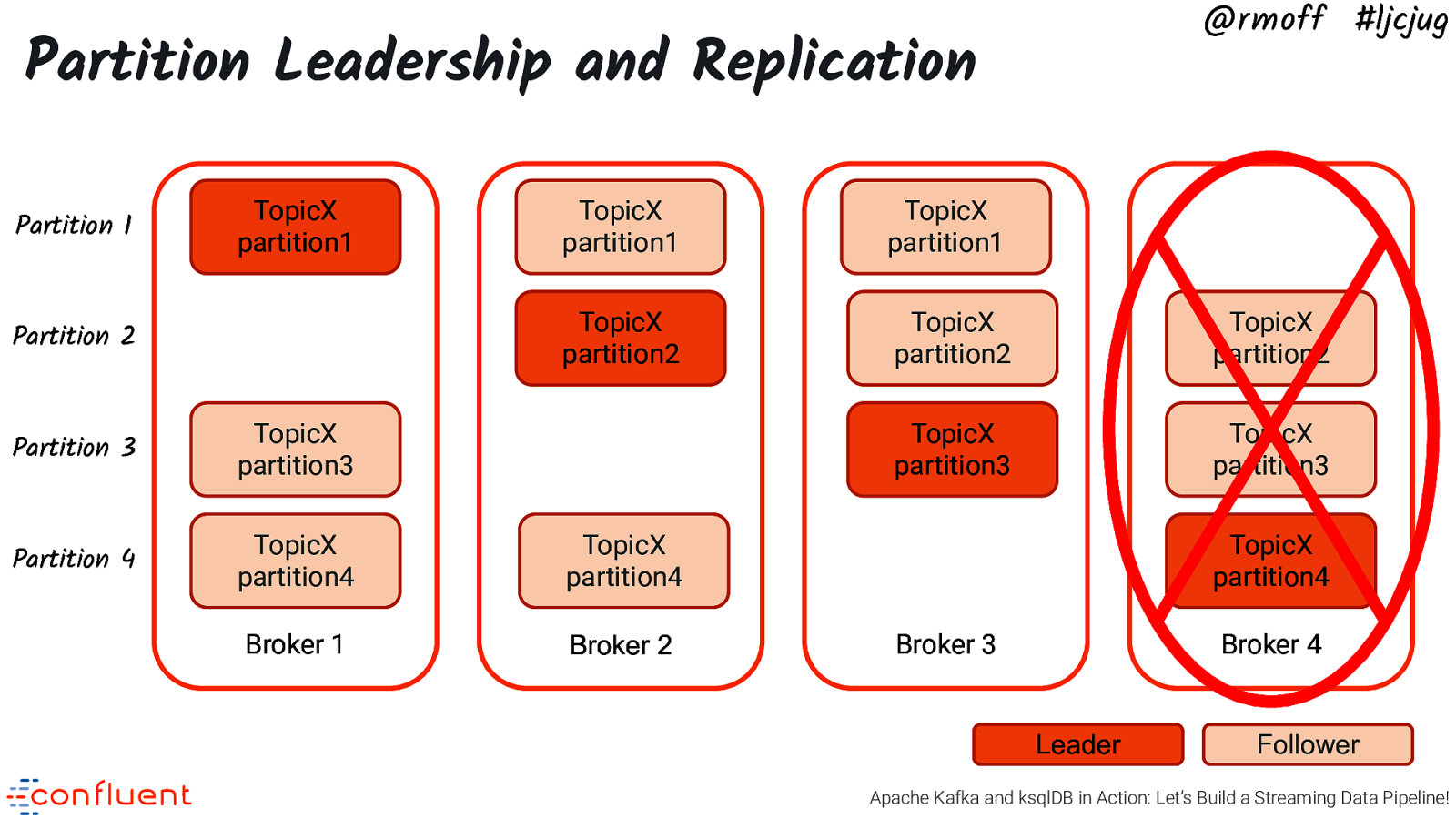

Partition Leadership and Replication

Slide 9

Partition Leadership and Replication

Slide 10

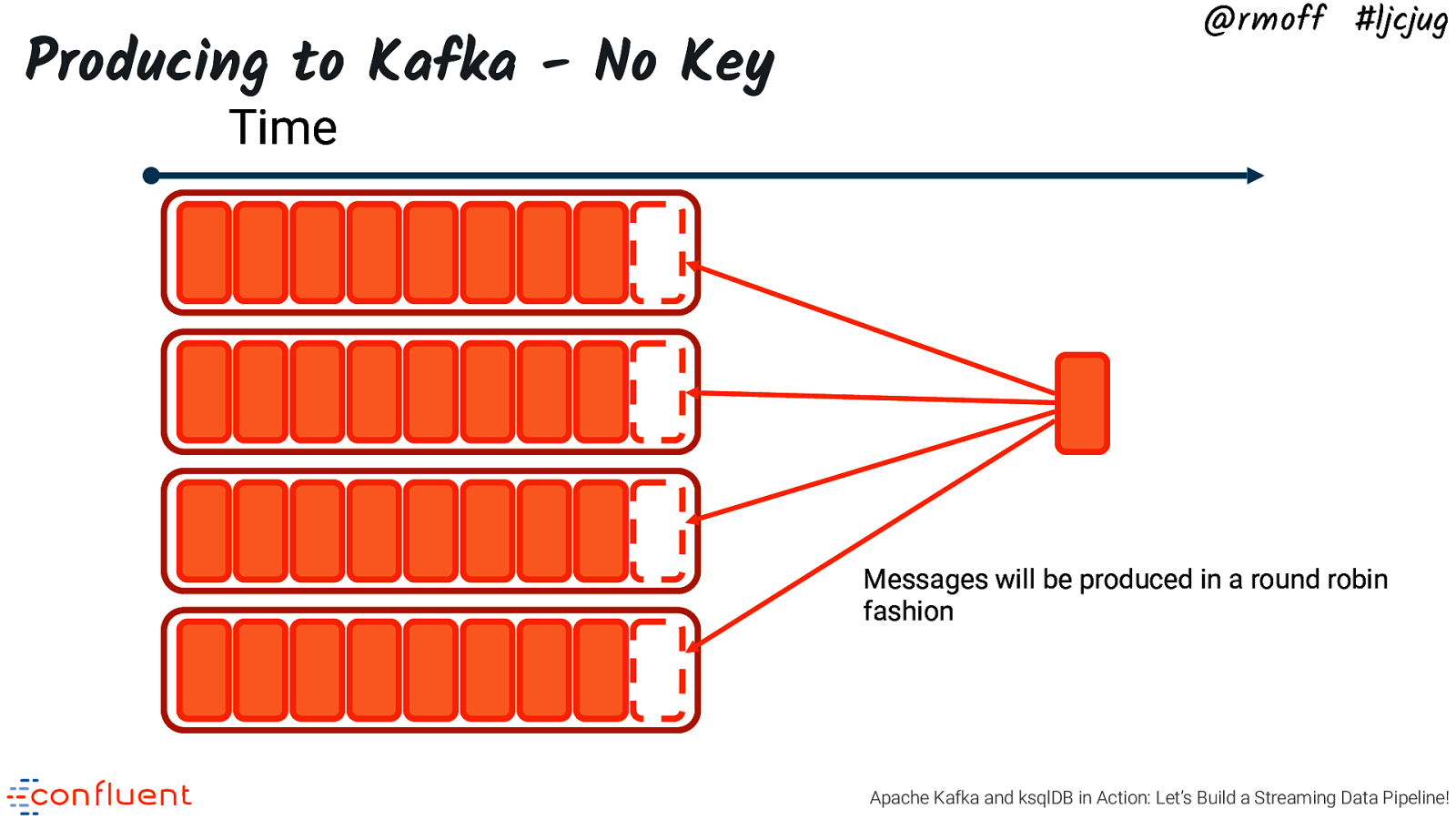

Producing to Kafka - No Key

Messages will be produced in a round-robin fashion. (Diagram axis: Time.)
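The round-robin behaviour described on this slide can be sketched as below. This is a conceptual illustration only, with a hypothetical `round_robin_assign` helper; it is not the producer's actual partitioner, and more recent Kafka clients batch keyless records per partition (sticky partitioning) rather than strictly alternating.

```python
from itertools import cycle


def round_robin_assign(messages, num_partitions):
    """Spread keyless messages over partitions, one after another."""
    partitions = {p: [] for p in range(num_partitions)}
    next_partition = cycle(range(num_partitions))
    for message in messages:
        partitions[next(next_partition)].append(message)
    return partitions


assigned = round_robin_assign(["m0", "m1", "m2", "m3", "m4"], 3)
```

With five messages and three partitions, the first two partitions receive two messages each and the third receives one.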

Slide 11

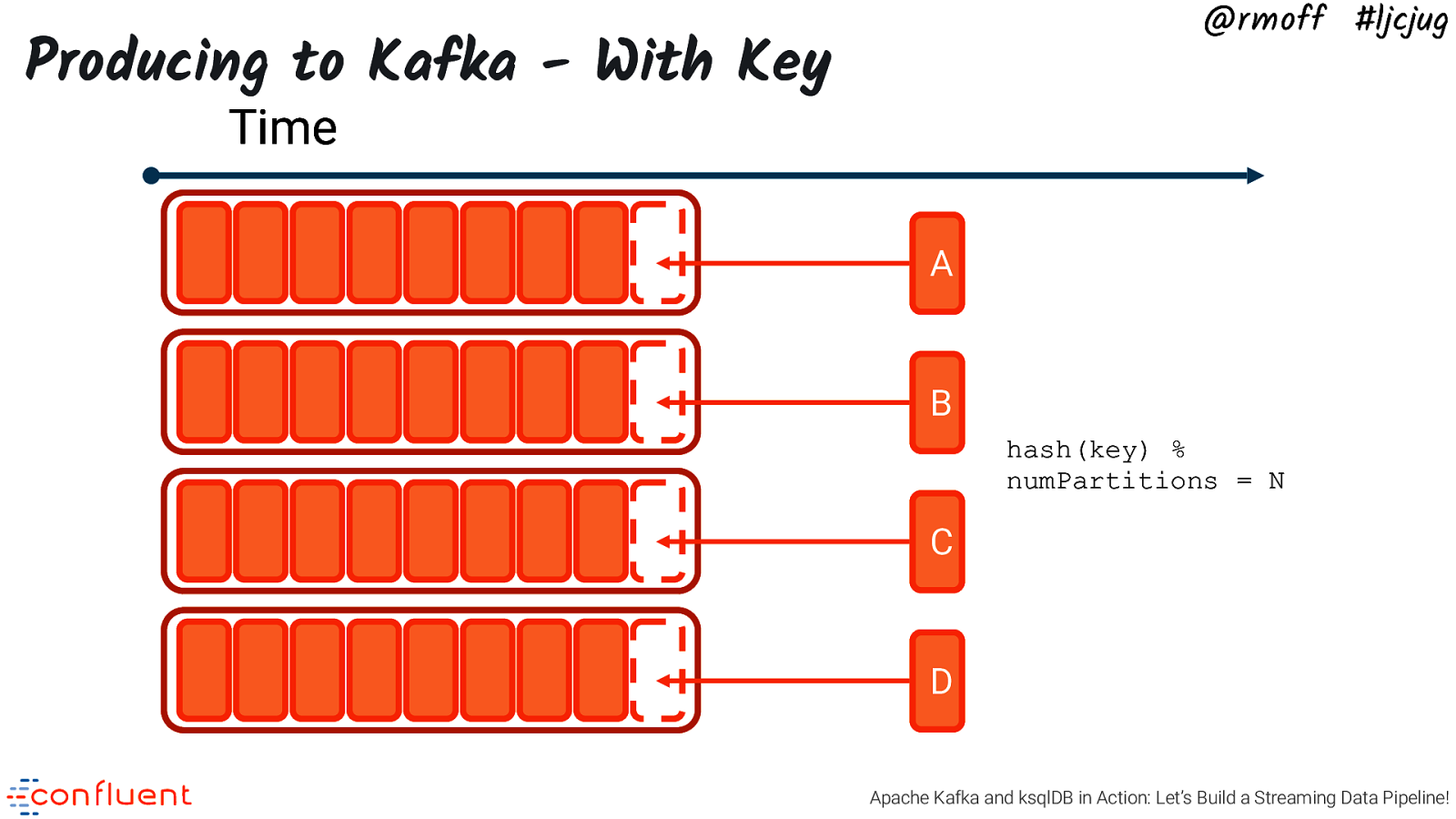

Producing to Kafka - With Key

hash(key) % numPartitions = N

Diagram: keys A, B, C, D over Time.
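The formula on this slide can be sketched as follows. Kafka's default partitioner hashes the key bytes with murmur2; the sketch below swaps in `zlib.crc32` purely as a stand-in so that it runs with the standard library, so the partition numbers it yields will not match a real cluster. The function name `partition_for` is hypothetical.

```python
import zlib


def partition_for(key: bytes, num_partitions: int) -> int:
    """Map a key to a partition: hash(key) % numPartitions.

    crc32 is an assumption standing in for Kafka's murmur2 hash.
    """
    return zlib.crc32(key) % num_partitions


# The same key always lands on the same partition.
placements = {k: partition_for(k.encode("utf-8"), 4) for k in ["A", "B", "C", "D"]}
```

Because the mapping depends only on the key and the partition count, all messages with the same key stay in one partition and therefore keep their relative order.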

Slide 12

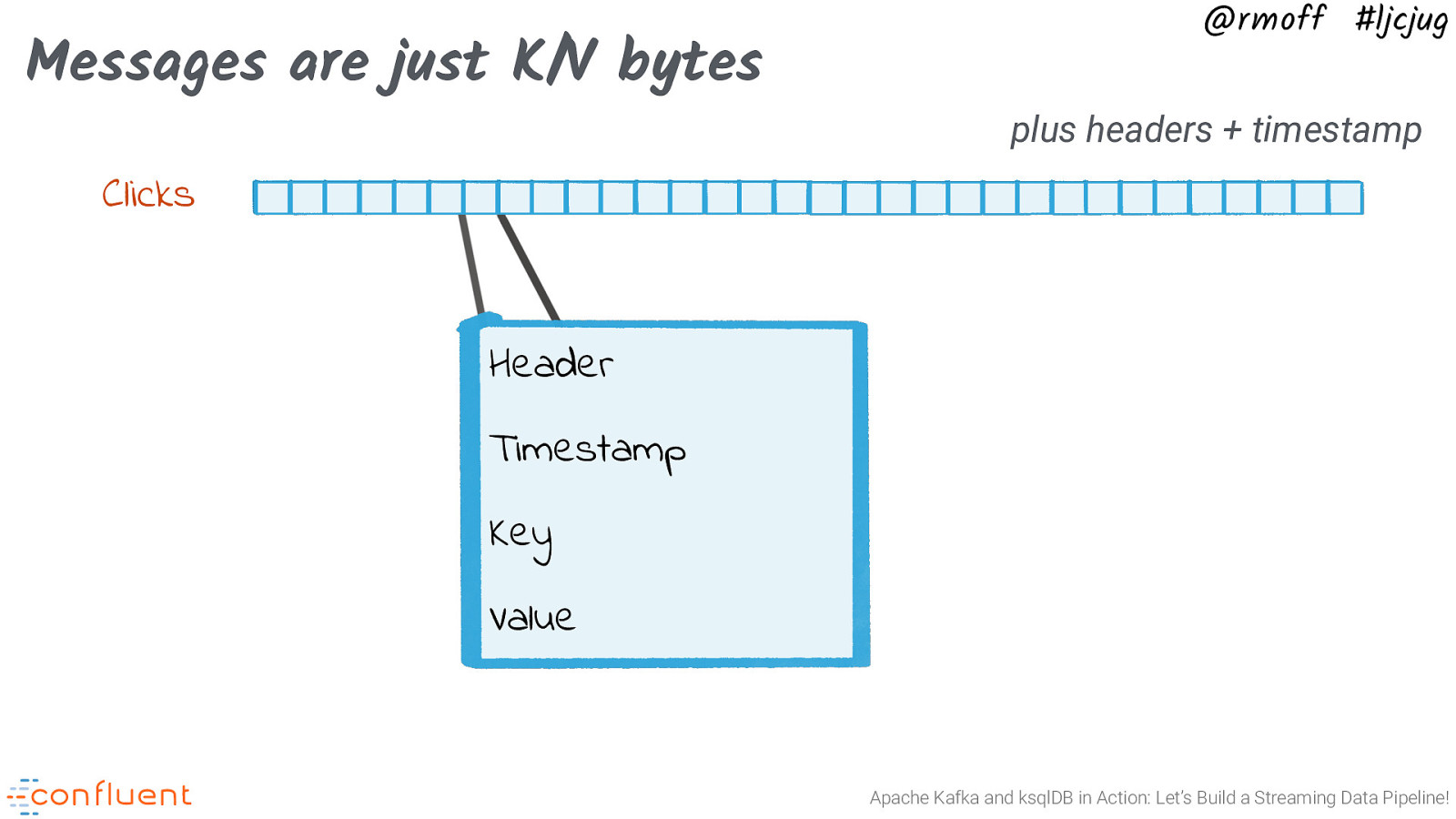

Messages are just K/V bytes, plus headers + timestamp

Clicks: Header, Timestamp, Key, Value
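To make "just K/V bytes" concrete, here is a small sketch in which the application, not the broker, decides how a value becomes bytes. The `Record` class, the helper names, and the sample click payload are all hypothetical; JSON is used only because it is in the standard library.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Record:
    """Hypothetical shape of a message: opaque bytes plus metadata."""
    key: bytes
    value: bytes
    timestamp_ms: int
    headers: list = field(default_factory=list)


def to_record(key: str, payload: dict, timestamp_ms: int) -> Record:
    # Serialization is the producer's job; the log only sees bytes.
    return Record(
        key=key.encode("utf-8"),
        value=json.dumps(payload).encode("utf-8"),
        timestamp_ms=timestamp_ms,
    )


def read_value(record: Record) -> dict:
    # The consumer must know the format to turn the bytes back into data.
    return json.loads(record.value.decode("utf-8"))


click = to_record("user-3", {"page": "/home"}, 1519304105213)
```

Producer and consumer have to agree on the format out of band, which is the problem a schema registry addresses.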

Slide 13

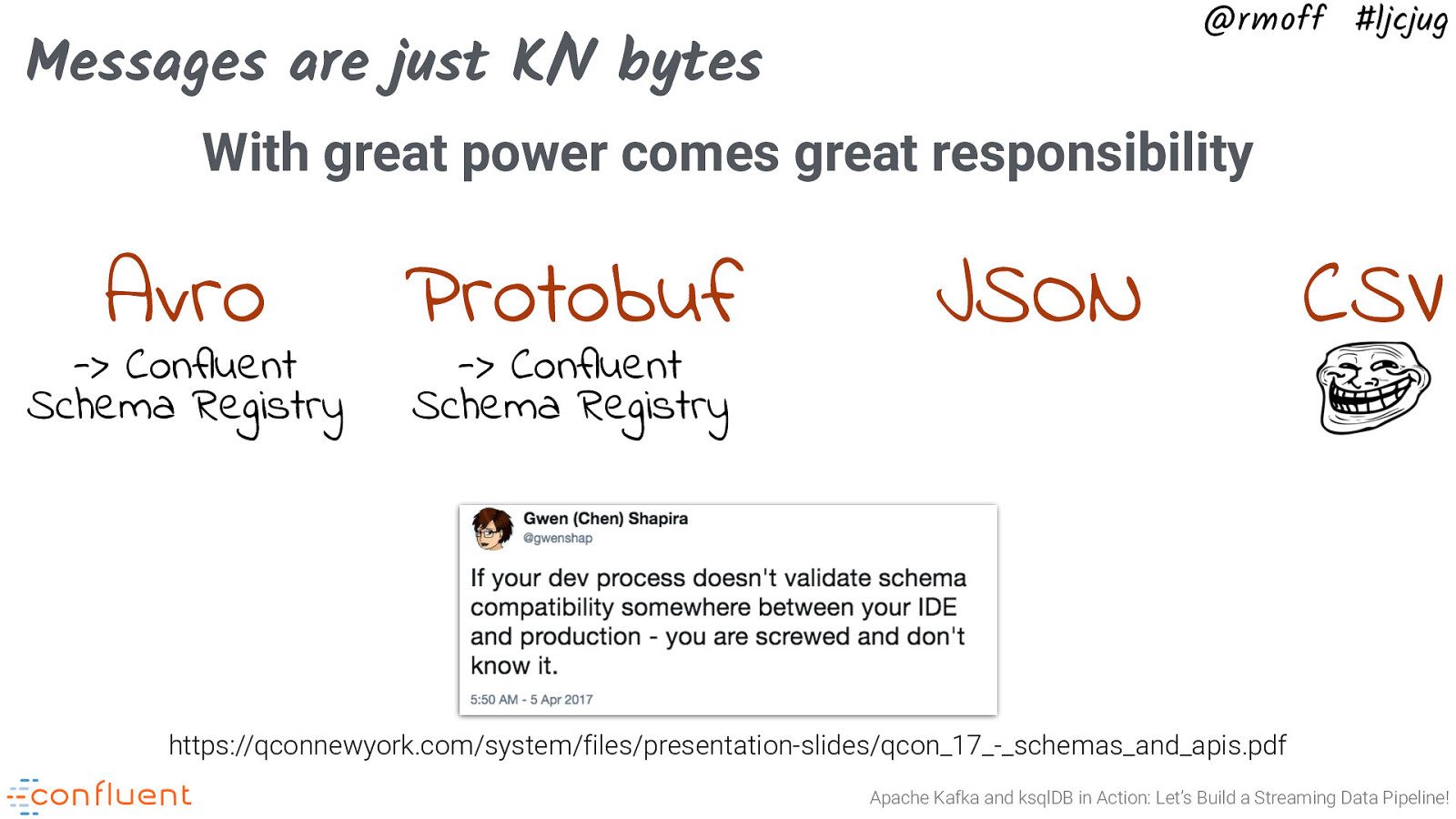

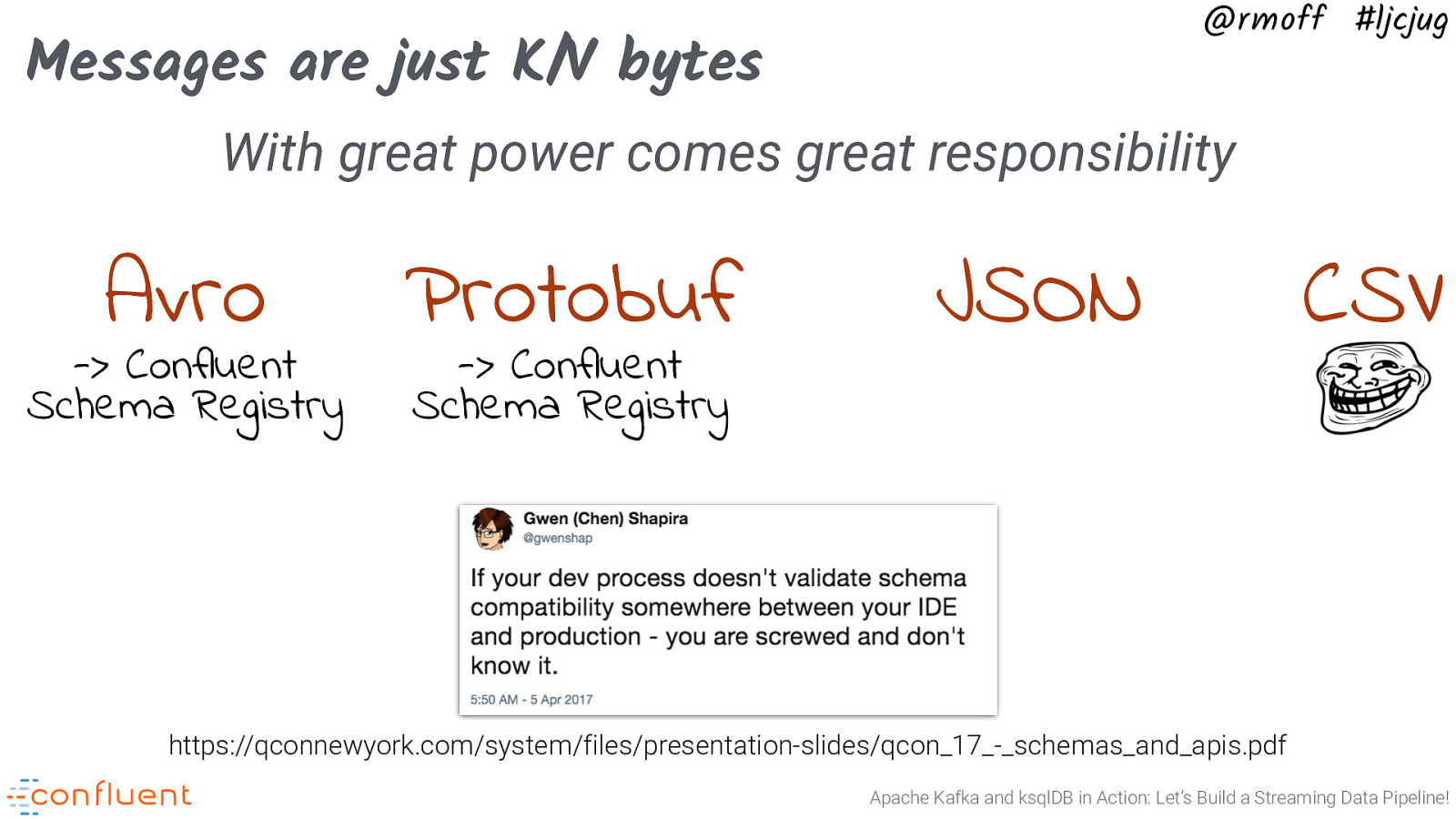

Messages are just K/V bytes

With great power comes great responsibility

• Avro -> Confluent Schema Registry
• Protobuf -> Confluent Schema Registry
• JSON
• CSV

https://qconnewyork.com/system/files/presentation-slides/qcon_17_-_schemas_and_apis.pdf

Slide 14

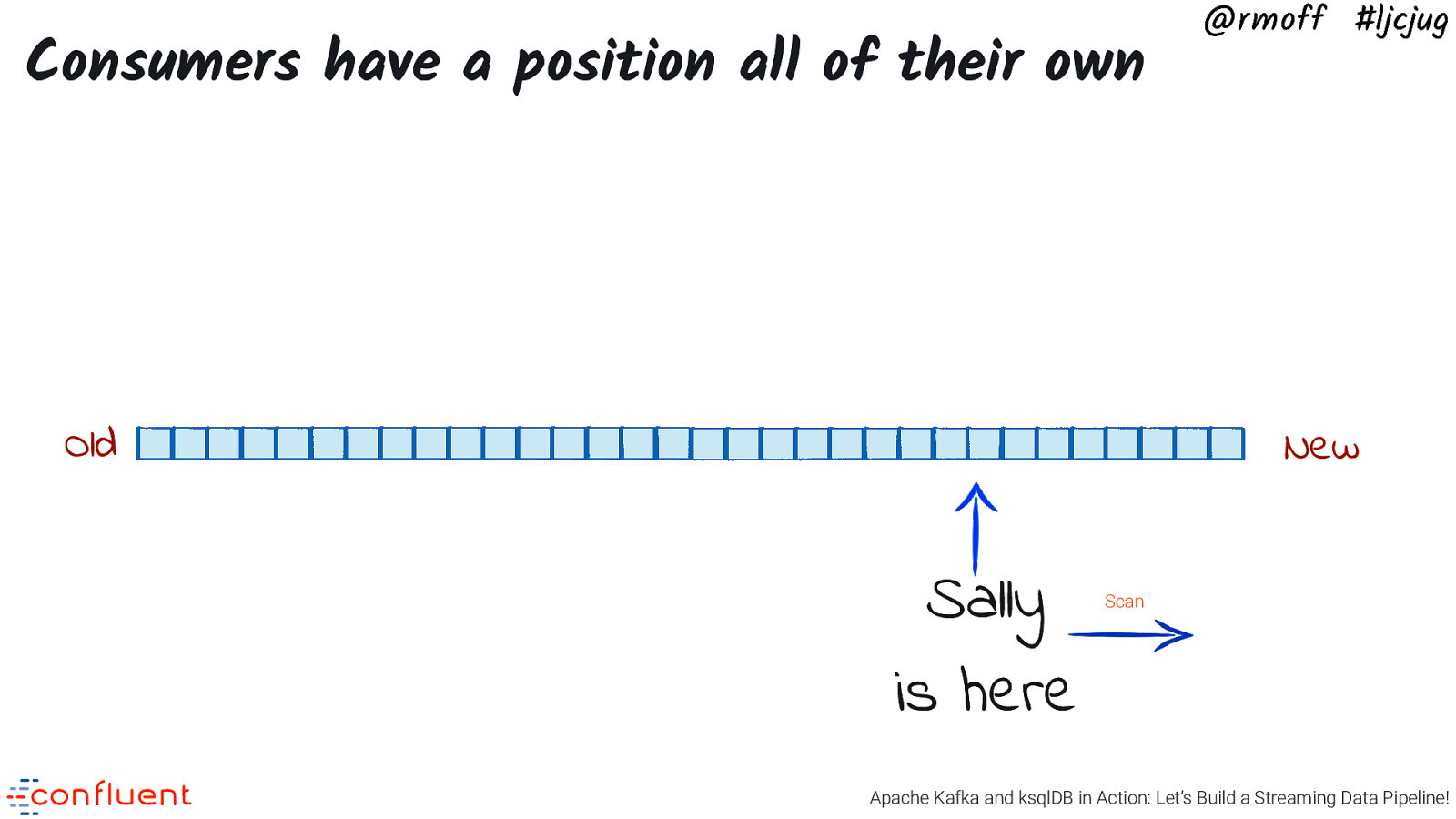

Consumers have a position all of their own

Old → New. Sally is here (Scan).

Slide 15

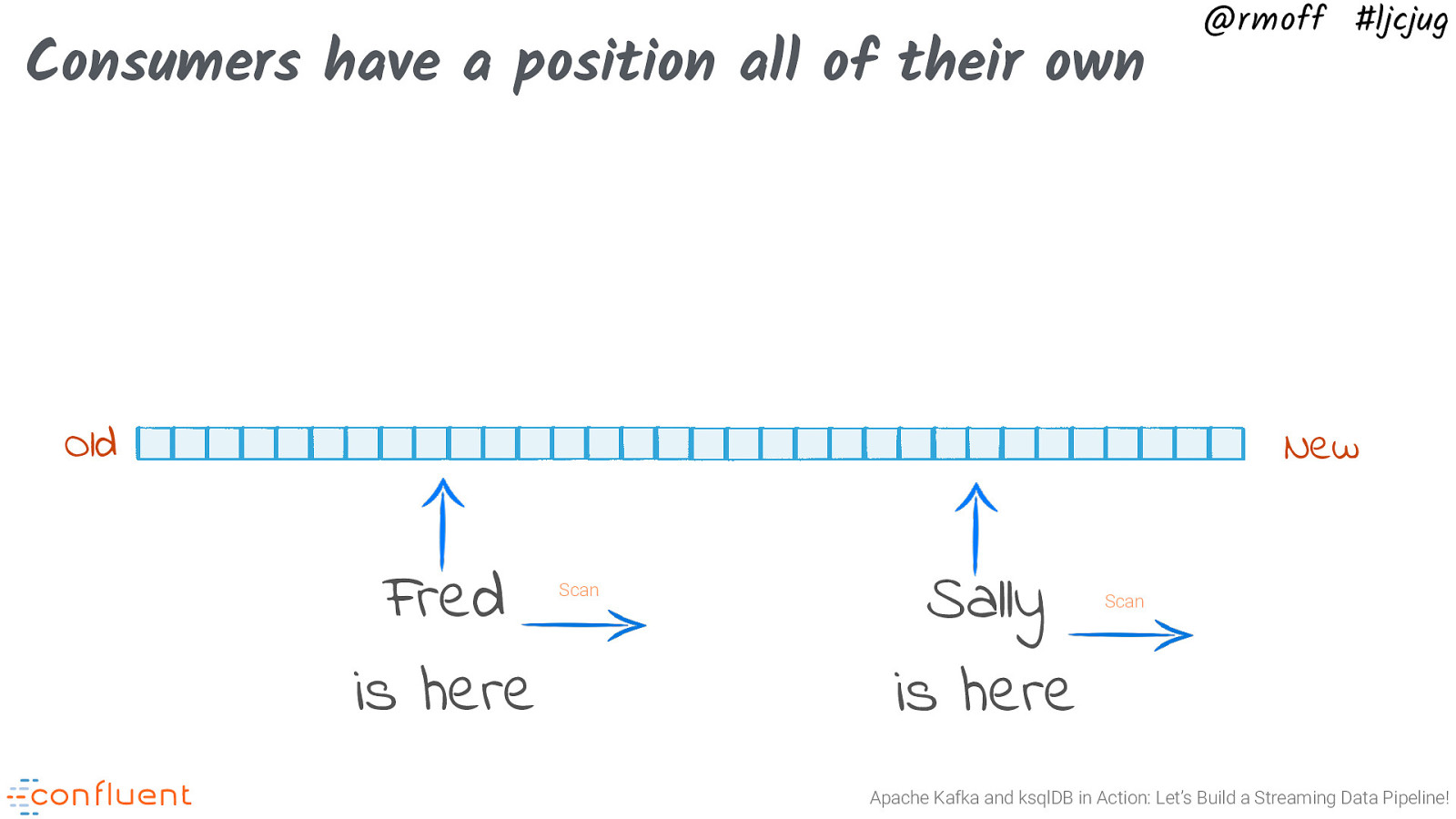

Consumers have a position all of their own

Old → New. Fred is here (Scan). Sally is here (Scan).

Slide 16

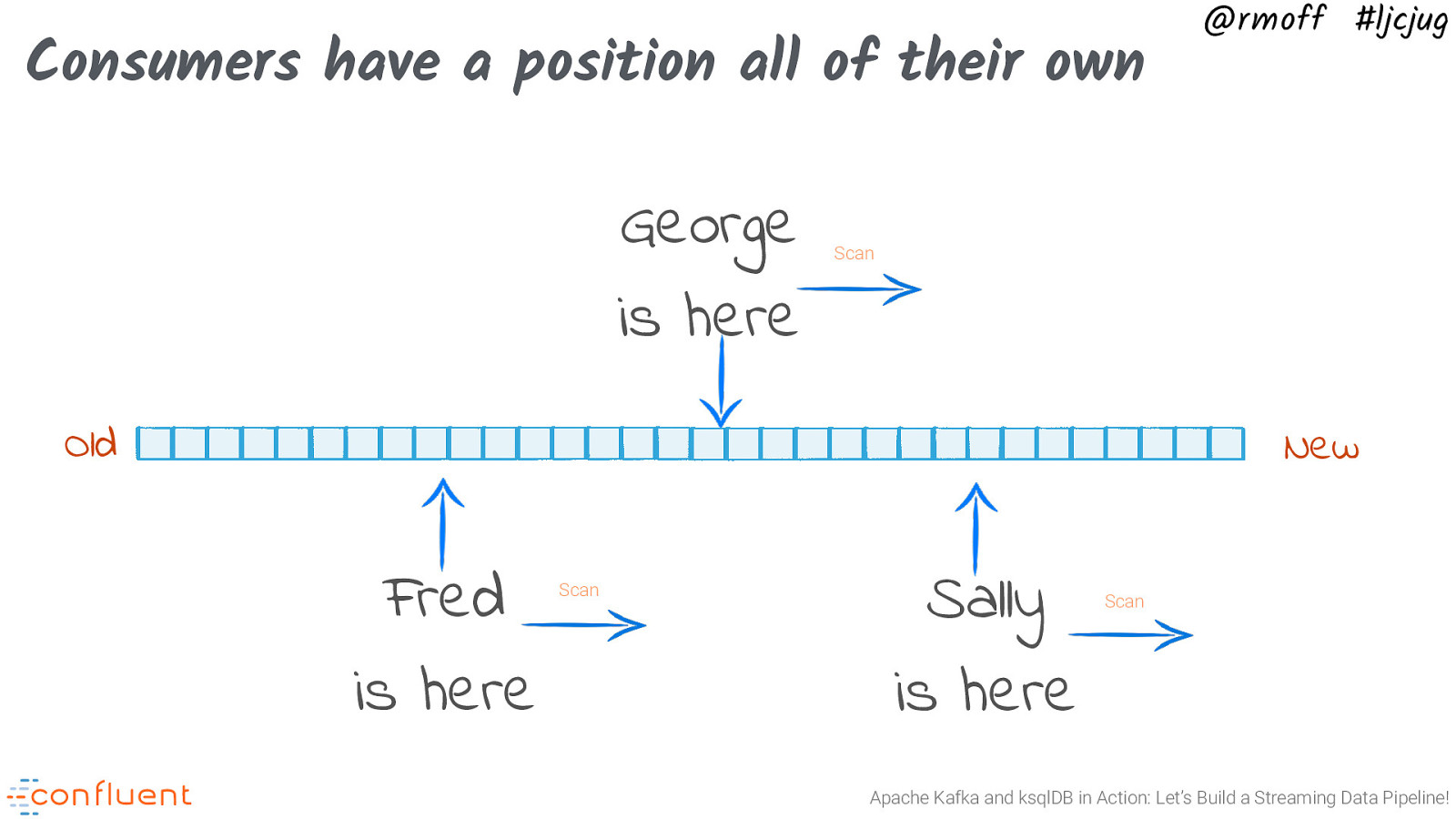

Consumers have a position all of their own

Old → New. George is here (Scan). Fred is here (Scan). Sally is here (Scan).
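The idea that each consumer keeps its own position can be sketched with a shared list and one offset per reader. The `poll` helper and the event names are hypothetical; the consumer names come from the slides.

```python
# One shared log, read independently by three consumers.
log = ["e0", "e1", "e2", "e3", "e4"]

# Each consumer tracks its own offset into the same log.
positions = {"Sally": 0, "Fred": 0, "George": 0}


def poll(consumer, max_records):
    """Return the next records for this consumer and advance only its offset."""
    start = positions[consumer]
    batch = log[start:start + max_records]
    positions[consumer] = start + len(batch)
    return batch


sally_batch = poll("Sally", 3)
fred_batch = poll("Fred", 1)
```

Sally reading ahead does not affect what Fred or George see next, because reading moves a position rather than consuming the record.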

Slide 17

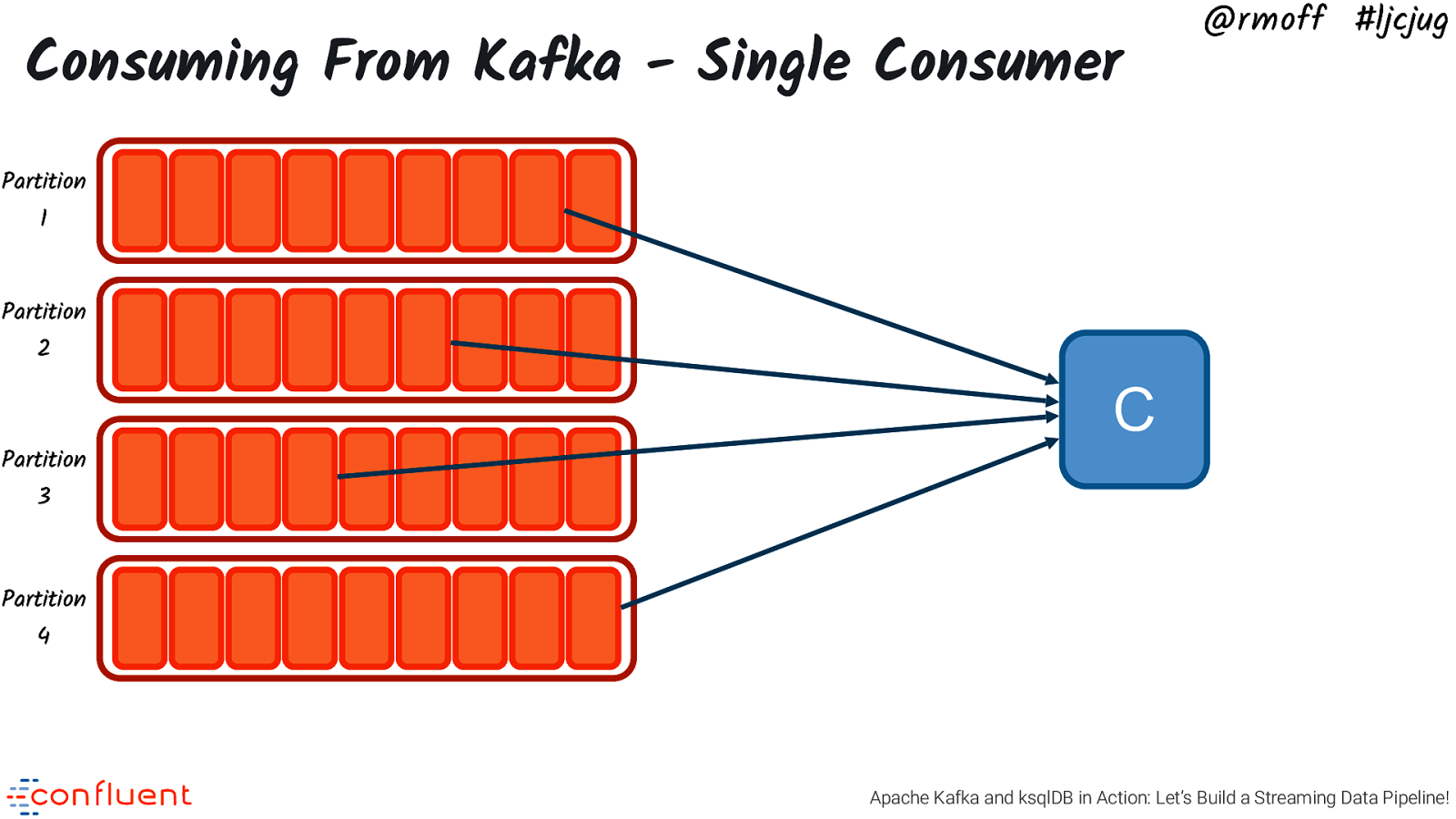

Consuming From Kafka - Single Consumer

Diagram labels: Partition 1 to Partition 4; consumer C.

Slide 18

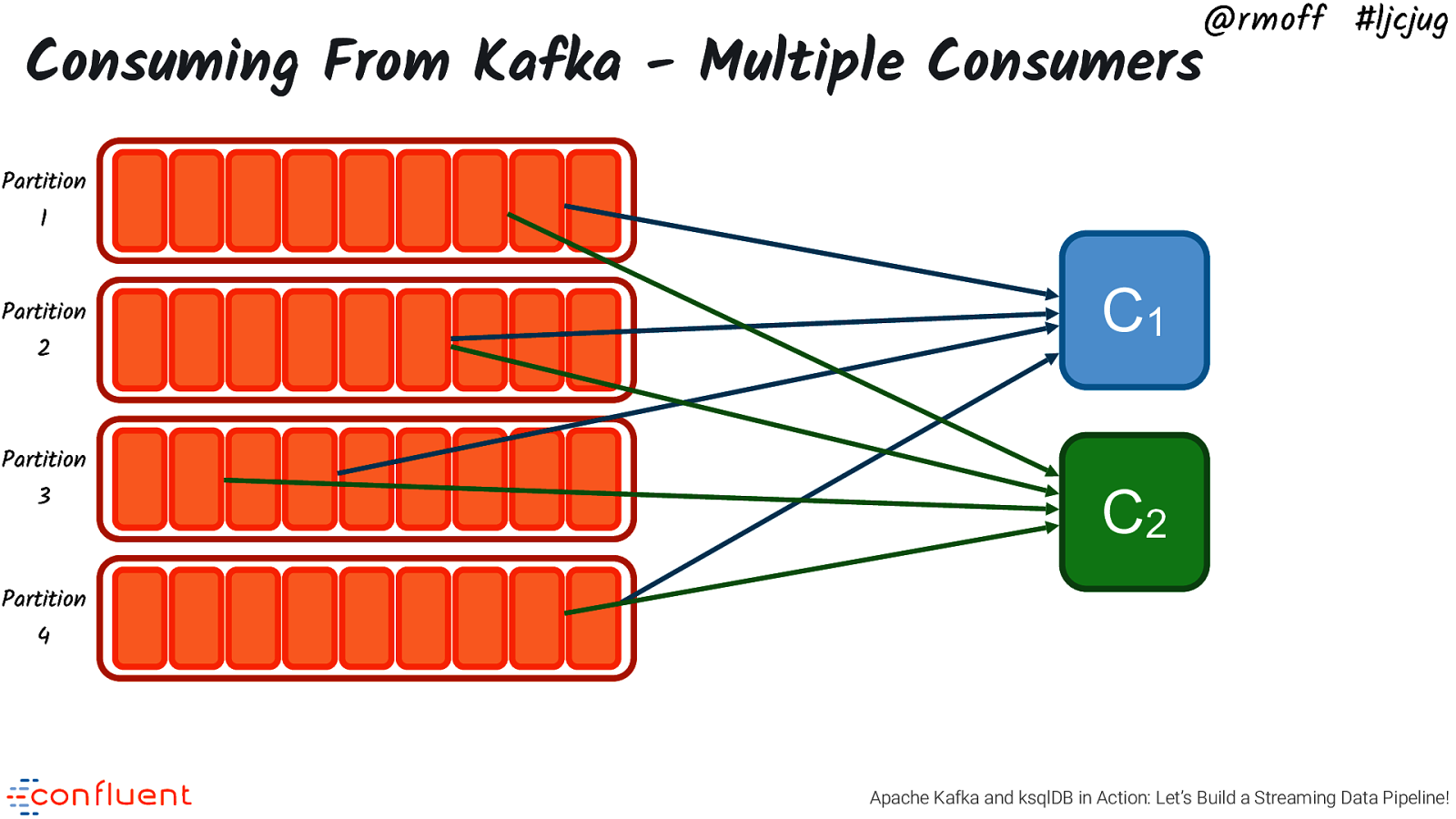

Consuming From Kafka - Multiple Consumers

Diagram labels: Partition 1 to Partition 4; consumers C1 and C2.

Slide 19

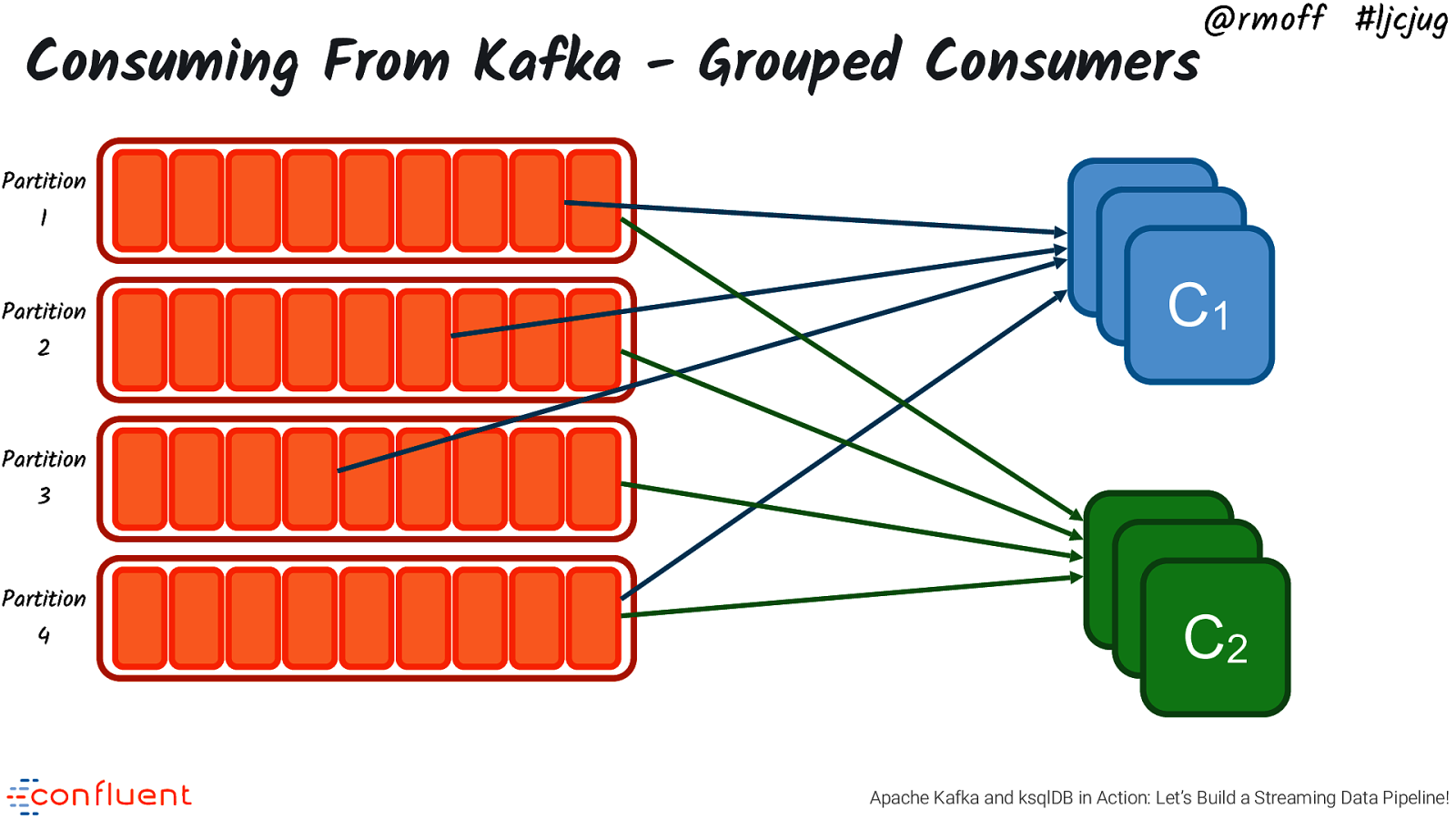

Consuming From Kafka - Grouped Consumers

Diagram labels: Partition 1 to Partition 4; consumers labelled CC C1 and CC C2.

Slide 20

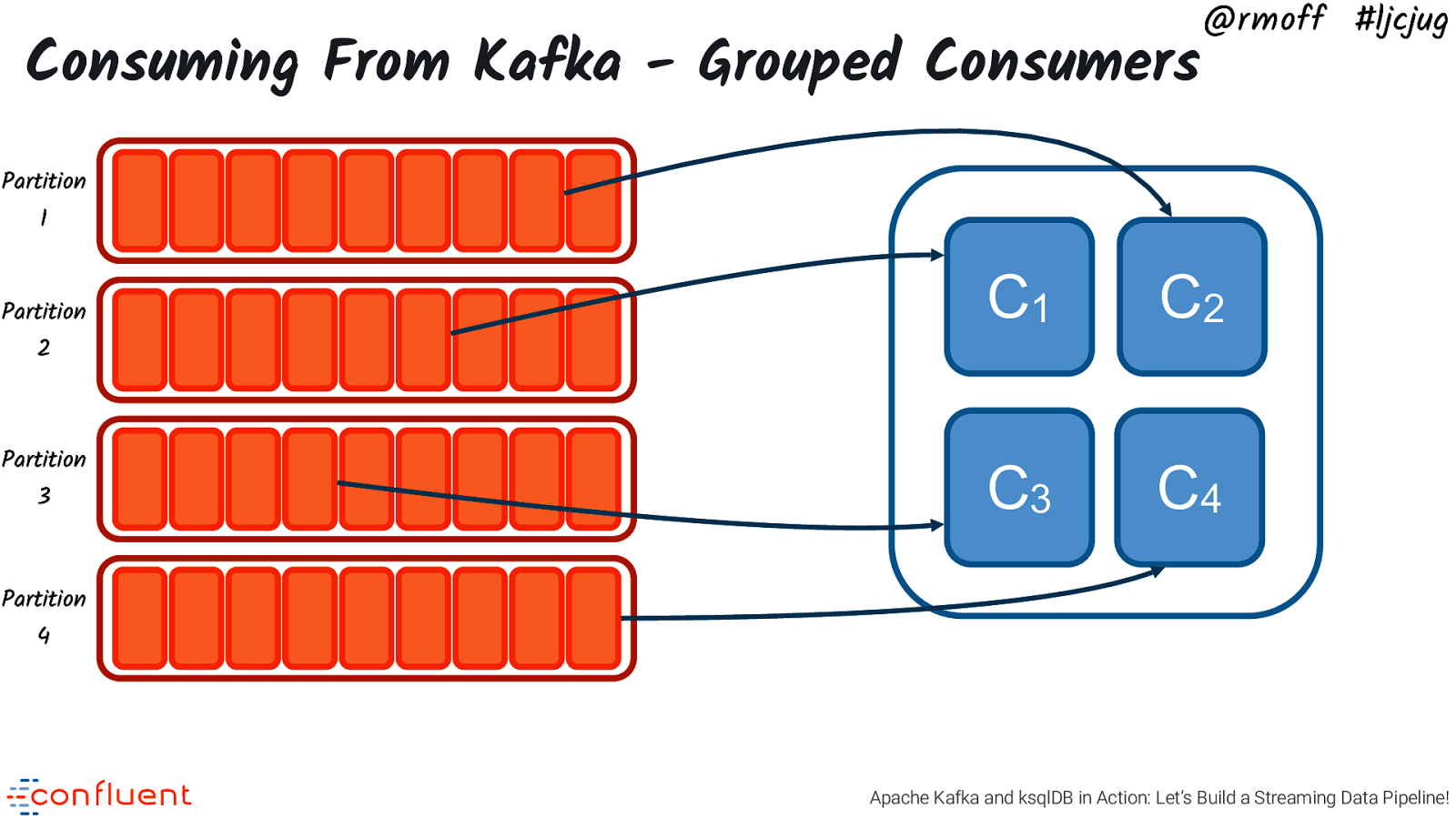

Consuming From Kafka - Grouped Consumers

Diagram labels: Partition 1 to Partition 4; consumers C1, C2, C3, C4.

Slide 21

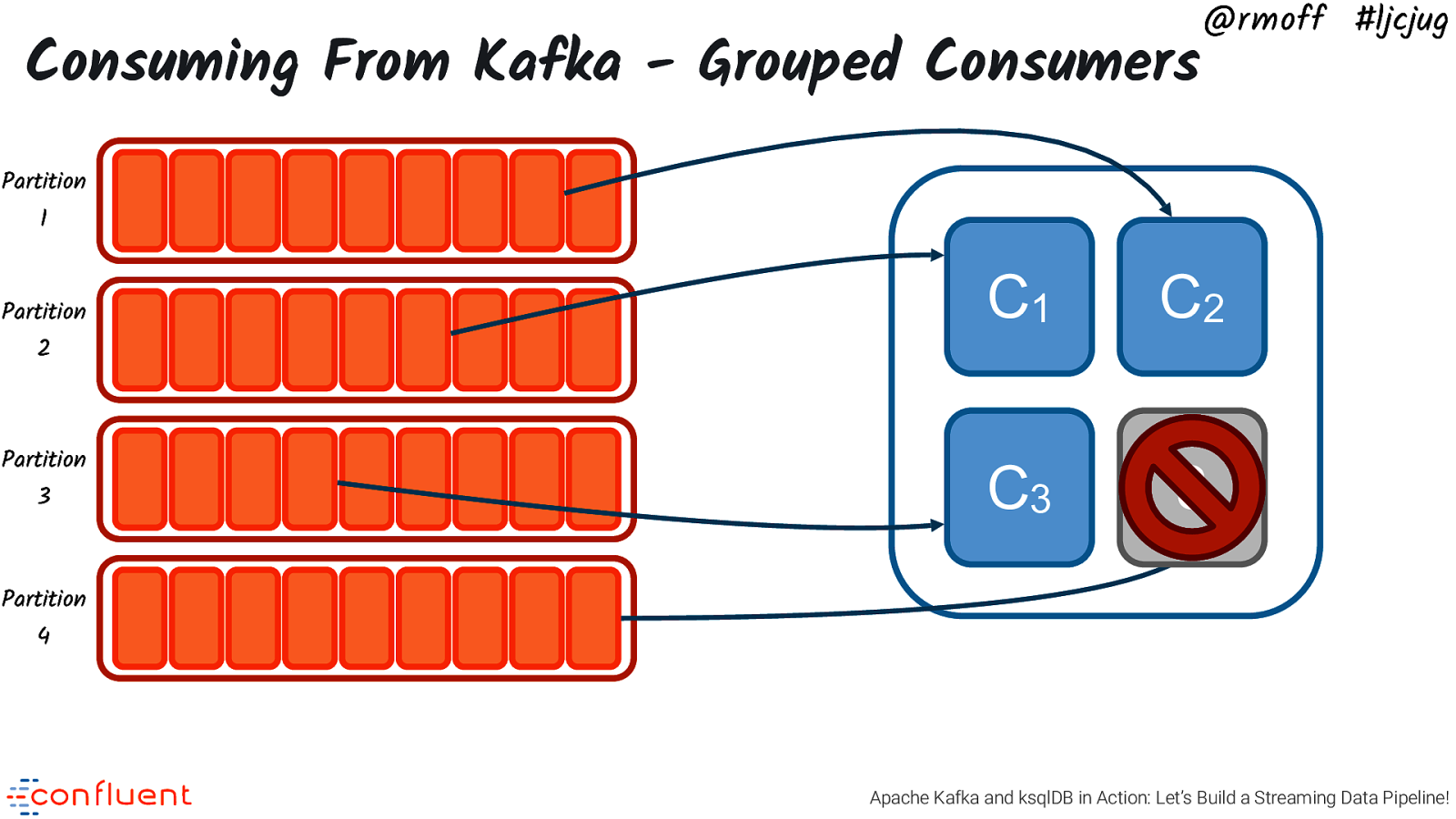

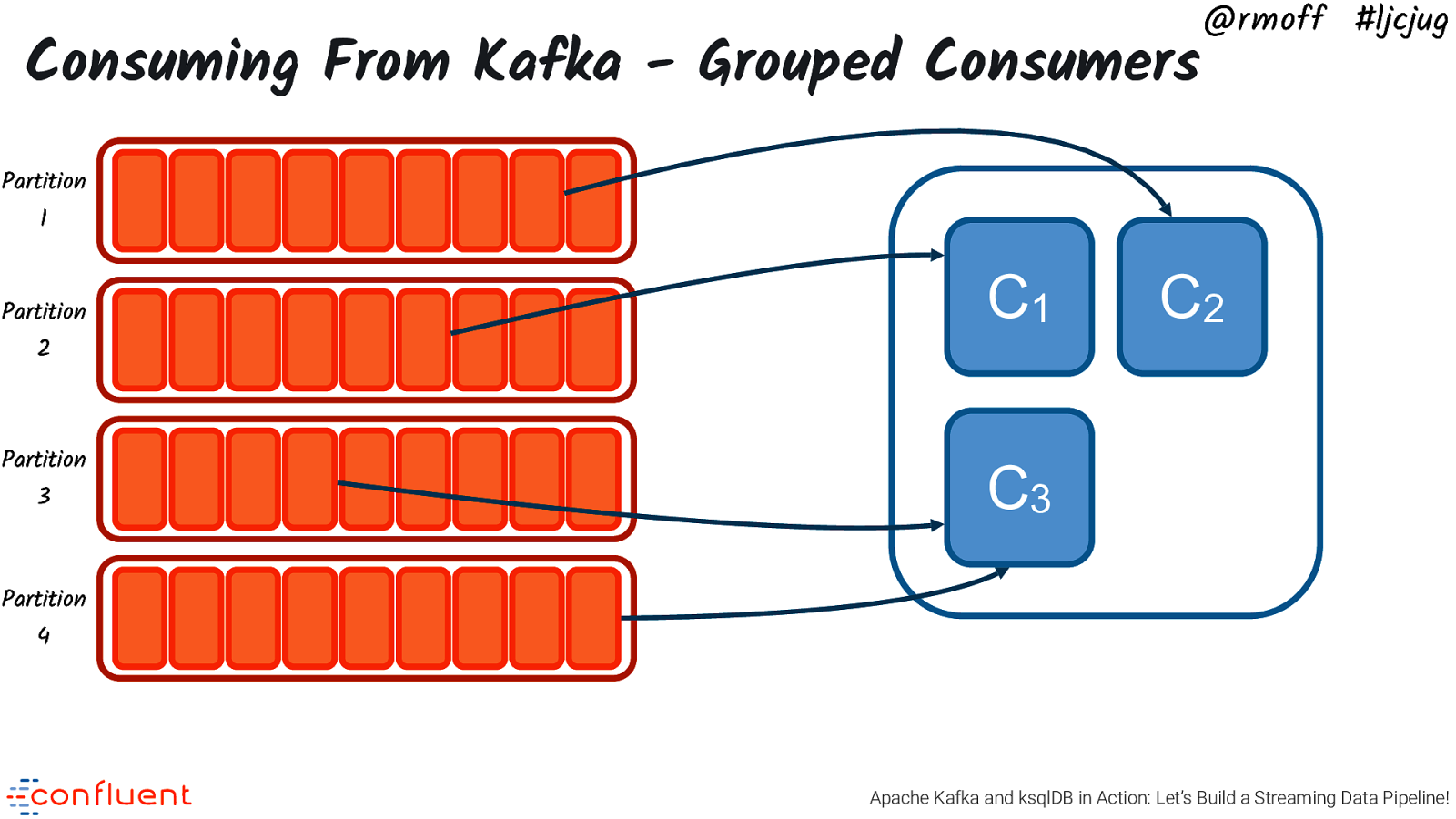

Consuming From Kafka - Grouped Consumers

Diagram labels: Partition 1 to Partition 4; consumers C1, C2, C3.

Slide 22

Consuming From Kafka - Grouped Consumers

Diagram labels: Partition 1 to Partition 4; consumers C1, C2, C3.
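The grouped-consumer slides show partitions being shared out among the members of a group. A simplified sketch of that idea follows; the `assign` function is hypothetical and deals partitions out in turn, which is not how Kafka's built-in assignors are implemented, but it shows the same outcome: each partition goes to exactly one consumer in the group.

```python
def assign(partitions, consumers):
    """Deal partitions out to group members in turn (simplified)."""
    assignment = {consumer: [] for consumer in consumers}
    for index, partition in enumerate(partitions):
        owner = consumers[index % len(consumers)]
        assignment[owner].append(partition)
    return assignment


partitions = [1, 2, 3, 4]
two_members = assign(partitions, ["C1", "C2"])
four_members = assign(partitions, ["C1", "C2", "C3", "C4"])
three_members = assign(partitions, ["C1", "C2", "C3"])
```

Re-running the assignment when membership changes stands in for a rebalance. If a group has more members than partitions, the extra members in this sketch get an empty list.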

Slide 23

Free Books! https://rmoff.dev/ljcjug

Slide 24

Streaming Data Pipelines

Photo by Victor Garcia on Unsplash

Slide 25

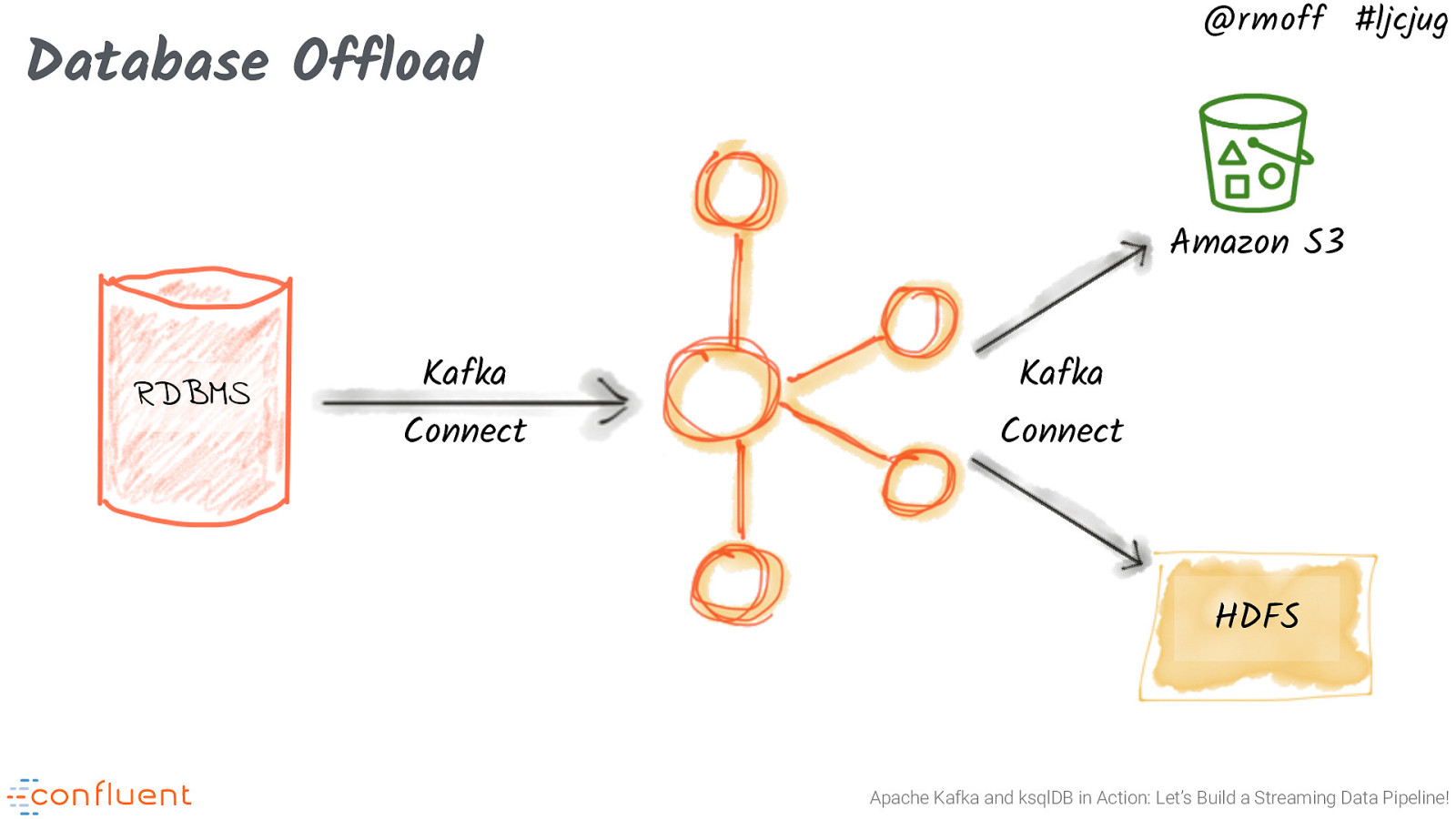

Database Offload

Diagram labels: RDBMS; Kafka Connect; Kafka Connect; Amazon S3; HDFS.

Slide 26

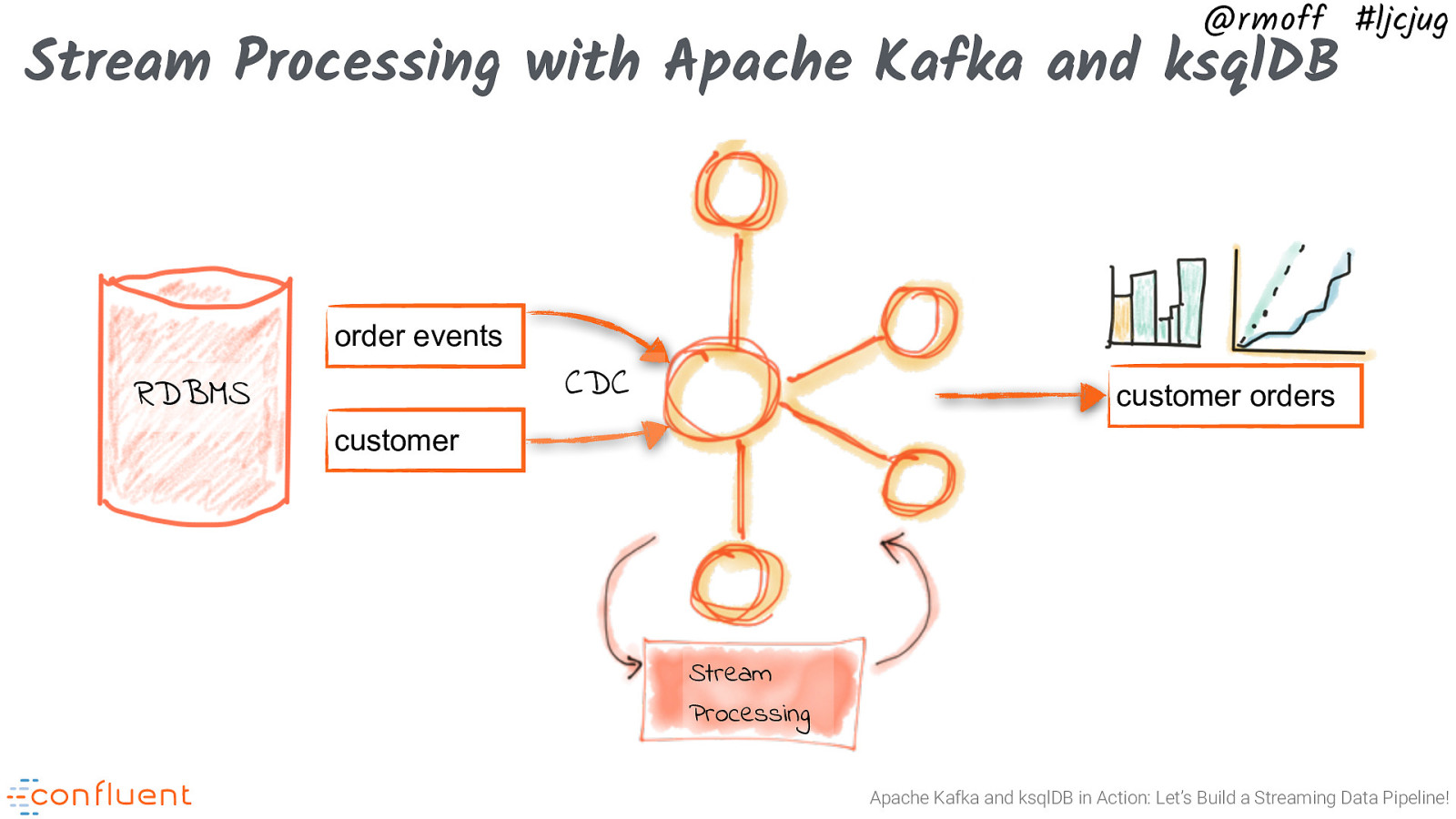

Stream Processing with Apache Kafka and ksqlDB

Diagram labels: order events; RDBMS; CDC; customer; Stream Processing; customer orders.

Slide 27

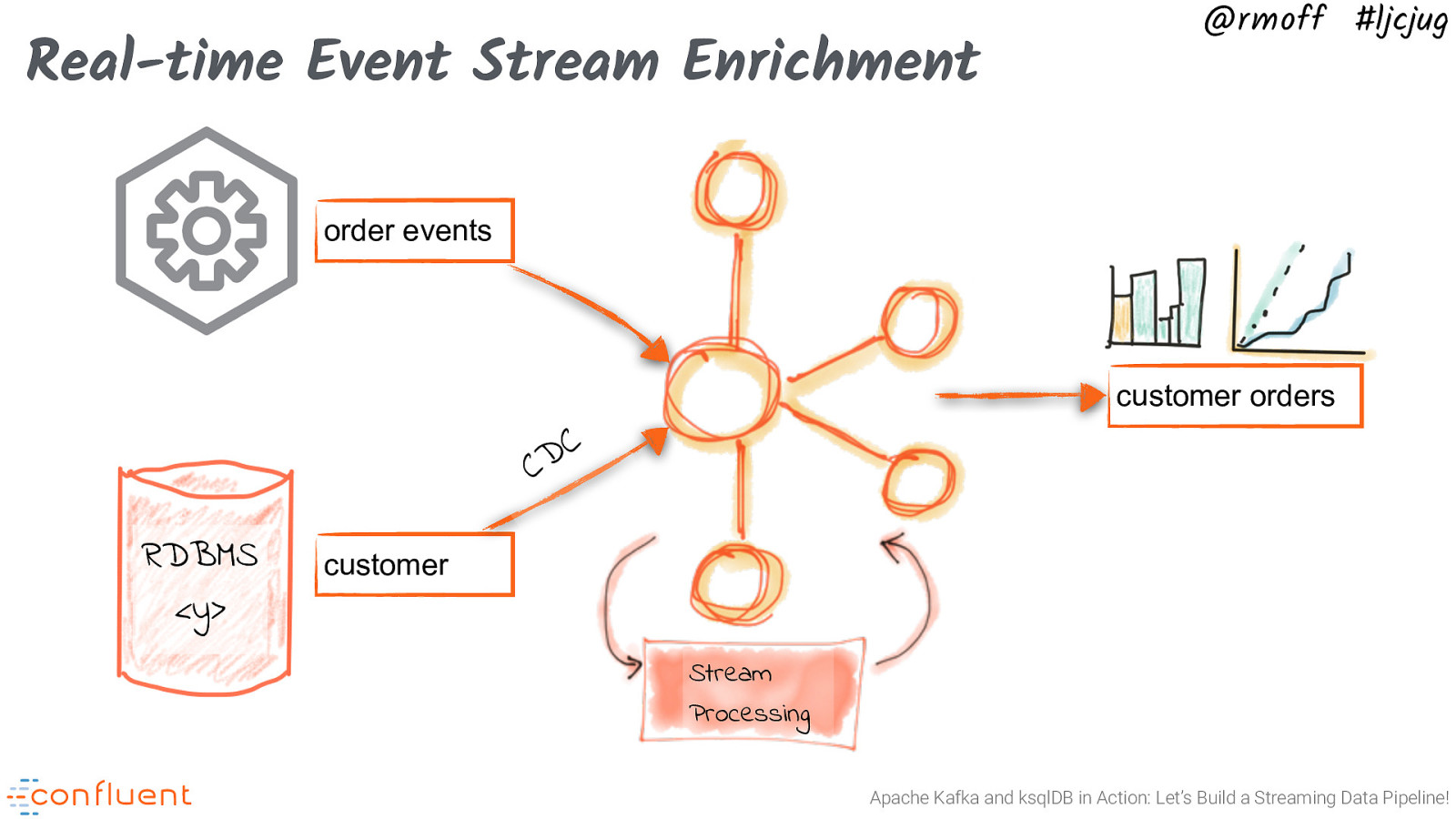

Real-time Event Stream Enrichment

Diagram labels: order events; RDBMS; CDC; customer; Stream Processing; customer orders.

Slide 28

Building a streaming data pipeline

Slide 29

Stream Integration + Processing

Slide 30

Integration

Slide 31

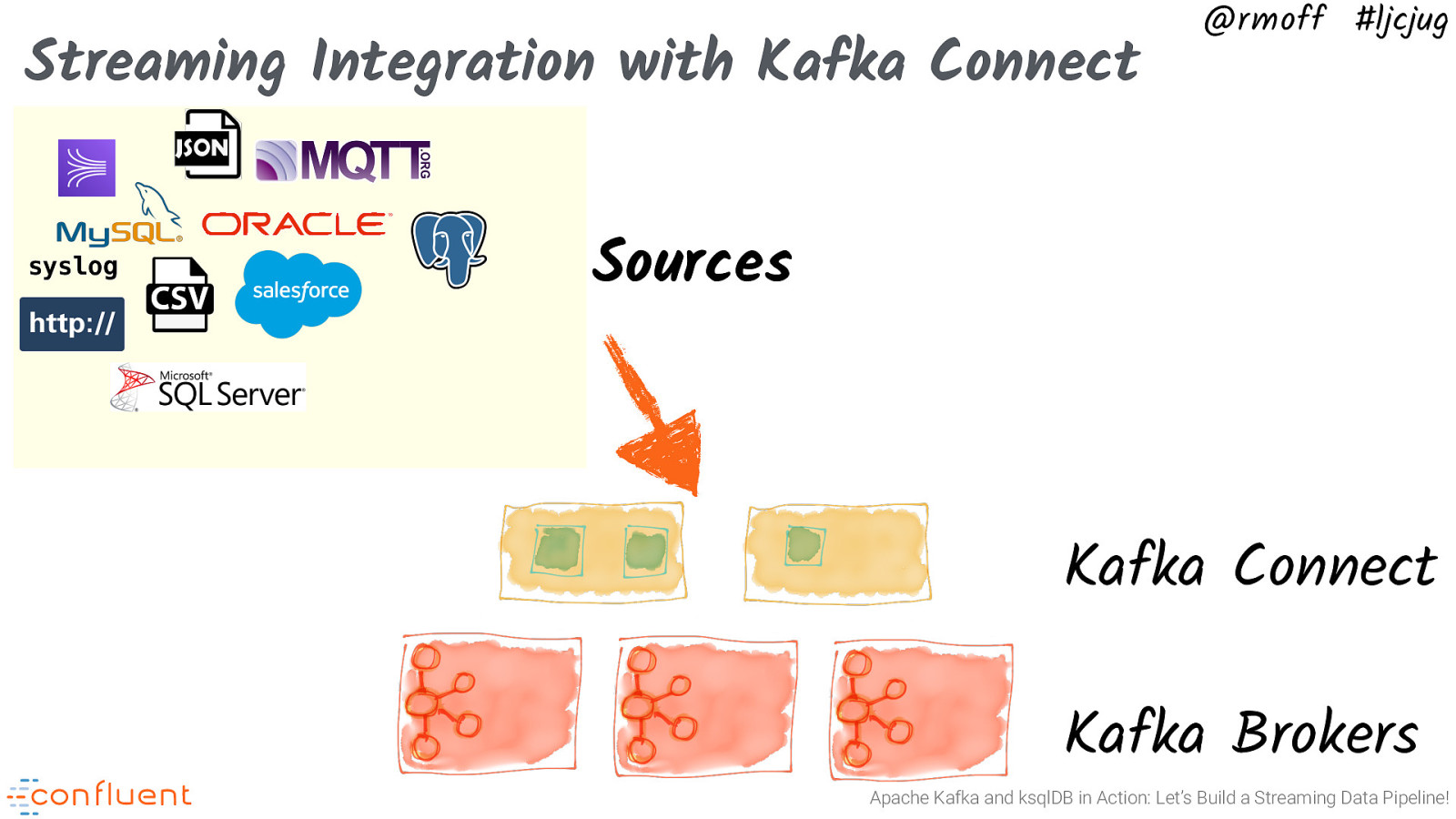

Streaming Integration with Kafka Connect

Diagram labels: Sources (syslog); Kafka Connect; Kafka Brokers.

Slide 32

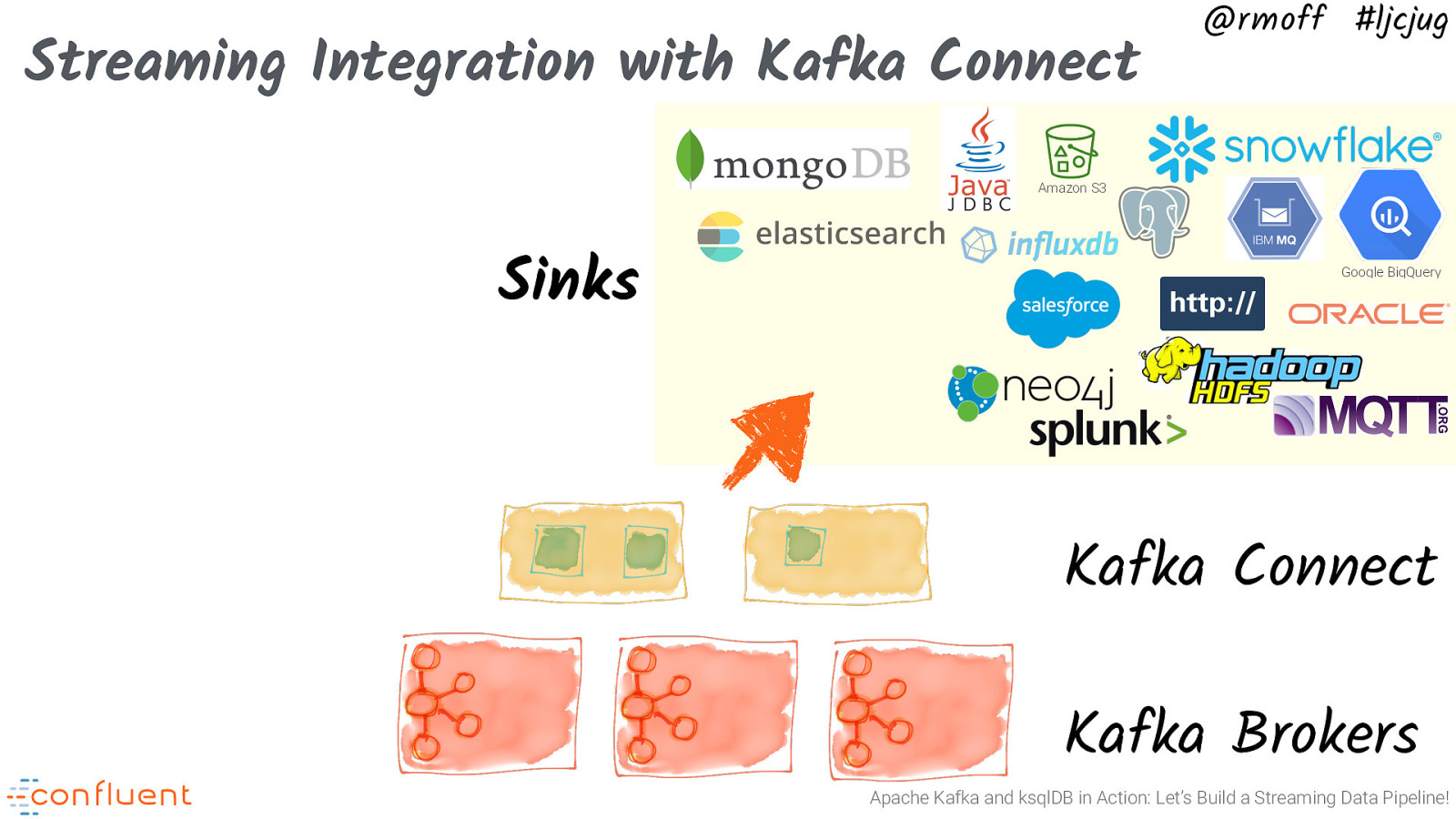

Streaming Integration with Kafka Connect

Diagram labels: Kafka Brokers; Kafka Connect; Sinks (Amazon S3, Google BigQuery).

Slide 33

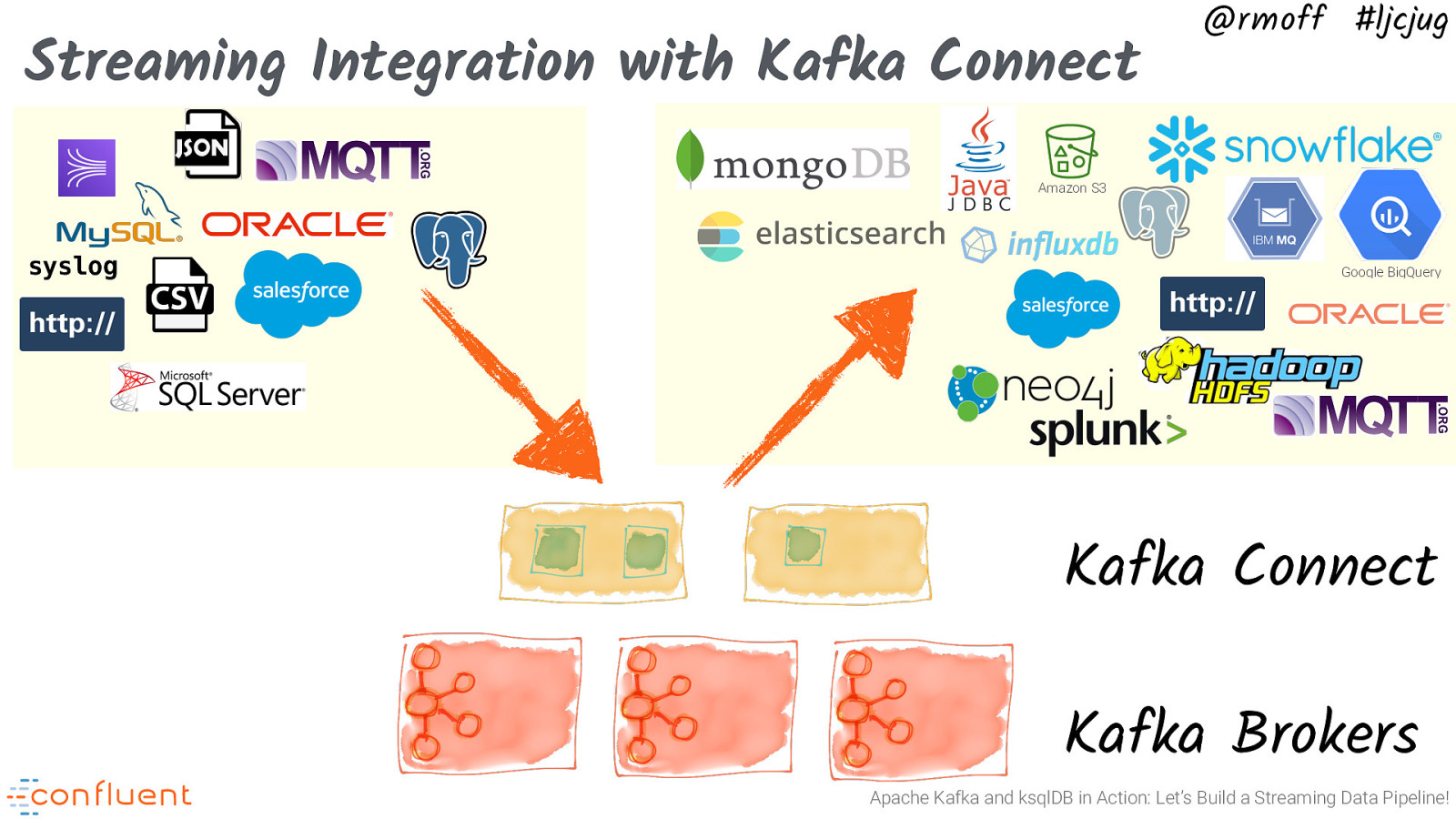

Streaming Integration with Kafka Connect

Diagram labels: syslog; Kafka Connect; Kafka Brokers; Amazon S3; Google BigQuery.

Slide 34

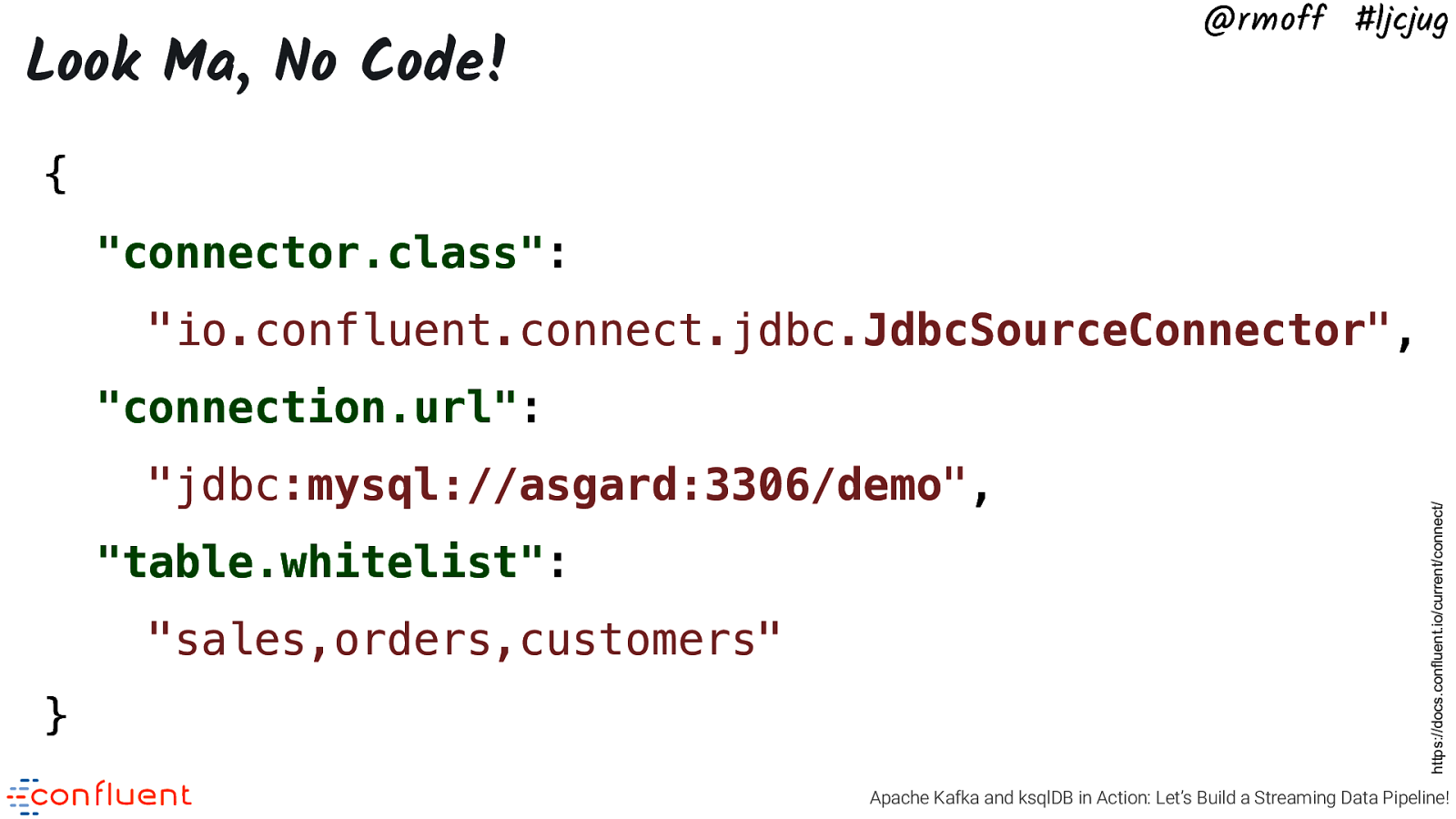

Look Ma, No Code!

    {
      "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
      "connection.url": "jdbc:mysql://asgard:3306/demo",
      "table.whitelist": "sales,orders,customers"
    }

https://docs.confluent.io/current/connect/

Slide 35

Messages are just K/V bytes

With great power comes great responsibility

• Avro -> Confluent Schema Registry
• Protobuf -> Confluent Schema Registry
• JSON
• CSV

https://qconnewyork.com/system/files/presentation-slides/qcon_17_-_schemas_and_apis.pdf

Slide 36

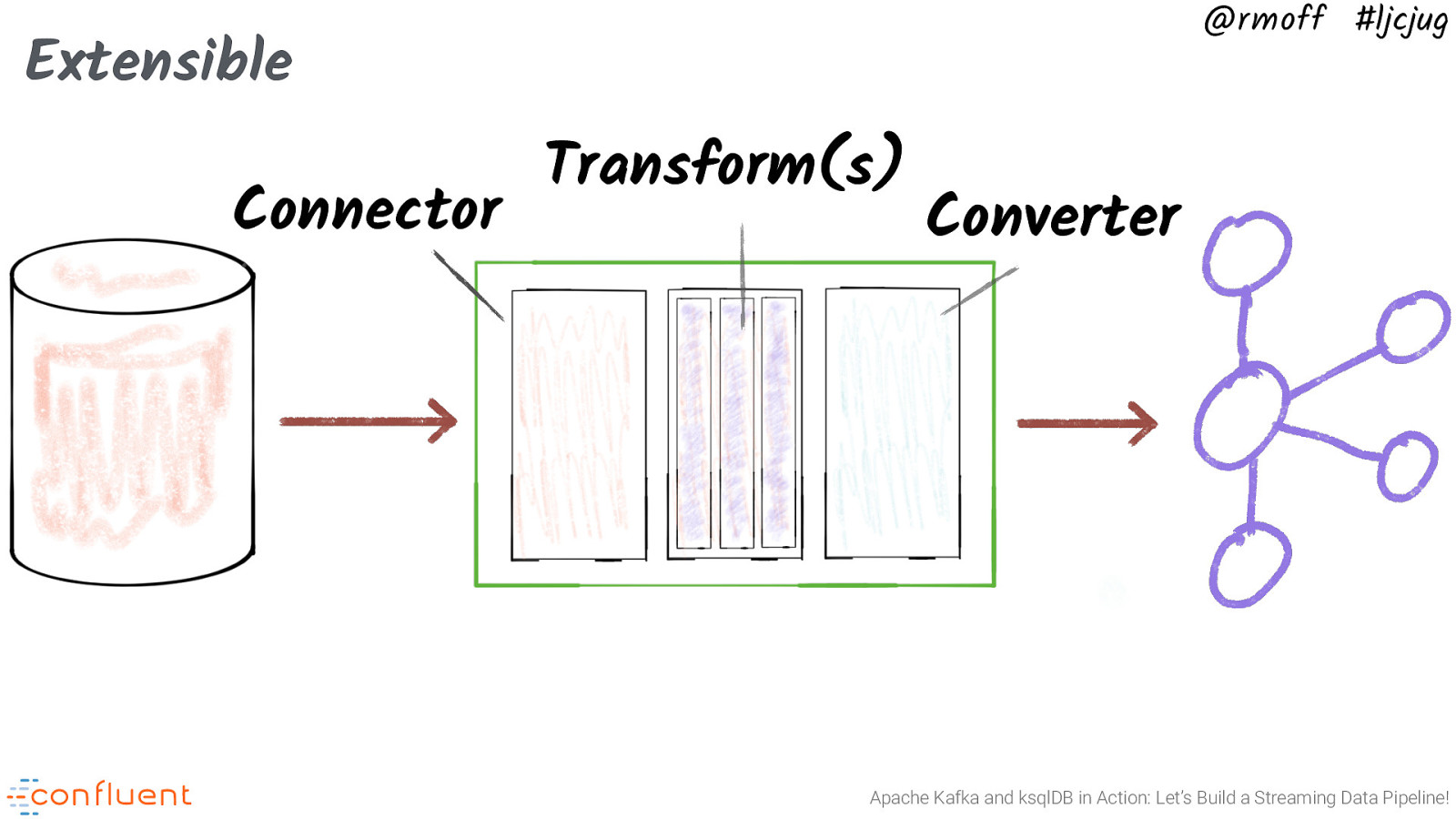

Extensible

Diagram labels: Connector; Transform(s); Converter.

Slide 37

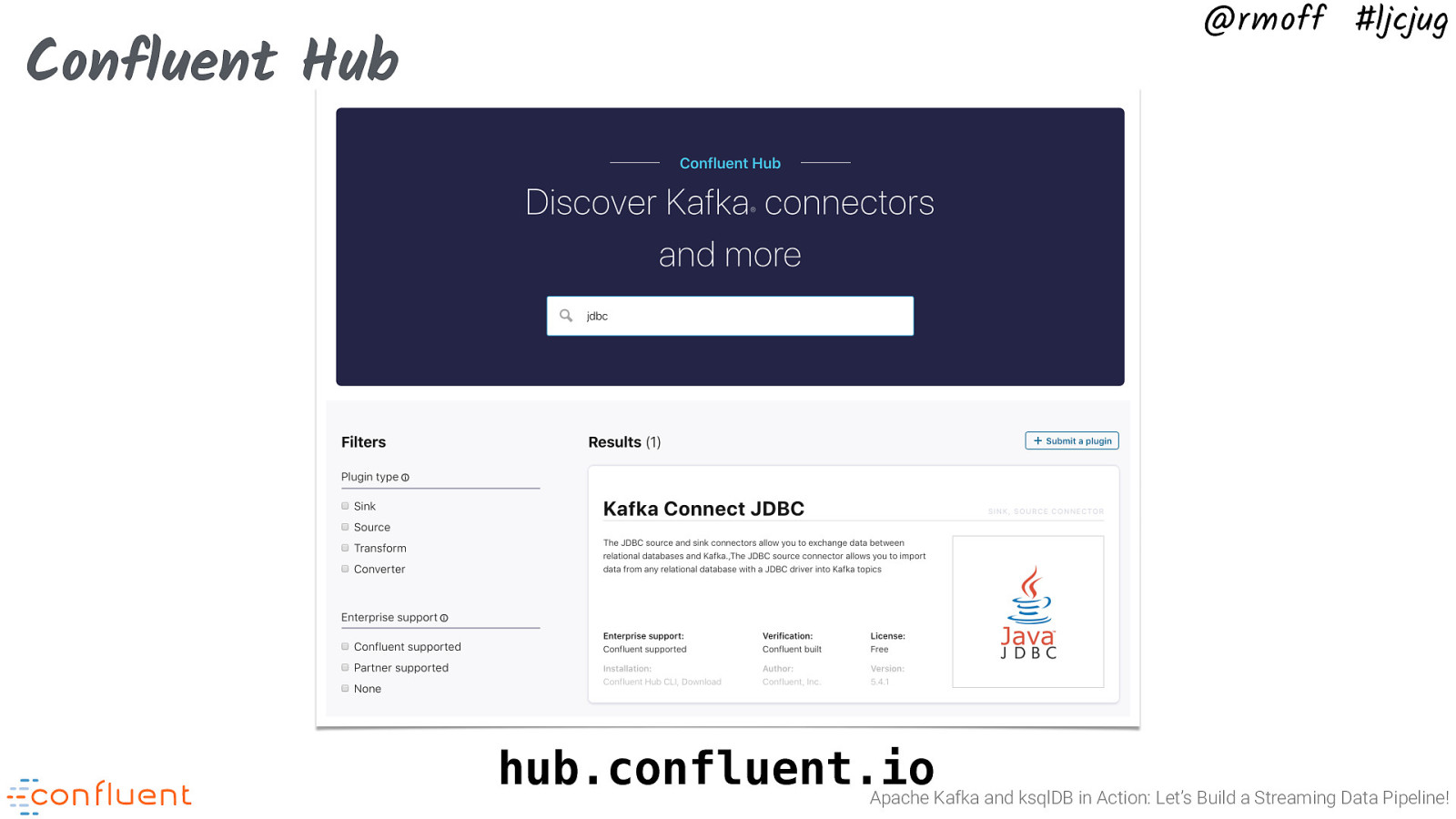

Confluent Hub

hub.confluent.io

Slide 38

Stream Integration + Processing

Slide 39

Stream Processing

Slide 40

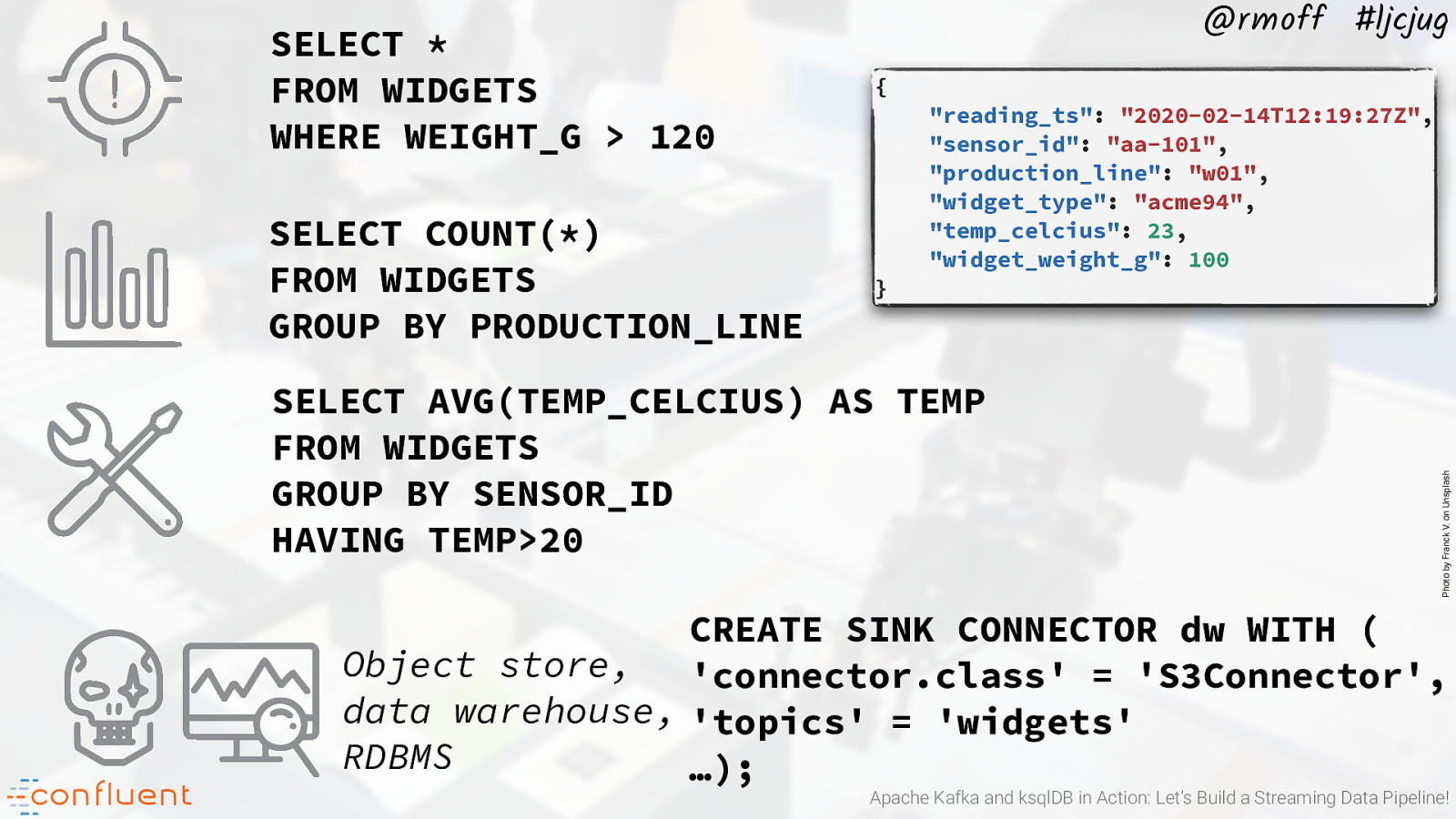

    {
      "reading_ts": "2020-02-14T12:19:27Z",
      "sensor_id": "aa-101",
      "production_line": "w01",
      "widget_type": "acme94",
      "temp_celcius": 23,
      "widget_weight_g": 100
    }

Photo by Franck V. on Unsplash

Slide 41

    {
      "reading_ts": "2020-02-14T12:19:27Z",
      "sensor_id": "aa-101",
      "production_line": "w01",
      "widget_type": "acme94",
      "temp_celcius": 23,
      "widget_weight_g": 100
    }

    SELECT * FROM WIDGETS WHERE WEIGHT_G > 120

    SELECT COUNT(*) FROM WIDGETS GROUP BY PRODUCTION_LINE

    SELECT AVG(TEMP_CELCIUS) AS TEMP FROM WIDGETS GROUP BY SENSOR_ID HAVING TEMP>20

    CREATE SINK CONNECTOR dw WITH (
      'connector.class' = 'S3Connector',
      'topics' = 'widgets'
      …);

Sink targets shown: Object store, data warehouse, RDBMS.

Photo by Franck V. on Unsplash

Slide 42

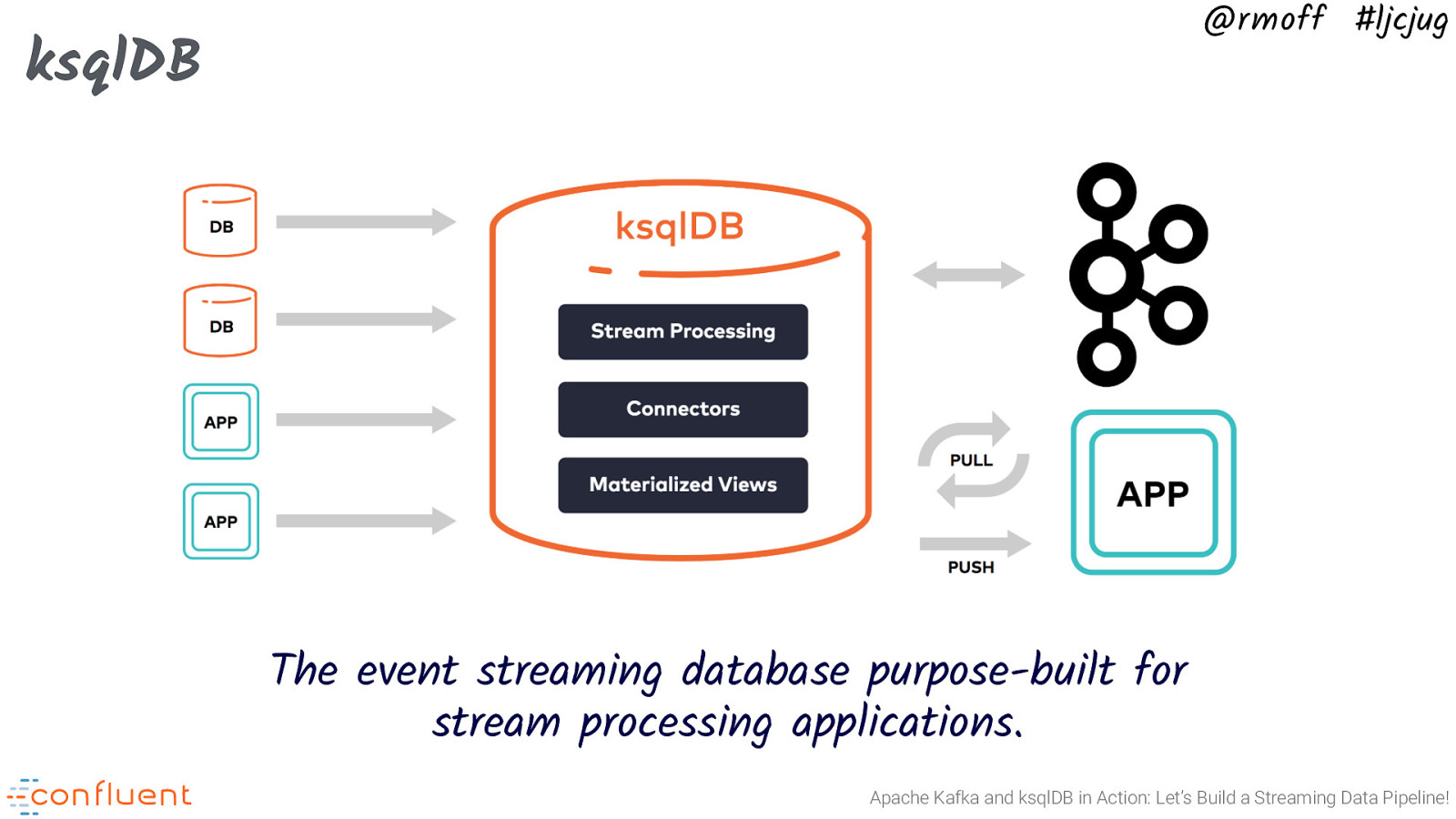

ksqlDB

The event streaming database purpose-built for stream processing applications.

Slide 43

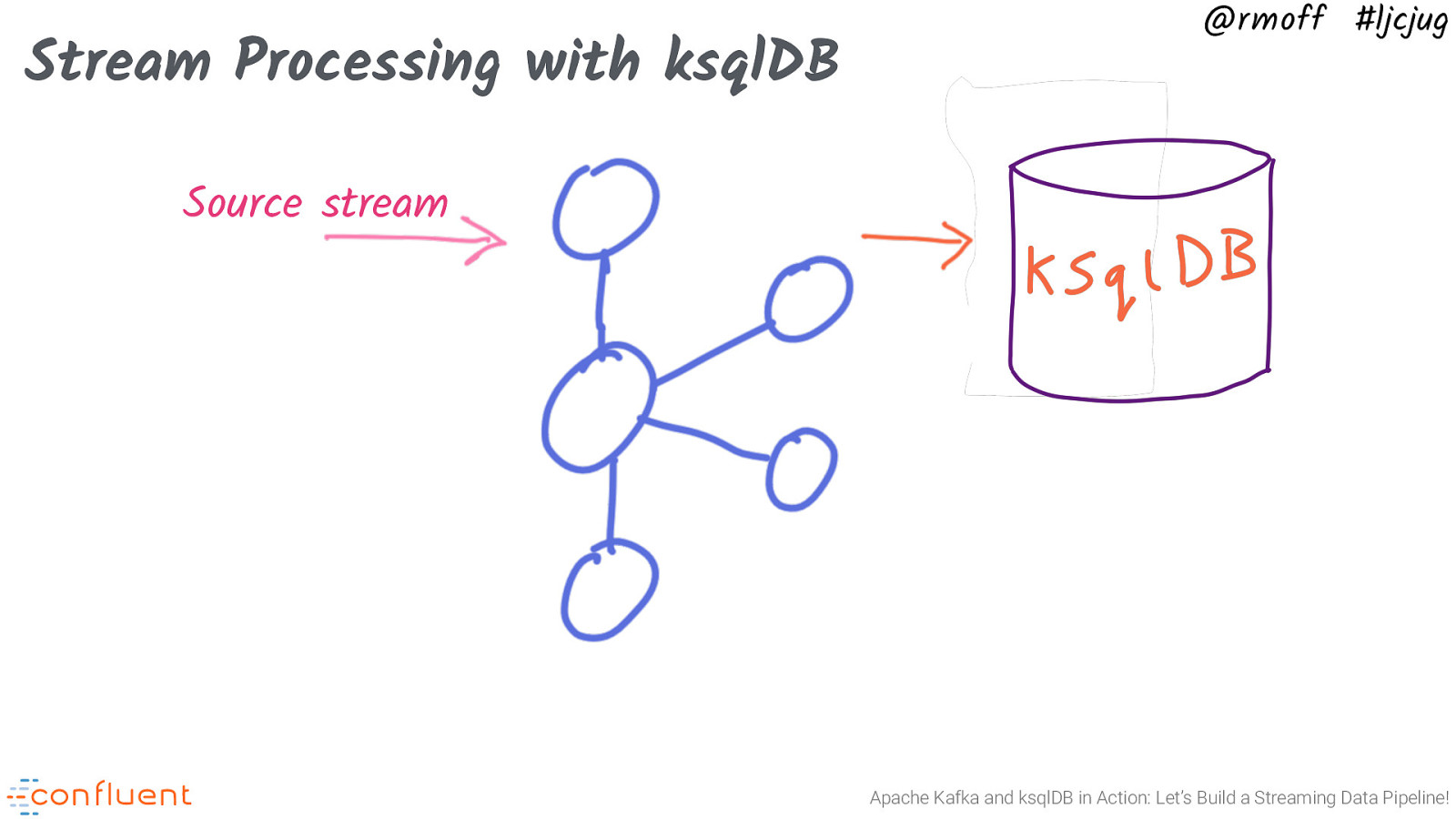

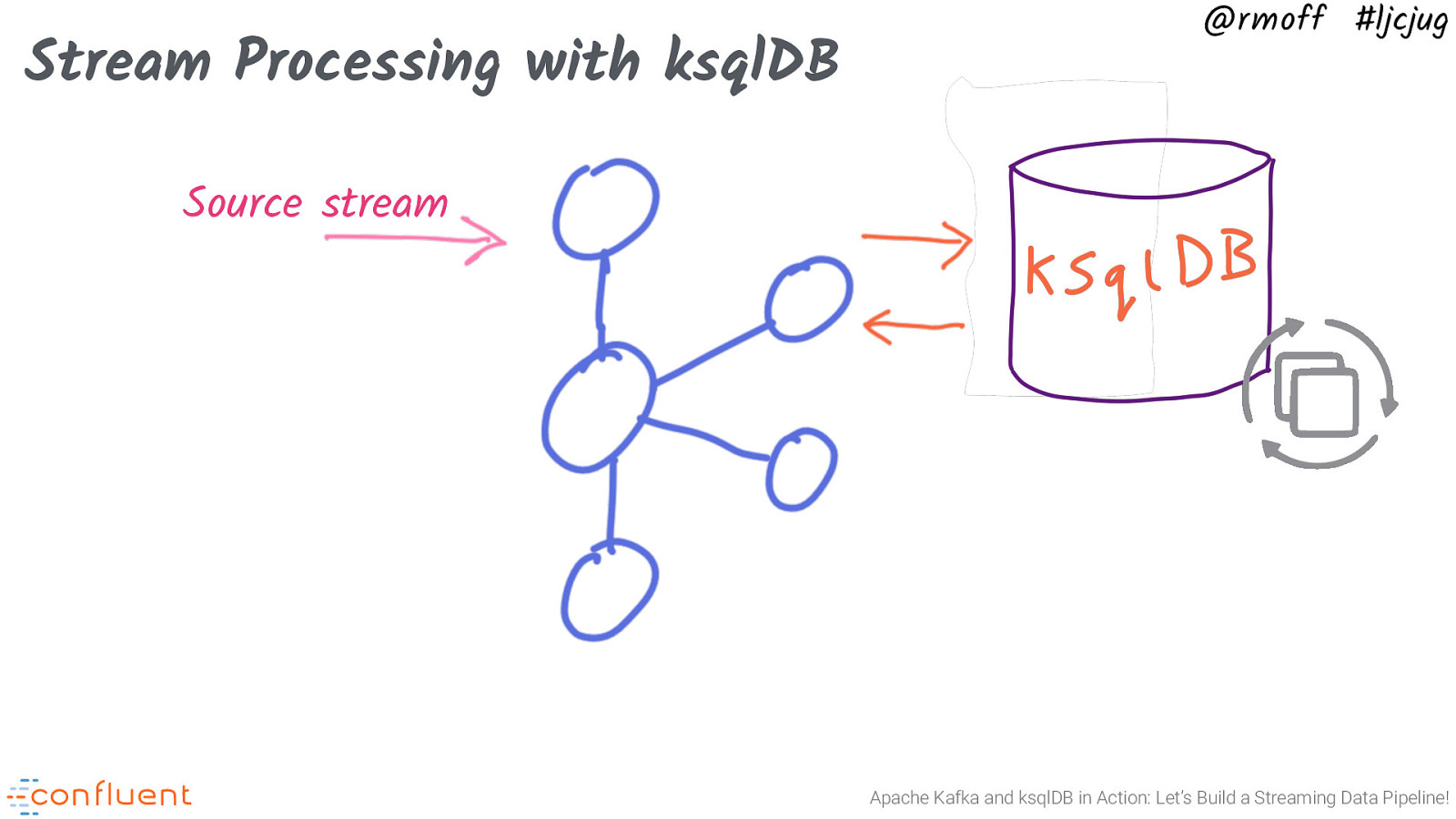

Stream Processing with ksqlDB

Diagram labels: Source stream.

Slide 44

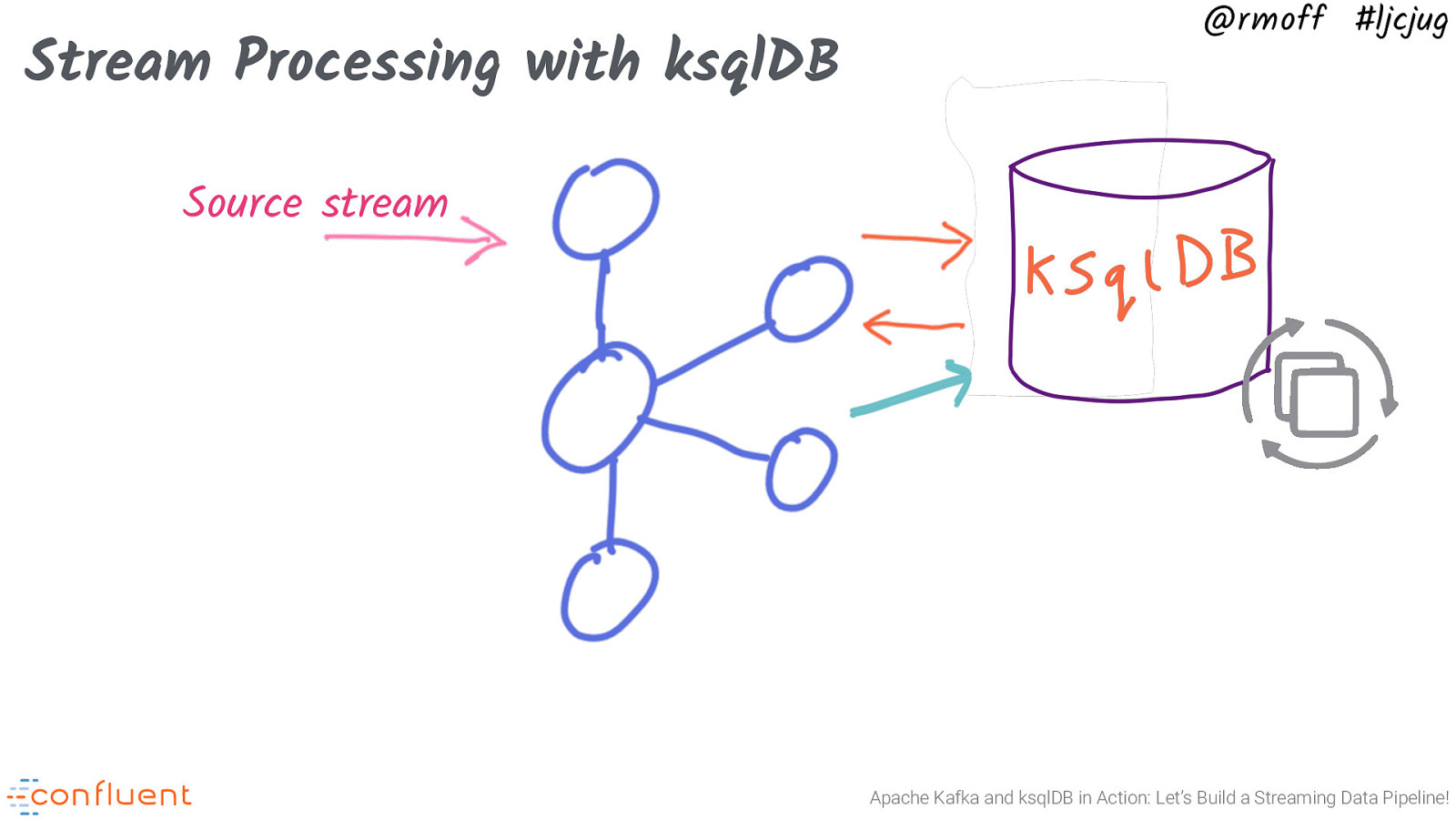

Stream Processing with ksqlDB

Diagram labels: Source stream.

Slide 45

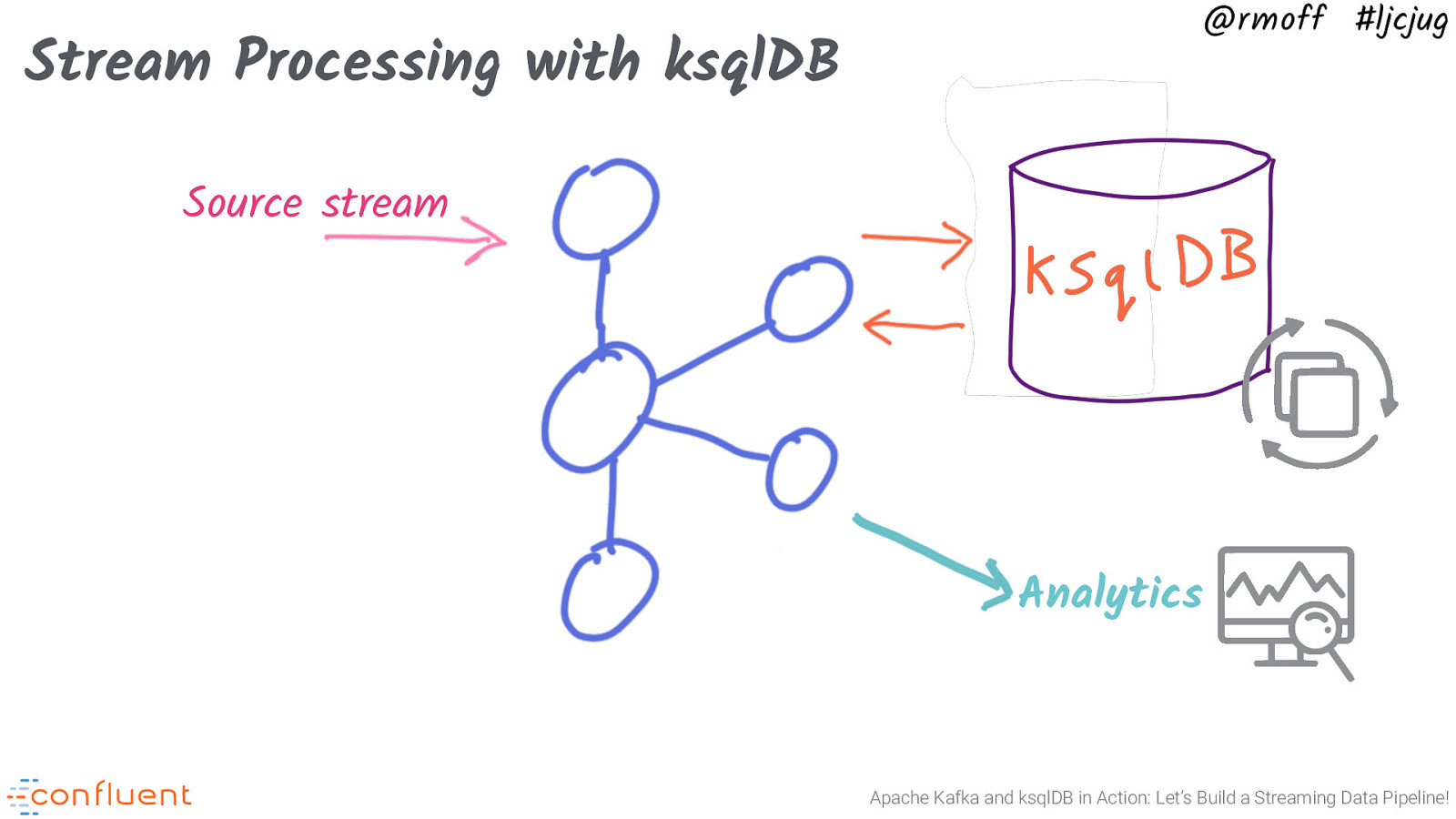

Stream Processing with ksqlDB

Diagram labels: Source stream.

Slide 46

Stream Processing with ksqlDB

Diagram labels: Source stream; Analytics.

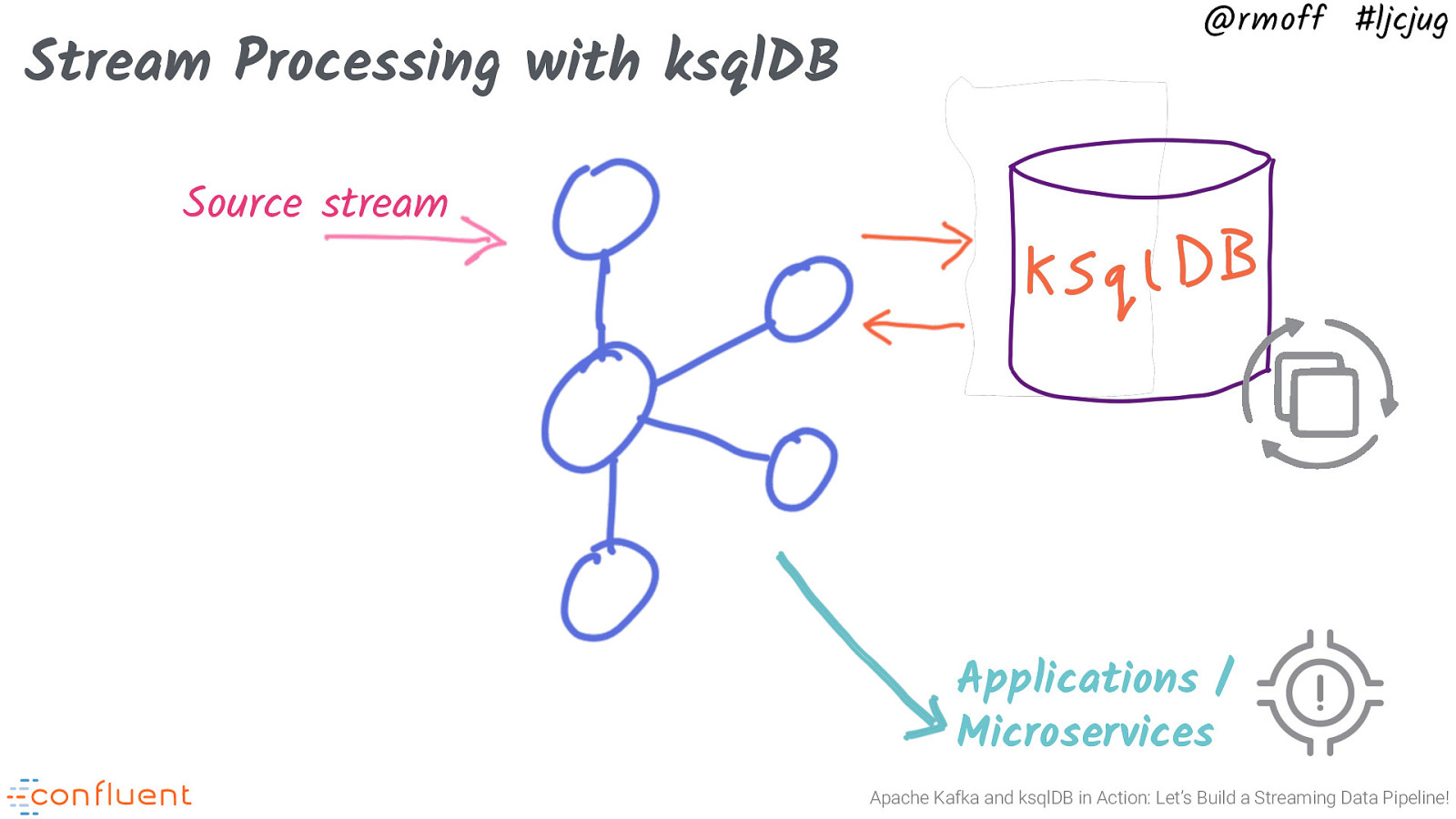

Slide 47

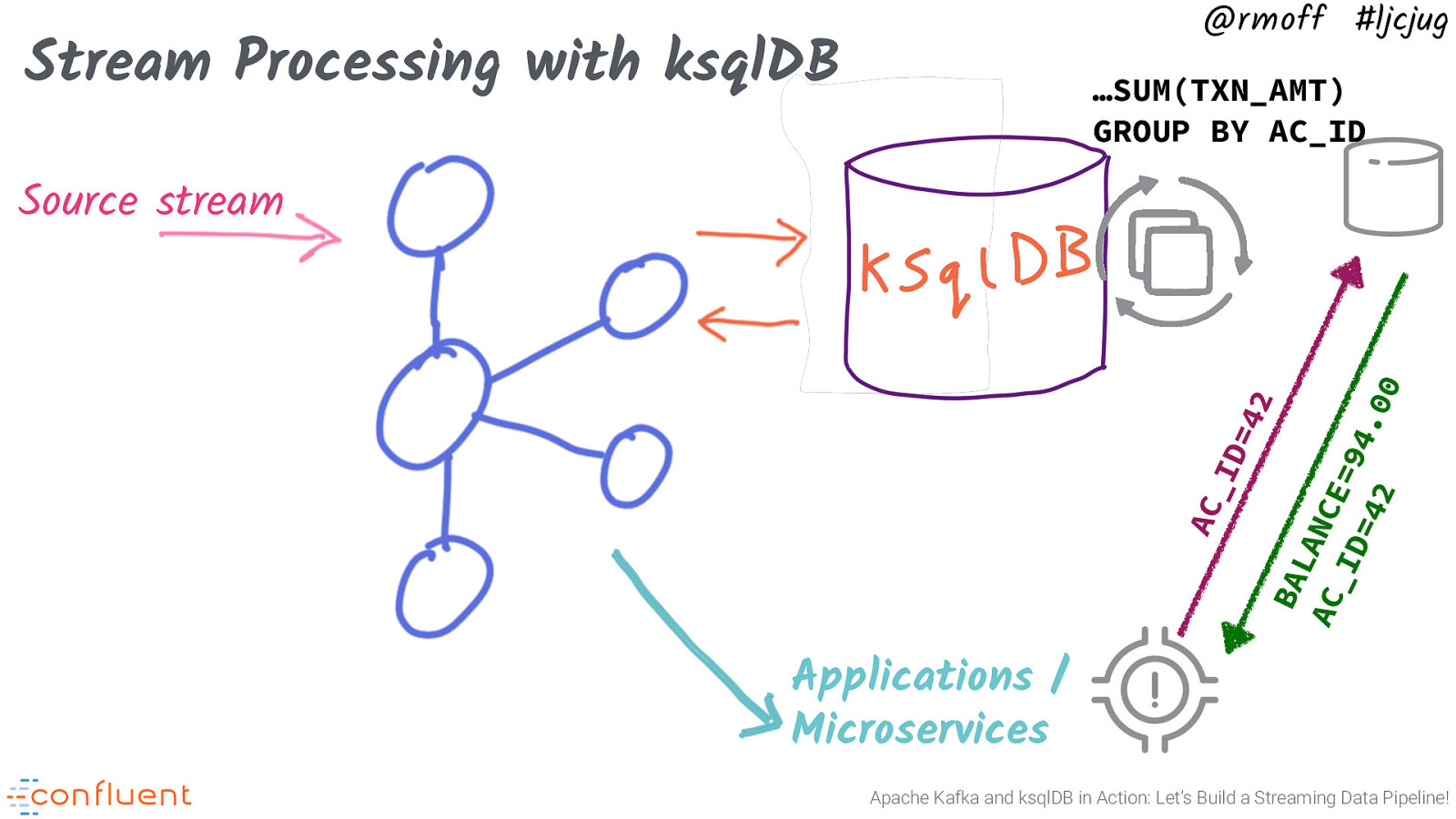

Stream Processing with ksqlDB

Diagram labels: Source stream; Applications / Microservices.

Slide 48

Stream Processing with ksqlDB

…SUM(TXN_AMT) GROUP BY AC_ID

Diagram labels: Source stream; AC_ID=42; AC_ID=42 BALANCE=94.00; Applications / Microservices.

Slide 49

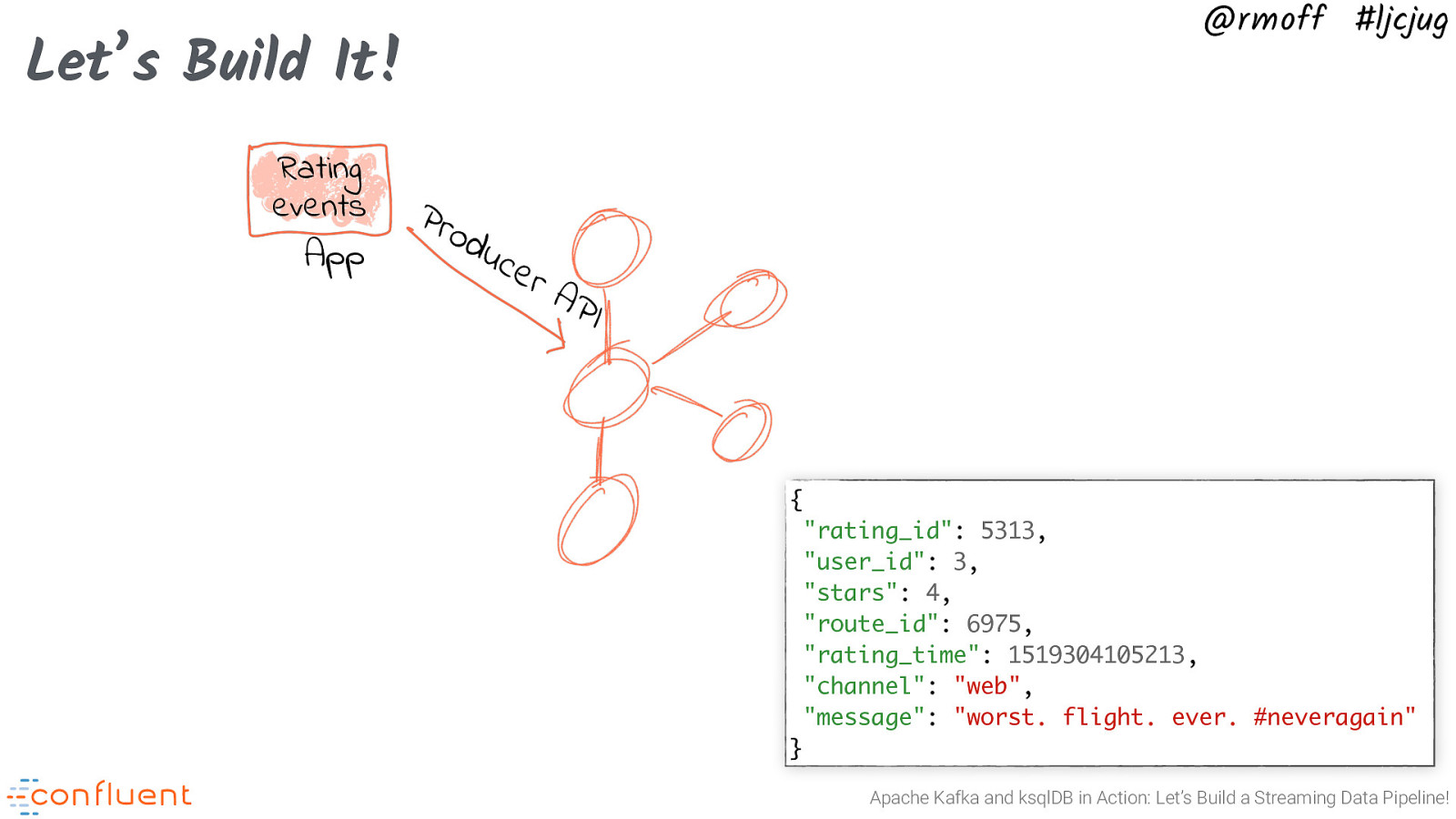

Let’s Build It!

Diagram labels: Rating events; App; Producer API.

    {
      "rating_id": 5313,
      "user_id": 3,
      "stars": 4,
      "route_id": 6975,
      "rating_time": 1519304105213,
      "channel": "web",
      "message": "worst. flight. ever. #neveragain"
    }

Slide 50

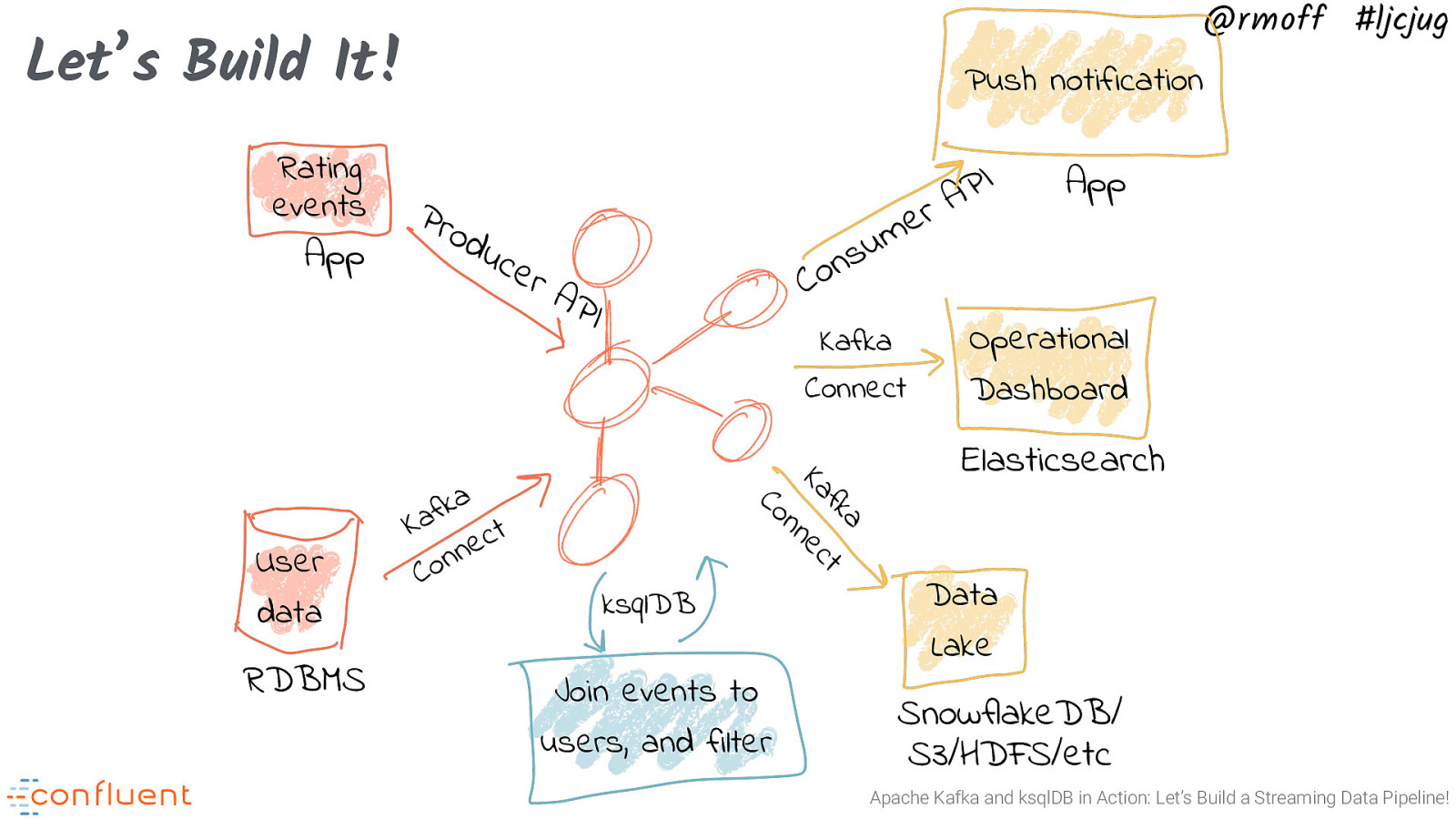

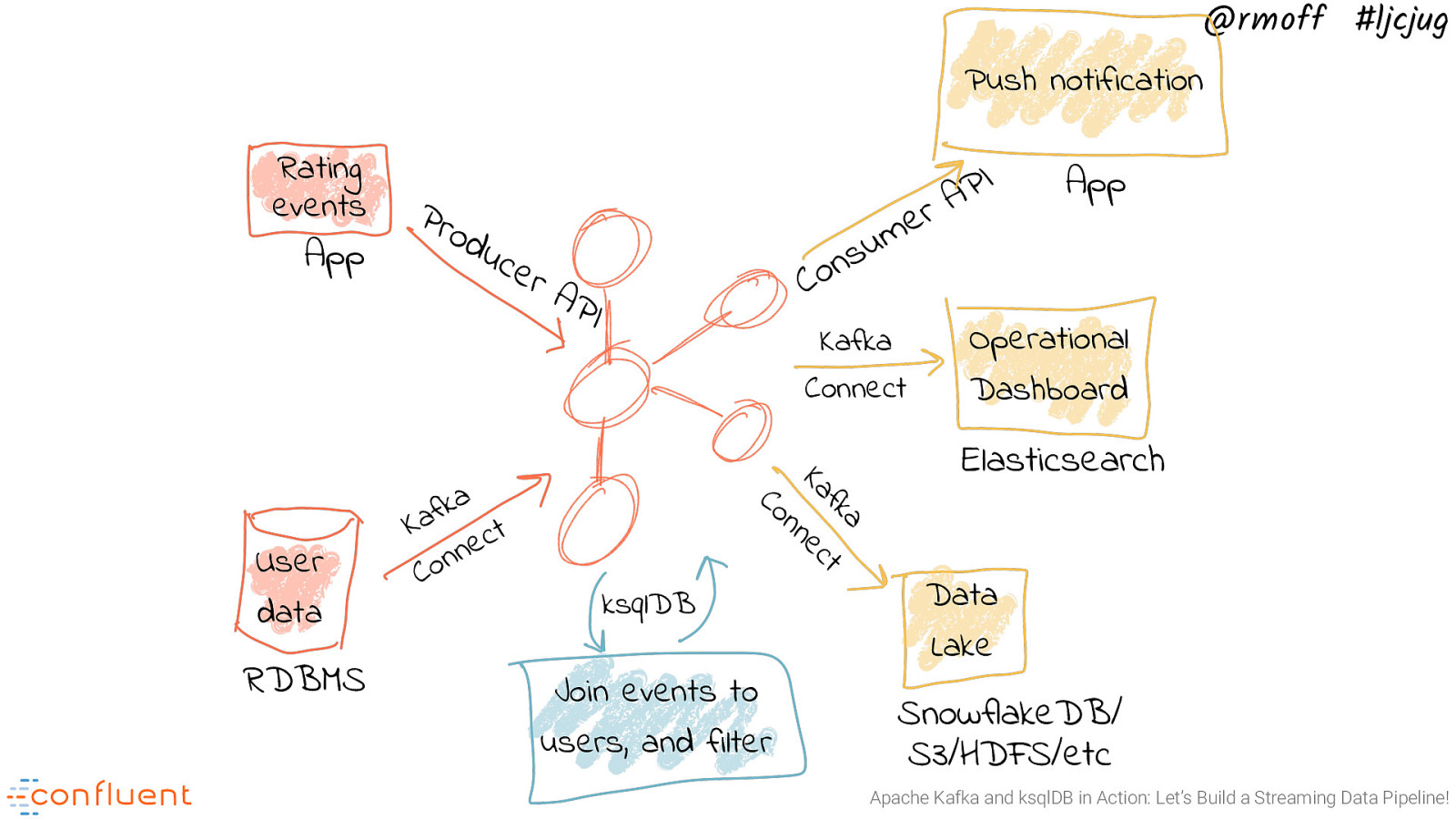

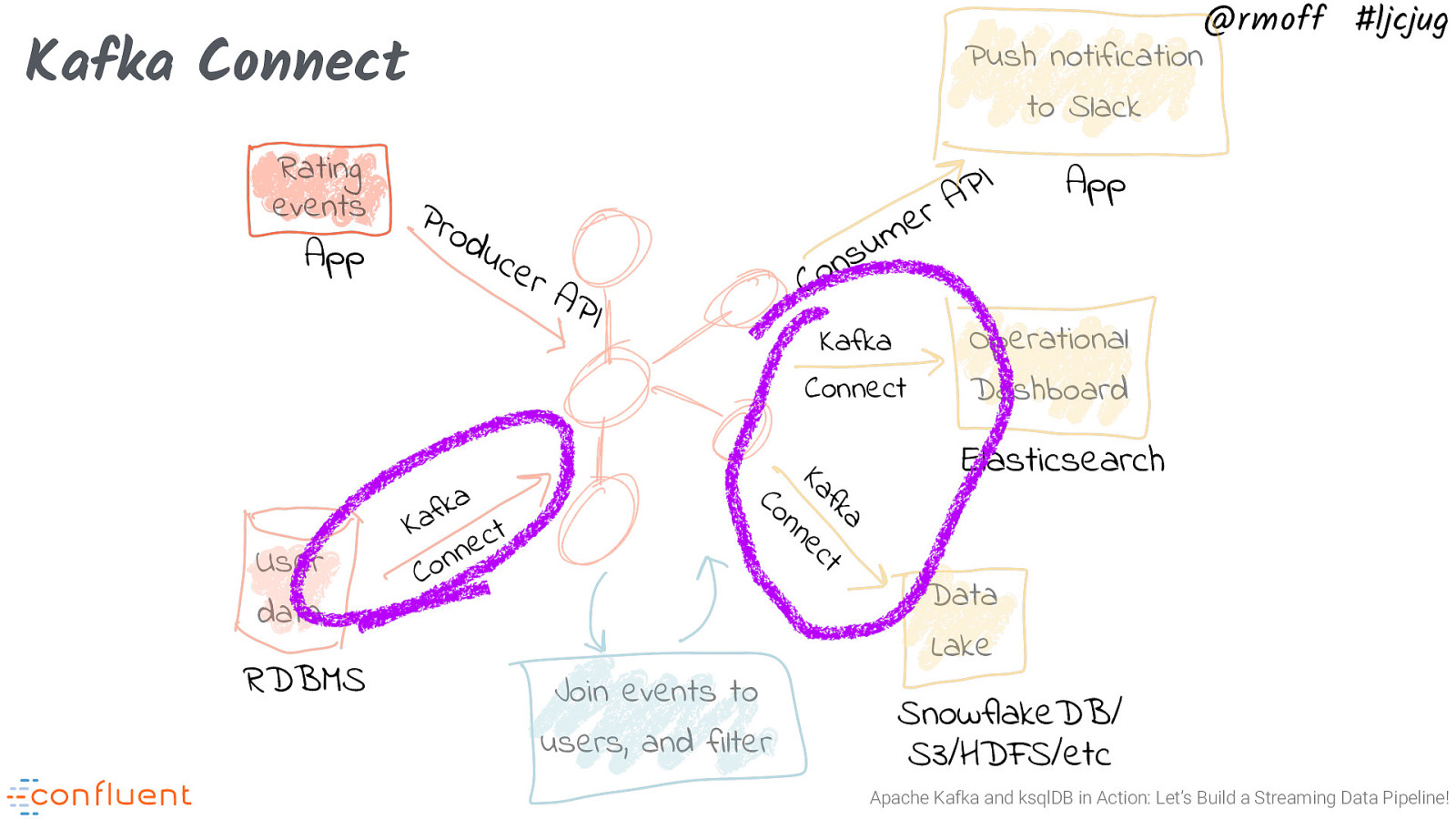

Let’s Build It!

Diagram labels: Rating events; App; Producer API; RDBMS (User data); Kafka Connect; ksqlDB (Join events to users, and filter); Consumer API; App (Push notification); Kafka Connect; Operational Dashboard (Elasticsearch); Kafka Connect; Data Lake (SnowflakeDB/S3/HDFS/etc).

Slide 51

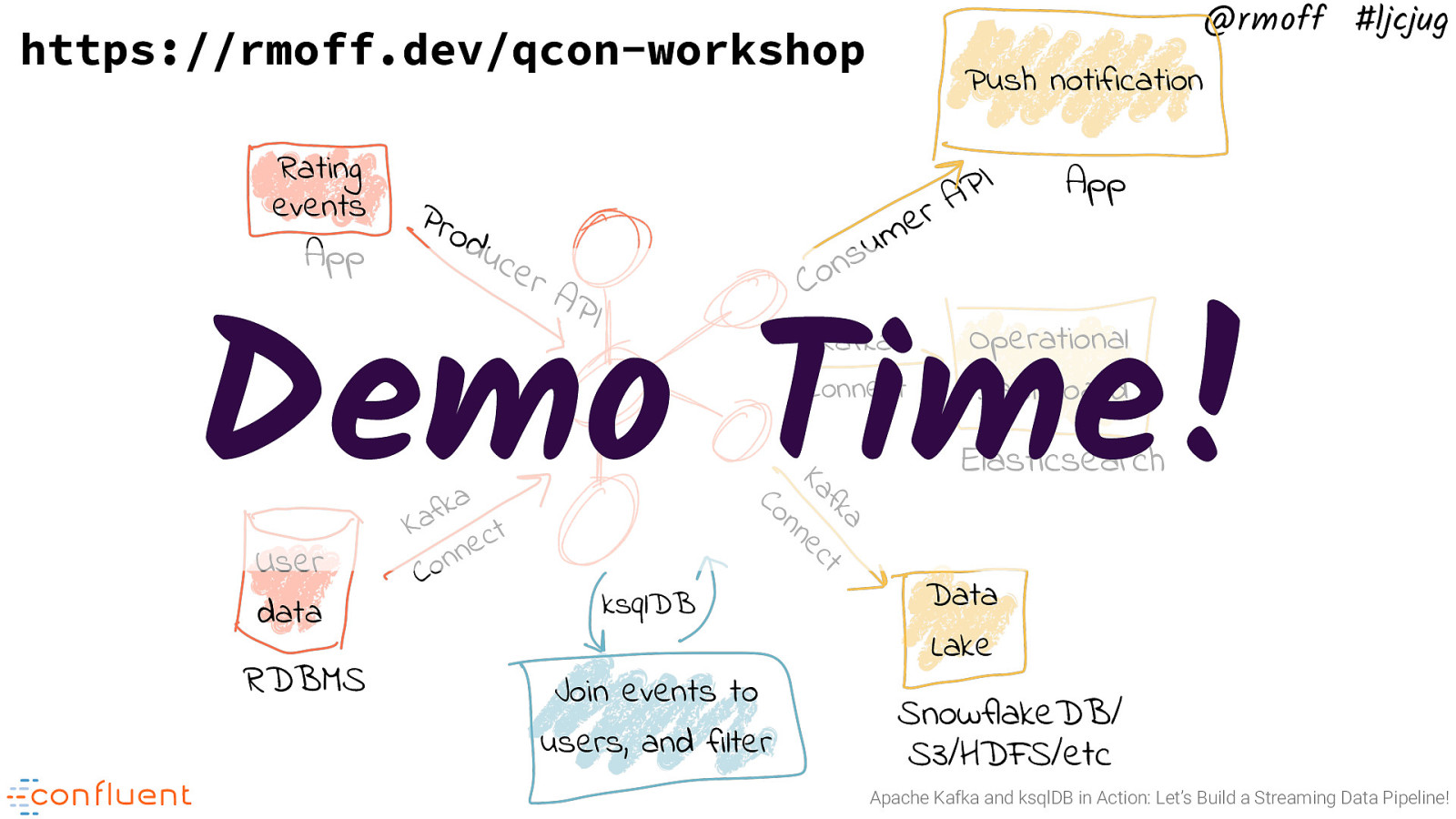

Demo Time!

https://rmoff.dev/qcon-workshop

Diagram labels: Rating events; App; Producer API; RDBMS (User data); Kafka Connect; ksqlDB (Join events to users, and filter); Consumer API; App (Push notification); Kafka Connect; Operational Dashboard (Elasticsearch); Kafka Connect; Data Lake (SnowflakeDB/S3/HDFS/etc).

Slide 52

Diagram labels: Rating events; App; Producer API; RDBMS (User data); Kafka Connect; ksqlDB (Join events to users, and filter); Consumer API; App (Push notification); Kafka Connect; Operational Dashboard (Elasticsearch); Kafka Connect; Data Lake (SnowflakeDB/S3/HDFS/etc).

Slide 53

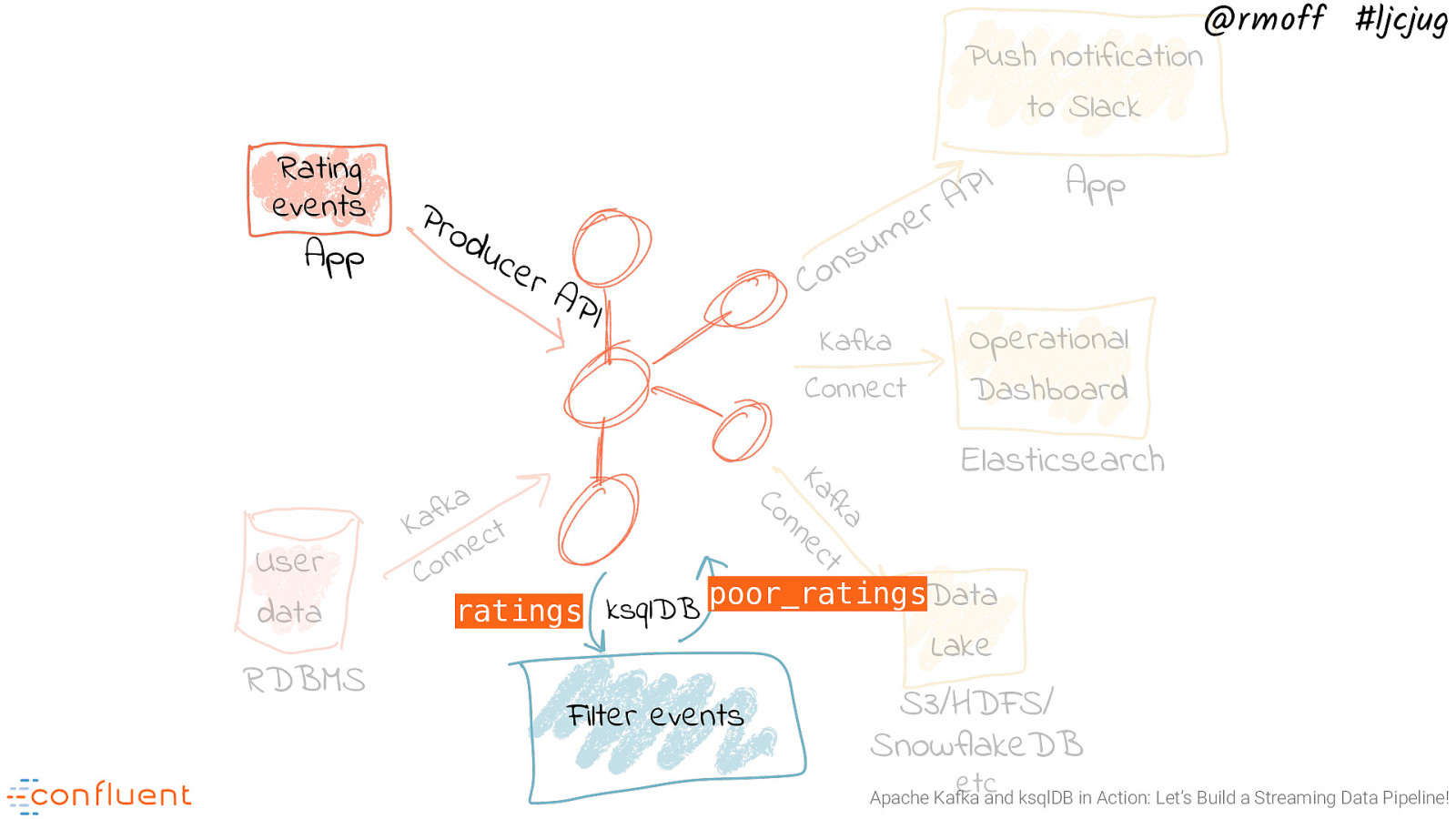

Diagram labels: Rating events; App; Producer API; ratings; ksqlDB (Filter events); poor_ratings; Consumer API; App (Push notification to Slack); RDBMS (User data); Kafka Connect; Operational Dashboard (Elasticsearch); Kafka Connect; Data Lake (S3/HDFS/SnowflakeDB etc); Kafka Connect.

Slide 54

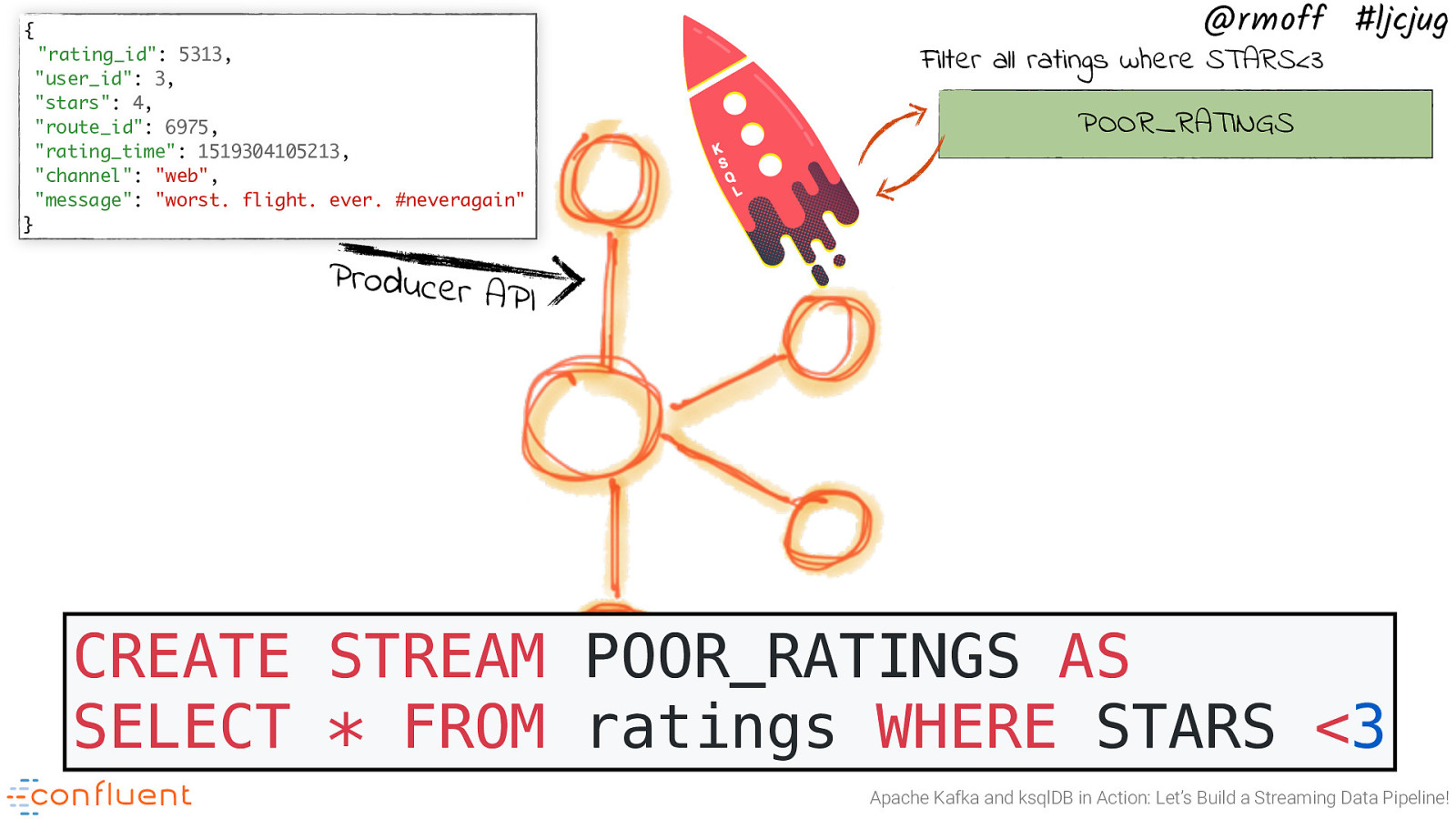

Filter all ratings where STARS<3

Diagram labels: Producer API; POOR_RATINGS.

    {
      "rating_id": 5313,
      "user_id": 3,
      "stars": 4,
      "route_id": 6975,
      "rating_time": 1519304105213,
      "channel": "web",
      "message": "worst. flight. ever. #neveragain"
    }

    CREATE STREAM POOR_RATINGS AS
      SELECT * FROM ratings WHERE STARS < 3
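For readers new to streaming filters, the query on this slide behaves like a predicate applied to each event as it arrives. The Python sketch below mimics that with a generator; it is an analogy, not how ksqlDB executes the statement, and the second sample rating is invented for illustration.

```python
def poor_ratings(ratings):
    """Yield only ratings with fewer than 3 stars, one event at a time."""
    for rating in ratings:
        if rating["stars"] < 3:
            yield rating


incoming = [
    # Sample event from the slide.
    {"rating_id": 5313, "user_id": 3, "stars": 4, "channel": "web"},
    # Hypothetical low rating, added so the filter has something to keep.
    {"rating_id": 5314, "user_id": 7, "stars": 1, "channel": "ios"},
]

filtered = list(poor_ratings(incoming))
```

The sample event from the slide has 4 stars, so it does not pass the filter; only the hypothetical 1-star rating does.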

Slide 55

Diagram labels: Rating events; App; Producer API; RDBMS (User data); Kafka Connect; Join events to users, and filter; Consumer API; App; Kafka Connect (Push notification to Slack); Kafka Connect; Operational Dashboard (Elasticsearch); Kafka Connect; Data Lake (SnowflakeDB/S3/HDFS/etc).

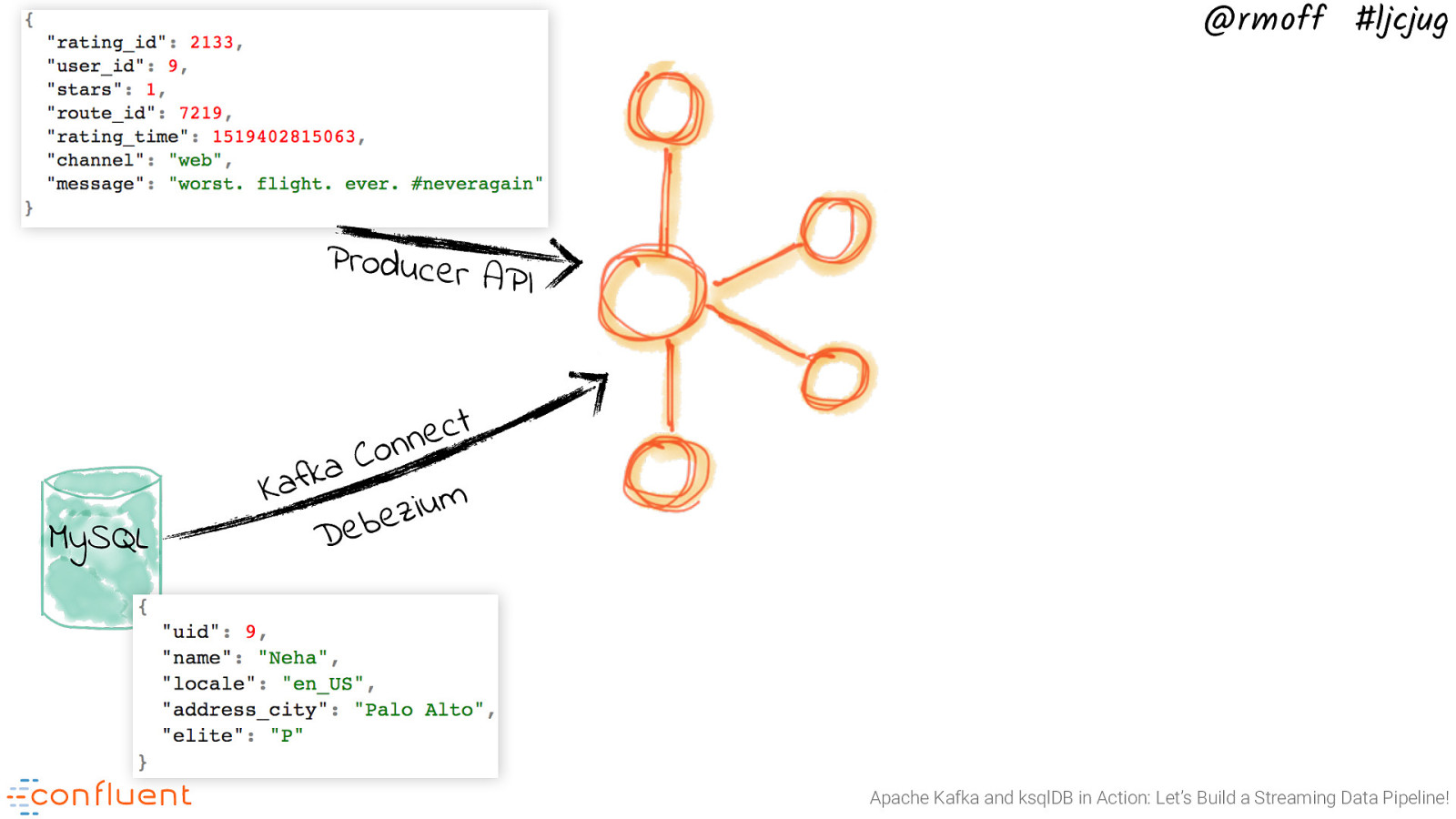

Slide 56

Diagram labels: Producer API; MySQL; Kafka Connect; Debezium.

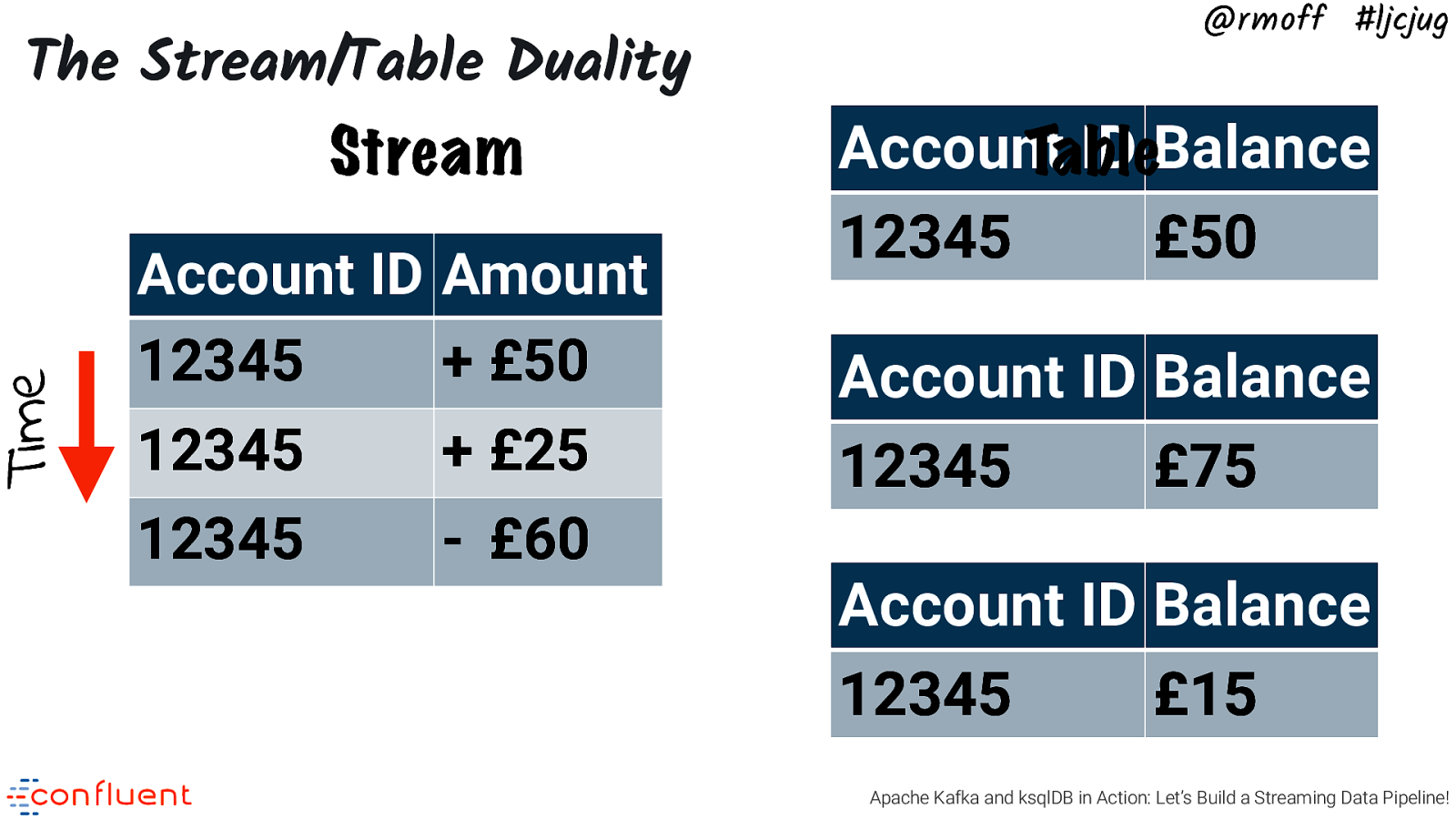

Slide 57

The Stream/Table Duality

| Time | Stream: Account ID | Stream: Amount | Table: Account ID | Table: Balance |
|------|--------------------|----------------|-------------------|----------------|
| 1    | 12345              | + £50          | 12345             | £50            |
| 2    | 12345              | + £25          | 12345             | £75            |
| 3    | 12345              | - £60          | 12345             | £15            |
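The duality on this slide can be worked through as a fold over the stream: each event updates the current balance, and the table is the latest state per key. The sketch below is illustrative, with a hypothetical `apply_events` helper. It treats the second event as +£25, since that is what takes the balance from £50 to £75.

```python
def apply_events(events):
    """Fold a stream of (account_id, amount) events into successive table states."""
    table = {}
    snapshots = []
    for account_id, amount in events:
        table[account_id] = table.get(account_id, 0) + amount
        snapshots.append(dict(table))  # copy: the table as of this event
    return snapshots


stream = [("12345", 50), ("12345", 25), ("12345", -60)]
snapshots = apply_events(stream)
```

Replaying the same stream from the start always rebuilds the same table, which is why the log can be treated as the source of truth.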

Slide 58

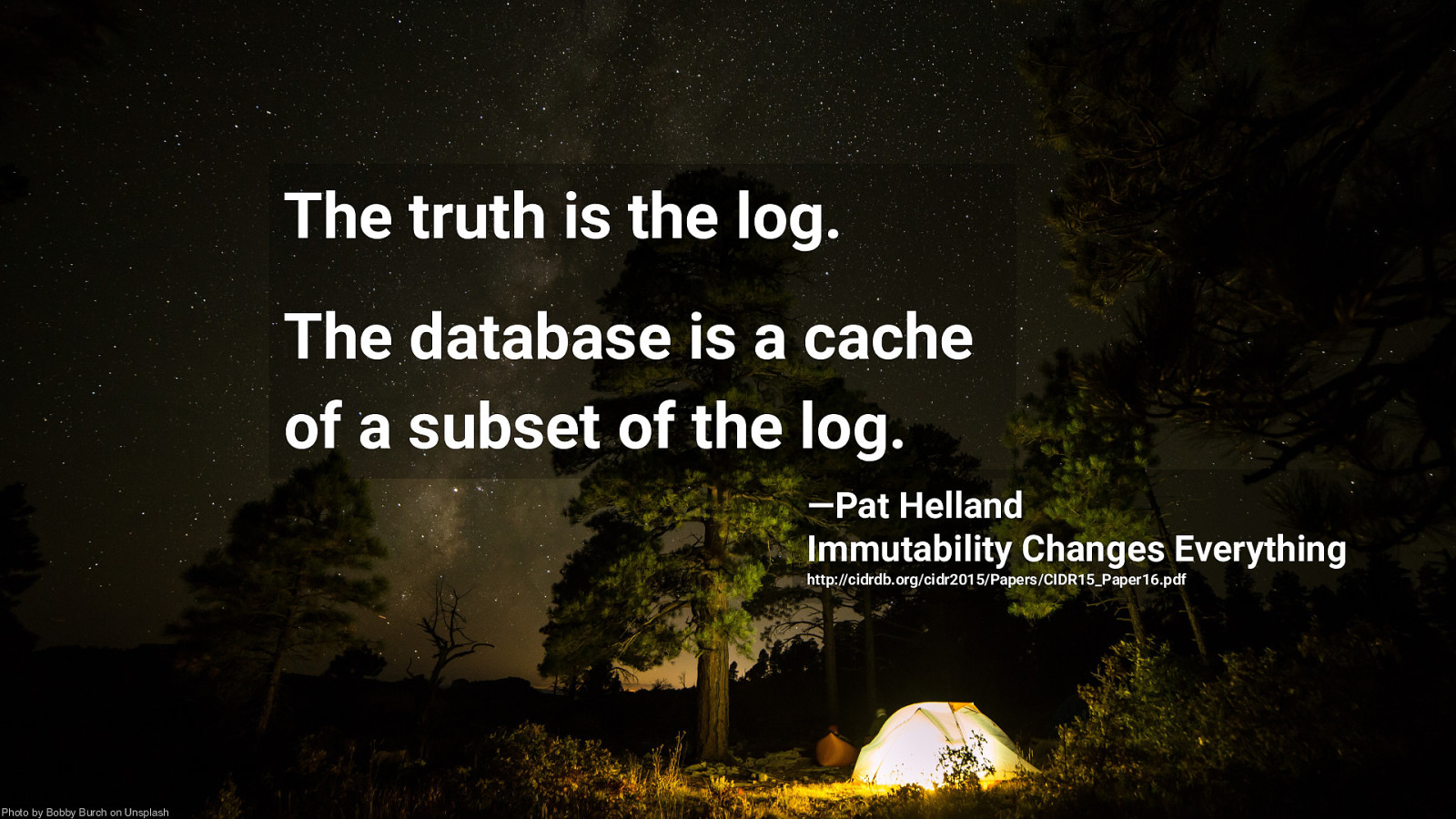

“The truth is the log. The database is a cache of a subset of the log.”

Pat Helland, Immutability Changes Everything
http://cidrdb.org/cidr2015/Papers/CIDR15_Paper16.pdf

Photo by Bobby Burch on Unsplash

Slide 59

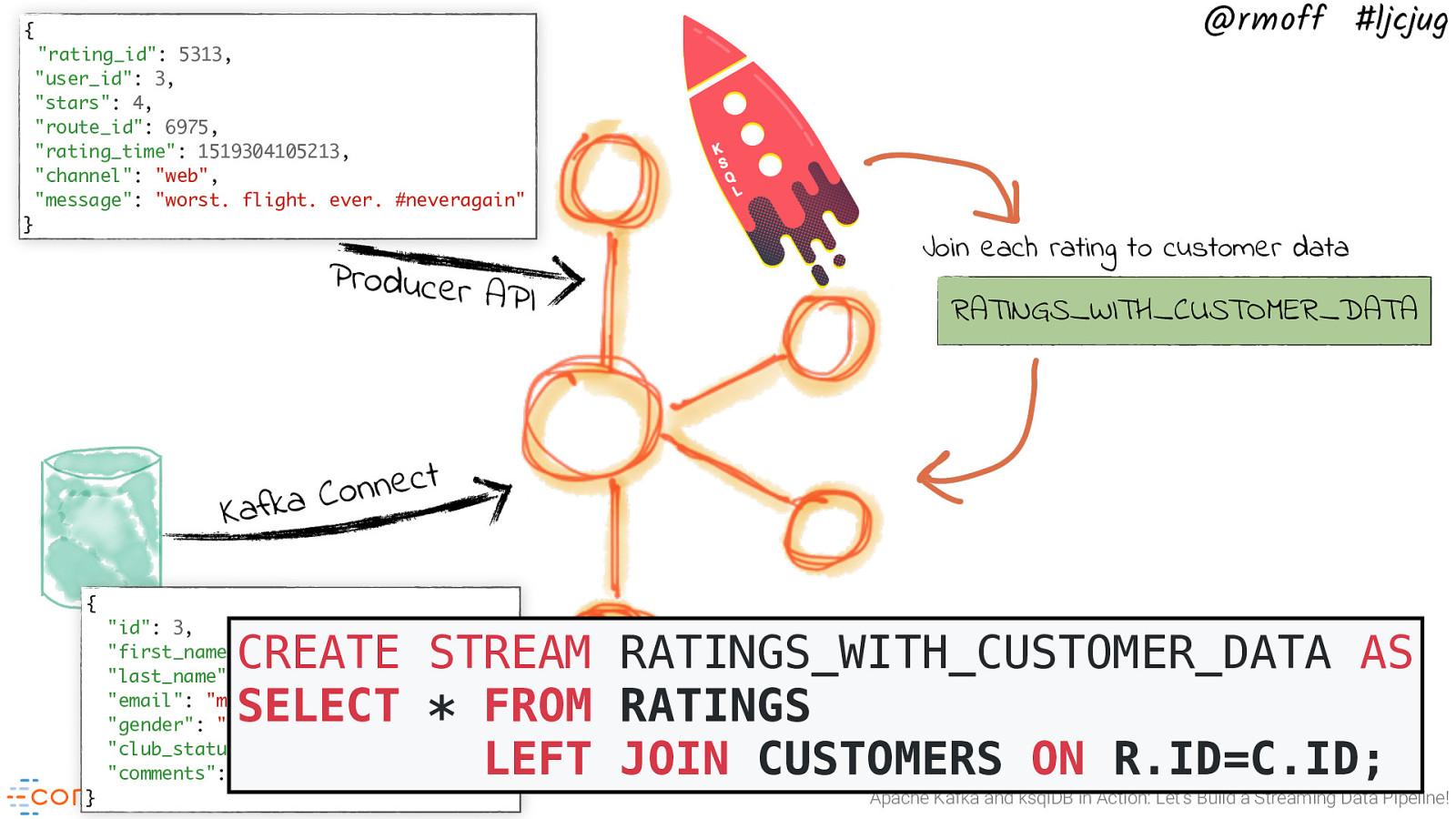

Join each rating to customer data

Diagram labels: Producer API; Kafka Connect; RATINGS_WITH_CUSTOMER_DATA.

    {
      "rating_id": 5313,
      "user_id": 3,
      "stars": 4,
      "route_id": 6975,
      "rating_time": 1519304105213,
      "channel": "web",
      "message": "worst. flight. ever. #neveragain"
    }

    {
      "id": 3,
      "first_name": "Merilyn",
      "last_name": "Doughartie",
      "email": "[email protected]",
      "gender": "Female",
      "club_status": "platinum",
      "comments": "none"
    }

    CREATE STREAM RATINGS_WITH_CUSTOMER_DATA AS
      SELECT *
        FROM RATINGS R
             LEFT JOIN CUSTOMERS C
             ON R.USER_ID = C.ID;
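Conceptually, this join looks up each rating's user in the current customer table and attaches the matching fields. The sketch below imitates that with a dictionary lookup. The `enrich` helper and the choice of copied fields are assumptions for illustration; a left join keeps the rating even when no customer matches.

```python
CUSTOMER_FIELDS = ("first_name", "last_name", "club_status")


def enrich(rating, customers):
    """Left-join one rating event to the customer table on user_id = id."""
    customer = customers.get(rating["user_id"], {})
    # Missing customers produce None values rather than dropping the rating.
    extra = {name: customer.get(name) for name in CUSTOMER_FIELDS}
    return {**rating, **extra}


customers = {
    3: {"id": 3, "first_name": "Merilyn", "last_name": "Doughartie",
        "club_status": "platinum"},
}

known = enrich({"rating_id": 5313, "user_id": 3, "stars": 4}, customers)
unknown = enrich({"rating_id": 5314, "user_id": 99, "stars": 2}, customers)
```

The rating for user 99 is hypothetical and is there only to show the unmatched case.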

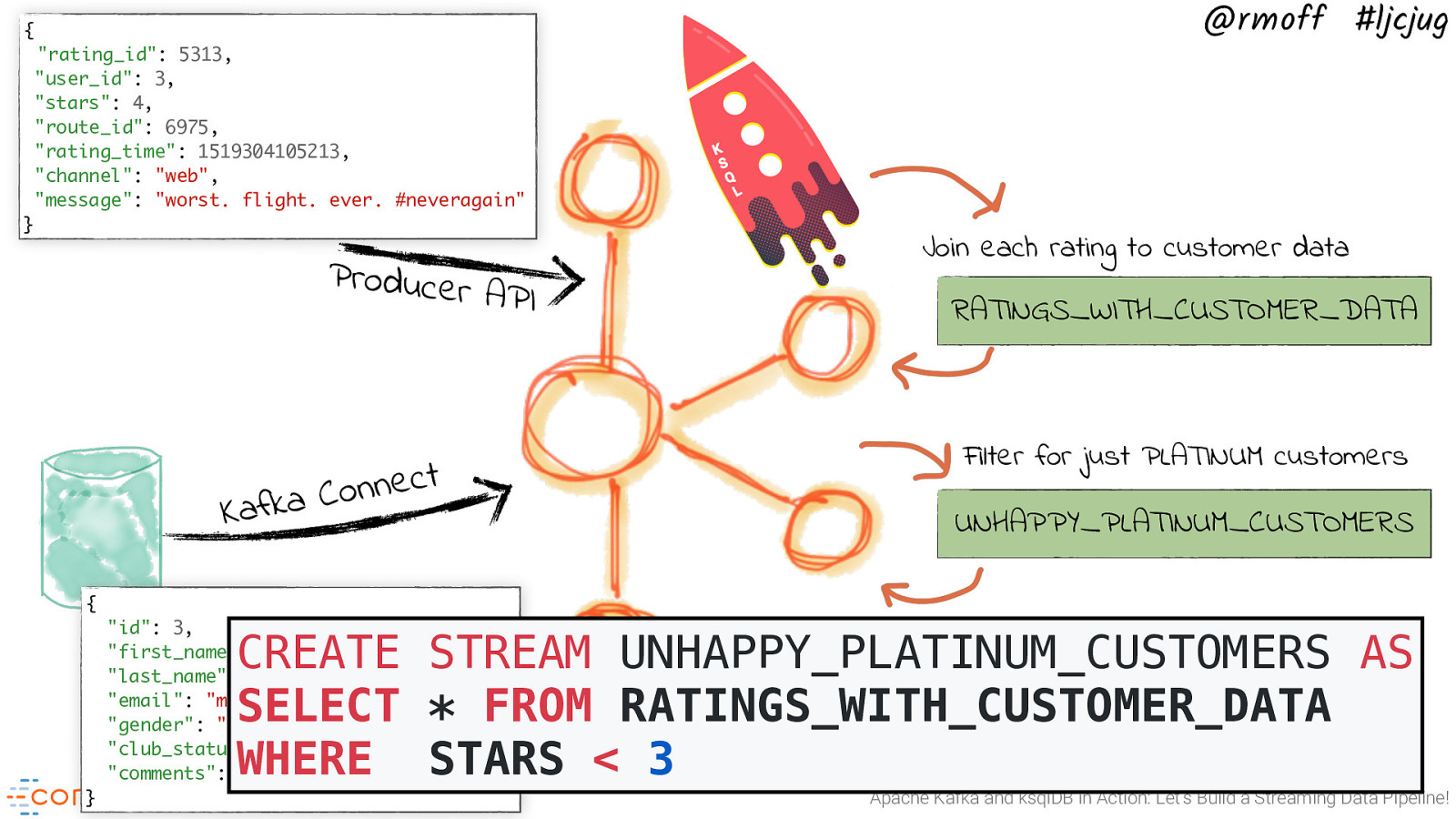

Slide 60

Join each rating to customer data: RATINGS_WITH_CUSTOMER_DATA

Filter for just PLATINUM customers: UNHAPPY_PLATINUM_CUSTOMERS

Diagram labels: Producer API; Kafka Connect.

    {
      "rating_id": 5313,
      "user_id": 3,
      "stars": 4,
      "route_id": 6975,
      "rating_time": 1519304105213,
      "channel": "web",
      "message": "worst. flight. ever. #neveragain"
    }

    {
      "id": 3,
      "first_name": "Merilyn",
      "last_name": "Doughartie",
      "email": "[email protected]",
      "gender": "Female",
      "club_status": "platinum",
      "comments": "none"
    }

    CREATE STREAM UNHAPPY_PLATINUM_CUSTOMERS AS
      SELECT *
        FROM RATINGS_WITH_CUSTOMER_DATA
       WHERE STARS < 3
         AND CLUB_STATUS = 'platinum';

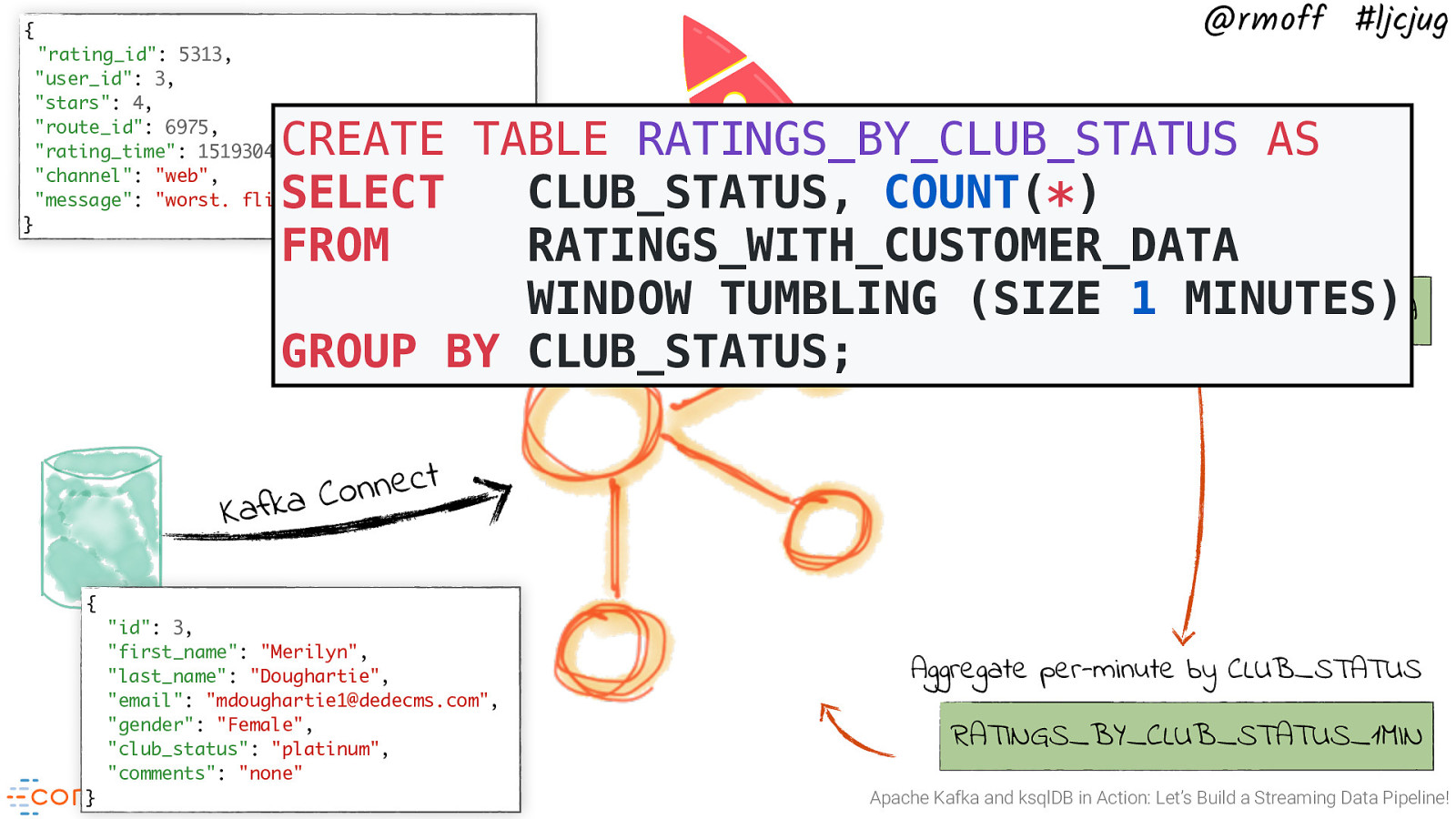

Slide 61

Join each rating to customer data: RATINGS_WITH_CUSTOMER_DATA

Aggregate per-minute by CLUB_STATUS: RATINGS_BY_CLUB_STATUS_1MIN

Diagram labels: Producer API; Kafka Connect.

    {
      "rating_id": 5313,
      "user_id": 3,
      "stars": 4,
      "route_id": 6975,
      "rating_time": 1519304105213,
      "channel": "web",
      "message": "worst. flight. ever. #neveragain"
    }

    {
      "id": 3,
      "first_name": "Merilyn",
      "last_name": "Doughartie",
      "email": "[email protected]",
      "gender": "Female",
      "club_status": "platinum",
      "comments": "none"
    }

    CREATE TABLE RATINGS_BY_CLUB_STATUS AS
      SELECT CLUB_STATUS, COUNT(*)
        FROM RATINGS_WITH_CUSTOMER_DATA
             WINDOW TUMBLING (SIZE 1 MINUTES)
       GROUP BY CLUB_STATUS;
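A tumbling window splits time into fixed, non-overlapping buckets, so each event belongs to exactly one window. The sketch below counts events per one-minute window and club status. It assumes `rating_time` is in epoch milliseconds, as in the sample event, and uses small made-up timestamps and a hypothetical "bronze" status to keep the arithmetic easy to follow.

```python
from collections import Counter

WINDOW_MS = 60_000  # one minute, matching SIZE 1 MINUTES


def count_by_window(events):
    """Count events per (window start, club_status) using tumbling windows."""
    counts = Counter()
    for event in events:
        # Round the timestamp down to the start of its one-minute window.
        window_start = event["rating_time"] - event["rating_time"] % WINDOW_MS
        counts[(window_start, event["club_status"])] += 1
    return counts


events = [
    {"rating_time": 5_000, "club_status": "platinum"},
    {"rating_time": 59_999, "club_status": "platinum"},
    {"rating_time": 60_000, "club_status": "platinum"},
    {"rating_time": 61_000, "club_status": "bronze"},
]

counts = count_by_window(events)
```

The first two events fall in the window starting at 0 and the last two in the window starting at 60000, so the platinum count is split across two rows rather than accumulated into one.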

Slide 62

Kafka Connect → Elasticsearch

Slide 63

Want to learn more?

CTAs, not CATs (sorry, not sorry)

Slide 64

Learn Kafka. Start building with Apache Kafka at Confluent Developer. developer.confluent.io

Slide 65

Confluent Community Slack group

cnfl.io/slack

Slide 66

Free Books! https://rmoff.dev/ljcjug

Slide 67

Resources

• CDC Spreadsheet
• Blog: No More Silos: How to Integrate your Databases with Apache Kafka and CDC
• #partner-engineering on Slack for questions
• BD team (#partners / [email protected]) can help with introductions on a given sales op

Slide 68

Related Talks

• The Changing Face of ETL: Event-Driven Architectures for Data Engineers
  • 📖 Slides
  • 📽 Recording
• ATM Fraud detection with Kafka and ksqlDB
  • 📖 Slides
  • 👾 Code
  • 📽 Recording
• Embrace the Anarchy: Apache Kafka’s Role in Modern Data Architectures
  • 📖 Slides
  • 📽 Recording
• Apache Kafka and ksqlDB in Action: Let’s Build a Streaming Data Pipeline!
  • 📖 Slides
  • 👾 Code
  • 📽 Recording
• No More Silos: Integrating Databases and Apache Kafka
  • 📖 Slides
  • 👾 Code (MySQL)
  • 👾 Code (Oracle)
  • 📽 Recording