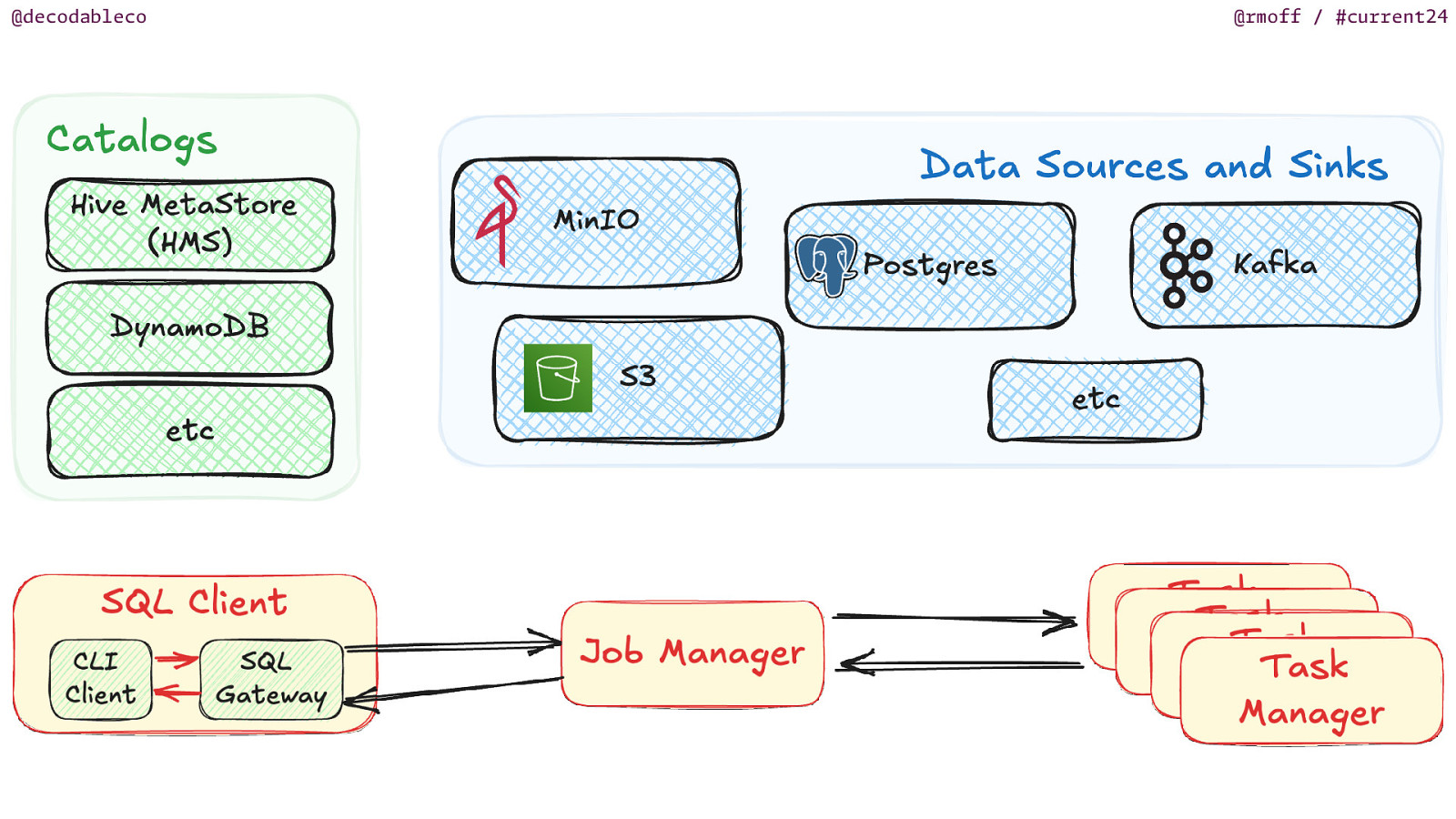

The Joy of JARs and Other Flink SQL Troubleshooting Tales

Robin Moffatt, Principal DevEx Engineer @ Decodable

@rmoff / 18 Sep 2024 / #current24

Slide 1

Slide 2

@decodableco @rmoff / #current24 @rmoff

Slide 3


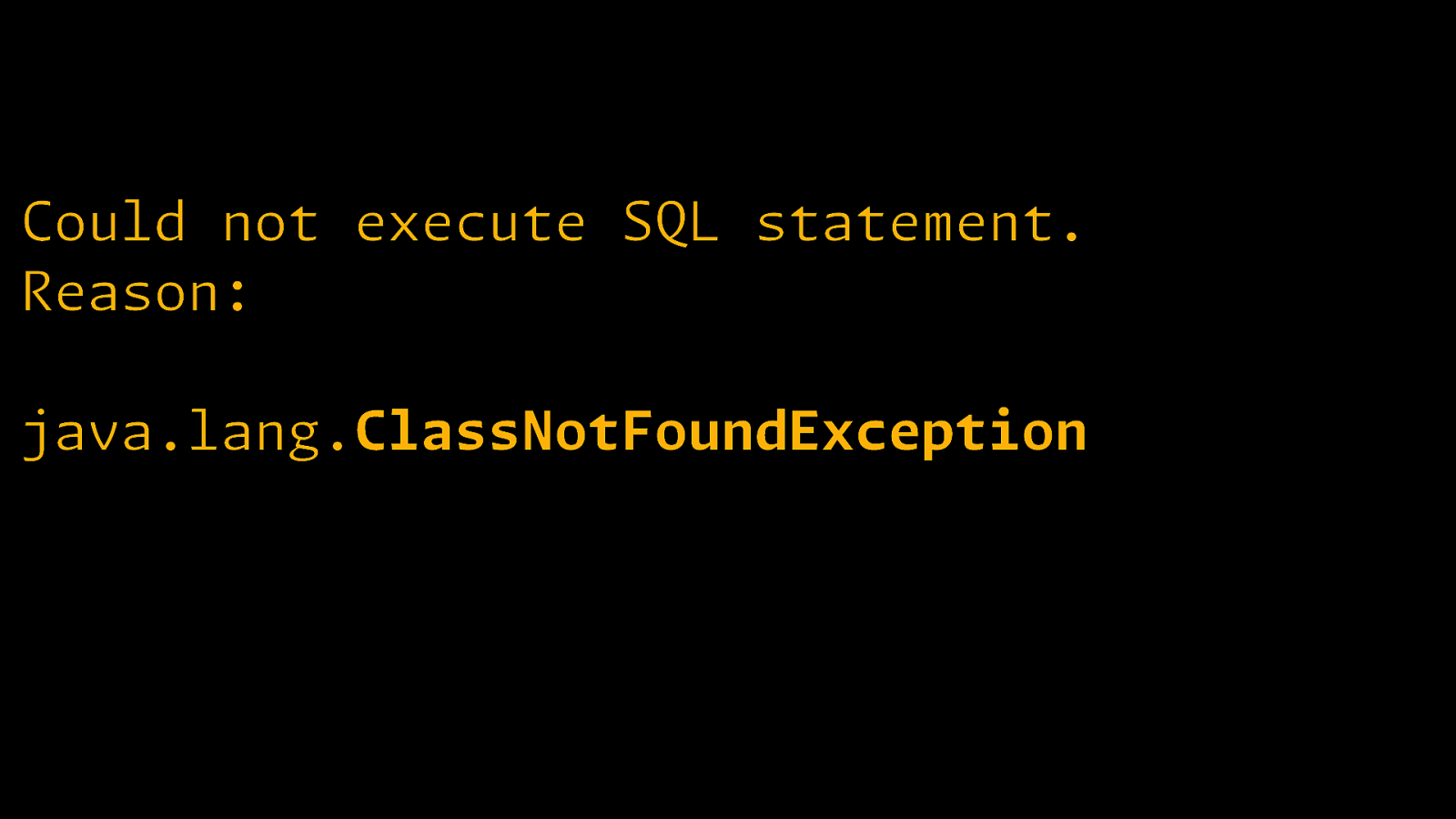

Slide 4

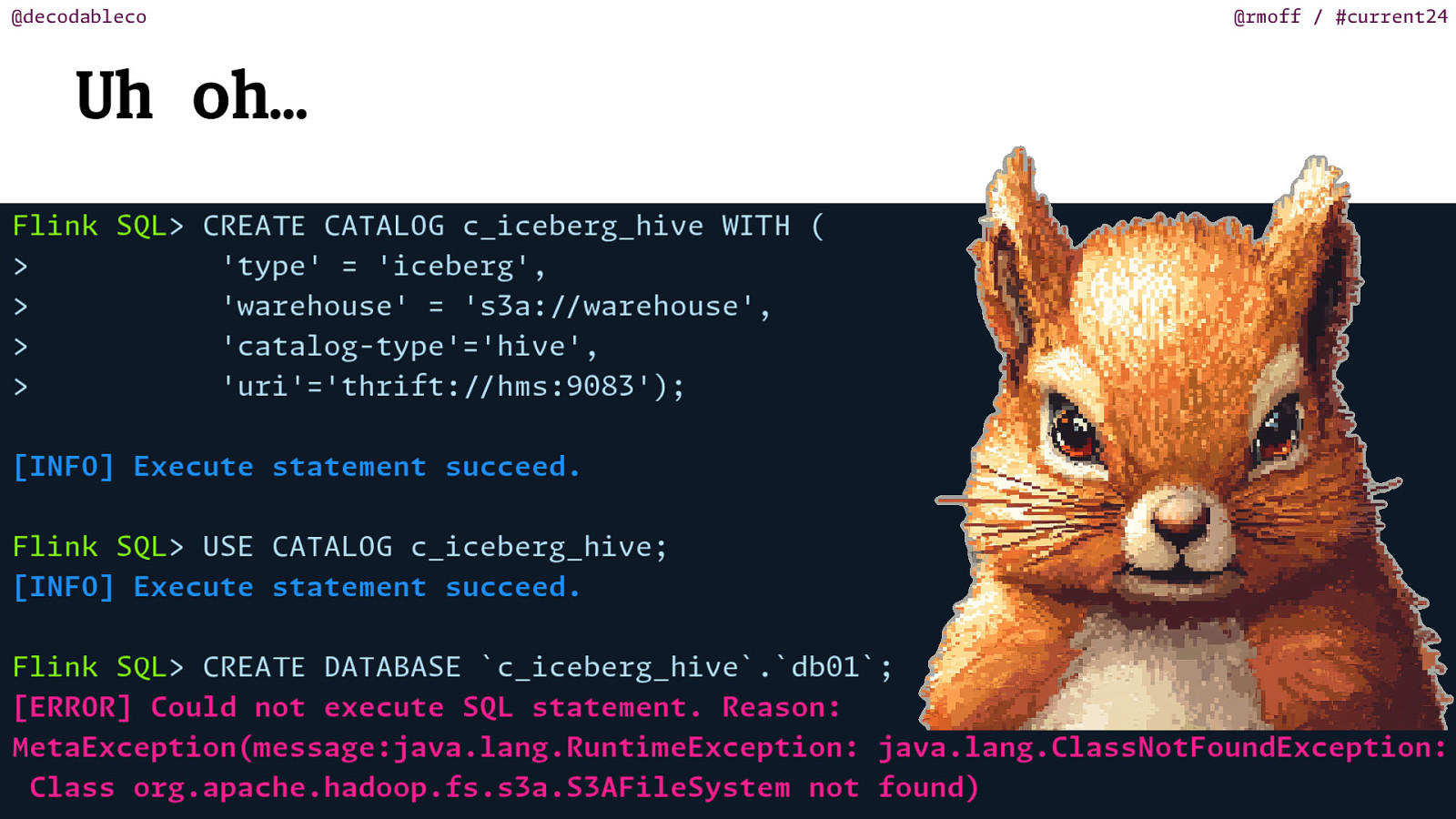

Could not execute SQL statement. Reason: java.lang.ClassNotFoundException

Slide 5

org.apache.flink.core.fs.UnsupportedFileSystemSchemeException: Could not find a file system implementation for scheme 's3'

Slide 6

Could not find any factory for identifier 'hive' that implements 'org.apache.flink.table.factories.CatalogFactory' in the classpath. 🏭

Slide 7


Slide 8

Troubleshooting

Slide 9

“…now I’ll jiggle things randomly until they unbreak” is not acceptable

Linus Torvalds

https://libquotes.com/linus-torvalds/quote/lbr1k4j

Slide 10

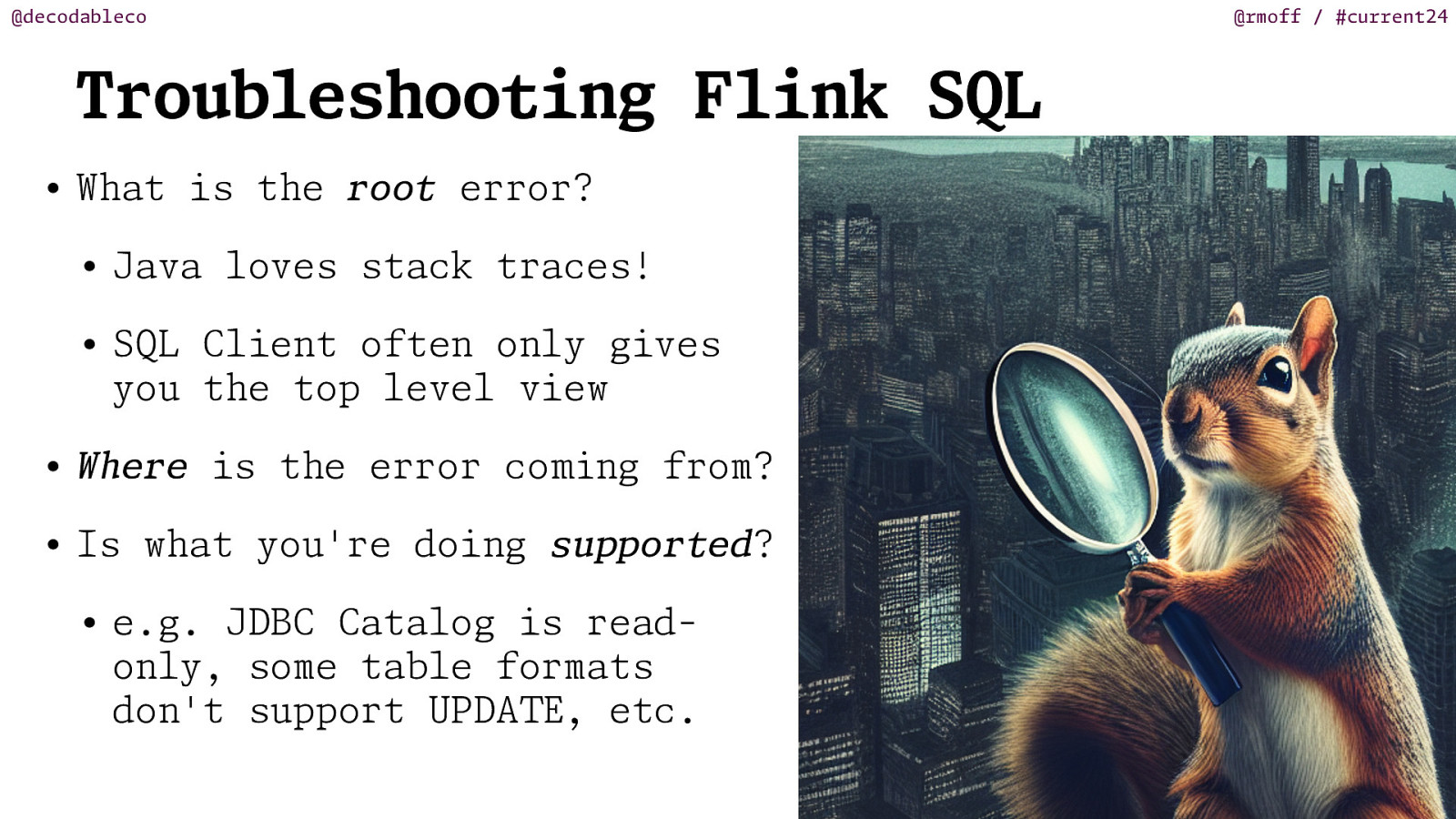

Troubleshooting Flink SQL

• What is the root error?
  • Java loves stack traces!
  • SQL Client often only gives you the top level view
• Where is the error coming from?
• Is what you're doing supported?
  • e.g. JDBC Catalog is read-only, some table formats don't support UPDATE, etc.
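One way to get at the root error is to look for the innermost `Caused by:` line in a log file rather than the top-level message the SQL Client prints. The following is a minimal sketch, not a definitive tool: the function name `root_cause` is hypothetical, and it assumes the log holds a single stack trace of interest (with several errors in one file it reports the cause of the last one).

```shell
# Print the innermost "Caused by:" line found in a log file.
# Assumption: the last "Caused by:" in the file belongs to the error you care about.
root_cause() (
  log_file="$1"
  cause=$(grep 'Caused by:' "$log_file" | tail -n 1)
  if [ -n "$cause" ]; then
    printf '%s\n' "$cause"
  else
    # No cause chain: fall back to the first line that mentions an exception.
    grep 'Exception' "$log_file" | head -n 1
  fi
)
```

Usage would be along the lines of `root_cause log/flink-rmoff-sql-client-asgard08.log`.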

Slide 11

Things to always check

• Versions
  • Flink vs libraries
• Dependencies, e.g.
  • Required JARs
  • Java version

Slide 12

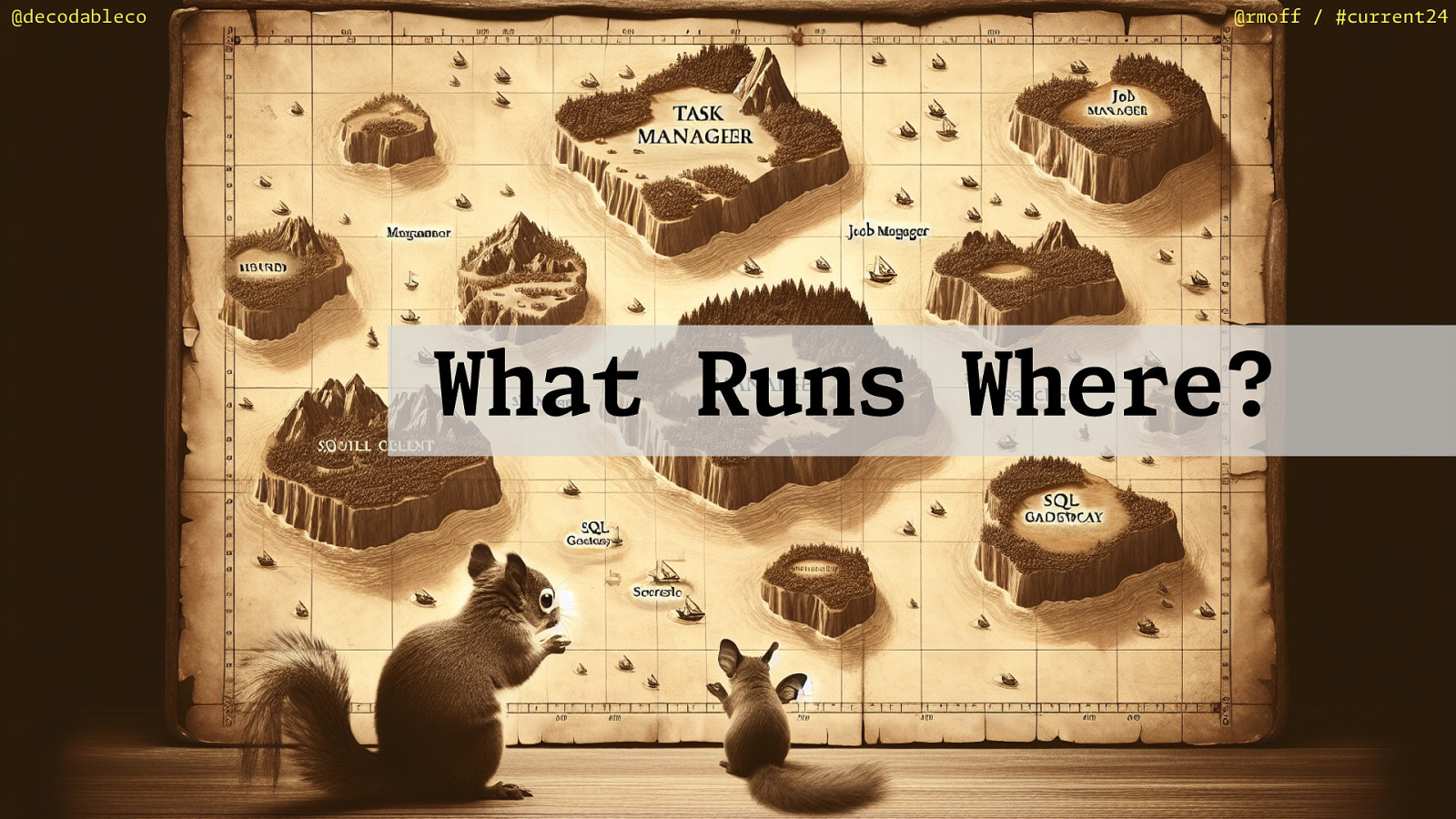

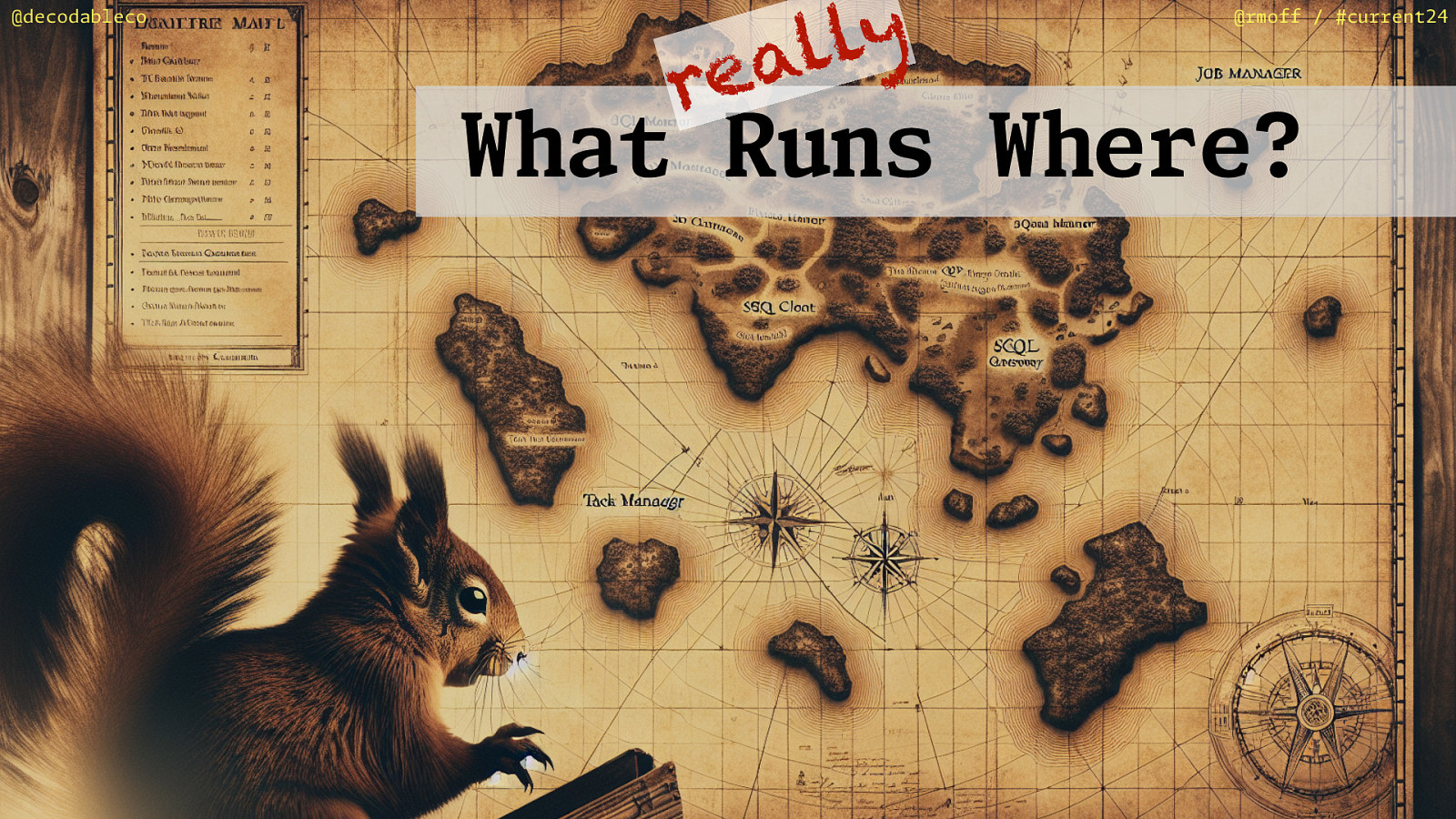

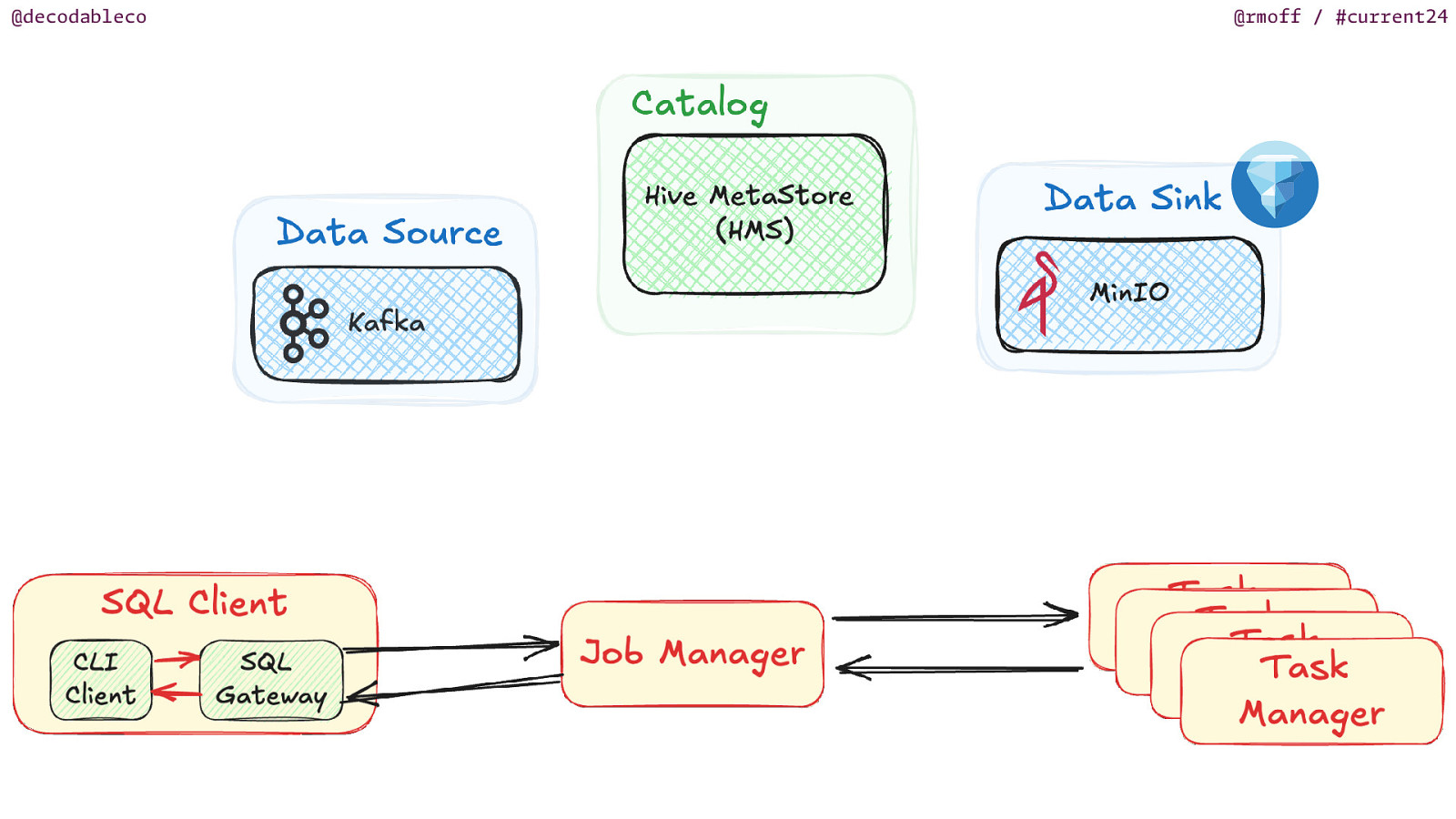

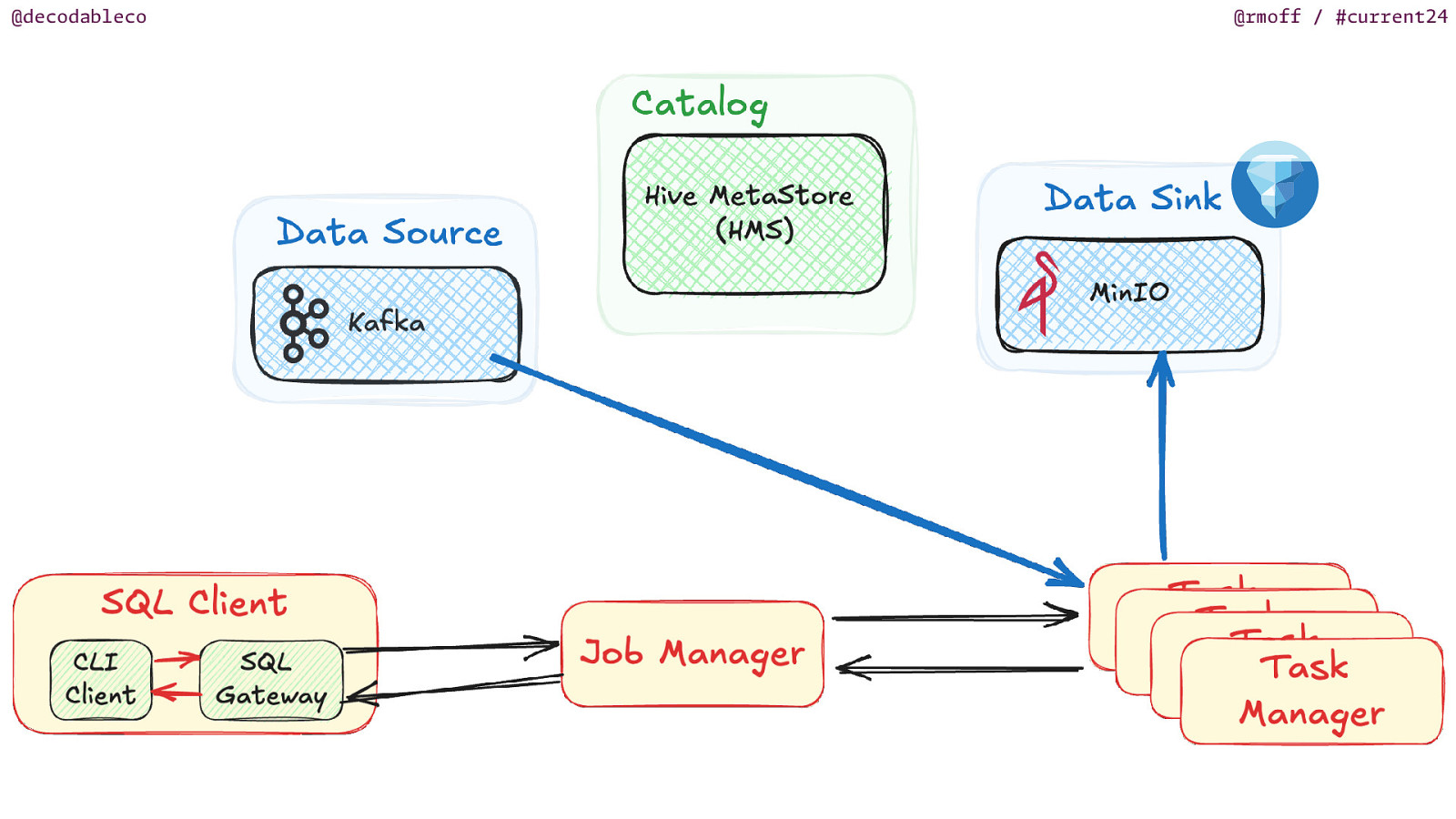

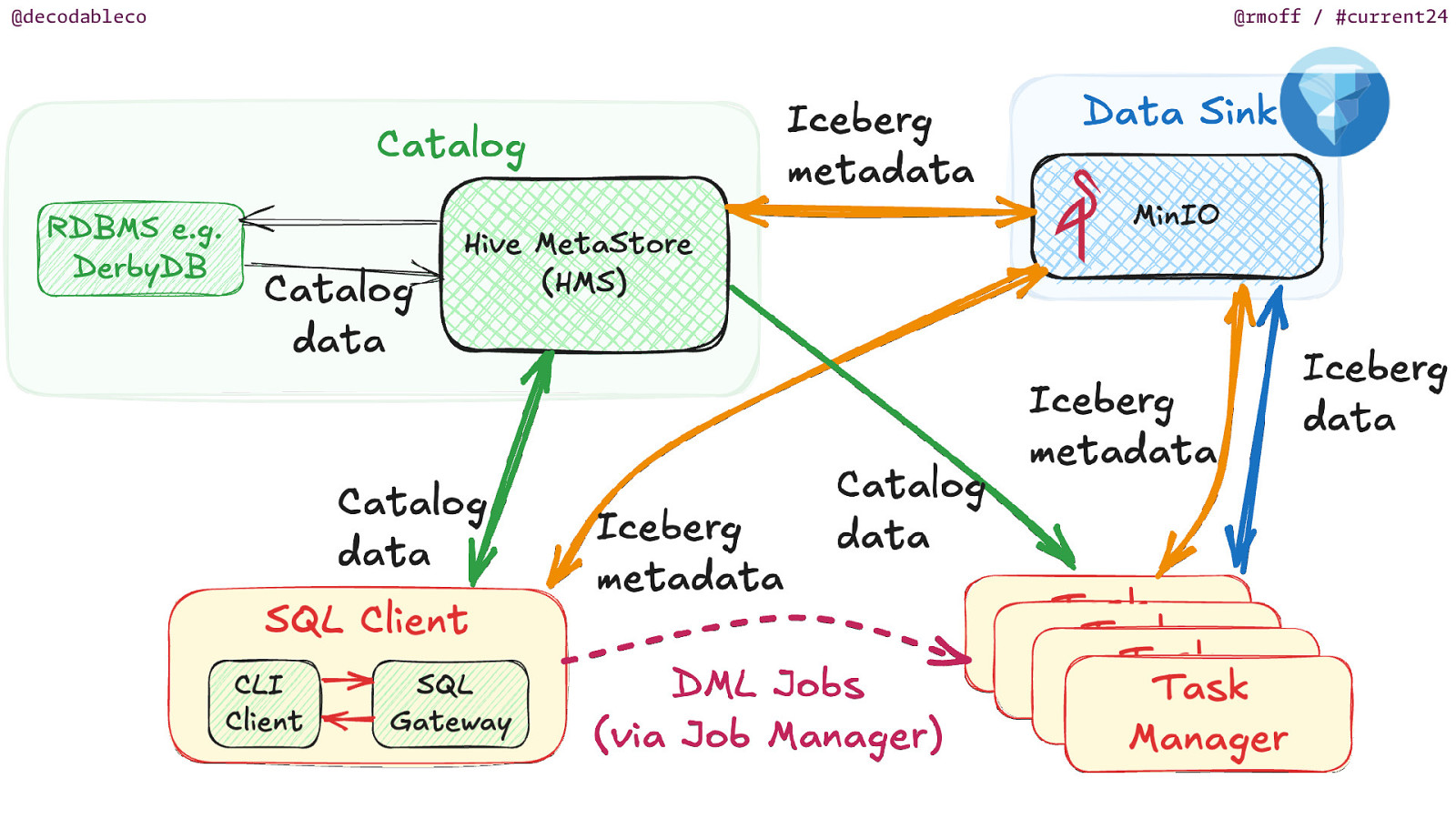

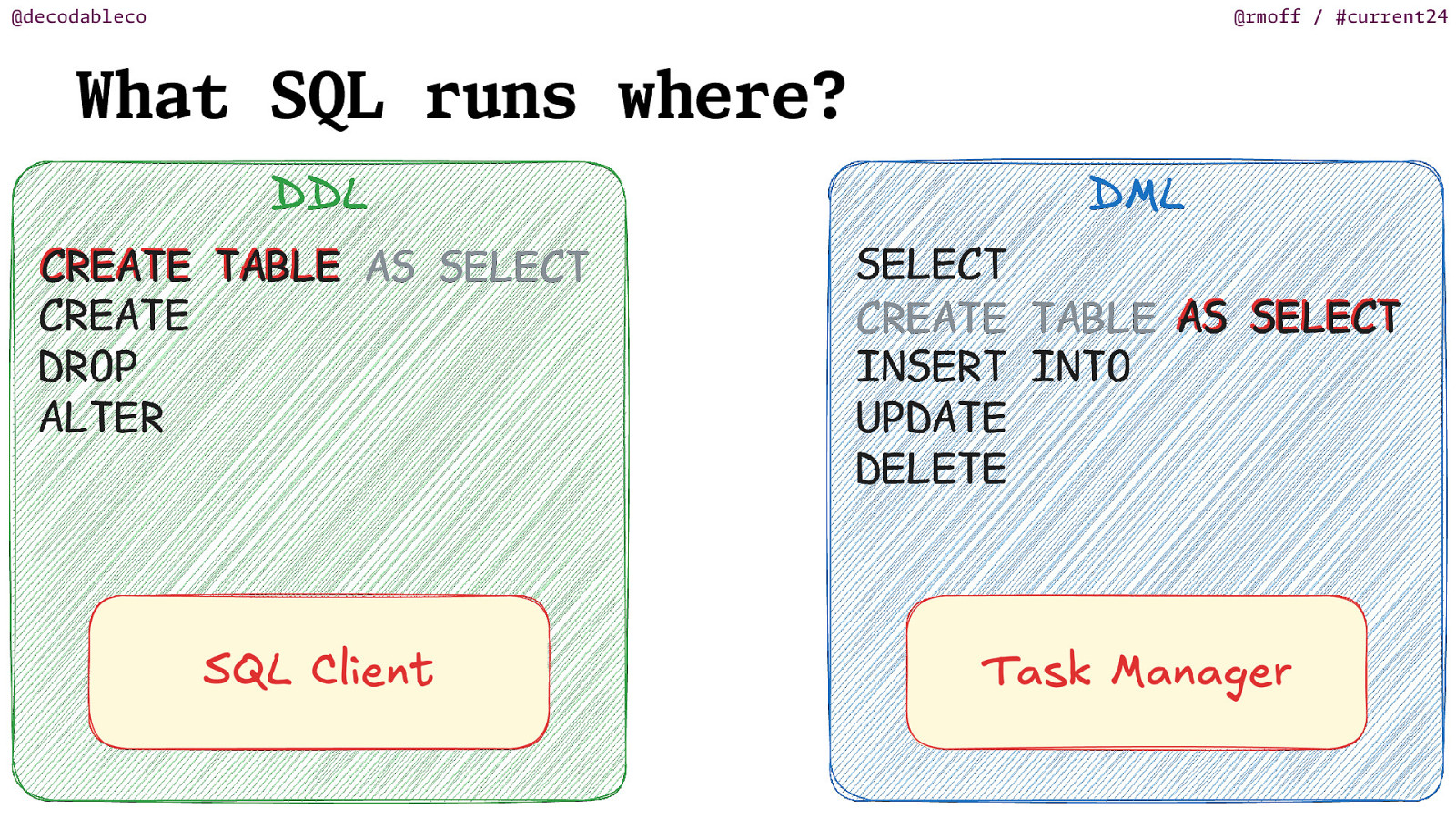

What Runs Where?

Slide 13

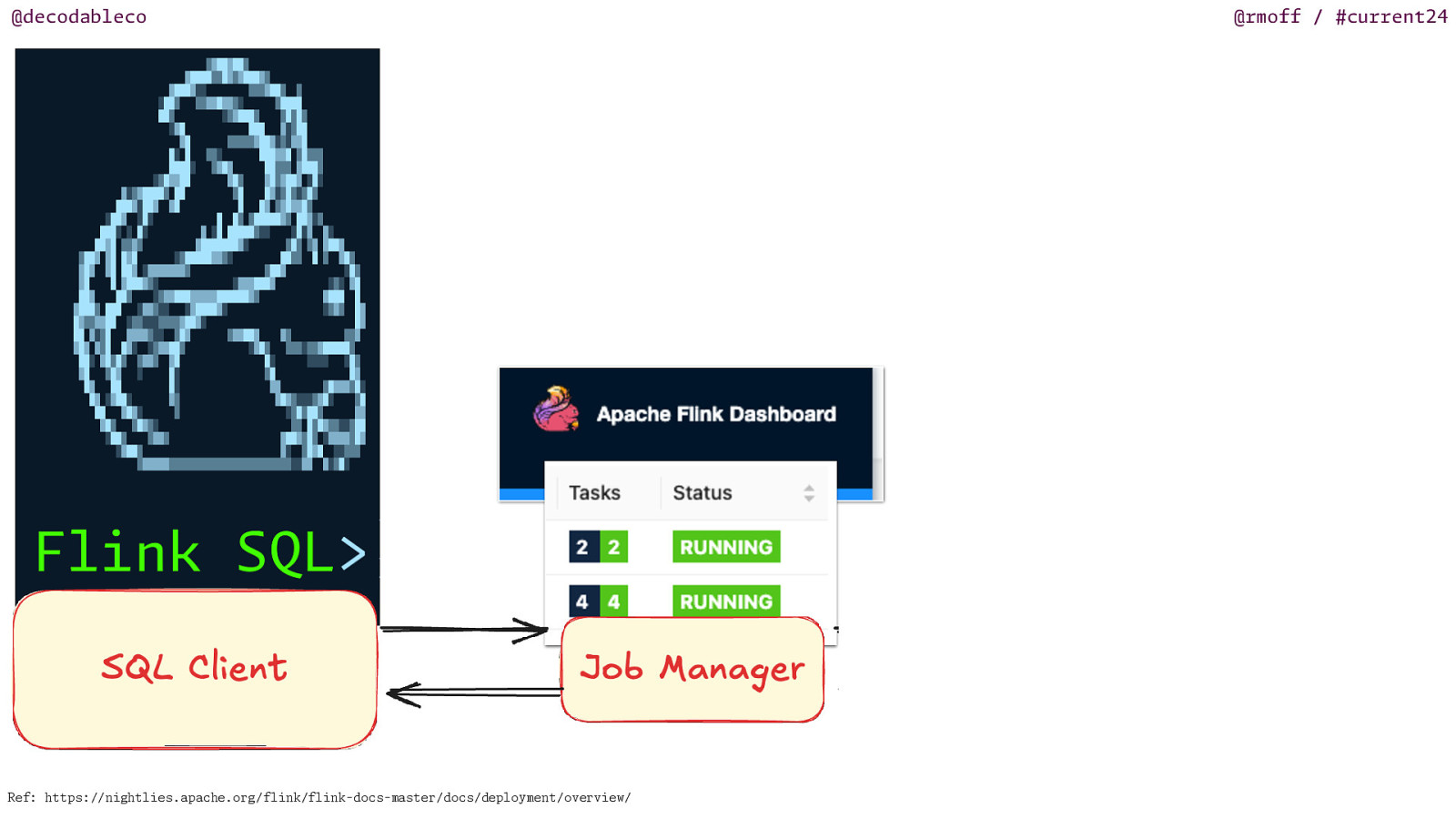

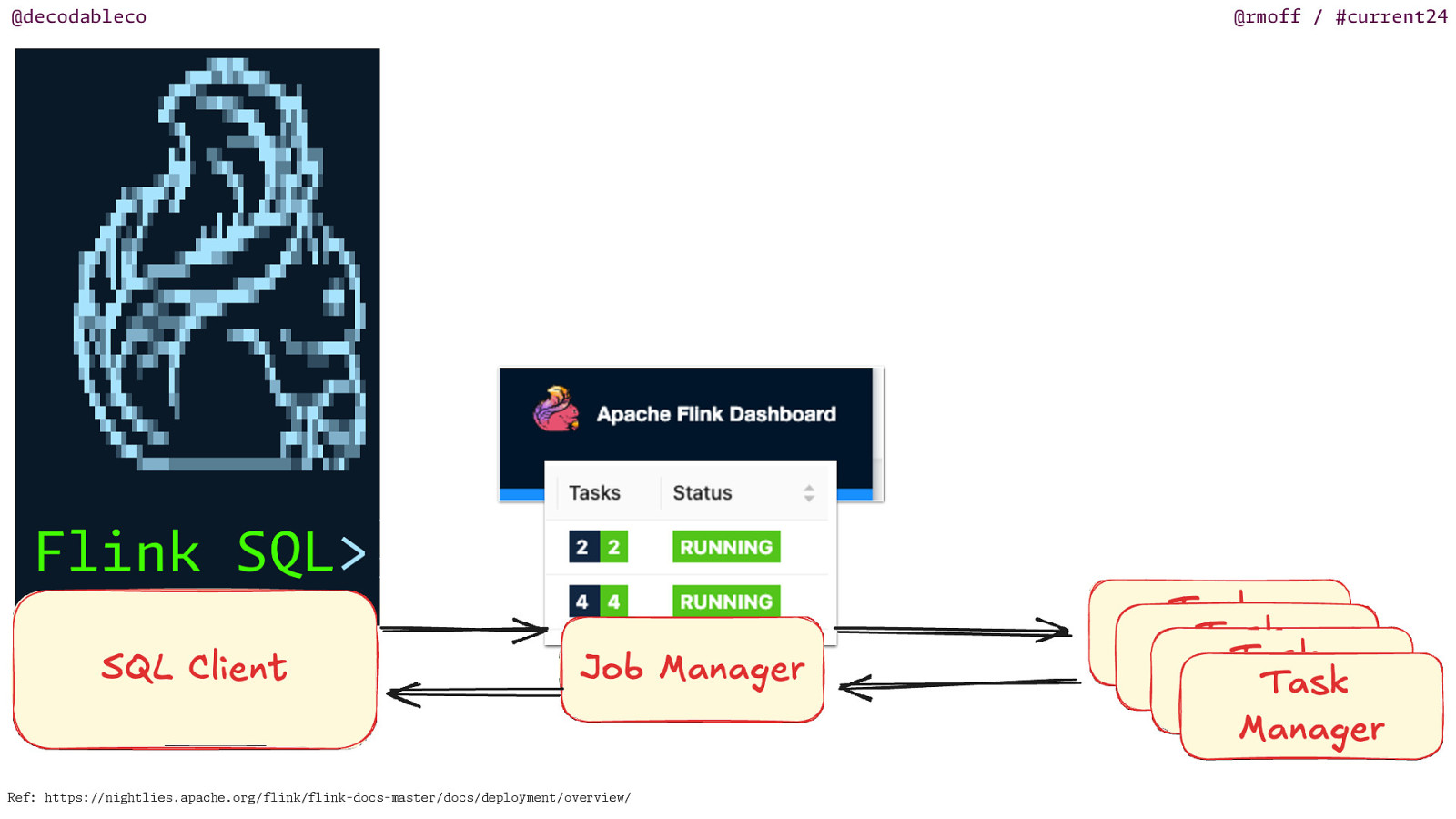

Apache Flink is a distributed system

it's not being difficult entirely for the sake of it ;)

Slide 14

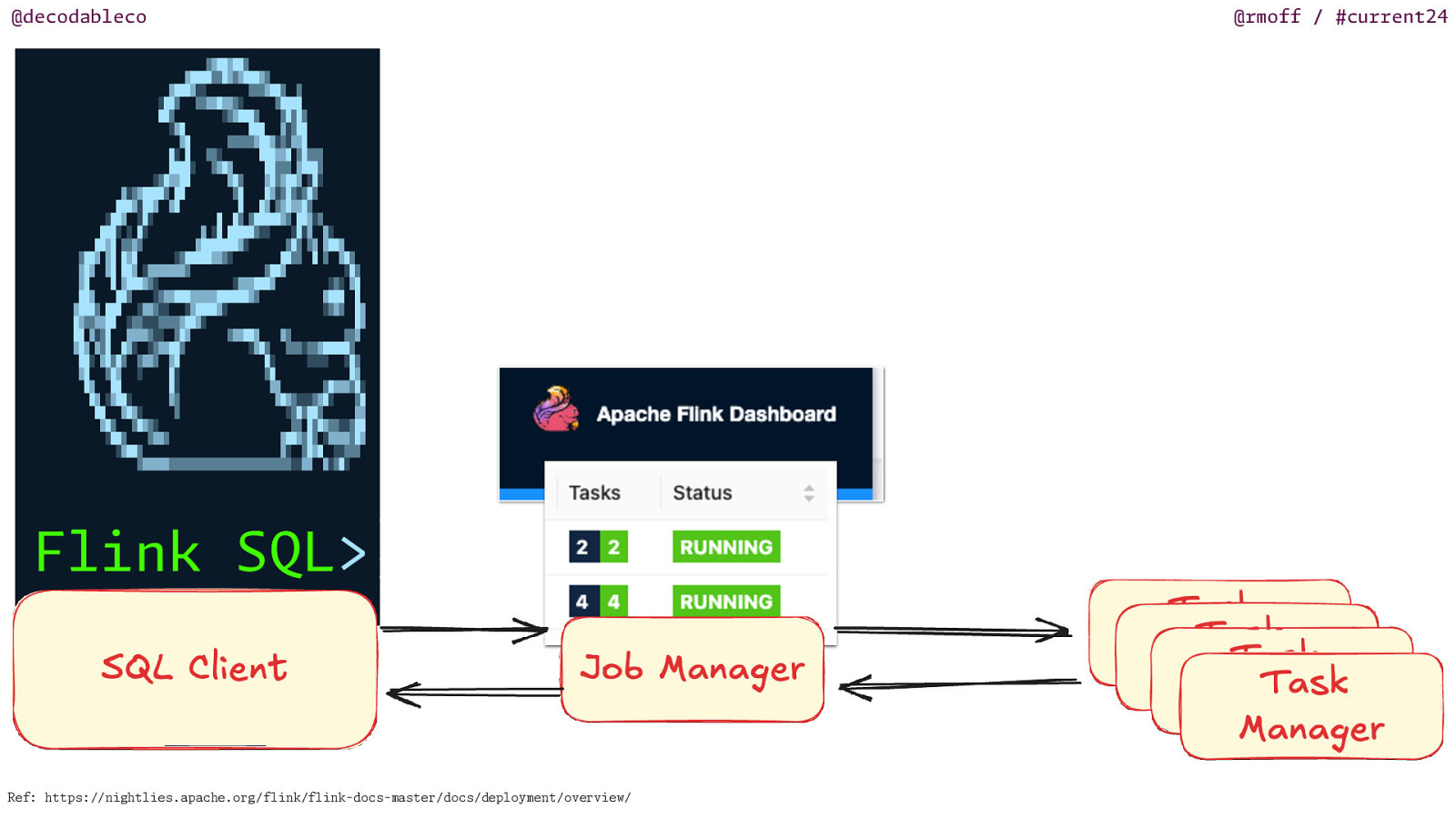

Ref: https://nightlies.apache.org/flink/flink-docs-master/docs/deployment/overview/

Slide 15


Slide 16


Slide 17

What went wrong?

Slide 18

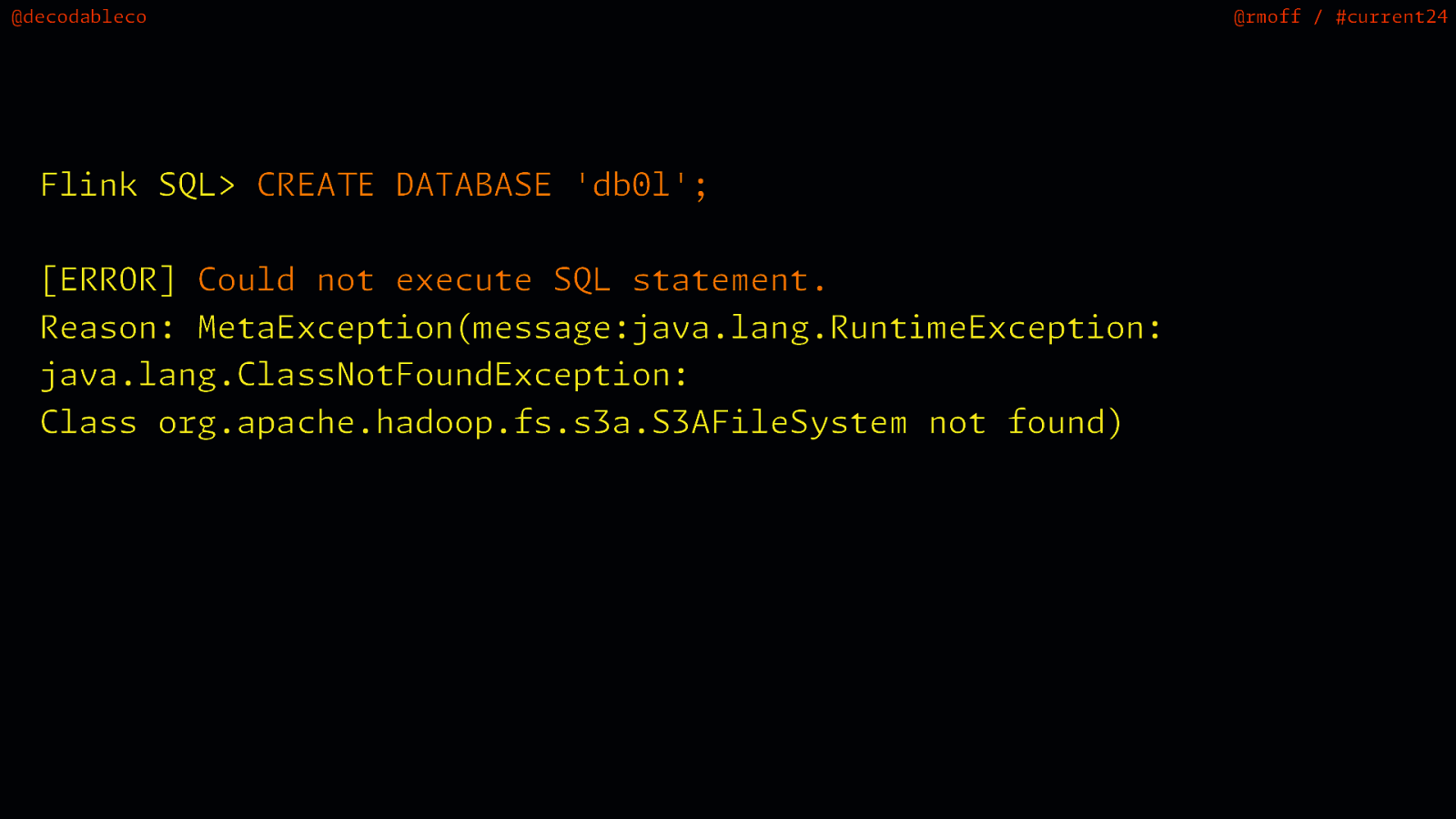

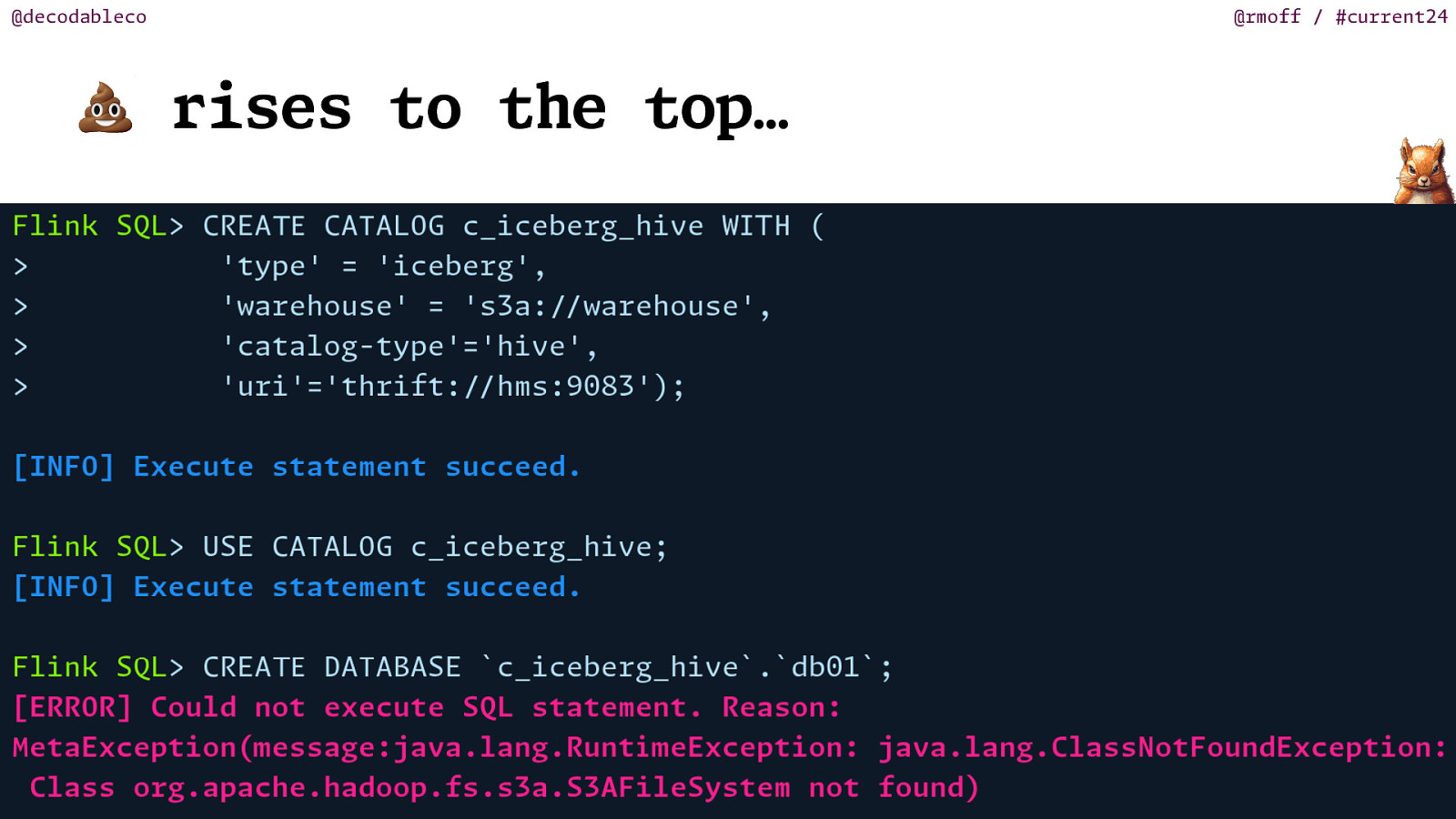

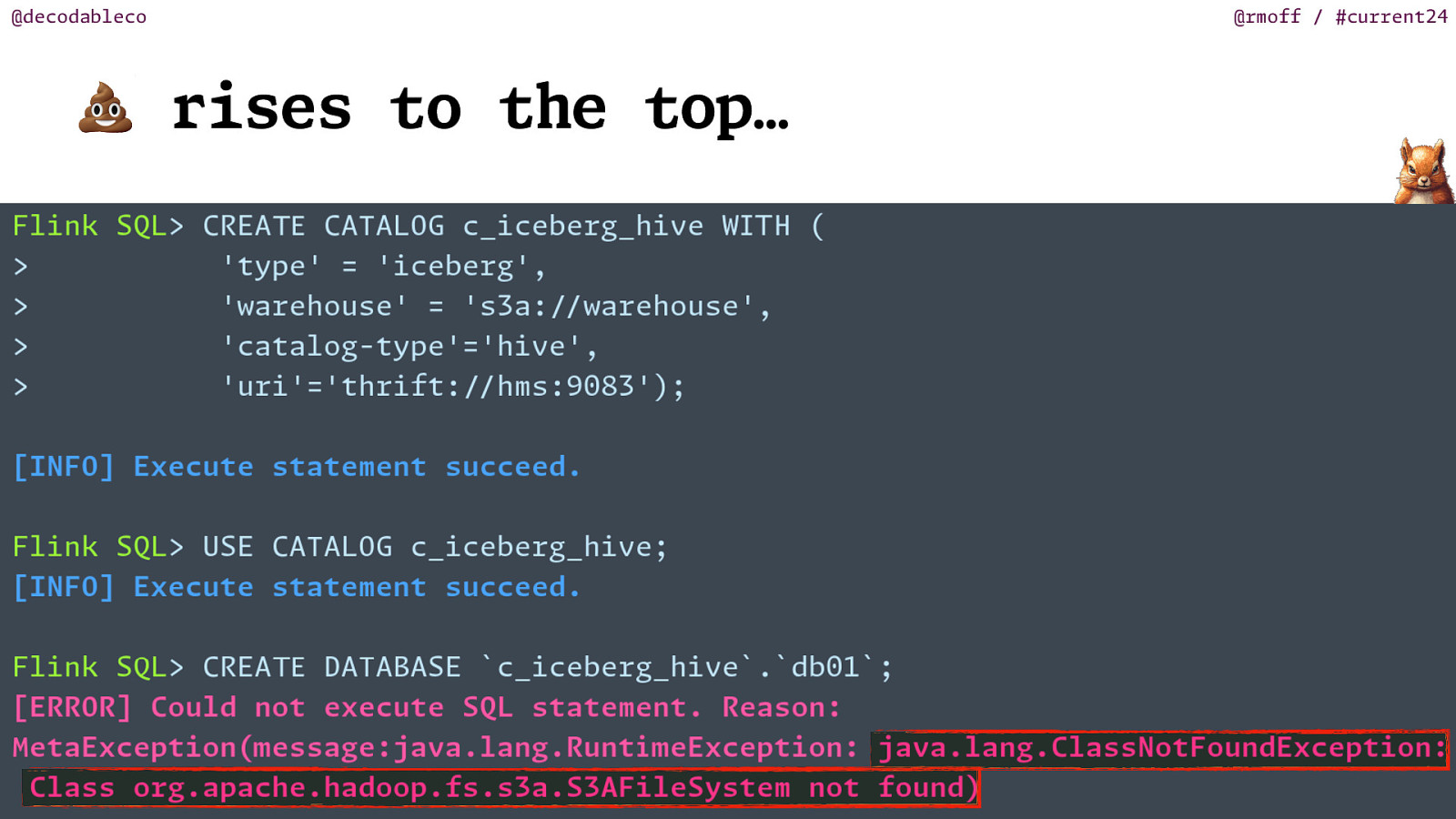

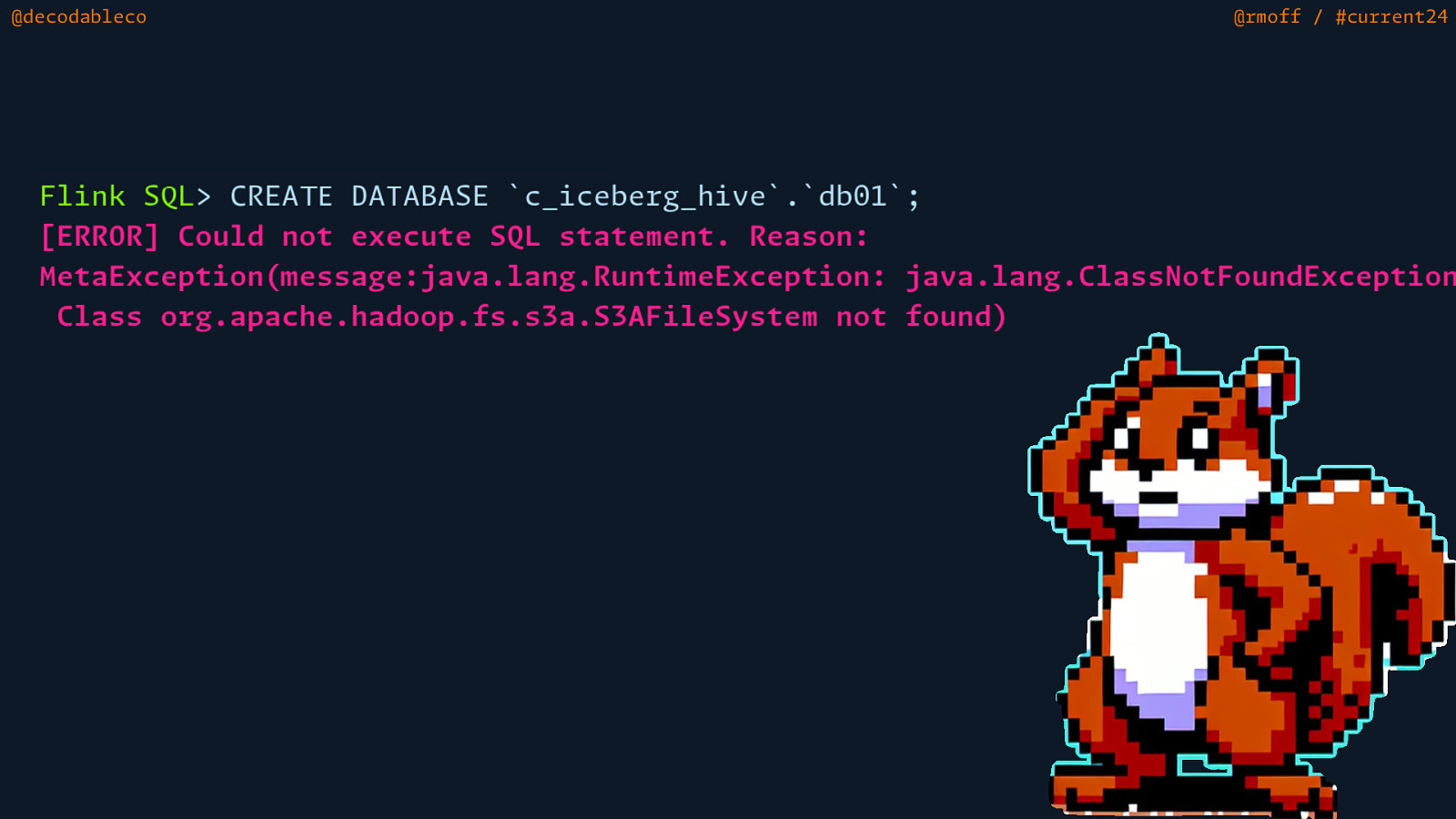

Flink SQL> CREATE DATABASE 'db01';
[ERROR] Could not execute SQL statement. Reason:
MetaException(message:java.lang.RuntimeException: java.lang.ClassNotFoundException: Class org.apache.hadoop.fs.s3a.S3AFileSystem not found)

Slide 19

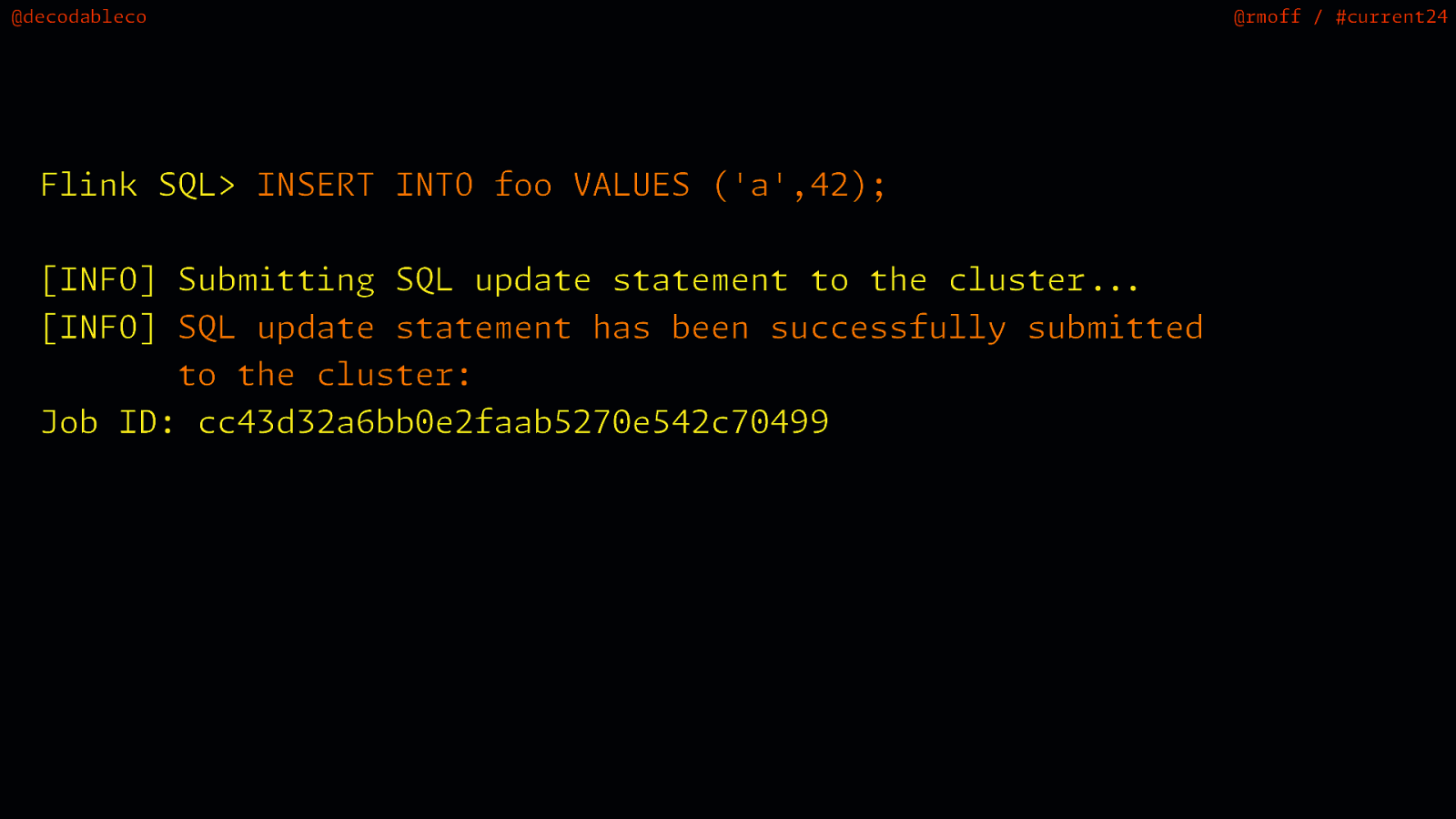

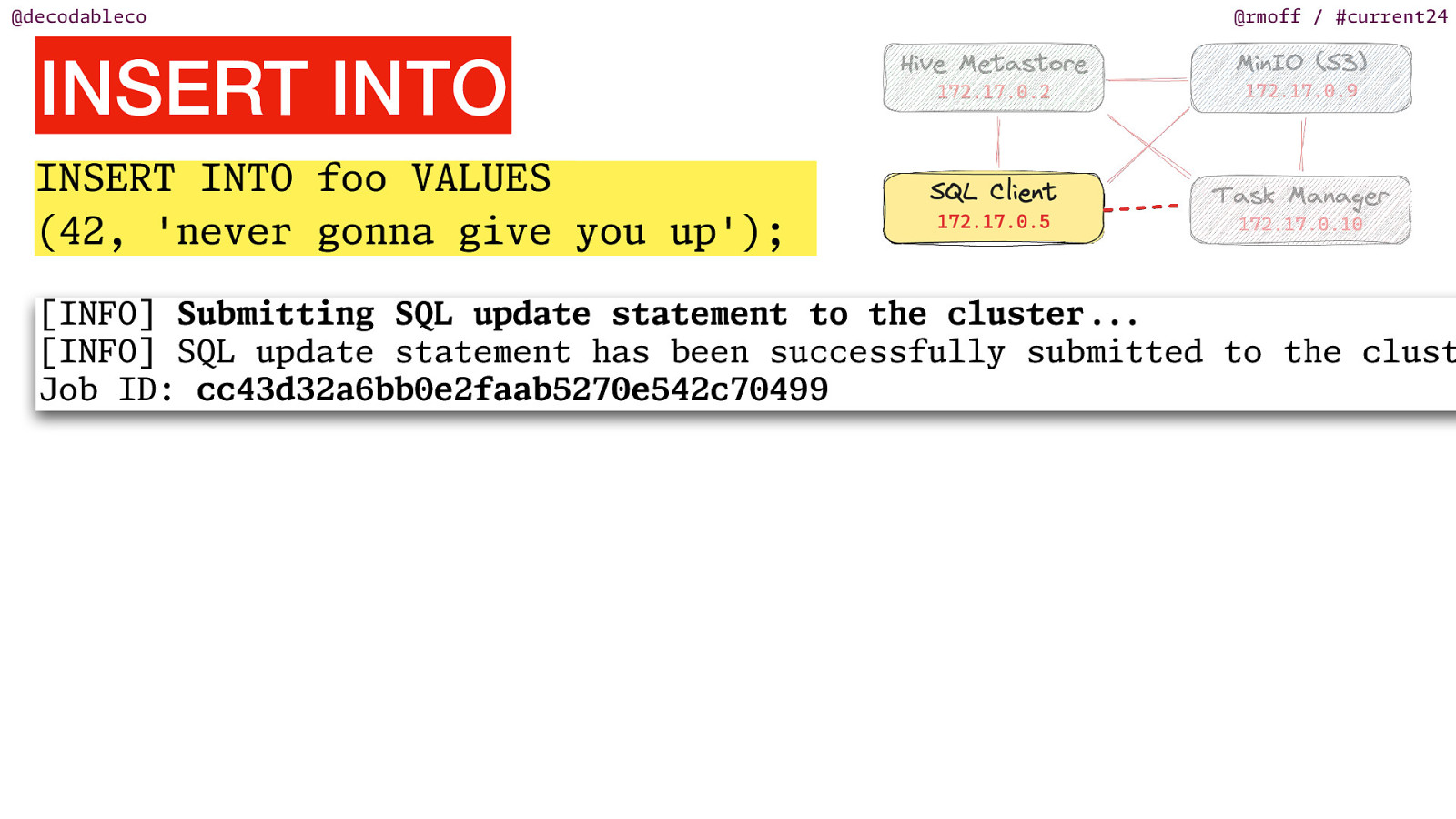

Flink SQL> INSERT INTO foo VALUES ('a',42);
[INFO] Submitting SQL update statement to the cluster...
[INFO] SQL update statement has been successfully submitted to the cluster:
Job ID: cc43d32a6bb0e2faab5270e542c70499

Slide 20

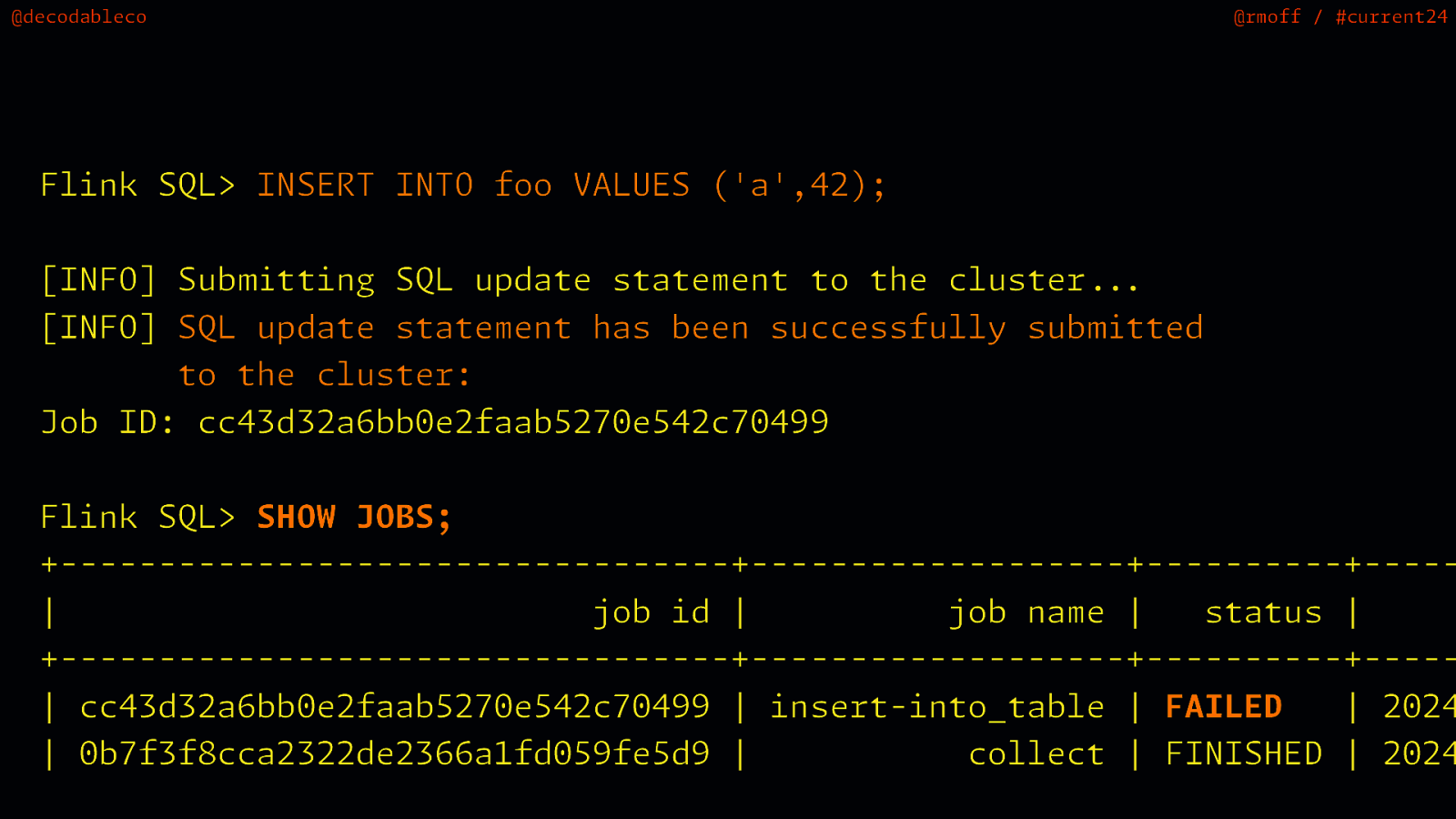

Flink SQL> INSERT INTO foo VALUES ('a',42);
[INFO] Submitting SQL update statement to the cluster...
[INFO] SQL update statement has been successfully submitted to the cluster:
Job ID: cc43d32a6bb0e2faab5270e542c70499

Flink SQL> SHOW JOBS;
+----------------------------------+-------------------+----------+------
| job id                           | job name          | status   |
+----------------------------------+-------------------+----------+------
| cc43d32a6bb0e2faab5270e542c70499 | insert-into_table | FAILED   | 2024
| 0b7f3f8cca2322de2366a1fd059fe5d9 | collect           | FINISHED | 2024
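"Successfully submitted" only means the job reached the cluster, so it is worth checking the status column afterwards. A small sketch, assuming the `SHOW JOBS` output has been captured as text with the column order shown on the slide (job id, job name, status); the function name `failed_jobs` is made up for illustration.

```shell
# Read SHOW JOBS table output on stdin and print the IDs of jobs whose status is FAILED.
# Assumption: columns are pipe-separated in the order job id | job name | status | ...
failed_jobs() {
  awk -F'|' '
    NF >= 4 {
      id = $2; status = $4
      gsub(/ /, "", id); gsub(/ /, "", status)
      if (status == "FAILED") print id
    }'
}
```

Any ID it prints is a job to look up in the Flink Dashboard or the logs.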

Slide 21

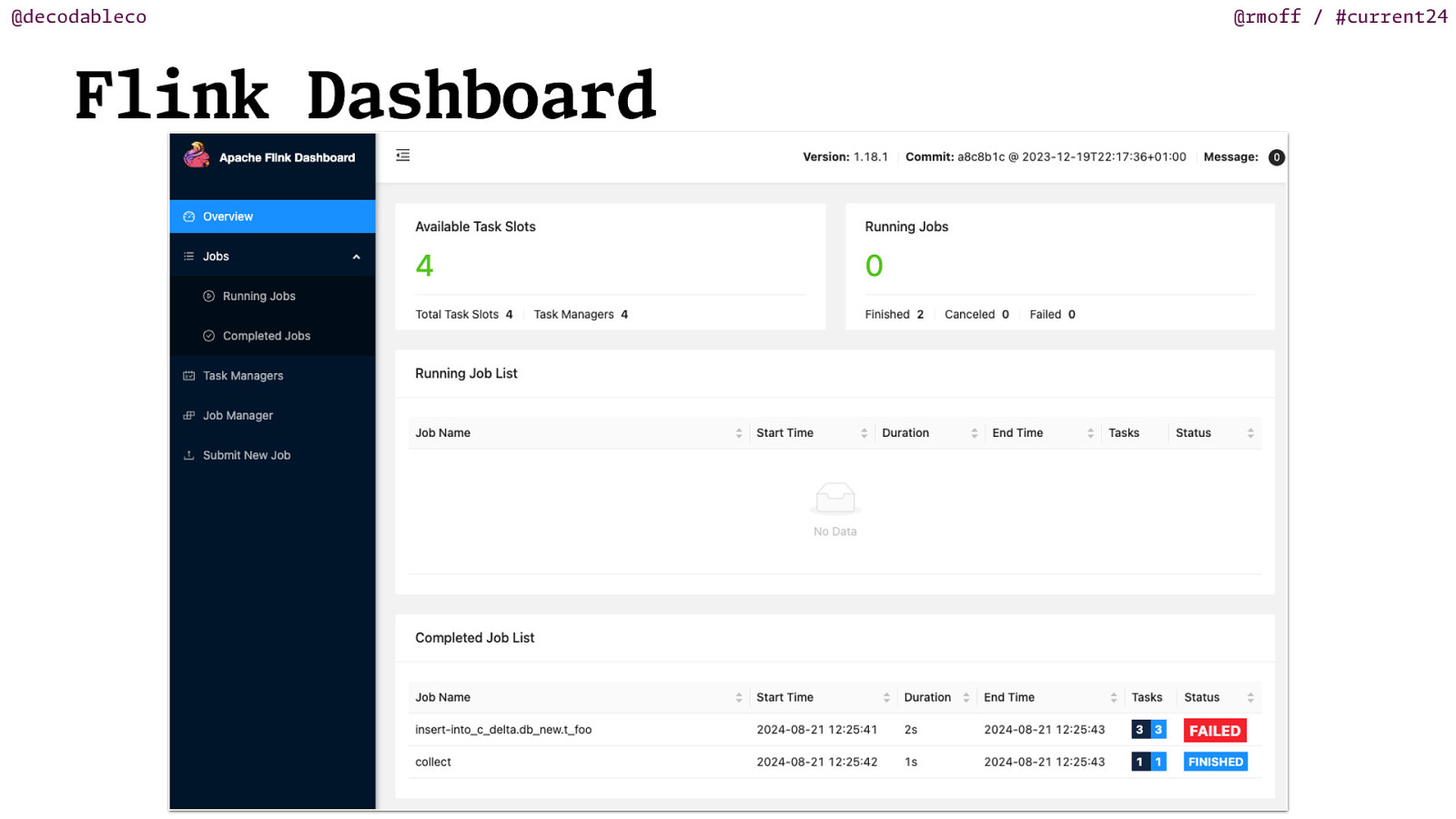

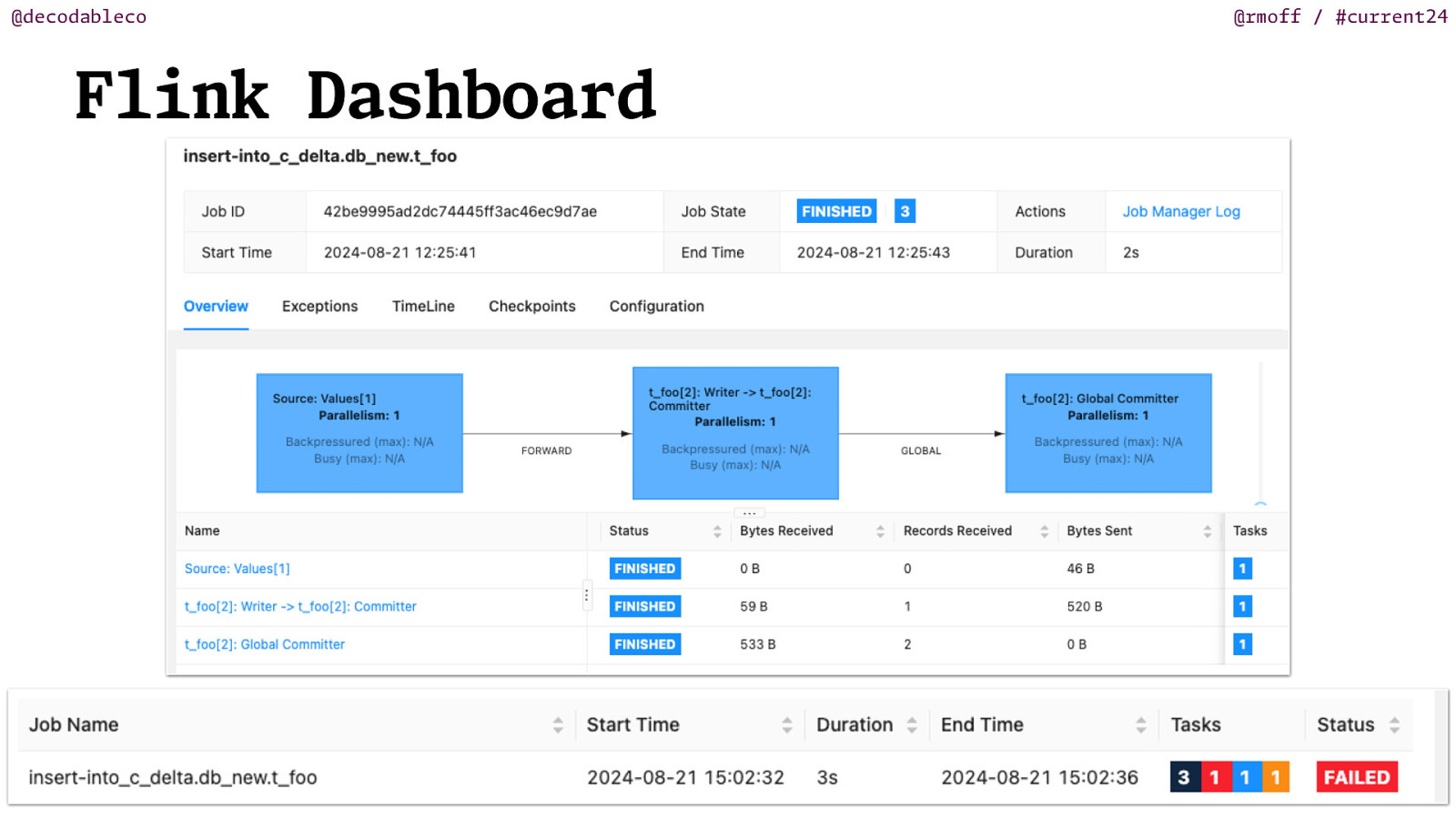

Flink Dashboard

Slide 22

Flink Dashboard

Slide 23

The truth is the log

h/t Pat Helland https://queue.acm.org/detail.cfm?id=2884038

Slide 24

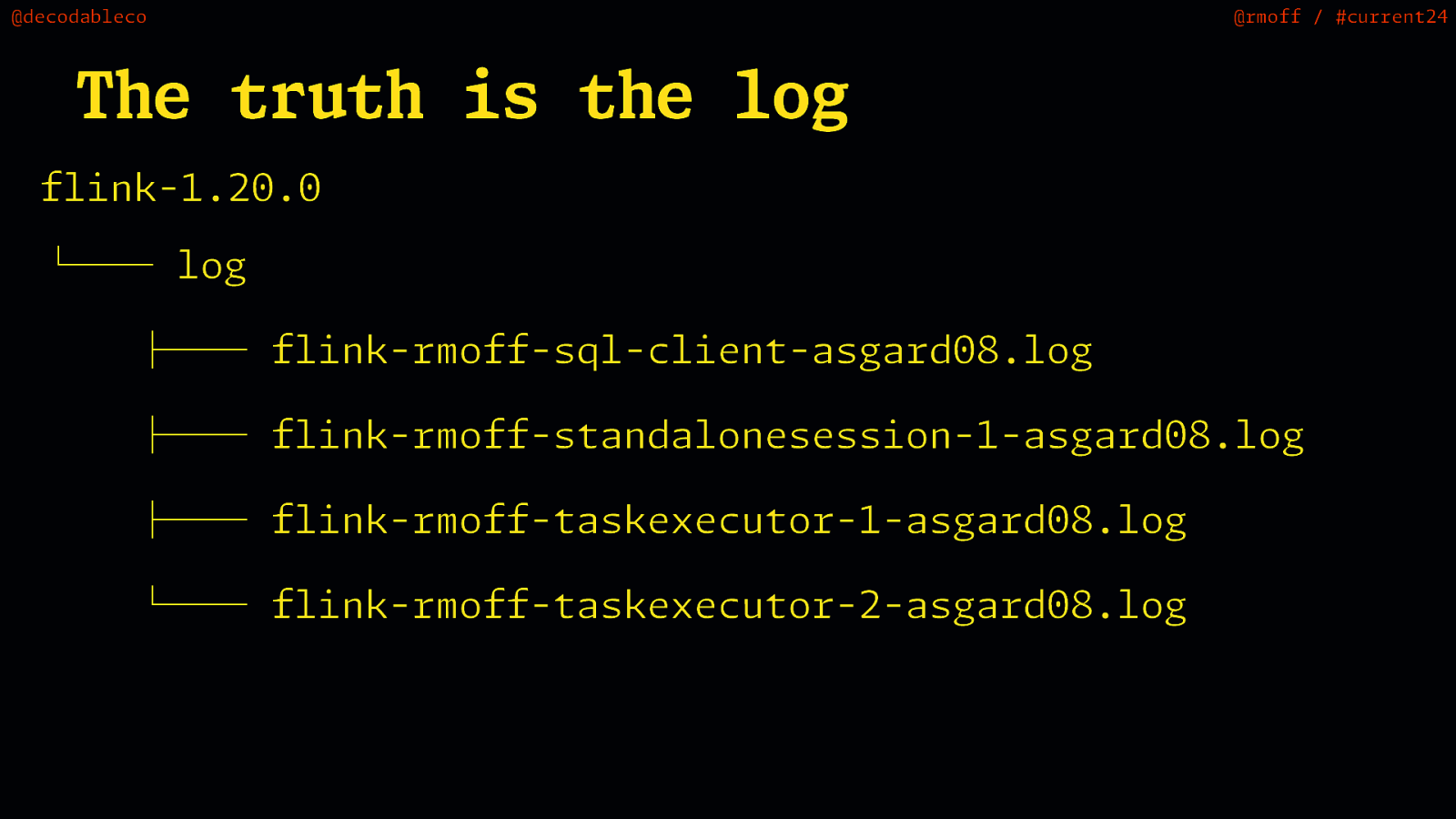

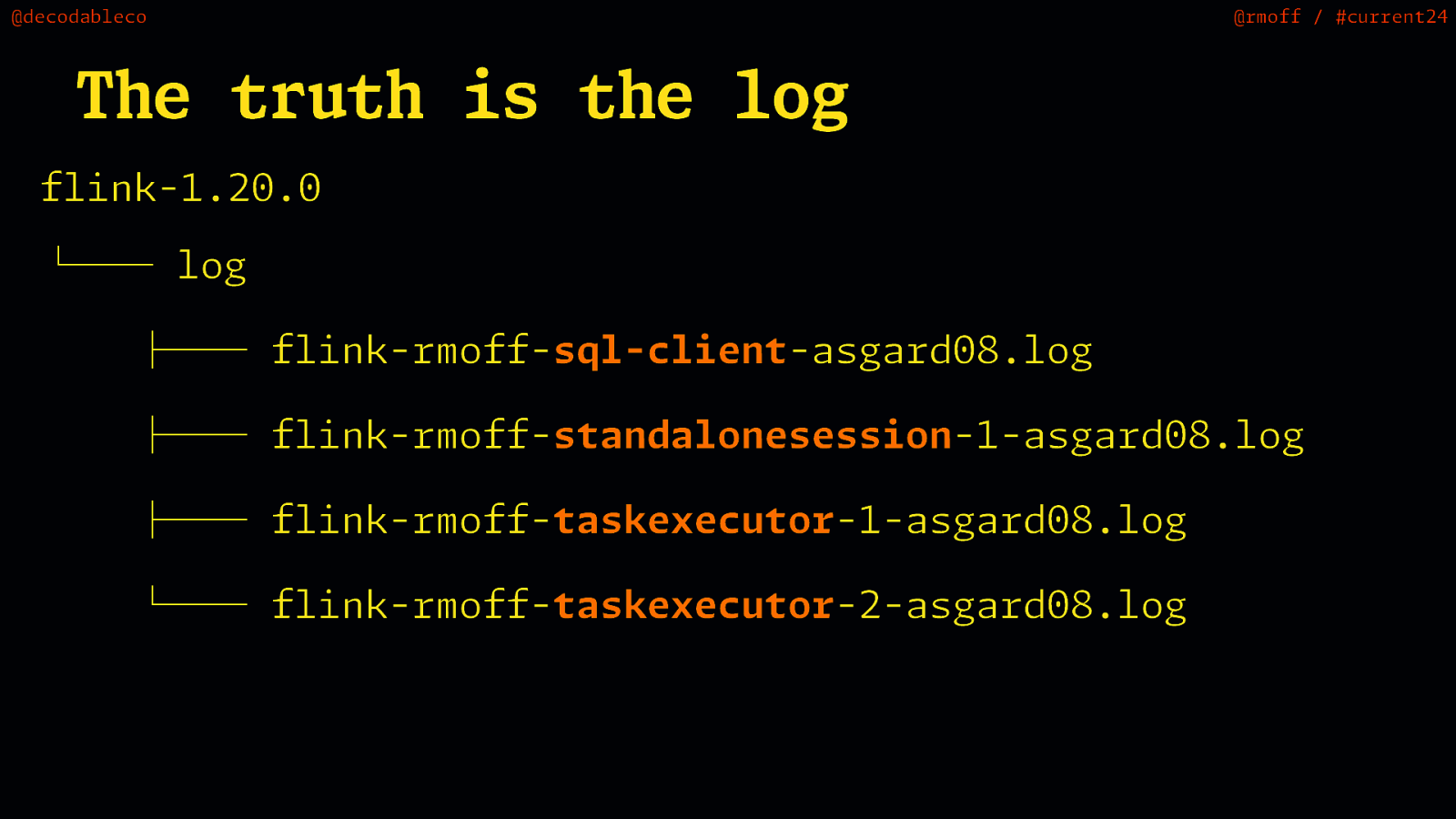

The truth is the log

flink-1.20.0
└── log
    ├── flink-rmoff-sql-client-asgard08.log
    ├── flink-rmoff-standalonesession-1-asgard08.log
    ├── flink-rmoff-taskexecutor-1-asgard08.log
    └── flink-rmoff-taskexecutor-2-asgard08.log

Slide 25


Slide 26

Naming things is hard (apparently)

Slide 27


Slide 28

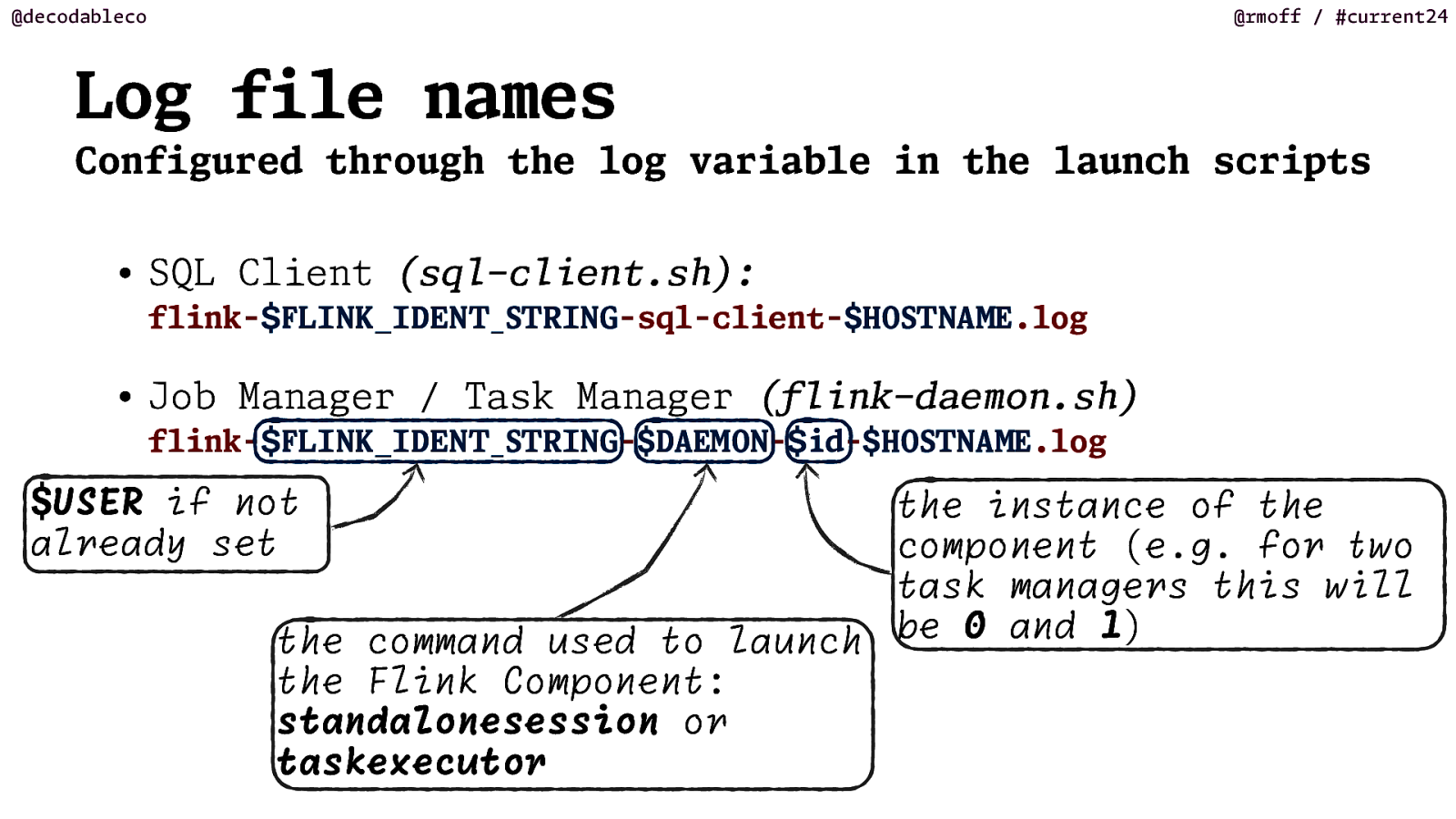

Log file names

Configured through the log variable in the launch scripts

• SQL Client (sql-client.sh): flink-$FLINK_IDENT_STRING-sql-client-$HOSTNAME.log
• Job Manager / Task Manager (flink-daemon.sh): flink-$FLINK_IDENT_STRING-$DAEMON-$id-$HOSTNAME.log

$FLINK_IDENT_STRING: $USER if not already set
$DAEMON: the command used to launch the Flink Component: standalonesession or taskexecutor
$id: the instance of the component (e.g. for task managers this will be 0 and 1)
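To make the naming pattern concrete, here is a small sketch that assembles the expected file name from its parts. The function `flink_log_name` is hypothetical and only mirrors the pattern described above; the launch scripts themselves are the authority, and the fallback to `hostname` when `HOSTNAME` is unset is an assumption.

```shell
# Build the expected Flink log file name for a component.
# $1 = sql-client, standalonesession or taskexecutor; $2 = instance id (unused for sql-client).
flink_log_name() (
  component="$1"
  id="$2"
  ident="${FLINK_IDENT_STRING:-$USER}"      # defaults to $USER if not already set
  host="${HOSTNAME:-$(hostname)}"           # assumption: fall back to the hostname command
  if [ "$component" = "sql-client" ]; then
    printf 'flink-%s-sql-client-%s.log\n' "$ident" "$host"
  else
    printf 'flink-%s-%s-%s-%s.log\n' "$ident" "$component" "$id" "$host"
  fi
)
```

For example, `flink_log_name taskexecutor 1` tells you which file under `./log` to open for the first task manager.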

Slide 29

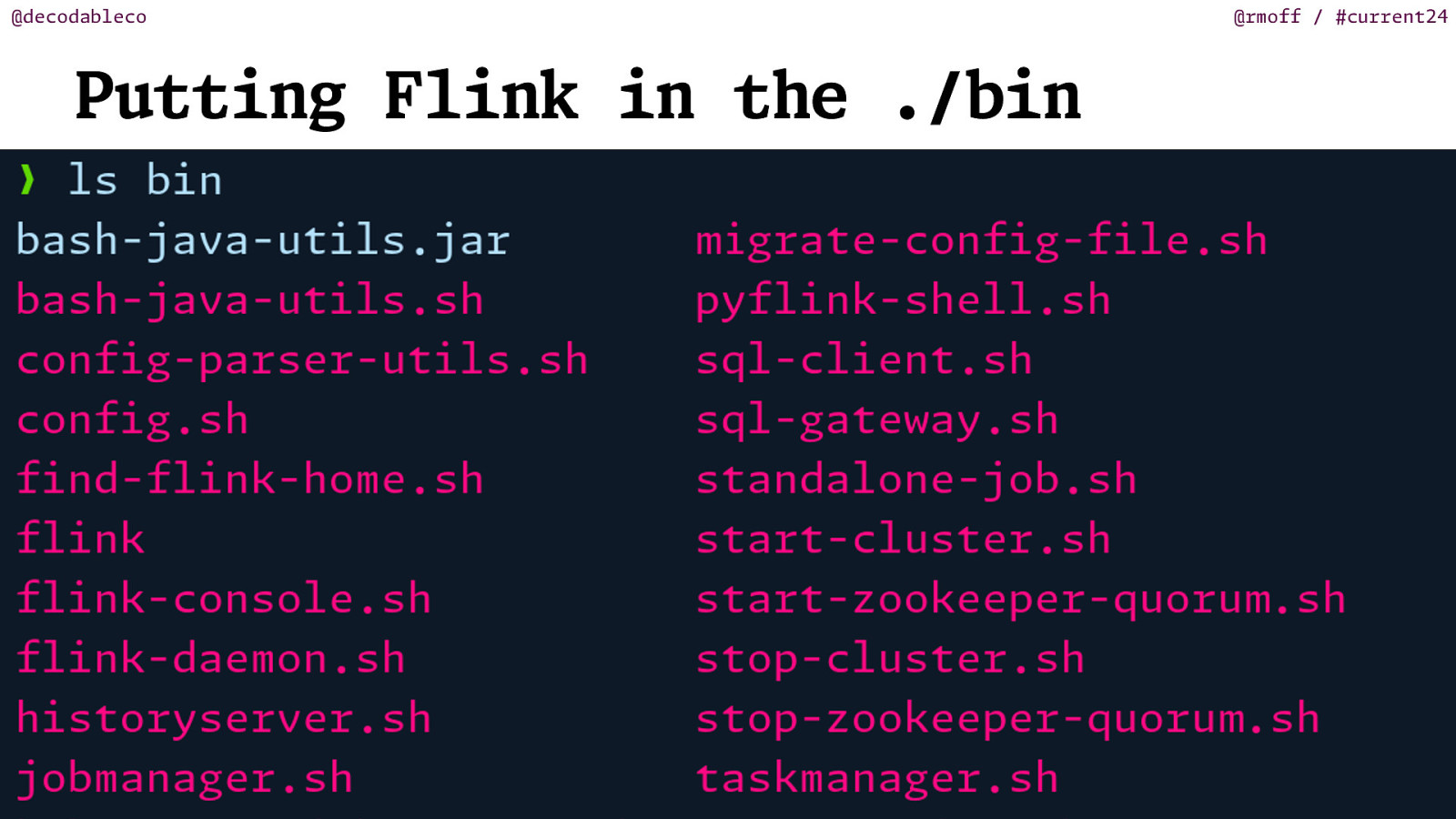

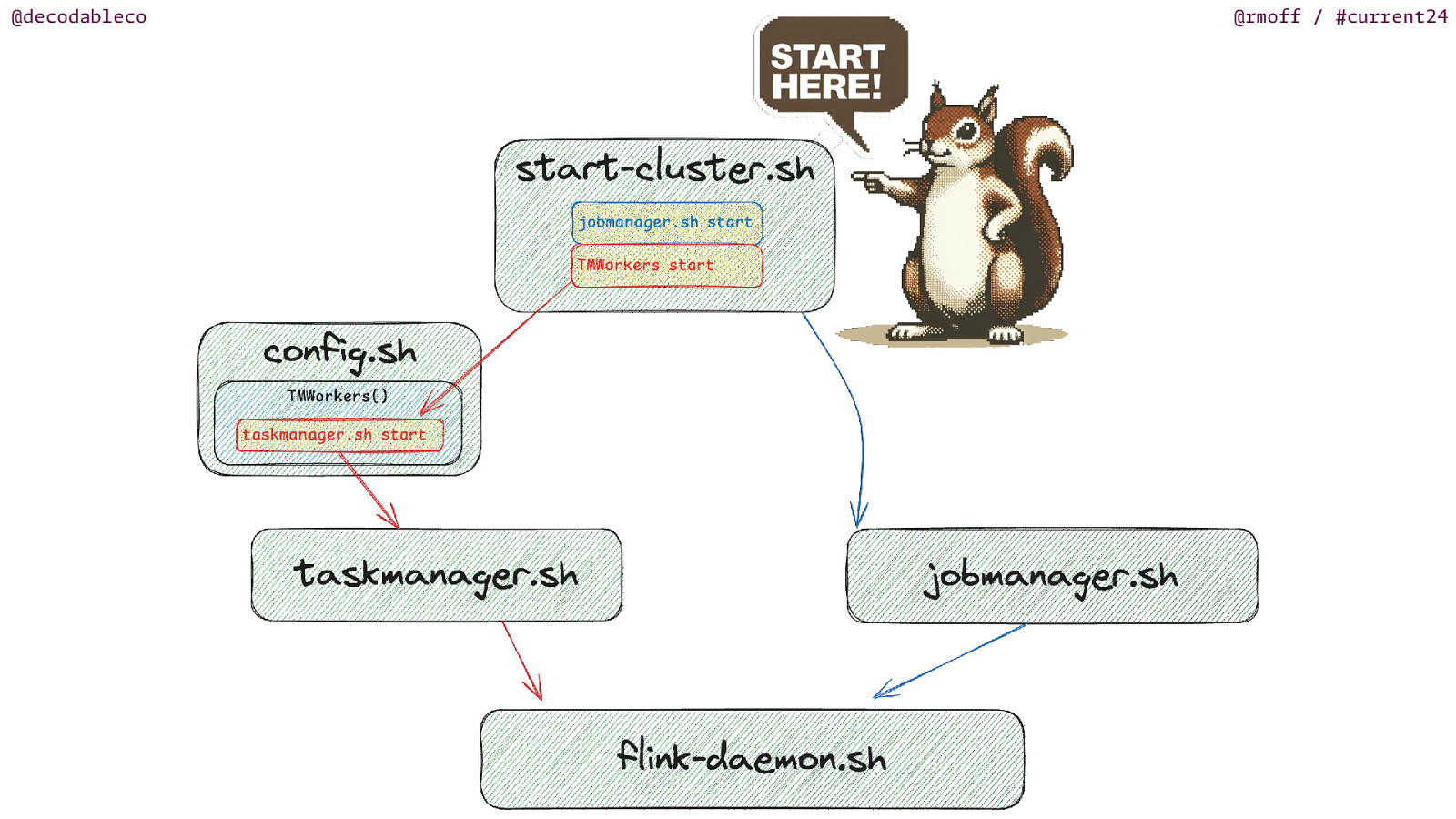

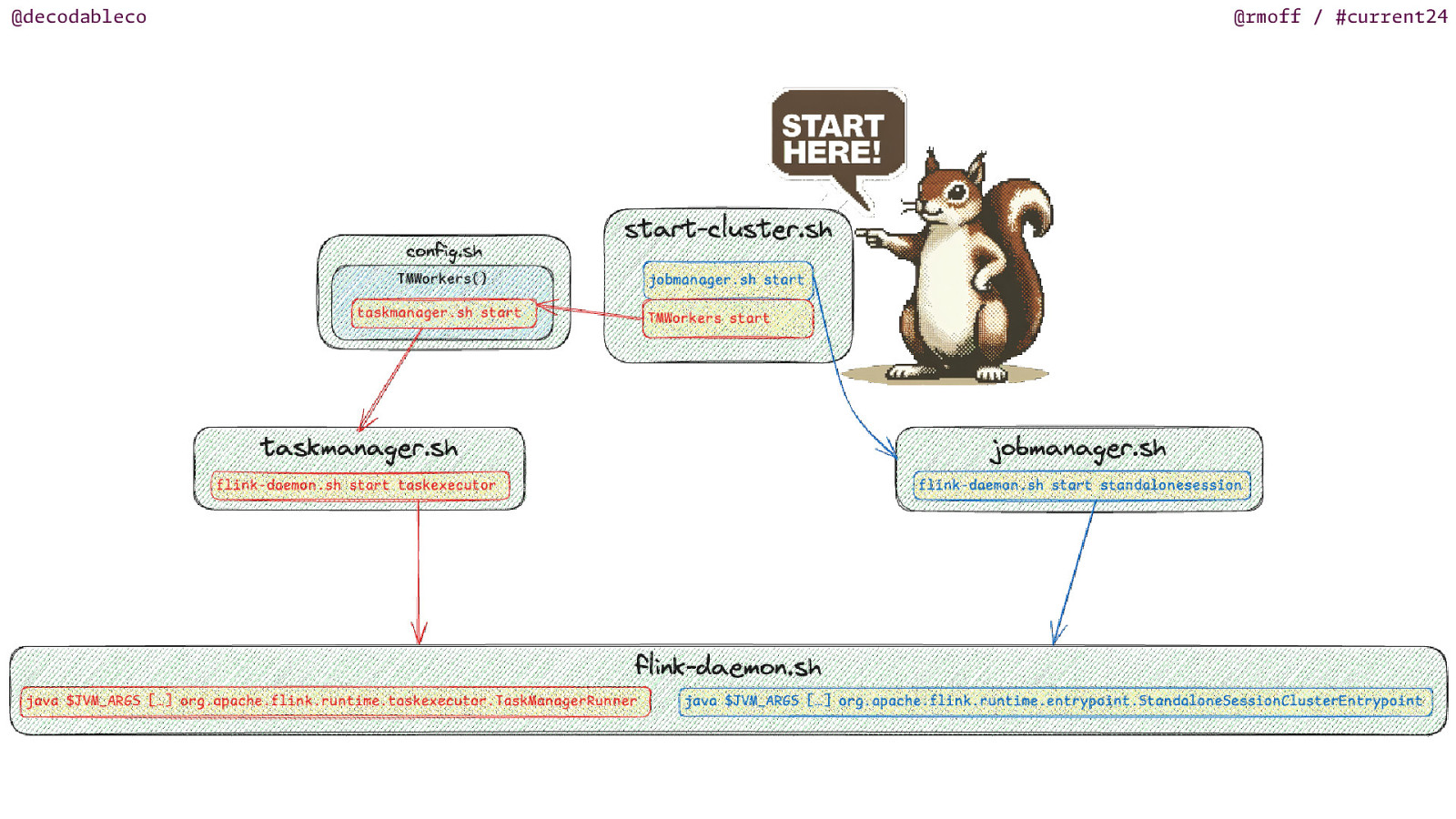

Putting Flink in the ./bin

Slide 30


Slide 31


Slide 32

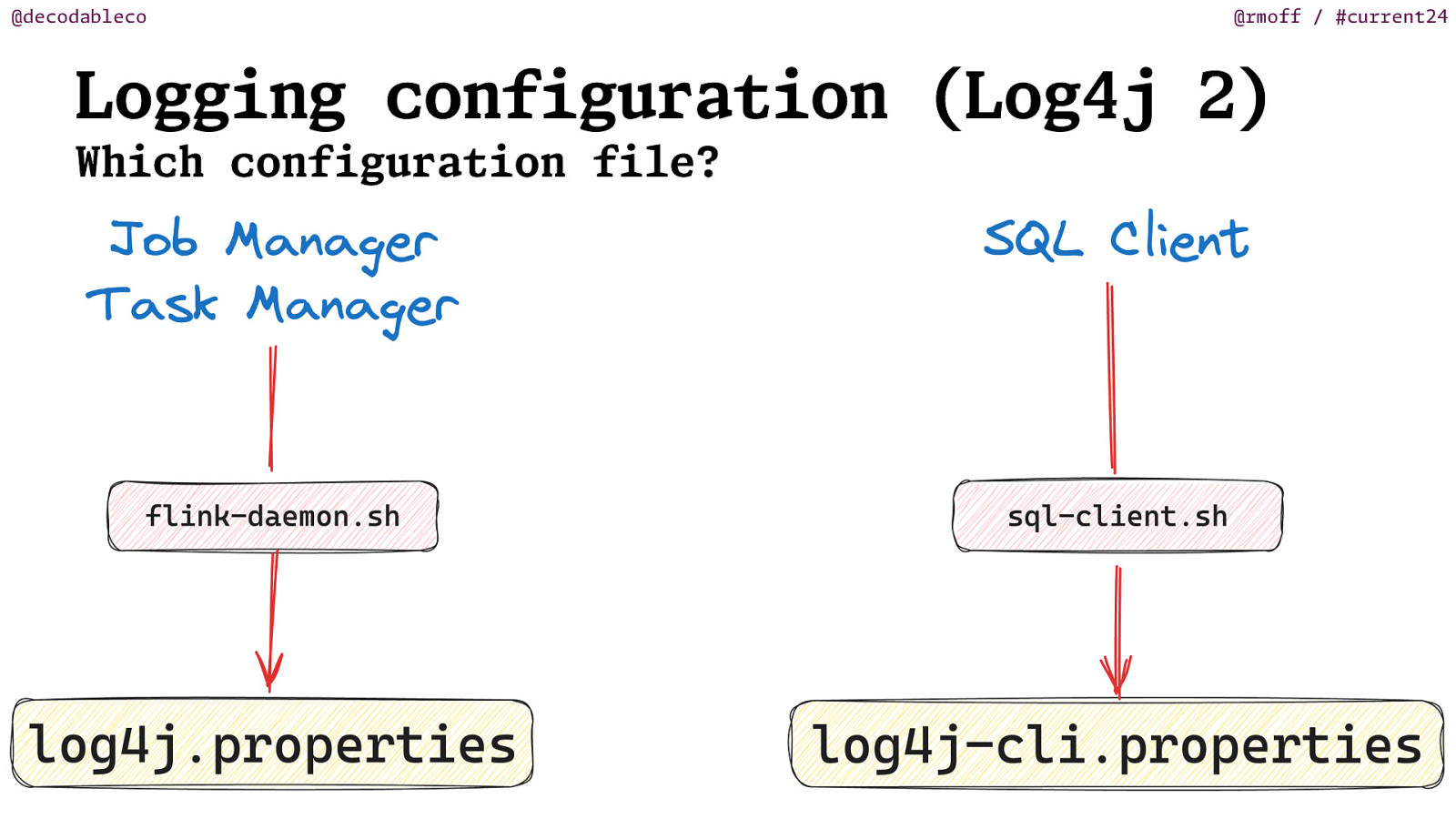

Logging configuration (Log4j 2)

Which configuration file?

Slide 33

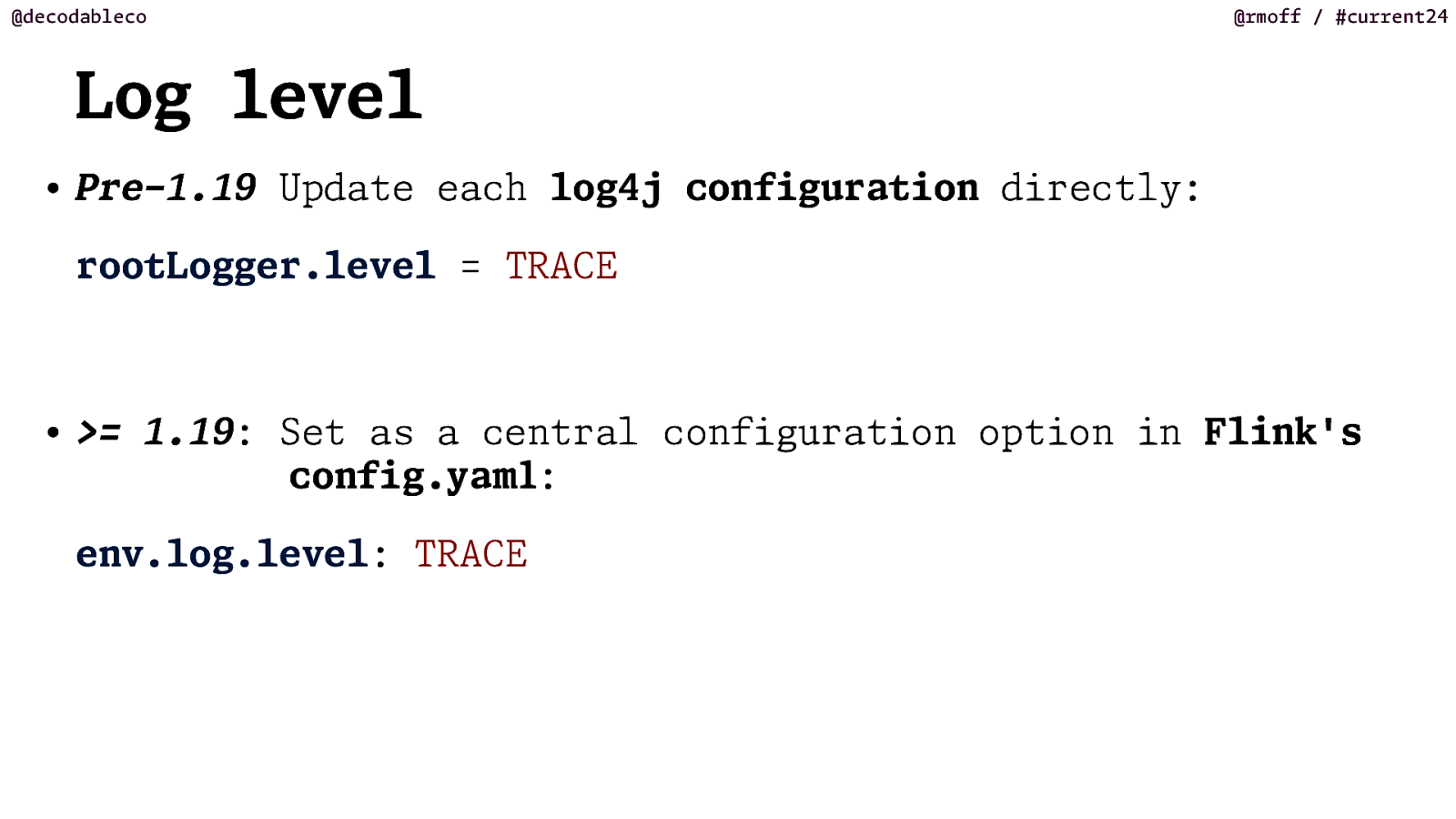

Log level

• Pre-1.19: Update each log4j configuration directly:
rootLogger.level = TRACE
• >= 1.19: Set as a central configuration option in Flink's config.yaml:
env.log.level: TRACE

Slide 34

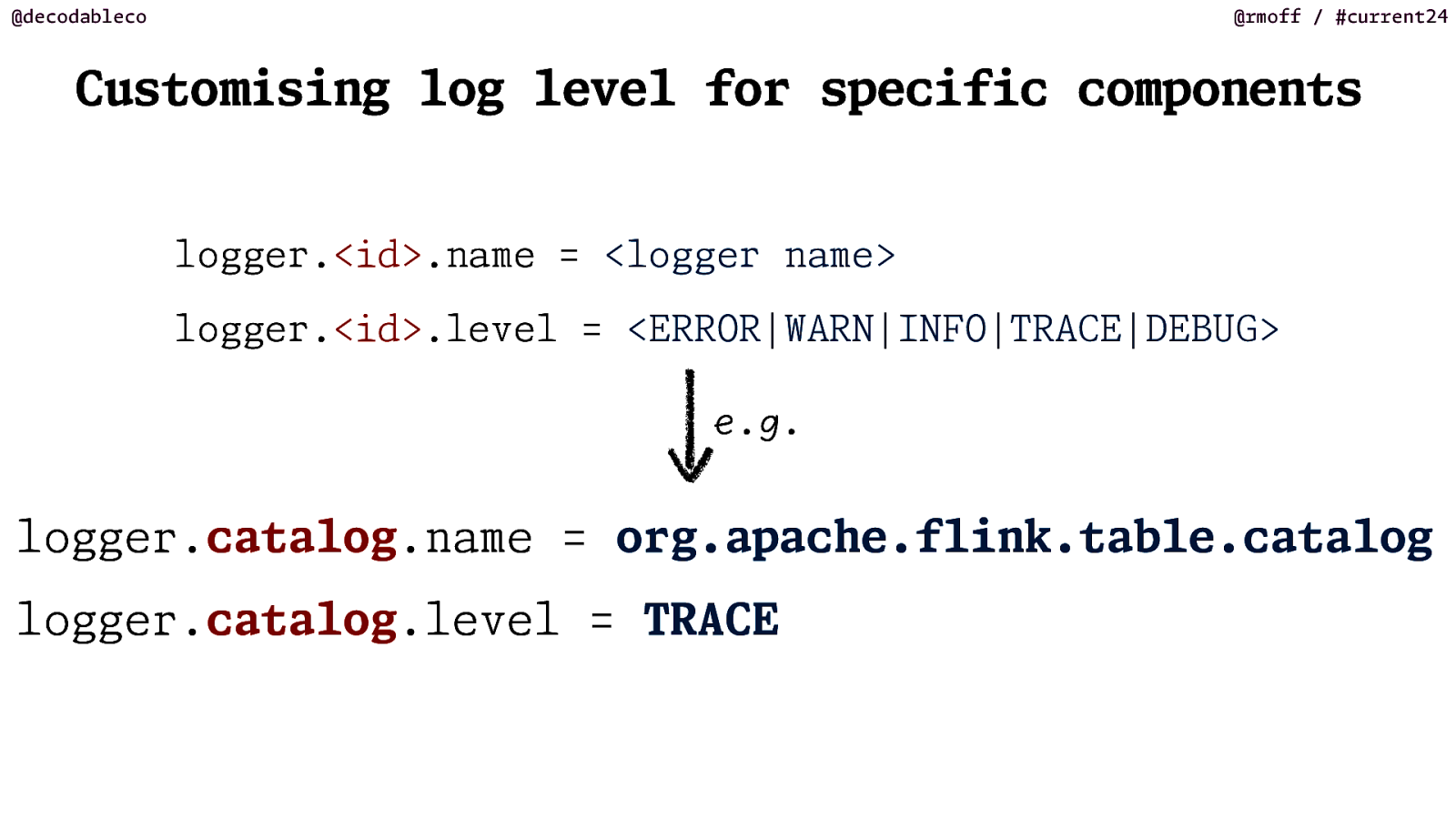

Customising log level for specific components

logger.<id>.name = <logger name>
logger.<id>.level = <ERROR|WARN|INFO|TRACE|DEBUG>

e.g.
logger.catalog.name = org.apache.flink.table.catalog
logger.catalog.level = TRACE
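The two-line pattern above is easy to generate rather than type. A minimal sketch: `log4j_logger_override` is a hypothetical helper that only prints the lines, and which file you append them to (for instance `conf/log4j.properties` or `conf/log4j-cli.properties`) depends on which component you are debugging.

```shell
# Print a Log4j 2 per-logger override in properties format.
# $1 = arbitrary id, $2 = logger (package or class) name, $3 = level
log4j_logger_override() {
  printf 'logger.%s.name = %s\n' "$1" "$2"
  printf 'logger.%s.level = %s\n' "$1" "$3"
}
```

For example, `log4j_logger_override catalog org.apache.flink.table.catalog TRACE` reproduces the example on the slide; redirect the output with `>>` into the configuration file you want to change.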

Slide 35

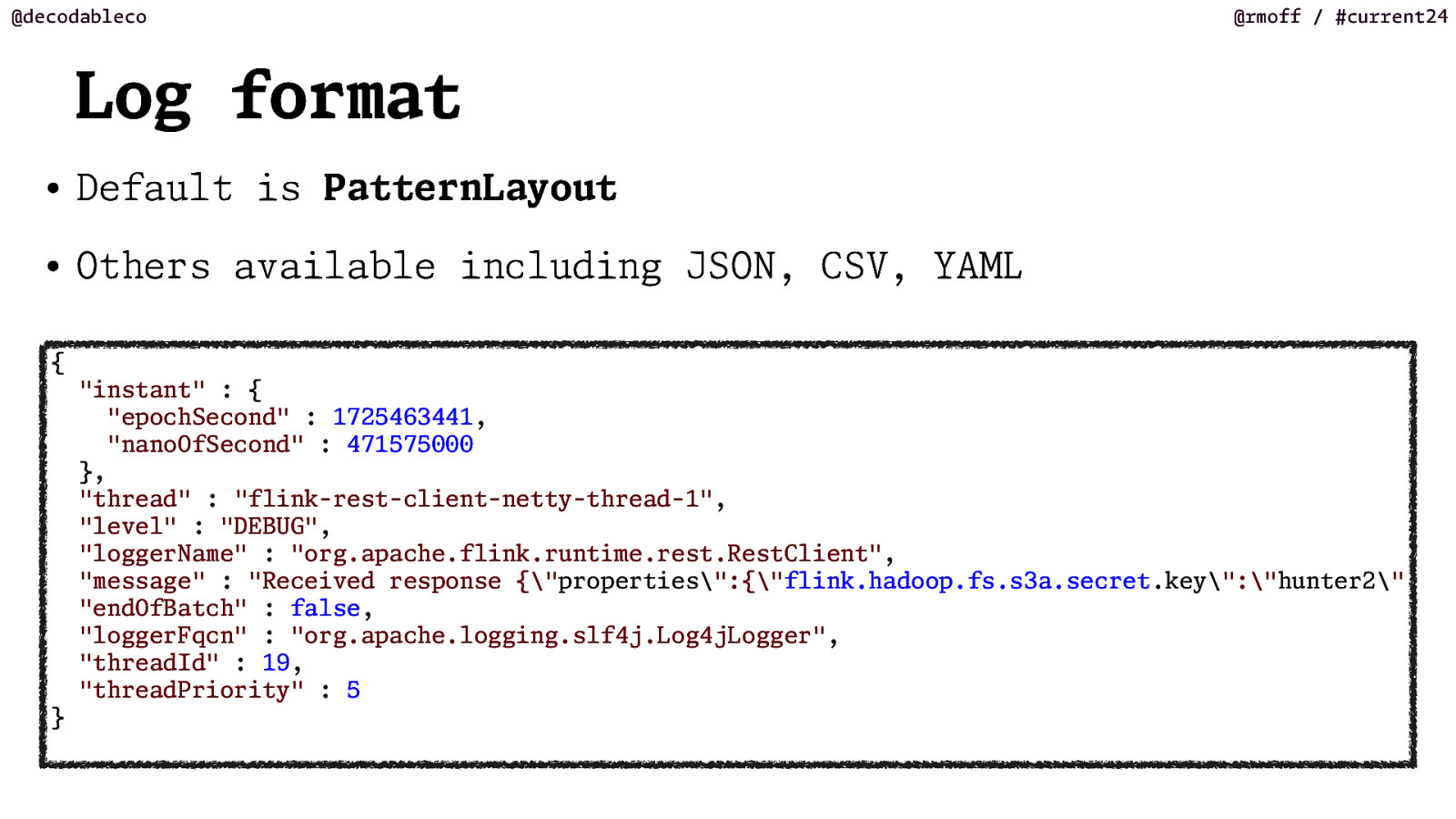

Log format

• Default is PatternLayout
• Others available including JSON, CSV, YAML

{
  "instant" : { "epochSecond" : 1725463441, "nanoOfSecond" : 471575000 },
  "thread" : "flink-rest-client-netty-thread-1",
  "level" : "DEBUG",
  "loggerName" : "org.apache.flink.runtime.rest.RestClient",
  "message" : "Received response {"properties":{"flink.hadoop.fs.s3a.secret.key":"hunter2"
  "endOfBatch" : false,
  "loggerFqcn" : "org.apache.logging.slf4j.Log4jLogger",
  "threadId" : 19,
  "threadPriority" : 5
}

Slide 36

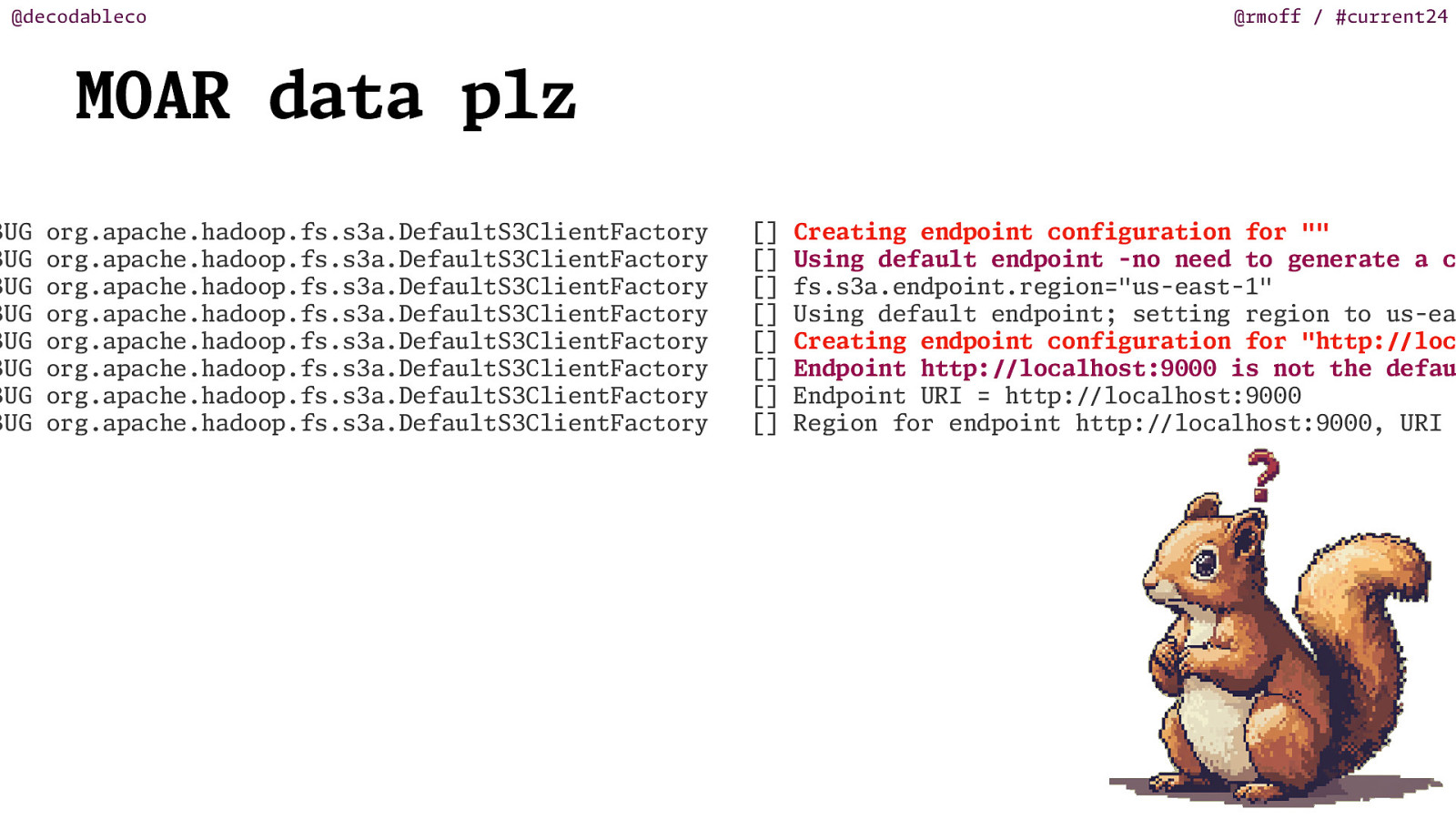

MOAR data plz

DEBUG org.apache.hadoop.fs.s3a.DefaultS3ClientFactory [] Creating endpoint configuration for ""
DEBUG org.apache.hadoop.fs.s3a.DefaultS3ClientFactory [] Using default endpoint -no need to generate a c
DEBUG org.apache.hadoop.fs.s3a.DefaultS3ClientFactory [] fs.s3a.endpoint.region="us-east-1"
DEBUG org.apache.hadoop.fs.s3a.DefaultS3ClientFactory [] Using default endpoint; setting region to us-ea
DEBUG org.apache.hadoop.fs.s3a.DefaultS3ClientFactory [] Creating endpoint configuration for "http://loc
DEBUG org.apache.hadoop.fs.s3a.DefaultS3ClientFactory [] Endpoint http://localhost:9000 is not the defau
DEBUG org.apache.hadoop.fs.s3a.DefaultS3ClientFactory [] Endpoint URI = http://localhost:9000
DEBUG org.apache.hadoop.fs.s3a.DefaultS3ClientFactory [] Region for endpoint http://localhost:9000, URI

Slide 37

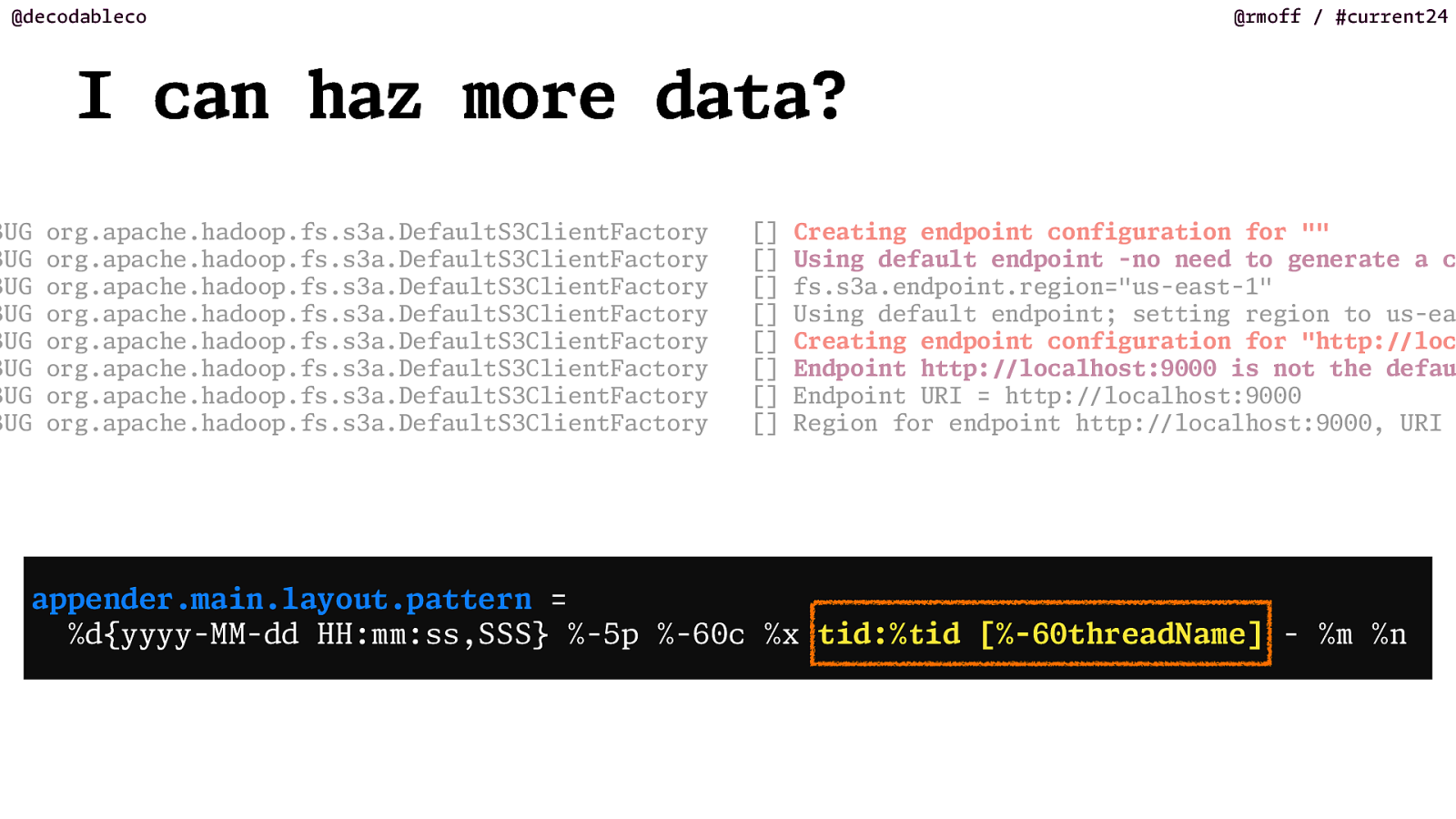

I can haz more data?

DEBUG org.apache.hadoop.fs.s3a.DefaultS3ClientFactory [] Creating endpoint configuration for ""
DEBUG org.apache.hadoop.fs.s3a.DefaultS3ClientFactory [] Using default endpoint -no need to generate a c
DEBUG org.apache.hadoop.fs.s3a.DefaultS3ClientFactory [] fs.s3a.endpoint.region="us-east-1"
DEBUG org.apache.hadoop.fs.s3a.DefaultS3ClientFactory [] Using default endpoint; setting region to us-ea
DEBUG org.apache.hadoop.fs.s3a.DefaultS3ClientFactory [] Creating endpoint configuration for "http:
DEBUG org.apache.hadoop.fs.s3a.DefaultS3ClientFactory [] Endpoint http:
DEBUG org.apache.hadoop.fs.s3a.DefaultS3ClientFactory [] Endpoint URI = http:
DEBUG org.apache.hadoop.fs.s3a.DefaultS3ClientFactory [] Region for endpoint http:

appender.[…]

Slide 38

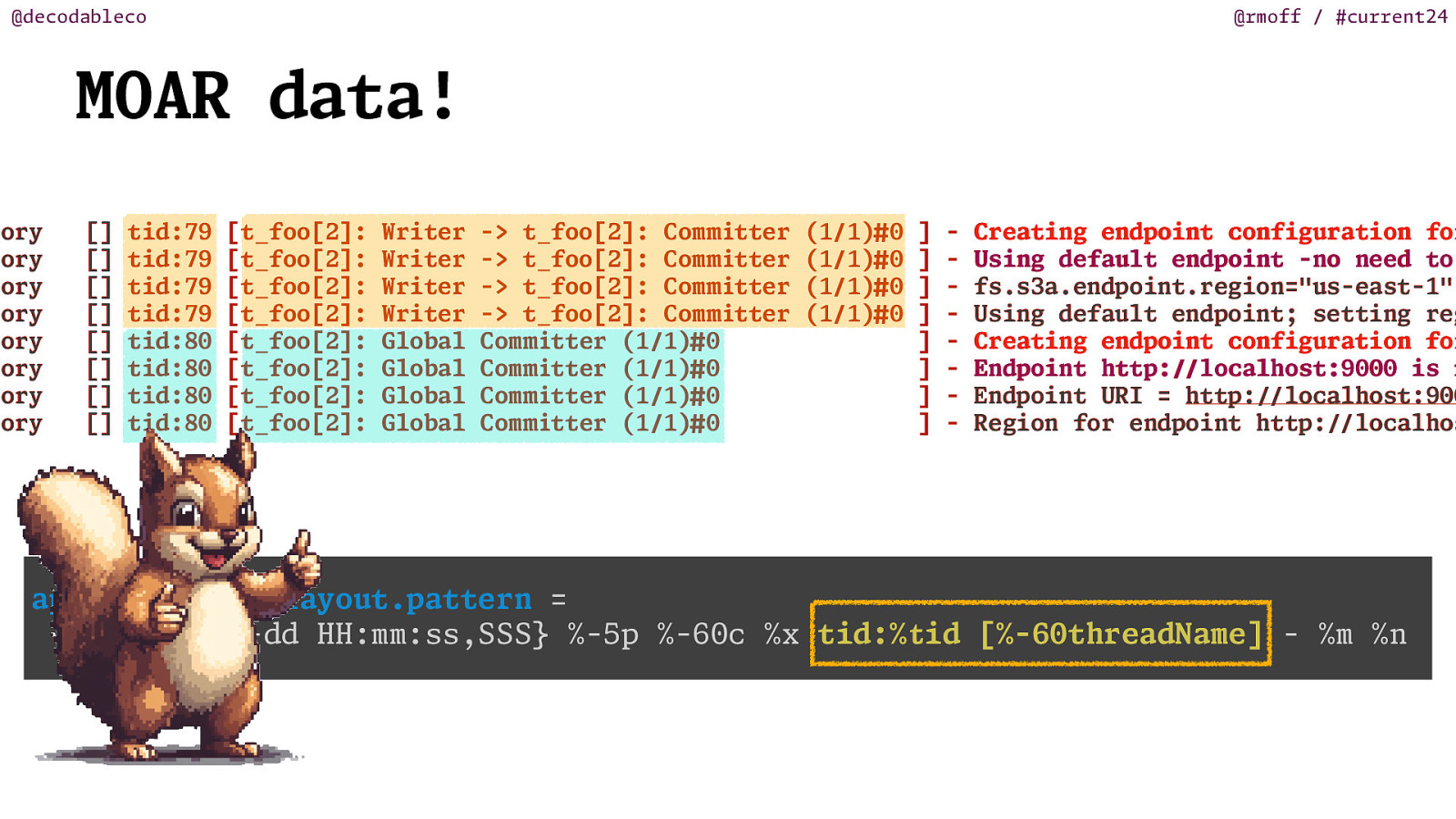

MOAR data!

[…]tory [] tid:79 [t_foo[2]: Writer -> t_foo[2]: Committer (1/1)#0] Creating endpoint configuration for
[…]tory [] tid:79 [t_foo[2]: Writer -> t_foo[2]: Committer (1/1)#0] Using default endpoint -no need to
[…]tory [] tid:79 [t_foo[2]: Writer -> t_foo[2]: Committer (1/1)#0] fs.s3a.endpoint.region="us-east-1"
[…]tory [] tid:79 [t_foo[2]: Writer -> t_foo[2]: Committer (1/1)#0] Using default endpoint; setting reg
[…]tory [] tid:80 [t_foo[2]: Global Committer (1/1)#0] Creating endpoint configuration for
[…]tory [] tid:80 [t_foo[2]: Global Committer (1/1)#0] Endpoint http://localhost:9000 is n
[…]tory [] tid:80 [t_foo[2]: Global Committer (1/1)#0] Endpoint URI = http://localhost:900
[…]tory [] tid:80 [t_foo[2]: Global Committer (1/1)#0] Region for endpoint http://localhos

appender.[…]

Slide 39

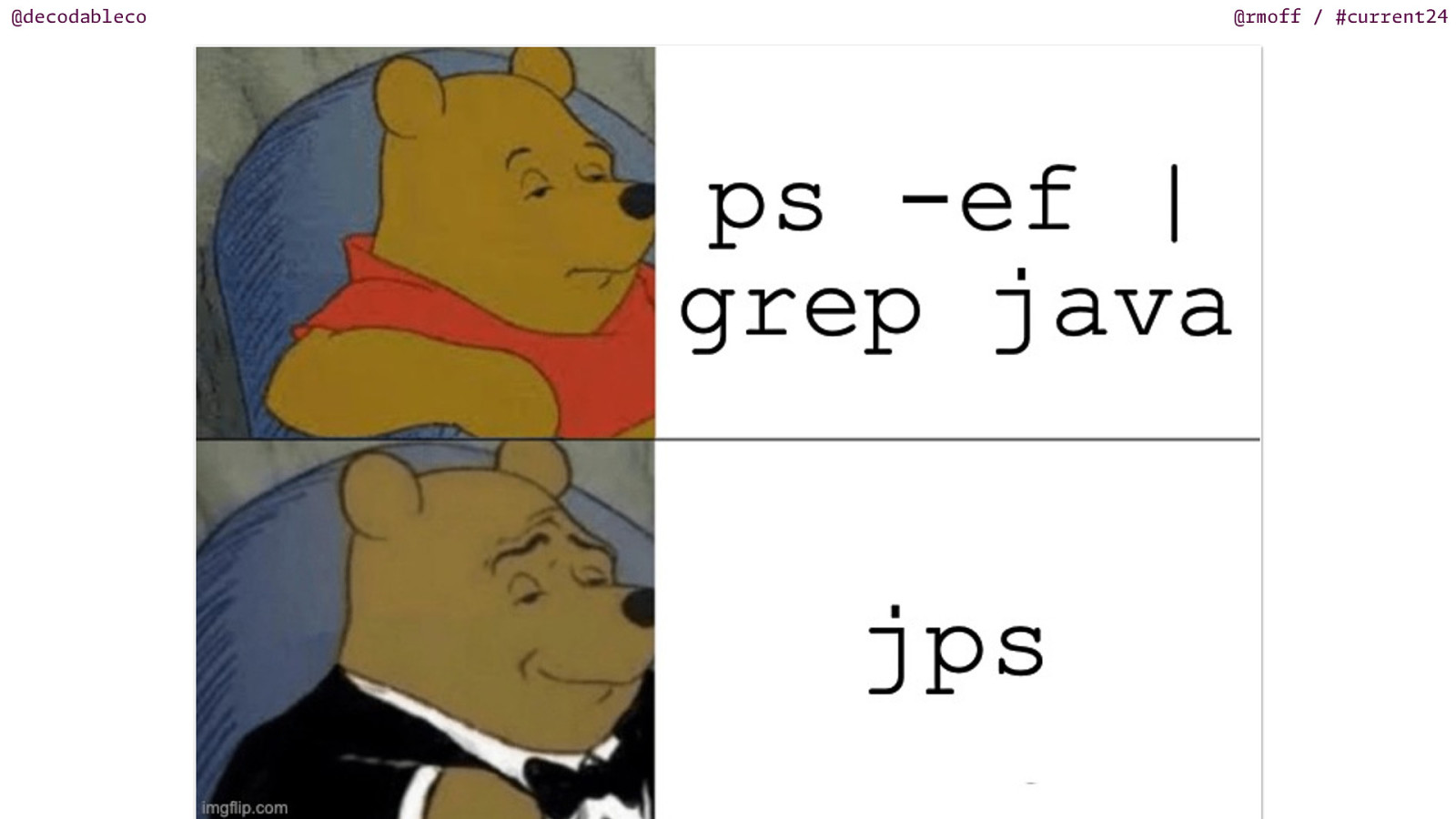

Java Tools

Slide 40


Slide 41


Slide 42

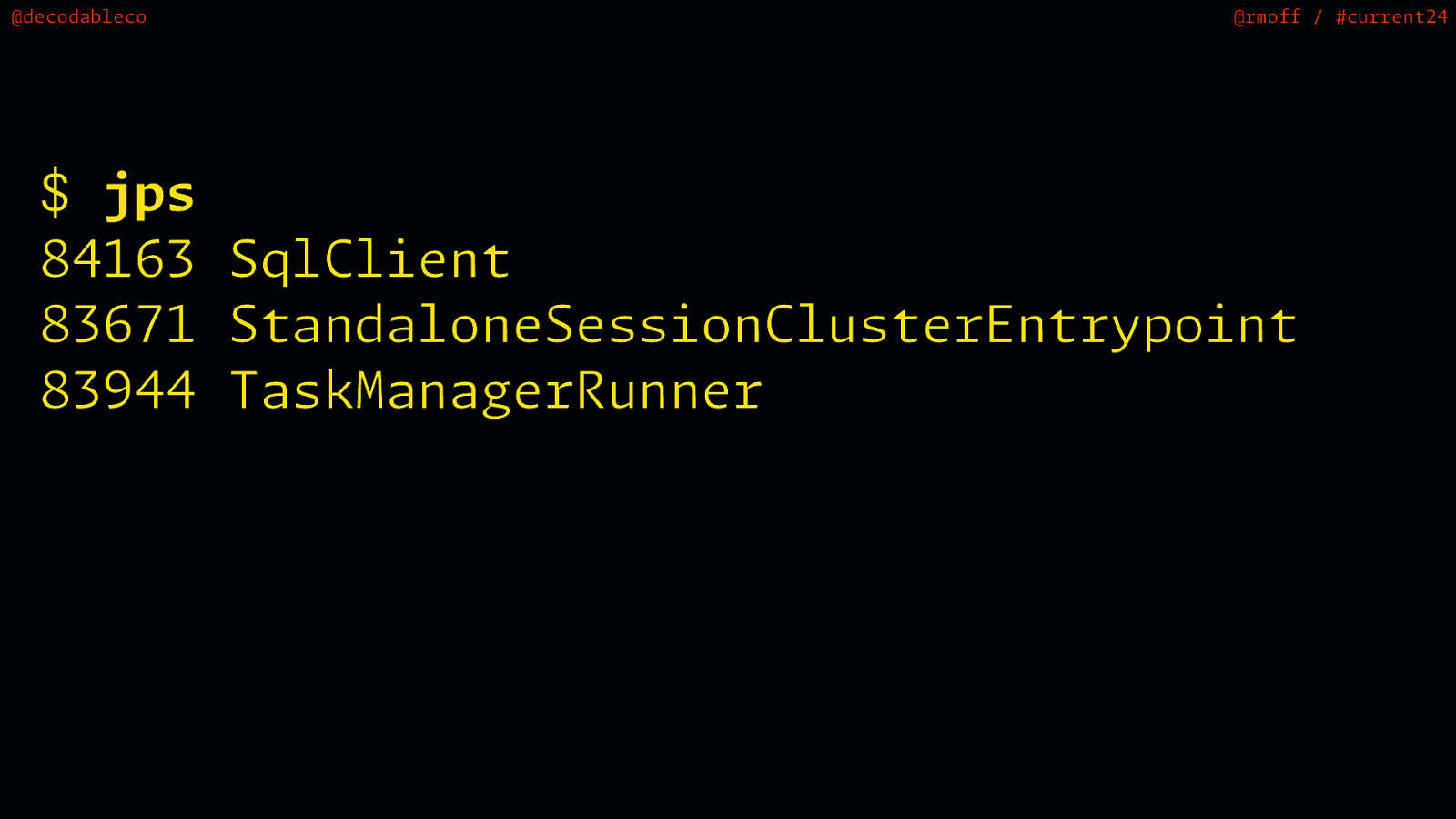

$ jps
84163 SqlClient
83671 StandaloneSessionClusterEntrypoint
83944 TaskManagerRunner

Slide 43

$ jinfo 84163

Slide 44

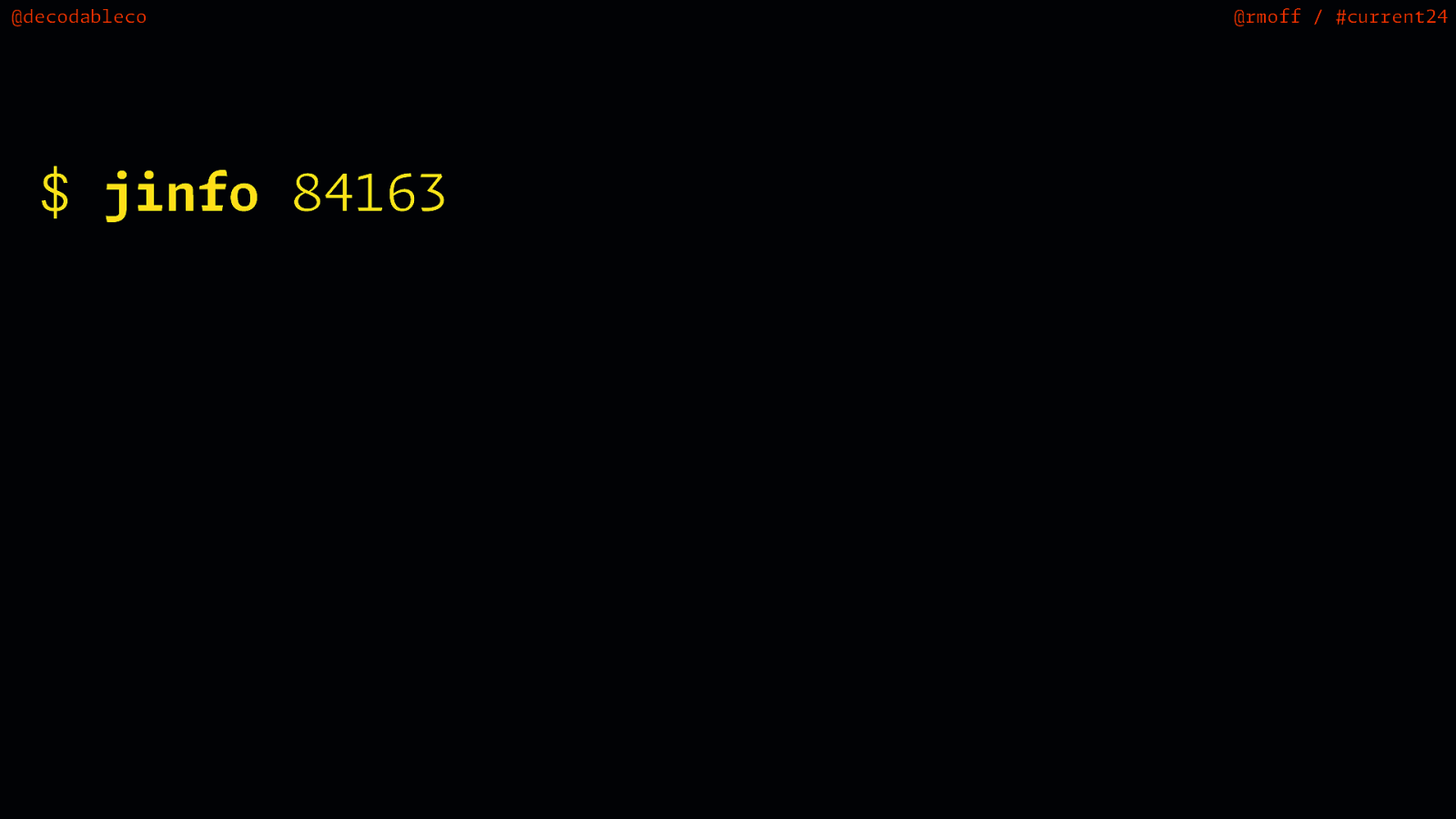

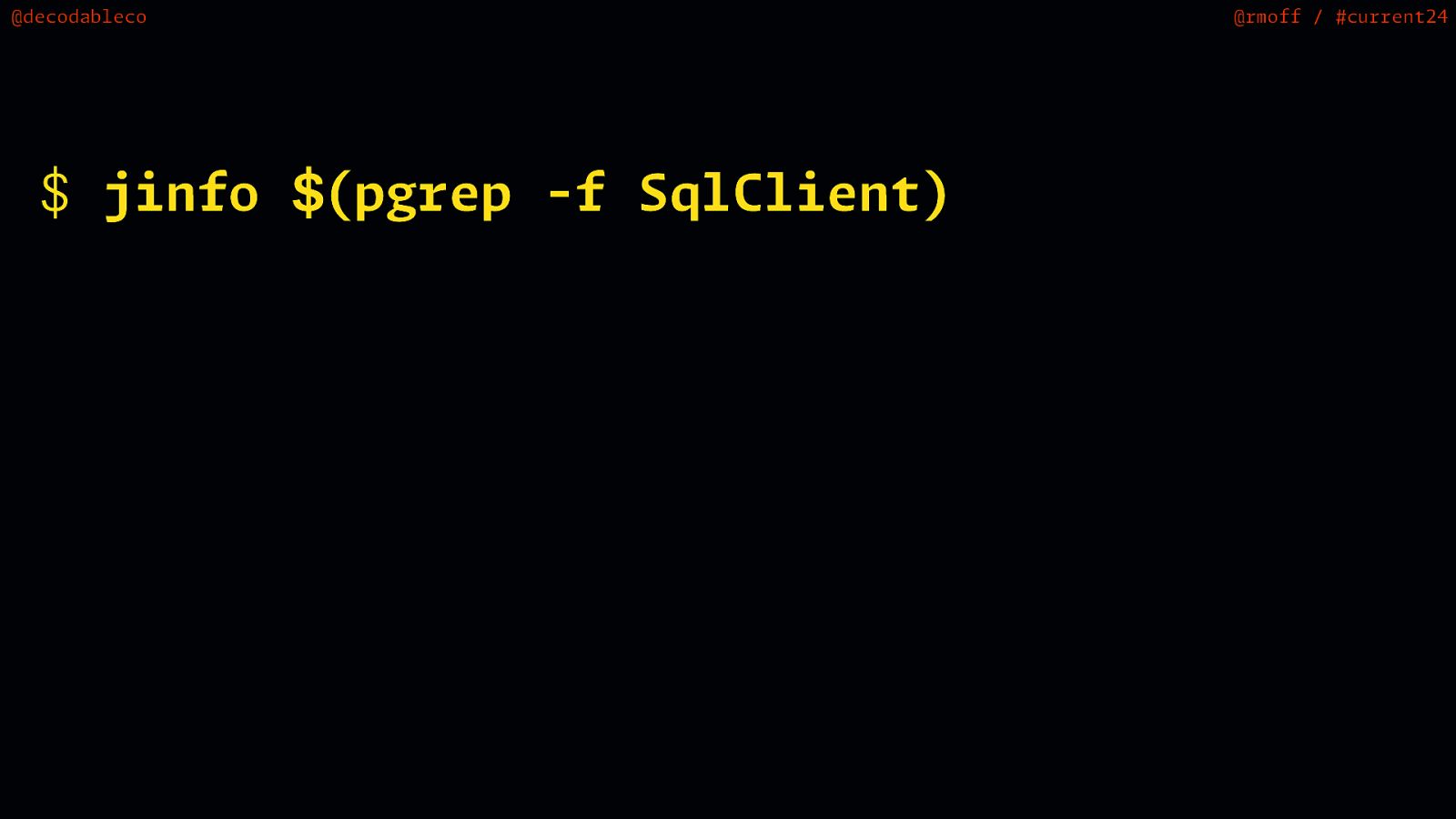

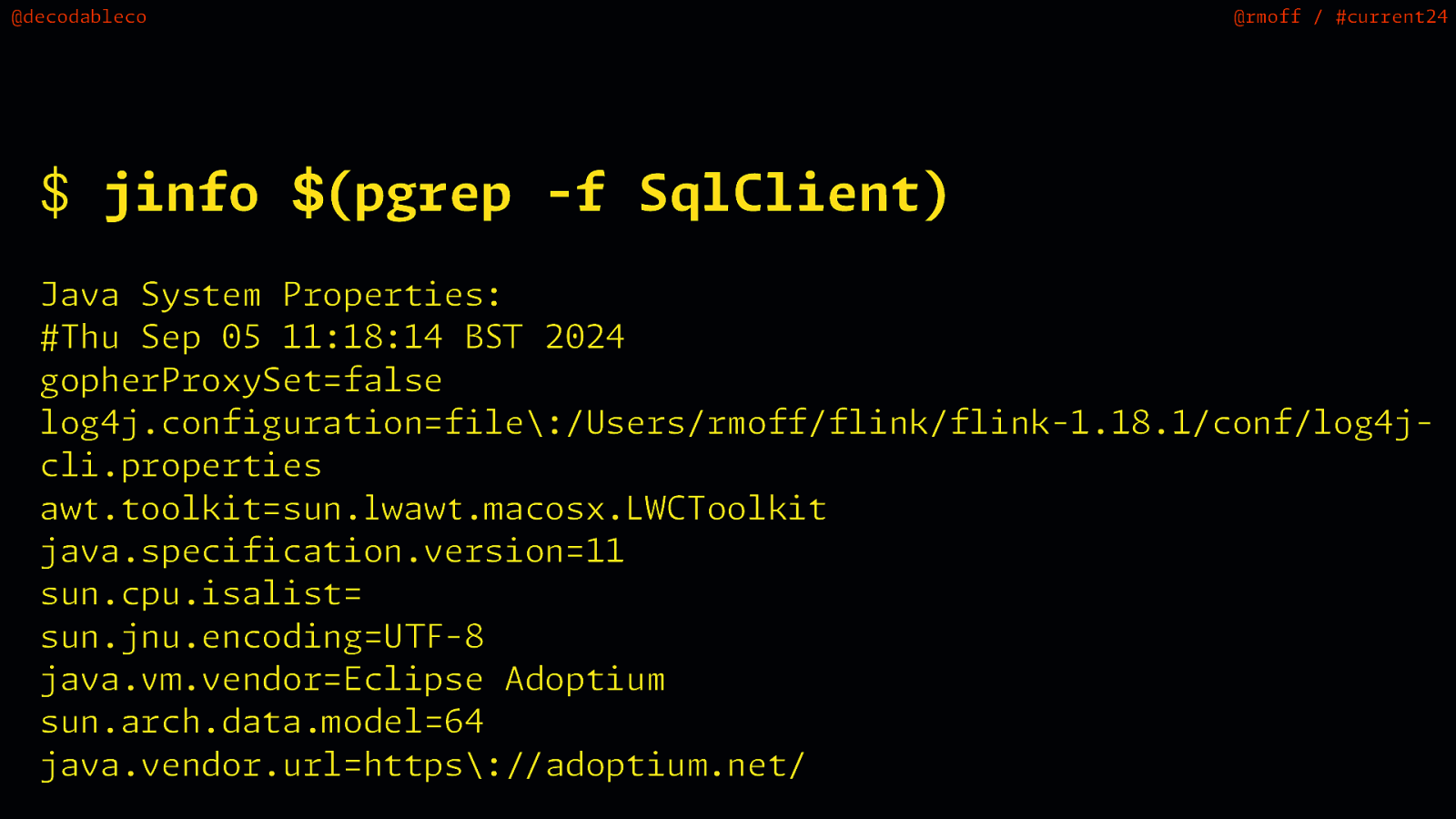

$ jinfo $(pgrep -f SqlClient)

Slide 45

$ jinfo $(pgrep -f SqlClient)
Java System Properties:
#Thu Sep 05 11:18:14 BST 2024
gopherProxySet=false
log4j.configuration=file:/Users/rmoff/flink/flink-1.18.1/conf/log4j-cli.properties
awt.toolkit=sun.lwawt.macosx.LWCToolkit
java.specification.version=11
sun.cpu.isalist=
sun.jnu.encoding=UTF-8
java.vm.vendor=Eclipse Adoptium
sun.arch.data.model=64
java.vendor.url=https://adoptium.net/

Slide 46

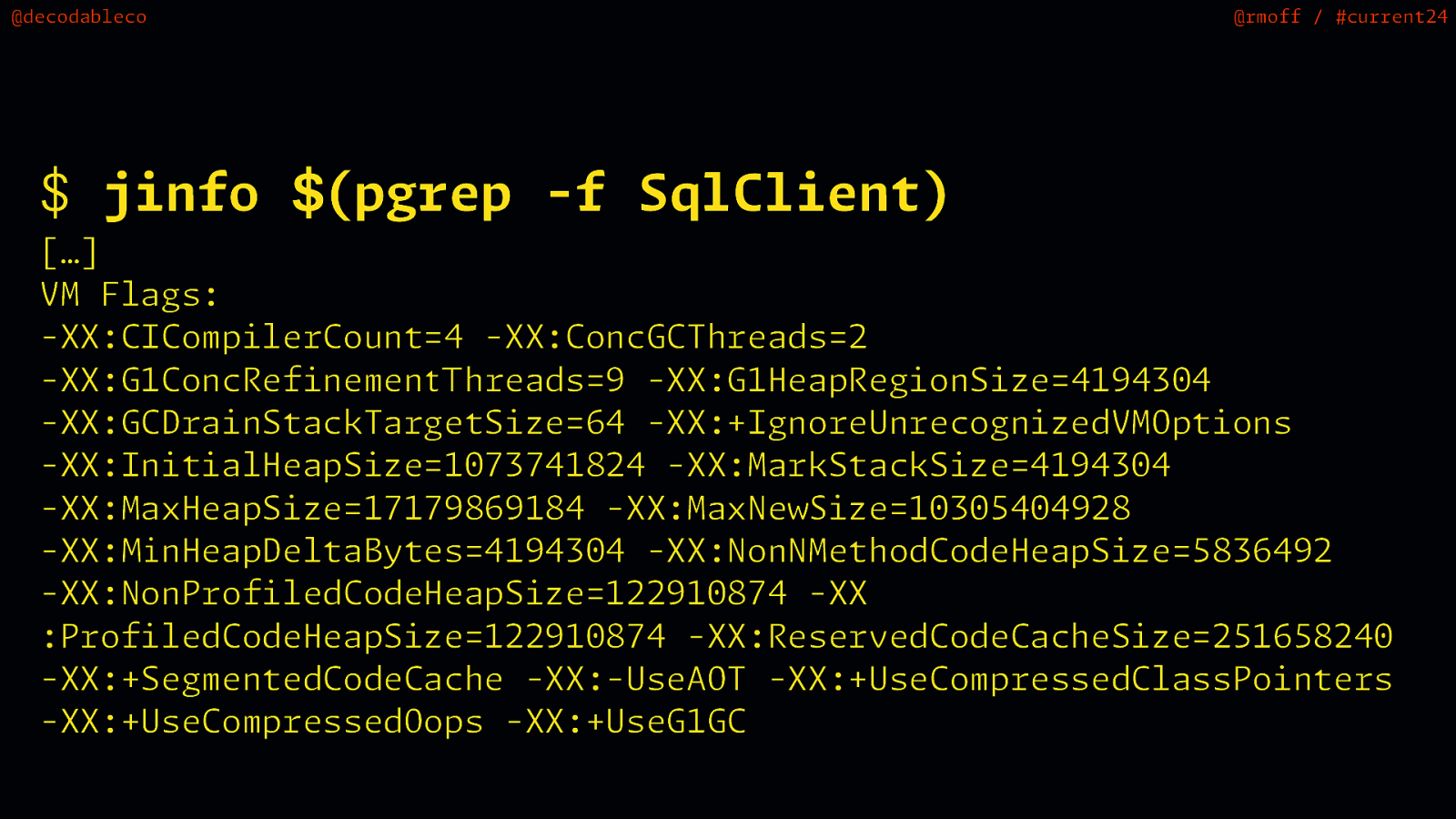

$ jinfo $(pgrep -f SqlClient)
[…]
VM Flags:
-XX:CICompilerCount=4 -XX:ConcGCThreads=2 -XX:G1ConcRefinementThreads=9 -XX:G1HeapRegionSize=4194304 -XX:GCDrainStackTargetSize=64 -XX:+IgnoreUnrecognizedVMOptions -XX:InitialHeapSize=1073741824 -XX:MarkStackSize=4194304 -XX:MaxHeapSize=17179869184 -XX:MaxNewSize=10305404928 -XX:MinHeapDeltaBytes=4194304 -XX:NonNMethodCodeHeapSize=5836492 -XX:NonProfiledCodeHeapSize=122910874 -XX:ProfiledCodeHeapSize=122910874 -XX:ReservedCodeCacheSize=251658240 -XX:+SegmentedCodeCache -XX:-UseAOT -XX:+UseCompressedClassPointers -XX:+UseCompressedOops -XX:+UseG1GC

Slide 47

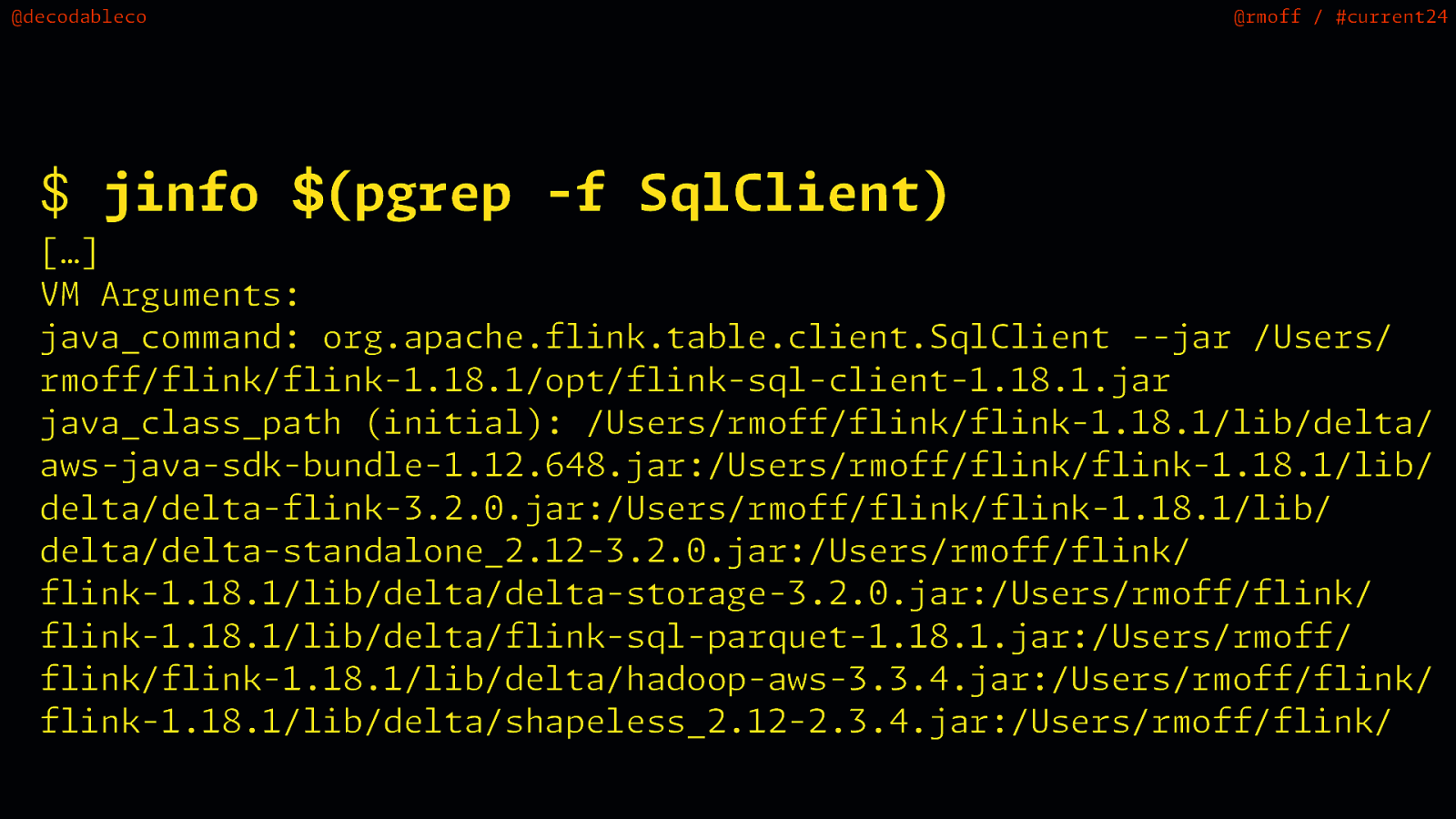

$ jinfo $(pgrep -f SqlClient)
[…]
VM Arguments:
java_command: org.apache.flink.table.client.SqlClient --jar /Users/rmoff/flink/flink-1.18.1/opt/flink-sql-client-1.18.1.jar
java_class_path (initial): /Users/rmoff/flink/flink-1.18.1/lib/delta/aws-java-sdk-bundle-1.12.648.jar:/Users/rmoff/flink/flink-1.18.1/lib/delta/delta-flink-3.2.0.jar:/Users/rmoff/flink/flink-1.18.1/lib/delta/delta-standalone_2.12-3.2.0.jar:/Users/rmoff/flink/flink-1.18.1/lib/delta/delta-storage-3.2.0.jar:/Users/rmoff/flink/flink-1.18.1/lib/delta/flink-sql-parquet-1.18.1.jar:/Users/rmoff/flink/flink-1.18.1/lib/delta/hadoop-aws-3.3.4.jar:/Users/rmoff/flink/flink-1.18.1/lib/delta/shapeless_2.12-2.3.4.jar:/Users/rmoff/flink/

Slide 48

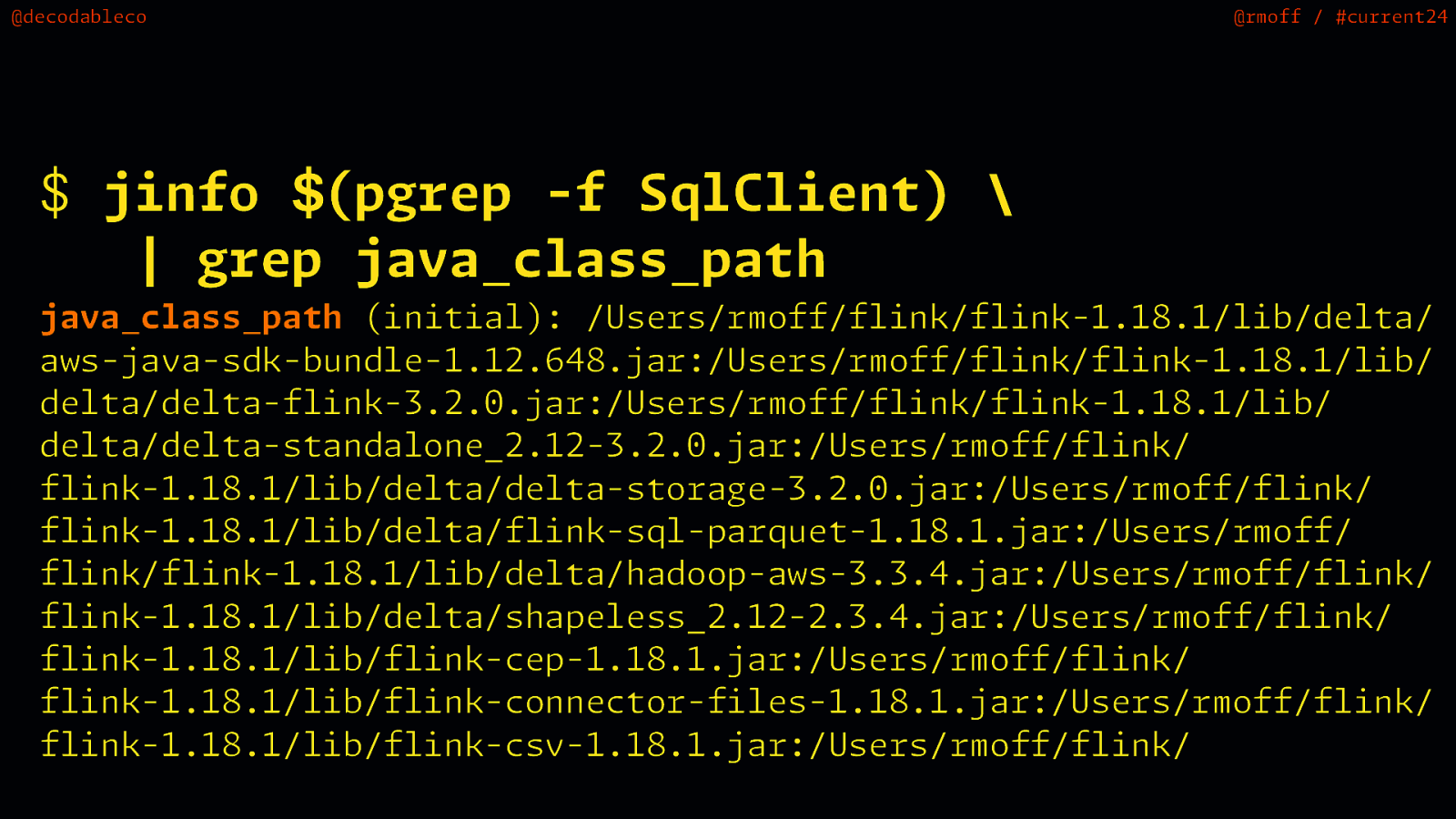

$ jinfo $(pgrep -f SqlClient) \
    | grep java_class_path
java_class_path (initial): /Users/rmoff/flink/flink-1.18.1/lib/delta/aws-java-sdk-bundle-1.12.648.jar:/Users/rmoff/flink/flink-1.18.1/lib/delta/delta-flink-3.2.0.jar:/Users/rmoff/flink/flink-1.18.1/lib/delta/delta-standalone_2.12-3.2.0.jar:/Users/rmoff/flink/flink-1.18.1/lib/delta/delta-storage-3.2.0.jar:/Users/rmoff/flink/flink-1.18.1/lib/delta/flink-sql-parquet-1.18.1.jar:/Users/rmoff/flink/flink-1.18.1/lib/delta/hadoop-aws-3.3.4.jar:/Users/rmoff/flink/flink-1.18.1/lib/delta/shapeless_2.12-2.3.4.jar:/Users/rmoff/flink/flink-1.18.1/lib/flink-cep-1.18.1.jar:/Users/rmoff/flink/flink-1.18.1/lib/flink-connector-files-1.18.1.jar:/Users/rmoff/flink/flink-1.18.1/lib/flink-csv-1.18.1.jar:/Users/rmoff/flink/
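A colon-separated classpath this long is hard to scan by eye. As a small sketch, the hypothetical filter `classpath_entries` below strips the `java_class_path (initial):` label and prints one entry per line; it assumes no path on the classpath itself contains a colon.

```shell
# Read a "java_class_path (initial): a.jar:b.jar" line on stdin and print one entry per line.
classpath_entries() {
  sed 's/^.*java_class_path (initial): //' | tr ':' '\n' | sed '/^$/d'
}
```

It can be appended to the pipeline above, e.g. `jinfo $(pgrep -f SqlClient) | grep java_class_path | classpath_entries`, and the result piped on to `grep` to check whether a particular JAR was picked up.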

Slide 49

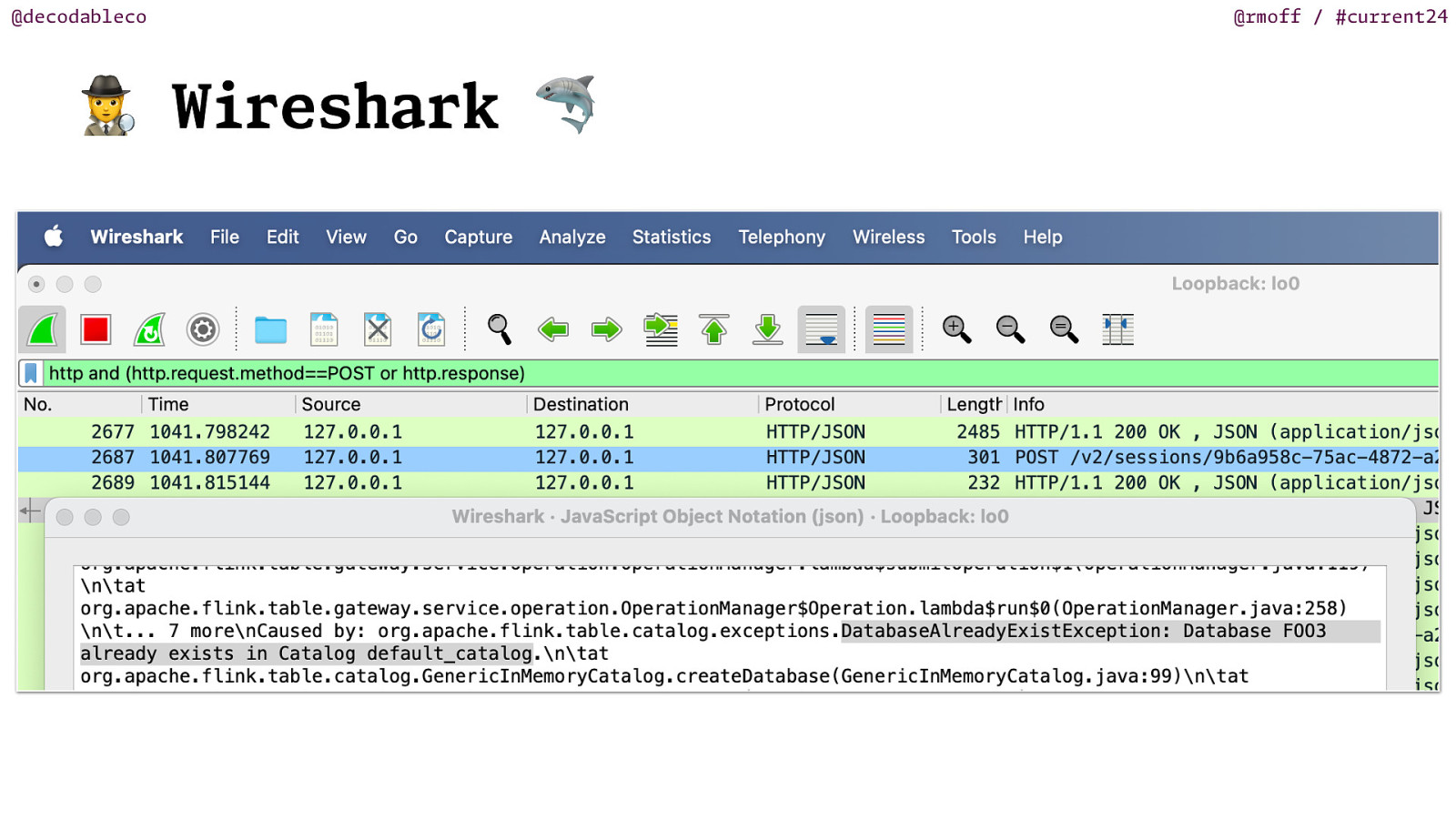

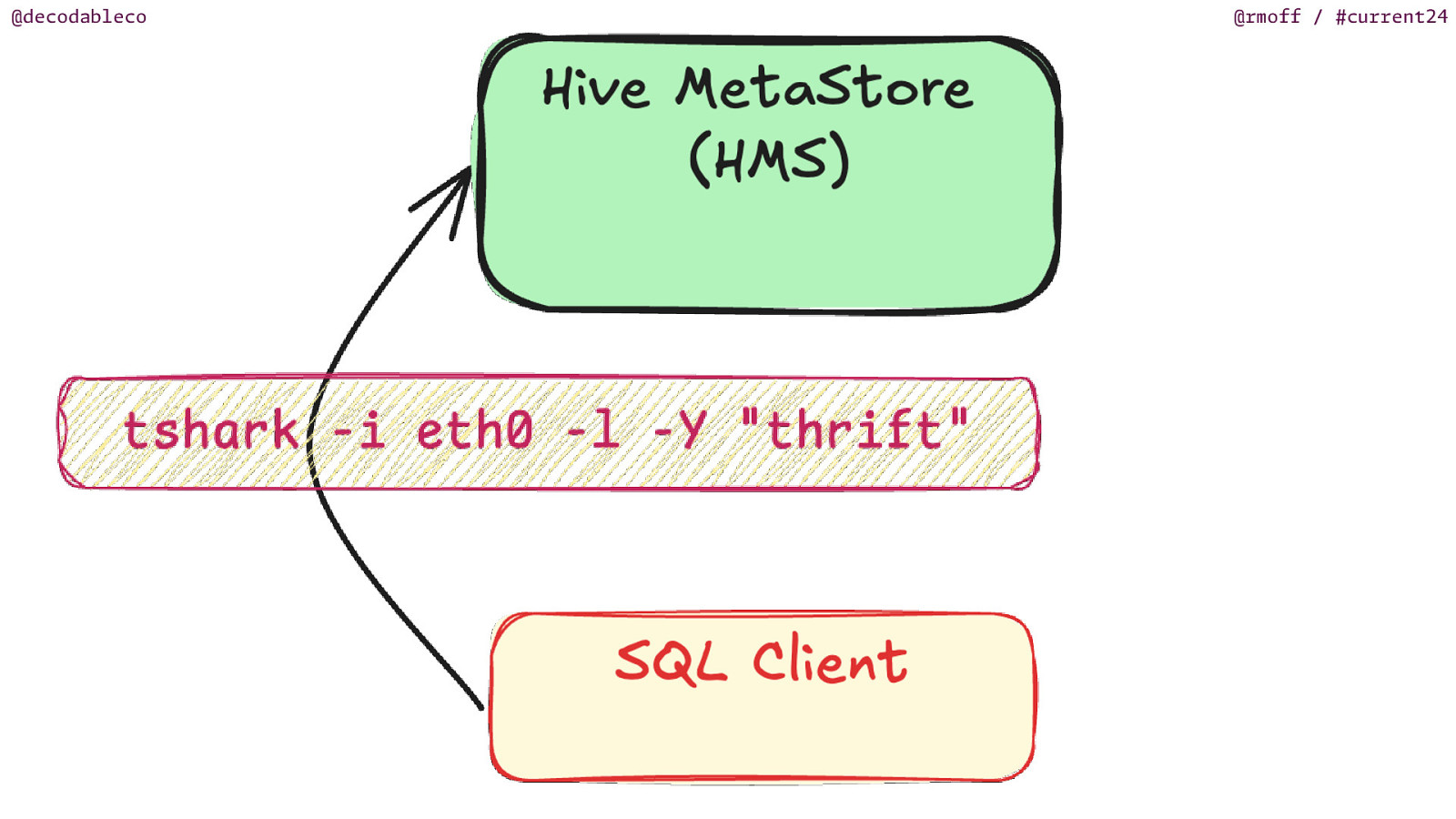

🕵 Wireshark 🦈

Slide 50

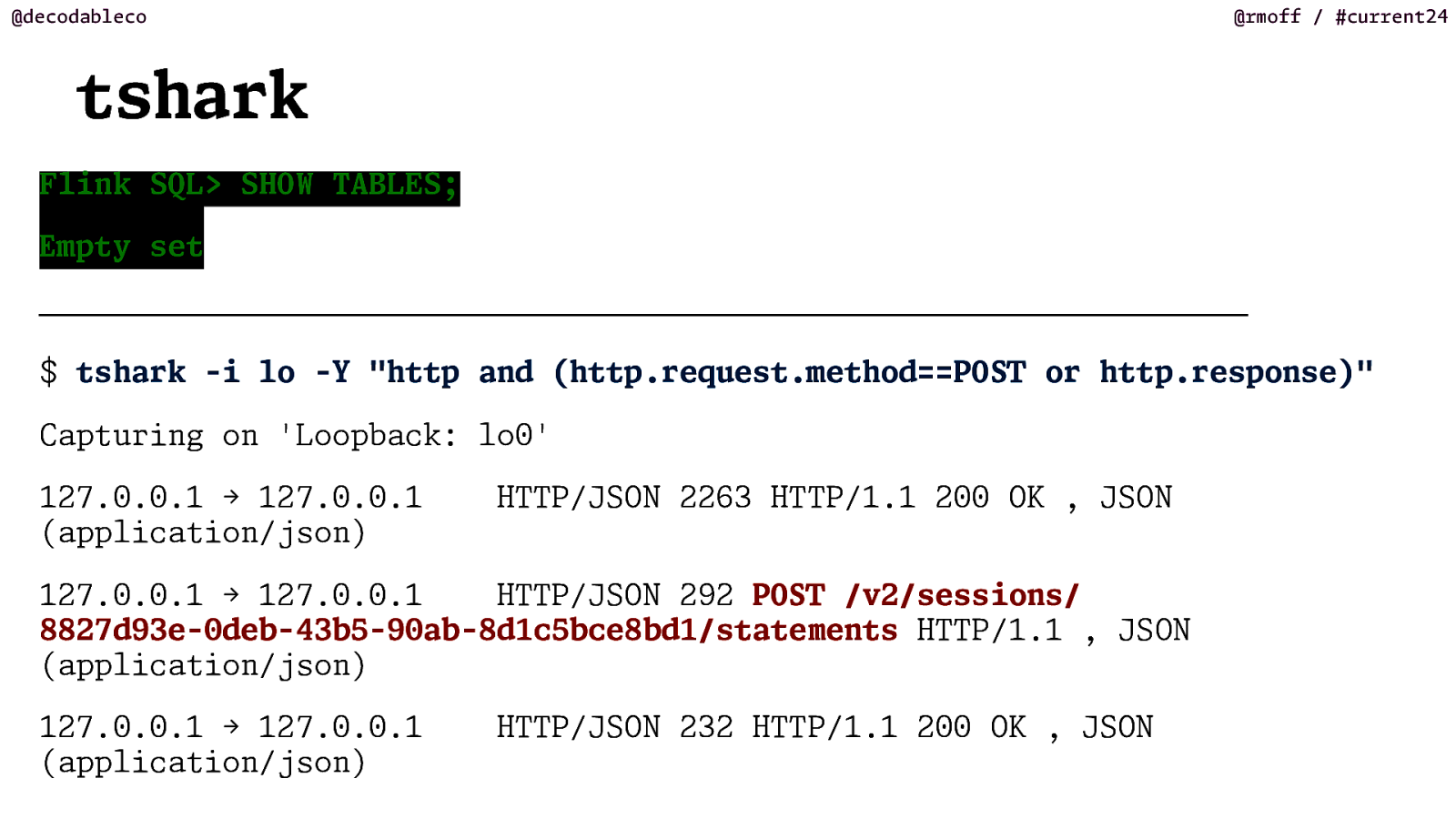

tshark

Flink SQL> SHOW TABLES;
Empty set

─────────────────────

$ tshark -i lo -Y "http and (http.request.method==POST or http.response)"
Capturing on 'Loopback: lo0'
127.0.0.1 → 127.0.0.1 HTTP/JSON 2263 HTTP/1.1 200 OK , JSON (application/json)
127.0.0.1 → 127.0.0.1 HTTP/JSON 292 POST /v2/sessions/8827d93e-0deb-43b5-90ab-8d1c5bce8bd1/statements HTTP/1.1 , JSON (application/json)
127.0.0.1 → 127.0.0.1 HTTP/JSON 232 HTTP/1.1 200 OK , JSON (application/json)

Slide 51

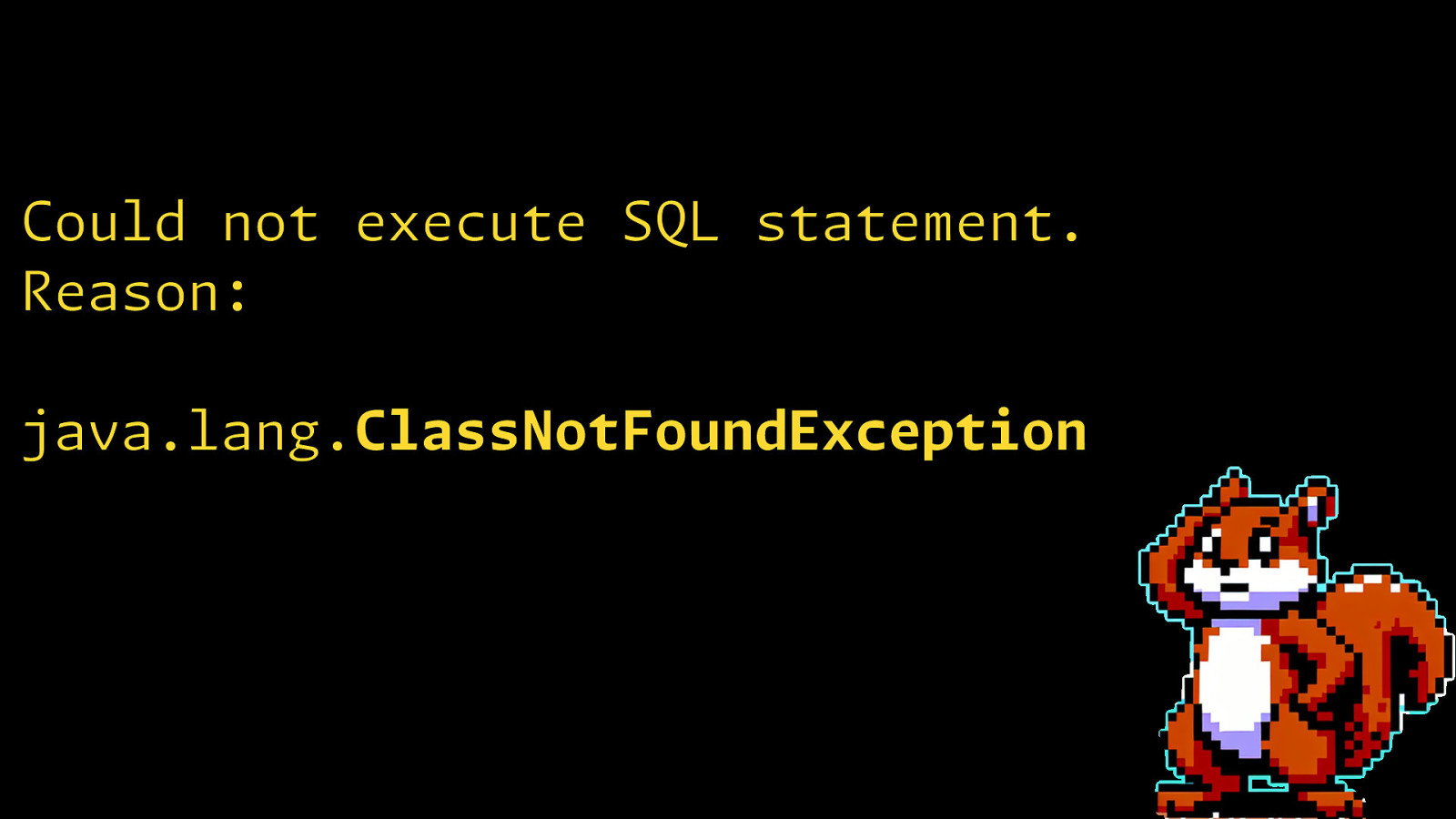

Could not execute SQL statement. Reason: java.lang.ClassNotFoundException

Slide 52


Slide 53

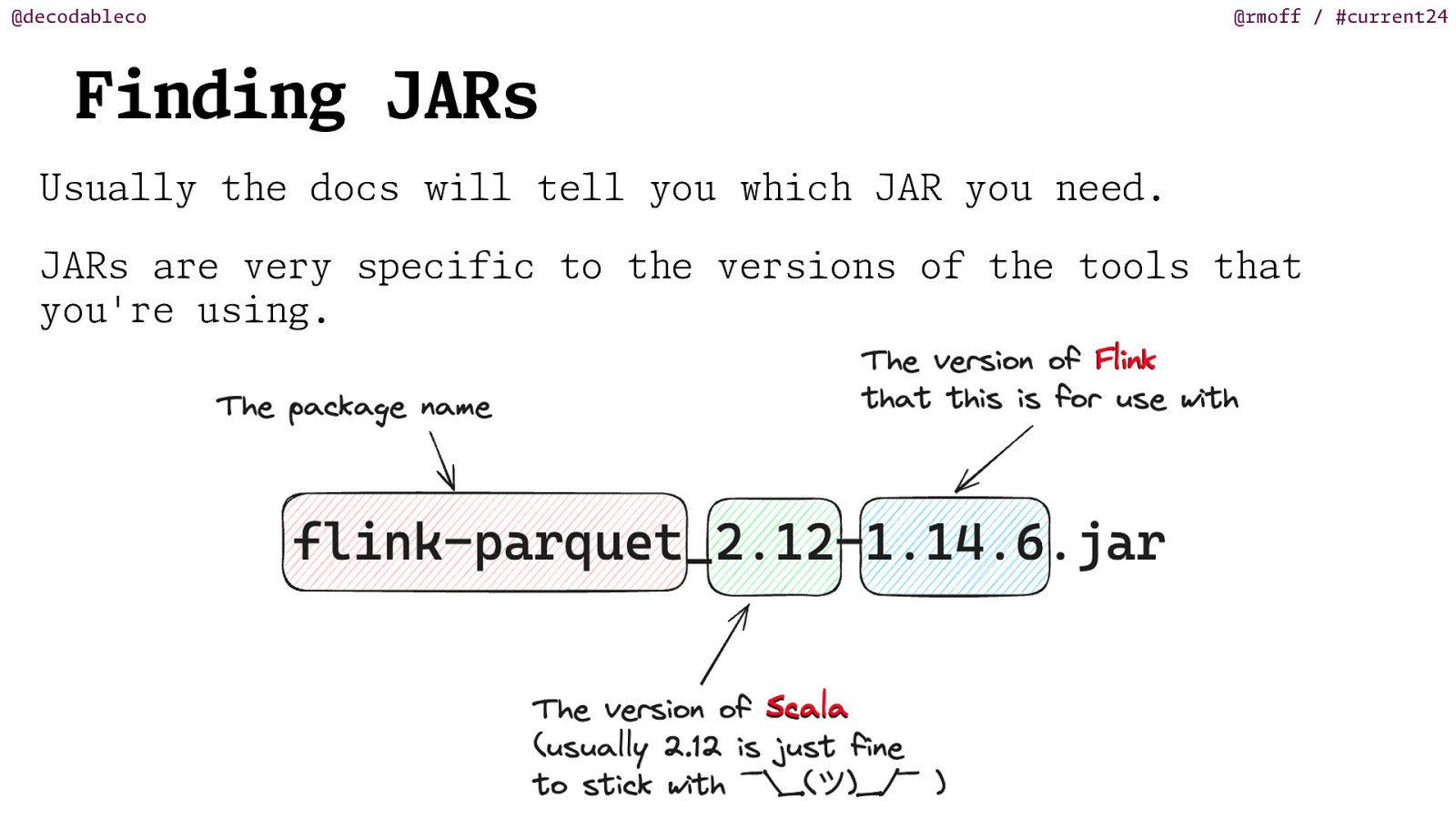

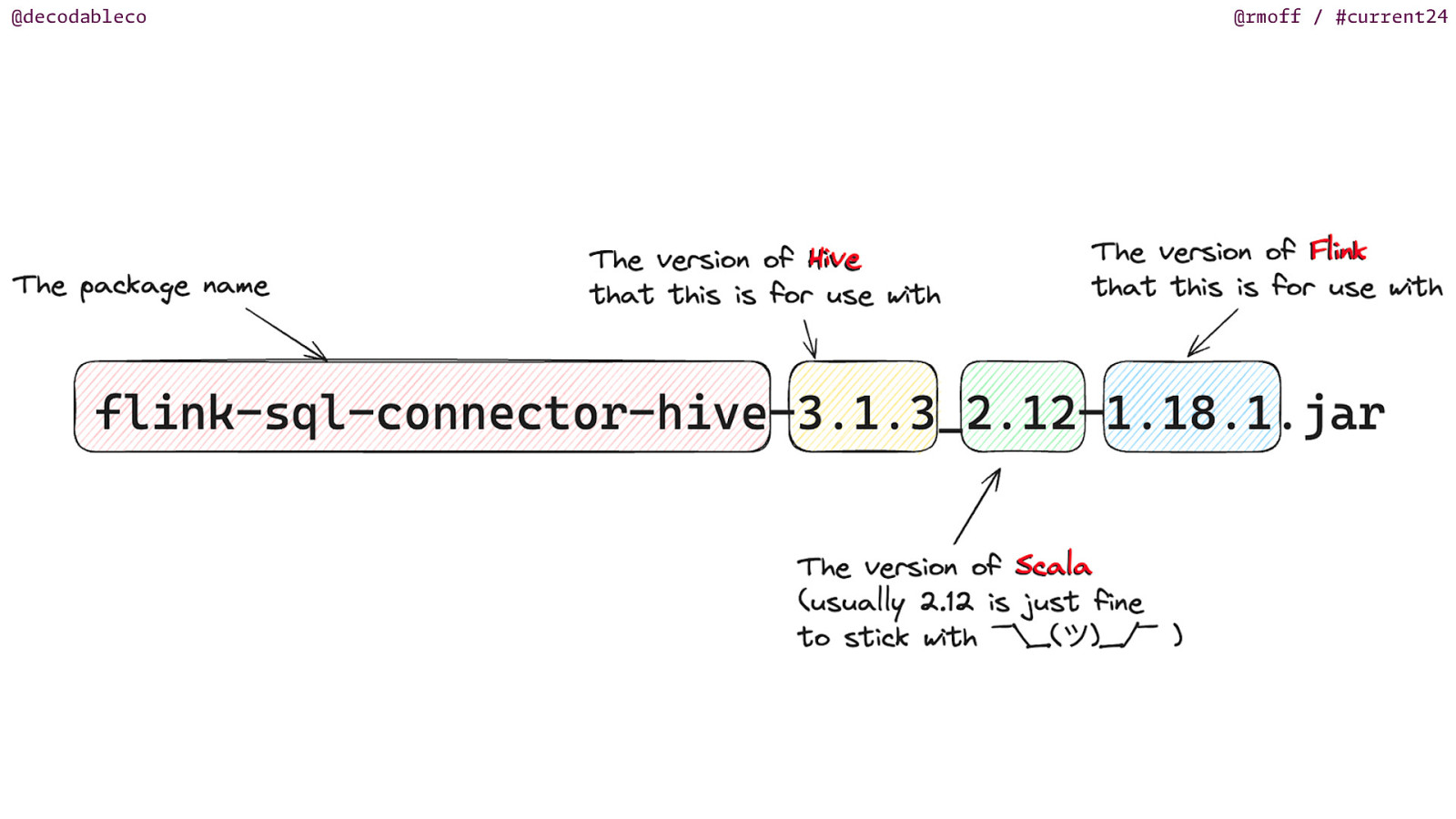

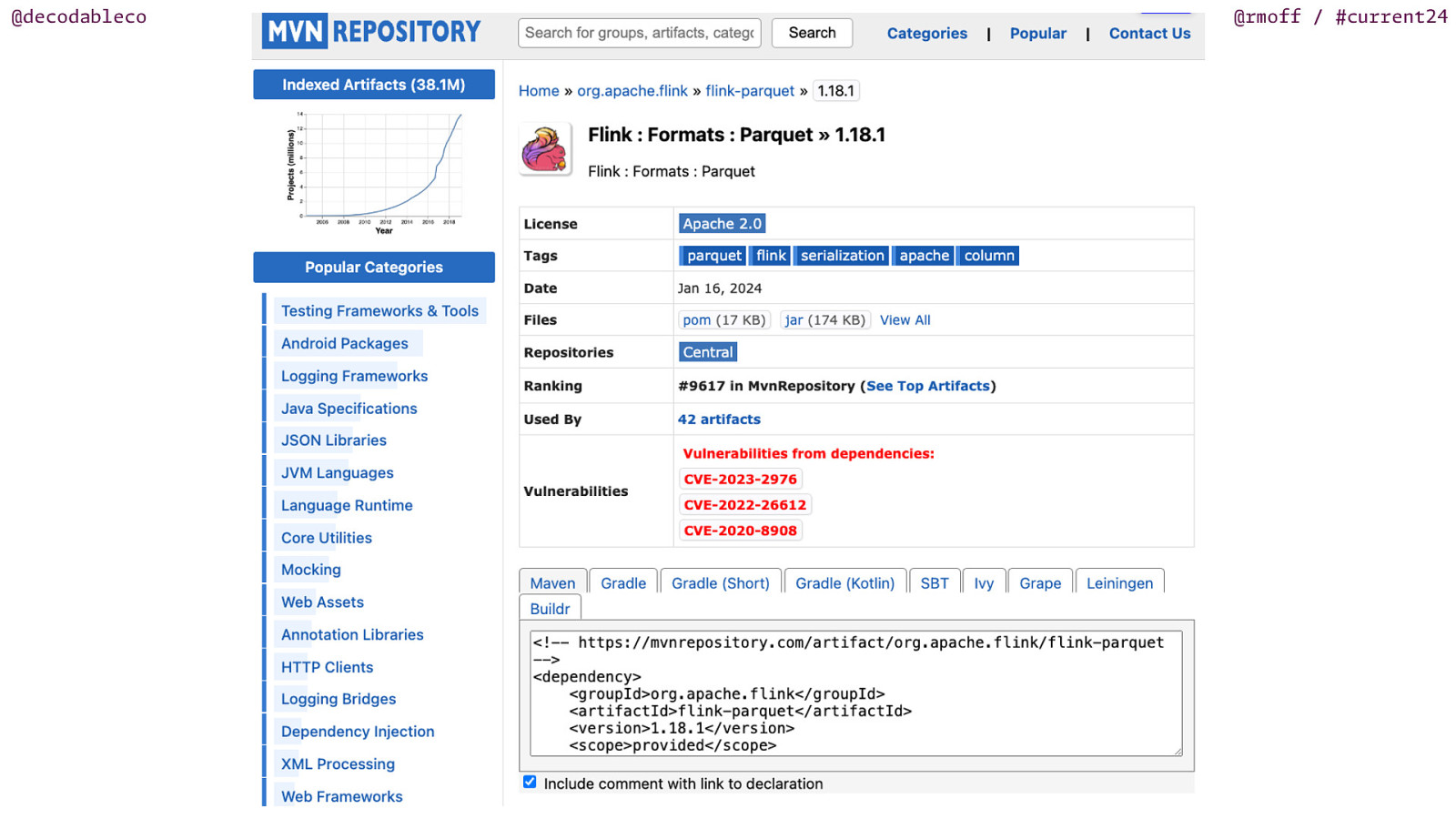

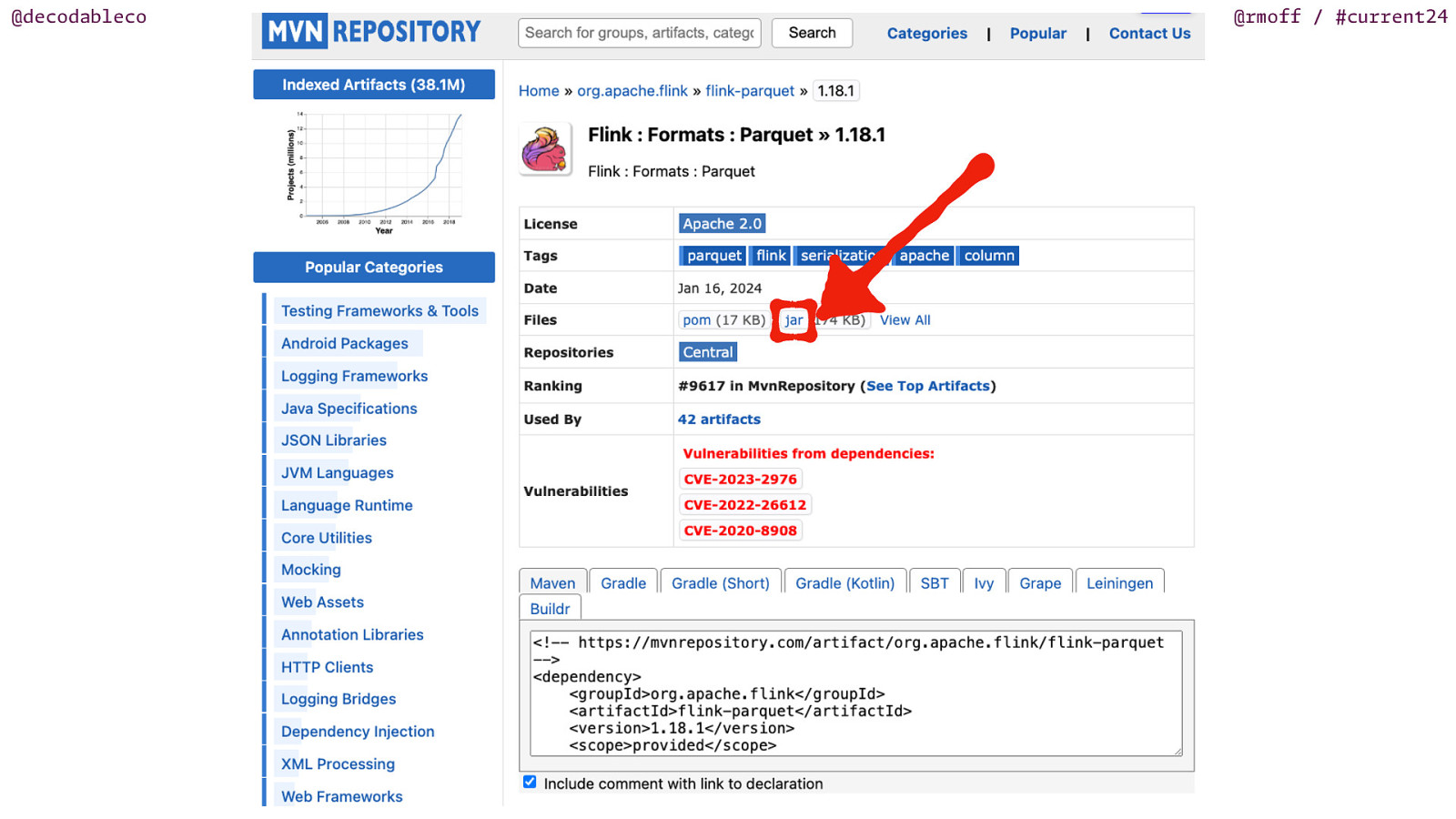

Finding JARs

Usually the docs will tell you which JAR you need.

JARs are very specific to the versions of the tools that you're using.

Slide 54


Slide 55


Slide 56


Slide 57

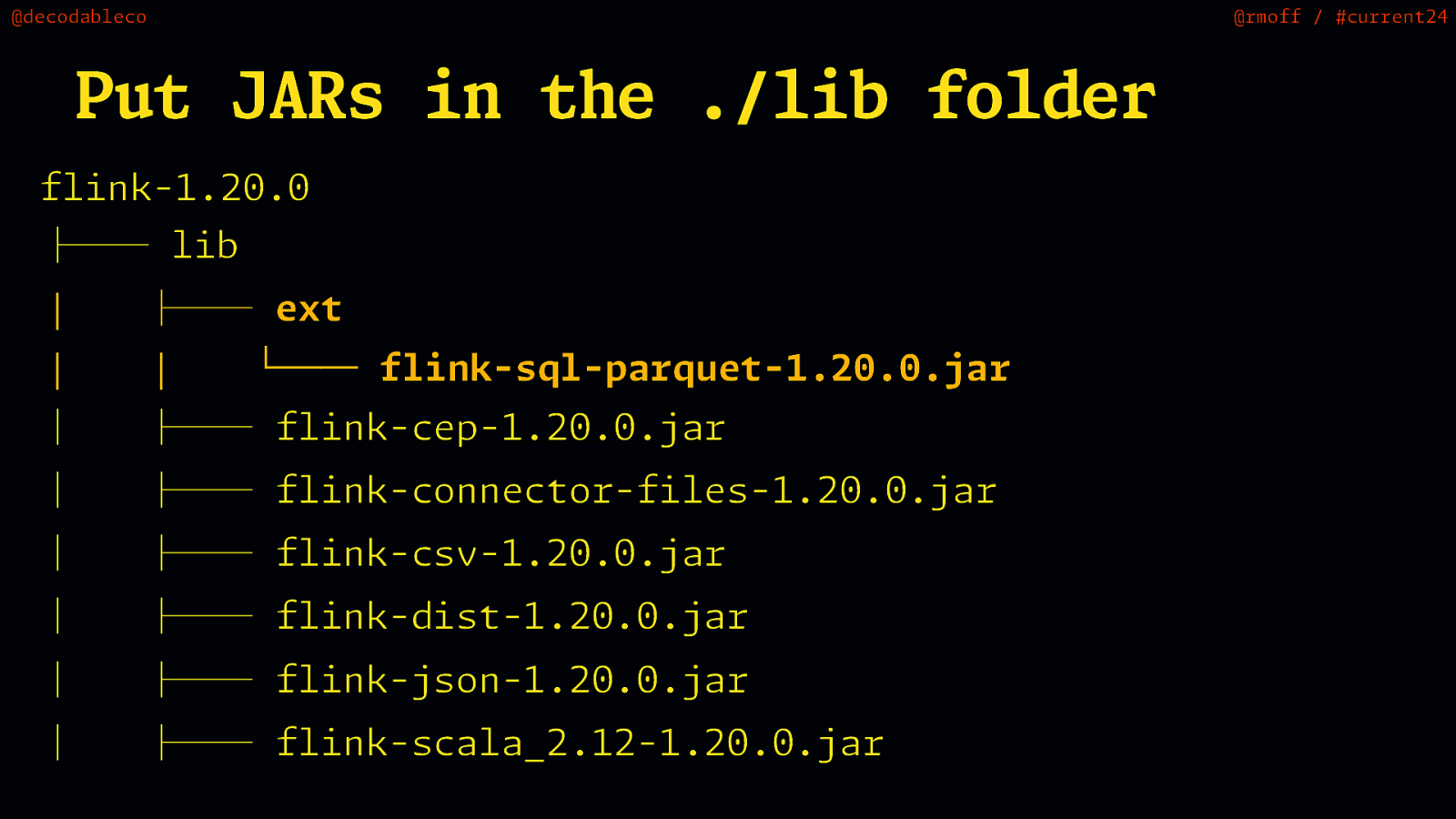

Put JARs in the ./lib folder

flink-1.20.0
├── lib
│   ├── ext
│   │   └── flink-sql-parquet-1.20.0.jar
│   ├── flink-cep-1.20.0.jar
│   ├── flink-connector-files-1.20.0.jar
│   ├── flink-csv-1.20.0.jar
│   ├── flink-dist-1.20.0.jar
│   ├── flink-json-1.20.0.jar
│   ├── flink-scala_2.12-1.20.0.jar

Slide 58

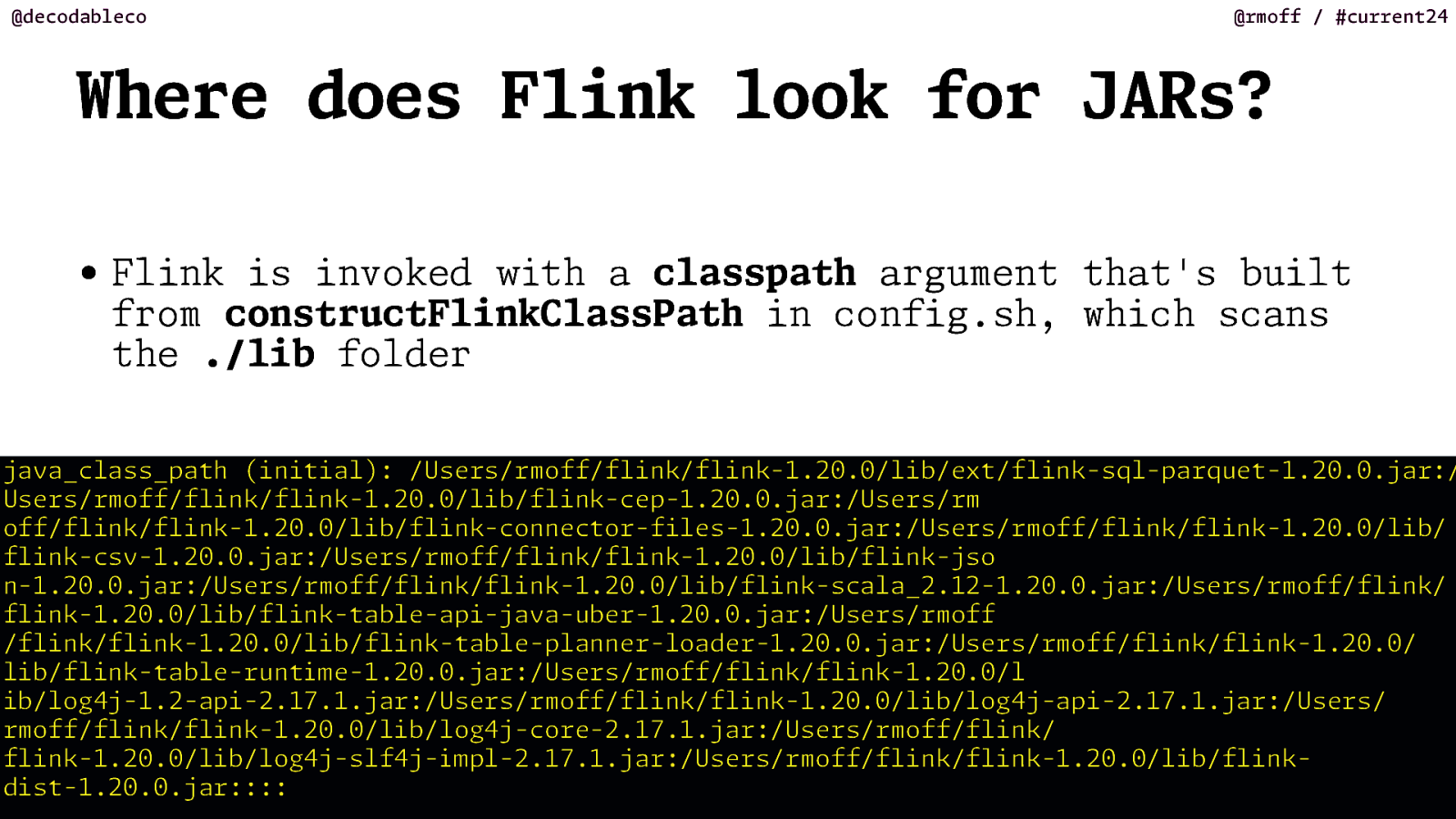

Where does Flink look for JARs?

• Flink is invoked with a classpath argument that's built from constructFlinkClassPath in config.sh, which scans the ./lib folder

java_class_path (initial): /Users/rmoff/flink/flink-1.20.0/lib/ext/flink-sql-parquet-1.20.0.jar:/Users/rmoff/flink/flink-1.20.0/lib/flink-cep-1.20.0.jar:/Users/rmoff/flink/flink-1.20.0/lib/flink-connector-files-1.20.0.jar:/Users/rmoff/flink/flink-1.20.0/lib/flink-csv-1.20.0.jar:/Users/rmoff/flink/flink-1.20.0/lib/flink-json-1.20.0.jar:/Users/rmoff/flink/flink-1.20.0/lib/flink-scala_2.12-1.20.0.jar:/Users/rmoff/flink/flink-1.20.0/lib/flink-table-api-java-uber-1.20.0.jar:/Users/rmoff/flink/flink-1.20.0/lib/flink-table-planner-loader-1.20.0.jar:/Users/rmoff/flink/flink-1.20.0/lib/flink-table-runtime-1.20.0.jar:/Users/rmoff/flink/flink-1.20.0/lib/log4j-1.2-api-2.17.1.jar:/Users/rmoff/flink/flink-1.20.0/lib/log4j-api-2.17.1.jar:/Users/rmoff/flink/flink-1.20.0/lib/log4j-core-2.17.1.jar:/Users/rmoff/flink/flink-1.20.0/lib/log4j-slf4j-impl-2.17.1.jar:/Users/rmoff/flink/flink-1.20.0/lib/flink-dist-1.20.0.jar::::
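The classpath above suggests the shape of the scan: every JAR under `./lib`, including subfolders such as `ext`, in sorted order, with `flink-dist` placed last. The following is only an approximation of that behaviour for illustration, not a copy of `constructFlinkClassPath`; the function name `build_classpath` is hypothetical and the real script may differ in detail.

```shell
# Approximate how a Flink-style classpath could be assembled from a lib folder.
# Assumptions: recursive scan, byte-wise sort order, flink-dist JAR moved to the end.
build_classpath() (
  lib_dir="$1"
  find "$lib_dir" -type f -name '*.jar' | LC_ALL=C sort | {
    cp=""
    dist=""
    while IFS= read -r jar; do
      case "${jar##*/}" in
        flink-dist*.jar) dist="$jar" ;;       # remember it, append last
        *) cp="${cp:+$cp:}$jar" ;;
      esac
    done
    if [ -n "$dist" ]; then cp="${cp:+$cp:}$dist"; fi
    printf '%s\n' "$cp"
  }
)
```

Running `build_classpath ./lib` and comparing it with the `java_class_path` reported by `jinfo` is a quick way to confirm that a newly added JAR is where Flink will look for it.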

Slide 59

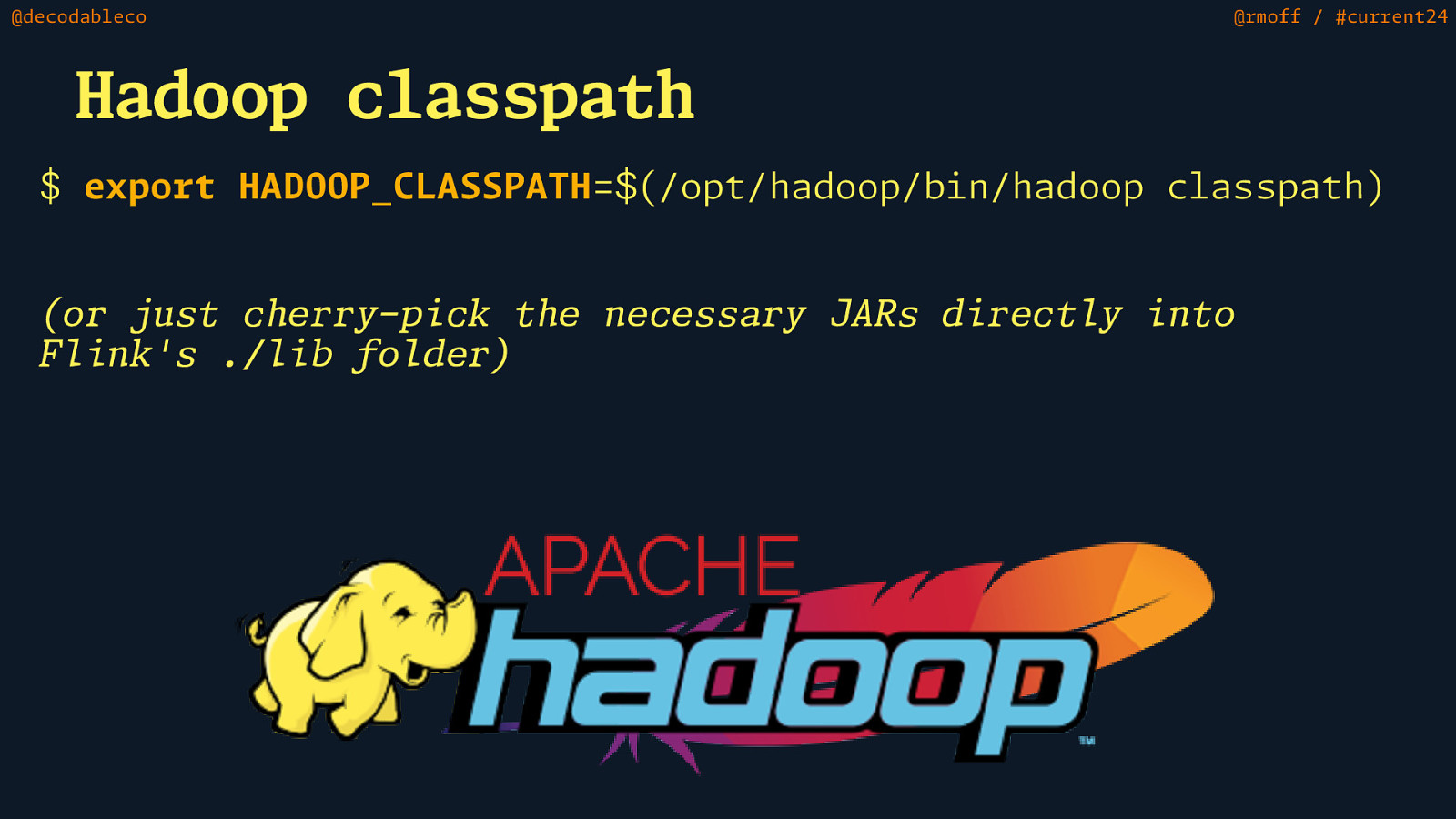

Hadoop classpath

$ export HADOOP_CLASSPATH=$(/opt/hadoop/bin/hadoop classpath)

(or just cherry-pick the necessary JARs directly into Flink's ./lib folder)

Slide 60

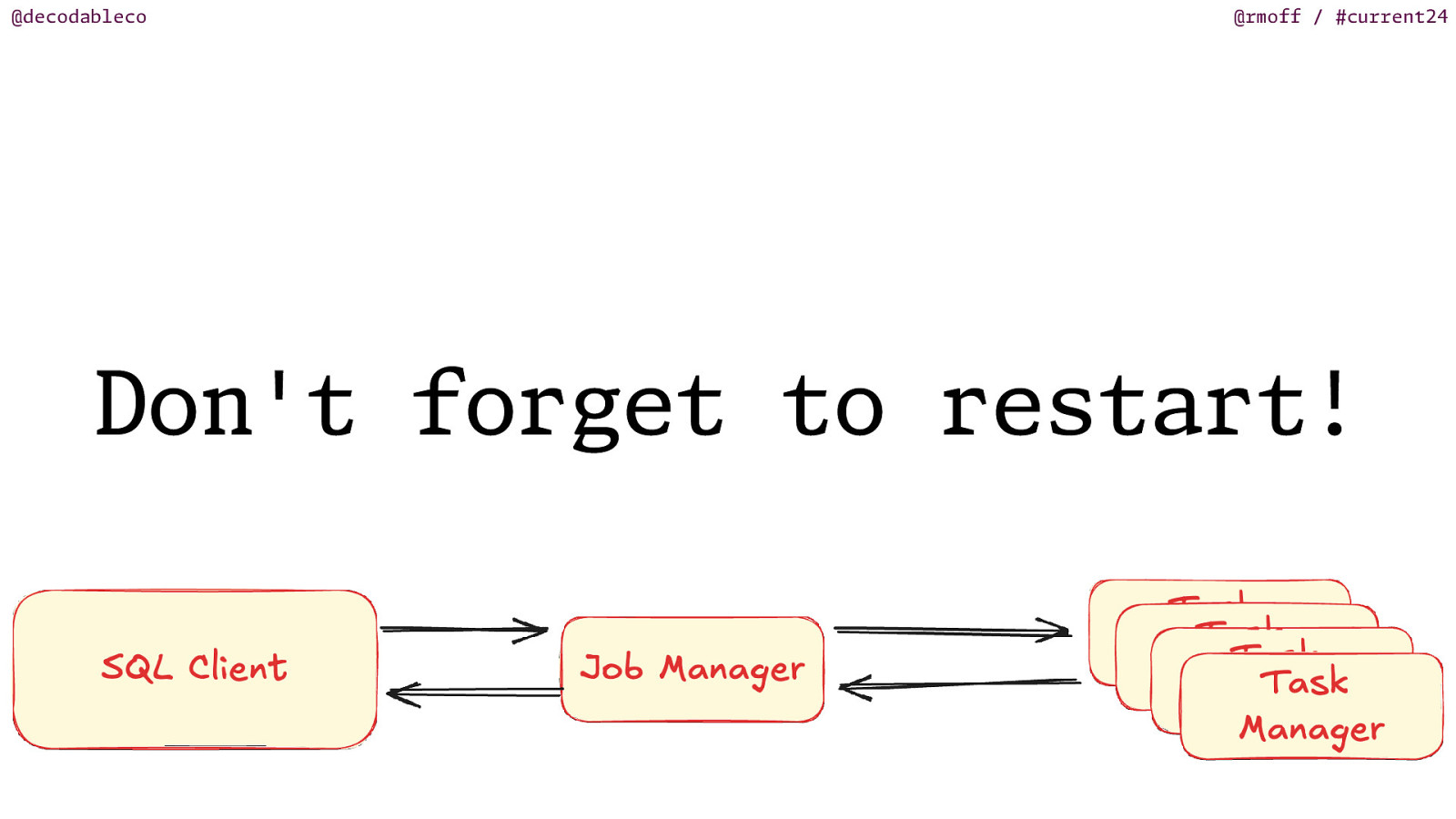

Don't forget to restart!

Slide 61

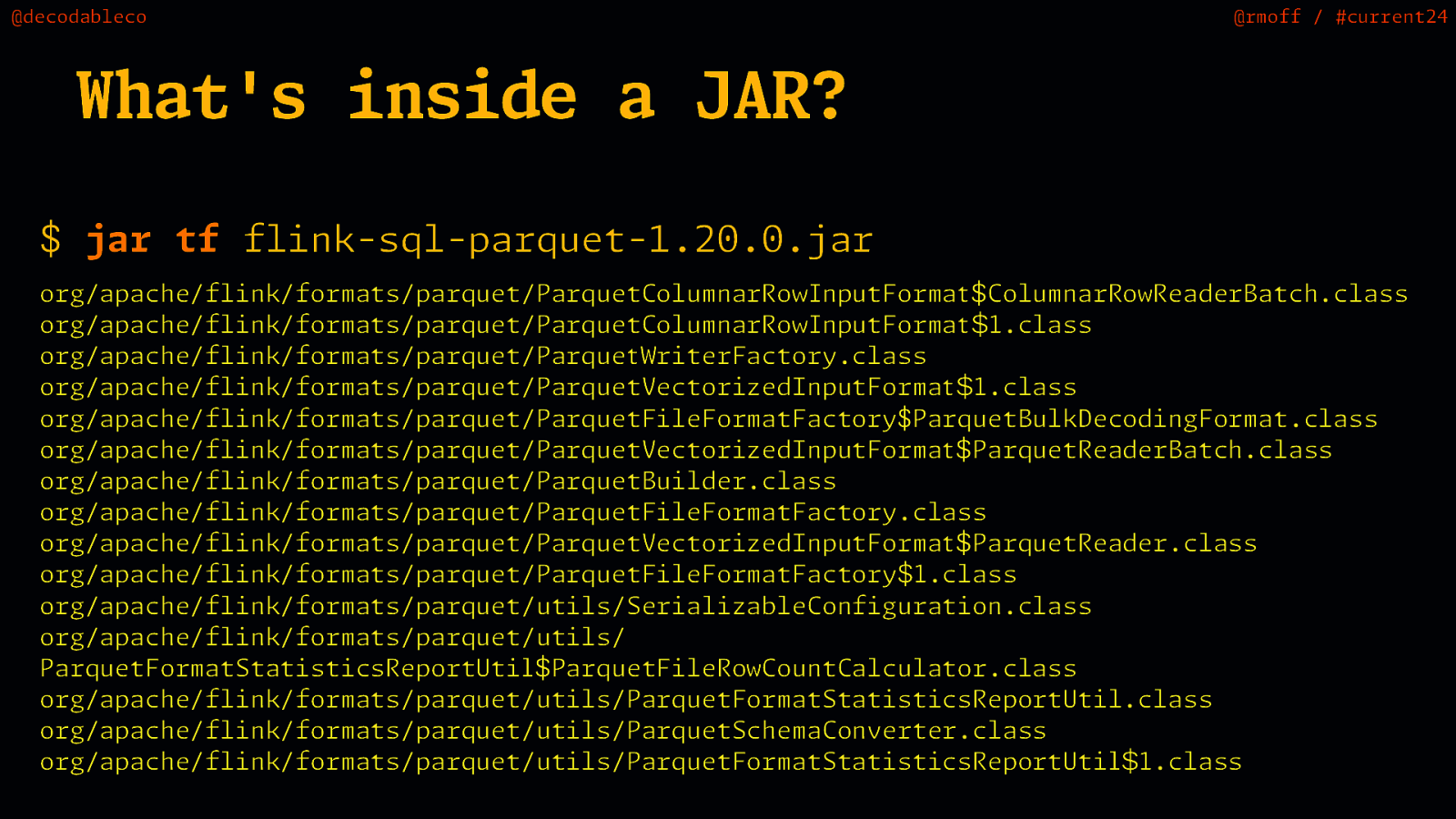

What's inside a JAR?

$ jar tf flink-sql-parquet-1.20.0.jar
org/apache/flink/formats/parquet/ParquetColumnarRowInputFormat$ColumnarRowReaderBatch.class
org/apache/flink/formats/parquet/ParquetColumnarRowInputFormat.class
org/apache/flink/formats/parquet/ParquetWriterFactory.class
org/apache/flink/formats/parquet/ParquetVectorizedInputFormat.class
org/apache/flink/formats/parquet/ParquetFileFormatFactory$ParquetBulkDecodingFormat.class
org/apache/flink/formats/parquet/ParquetVectorizedInputFormat$ParquetReaderBatch.class
org/apache/flink/formats/parquet/ParquetBuilder.class
org/apache/flink/formats/parquet/ParquetFileFormatFactory.class
org/apache/flink/formats/parquet/ParquetVectorizedInputFormat$ParquetReader.class
org/apache/flink/formats/parquet/ParquetFileFormatFactory$1.class
org/apache/flink/formats/parquet/utils/SerializableConfiguration.class
org/apache/flink/formats/parquet/utils/ParquetFormatStatisticsReportUtil$ParquetFileRowCountCalculator.class
org/apache/flink/formats/parquet/utils/ParquetFormatStatisticsReportUtil.class
org/apache/flink/formats/parquet/utils/ParquetSchemaConverter.class
org/apache/flink/formats/parquet/utils/ParquetFormatStatisticsReportUtil$1.class
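Since `jar tf` lists entries as paths, a class name from a `ClassNotFoundException` has to be turned into a path before you can search for it. A minimal sketch with two hypothetical helpers: `class_to_entry` does the conversion, and `find_class_in_jars` loops over the JARs in a folder. The second assumes the JDK's `jar` tool is on the PATH.

```shell
# Convert a fully qualified class name into the entry path used inside a JAR.
class_to_entry() {
  printf '%s.class\n' "$(printf '%s' "$1" | tr '.' '/')"
}

# Print every JAR under a folder that contains the given class.
# $1 = fully qualified class name, $2 = folder to search (e.g. ./lib)
find_class_in_jars() (
  entry=$(class_to_entry "$1")
  find "$2" -type f -name '*.jar' | while IFS= read -r jar; do
    if jar tf "$jar" | grep -q -x -F "$entry"; then
      printf '%s\n' "$jar"
    fi
  done
)
```

For example, `find_class_in_jars org.apache.hadoop.fs.s3a.S3AFileSystem ./lib` prints nothing if no JAR in `./lib` provides the class.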

Slide 62

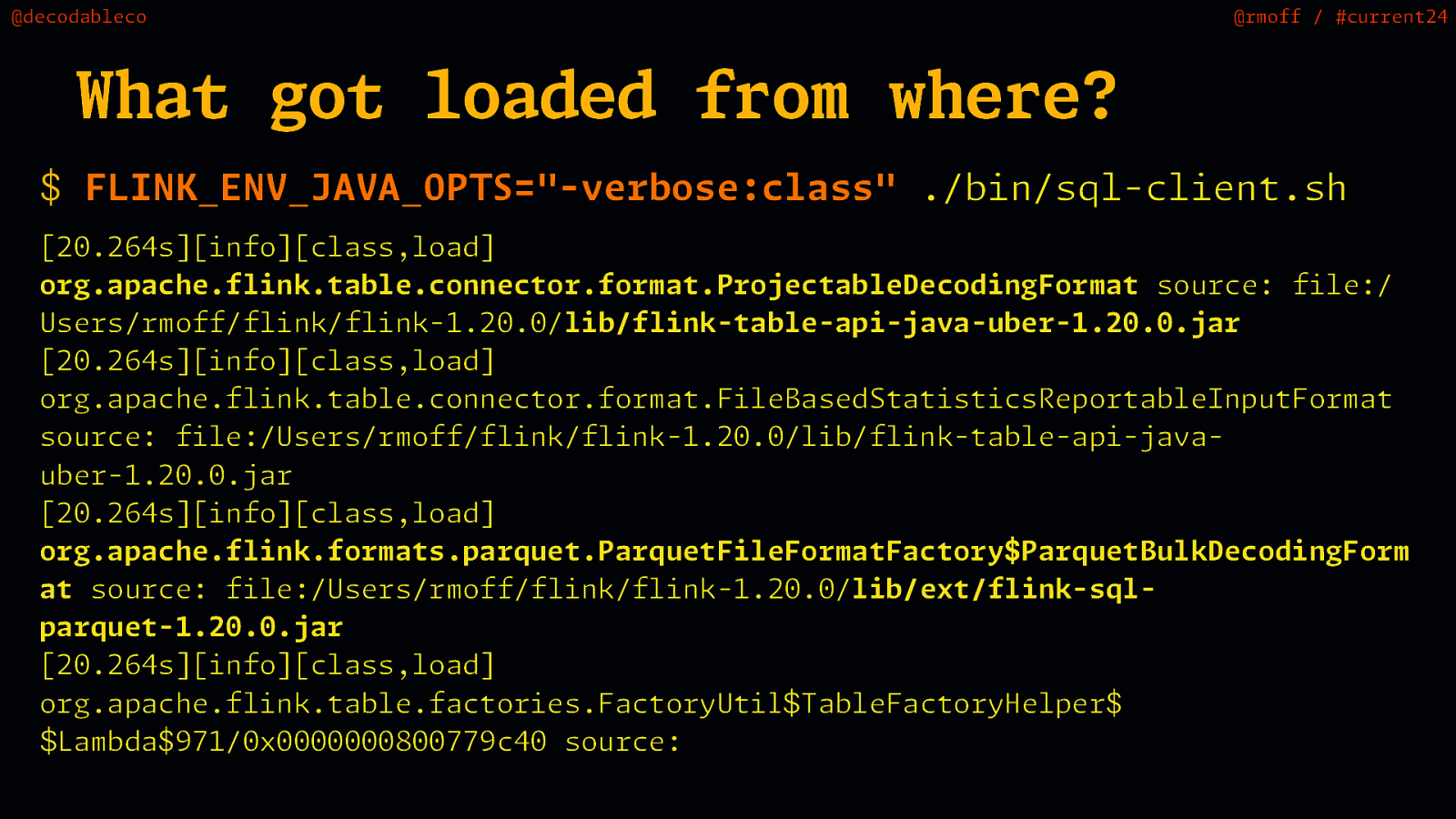

What got loaded from where?

$ FLINK_ENV_JAVA_OPTS="-verbose:class" ./bin/sql-client.sh
[20.264s][info][class,load] org.apache.flink.table.connector.format.ProjectableDecodingFormat source: file:/Users/rmoff/flink/flink-1.20.0/lib/flink-table-api-java-uber-1.20.0.jar
[20.264s][info][class,load] org.apache.flink.table.connector.format.FileBasedStatisticsReportableInputFormat source: file:/Users/rmoff/flink/flink-1.20.0/lib/flink-table-api-java-uber-1.20.0.jar
[20.264s][info][class,load] org.apache.flink.formats.parquet.ParquetFileFormatFactory$ParquetBulkDecodingFormat source: file:/Users/rmoff/flink/flink-1.20.0/lib/ext/flink-sql-parquet-1.20.0.jar
[20.264s][info][class,load] org.apache.flink.table.factories.FactoryUtil$TableFactoryHelper$$Lambda$971/0x0000000800779c40 source:
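The `-verbose:class` output is voluminous, so it helps to filter it down to the classes you care about. A small sketch, assuming the line layout shown above (`[class,load] <class> source: <location>`); the function name `class_sources` is made up for illustration.

```shell
# Read -verbose:class output on stdin and print "class<TAB>source" for classes
# whose line contains the given substring.
# $1 = substring to look for, e.g. "parquet"
class_sources() {
  awk -v pat="$1" '
    index($0, "[class,load]") && index($0, pat) {
      for (i = 2; i <= NF; i++)
        if ($i == "source:") print $(i - 1) "\t" $(i + 1)
    }'
}
```

If the SQL Client output has been saved to a file, `class_sources parquet < saved-output.txt` shows which JAR each matching class was actually loaded from, which is useful when two JARs ship the same class.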

Slide 63

What really Runs Where?

Slide 64

Ref: https://nightlies.apache.org/flink/flink-docs-master/docs/deployment/overview/

Slide 65

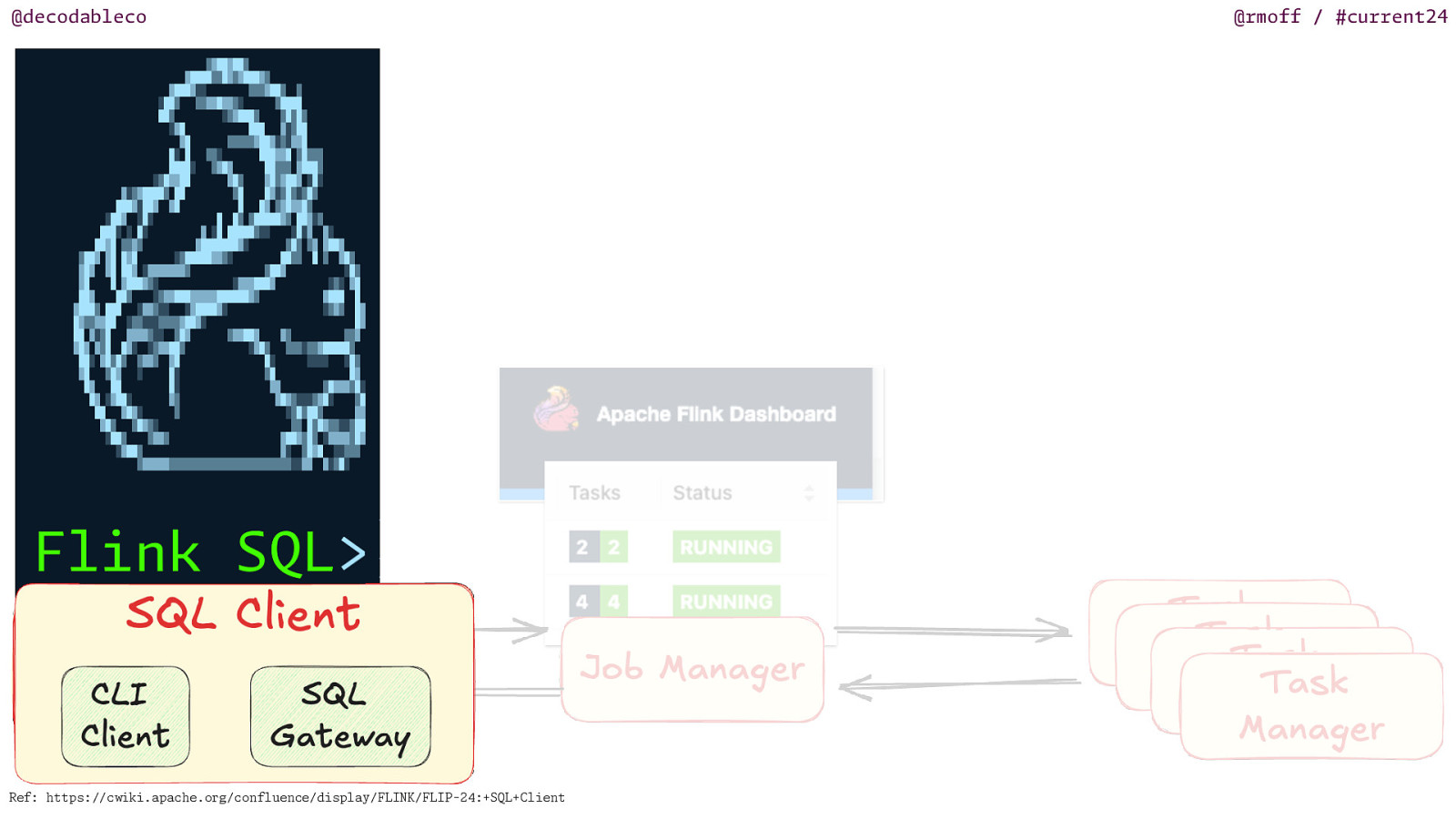

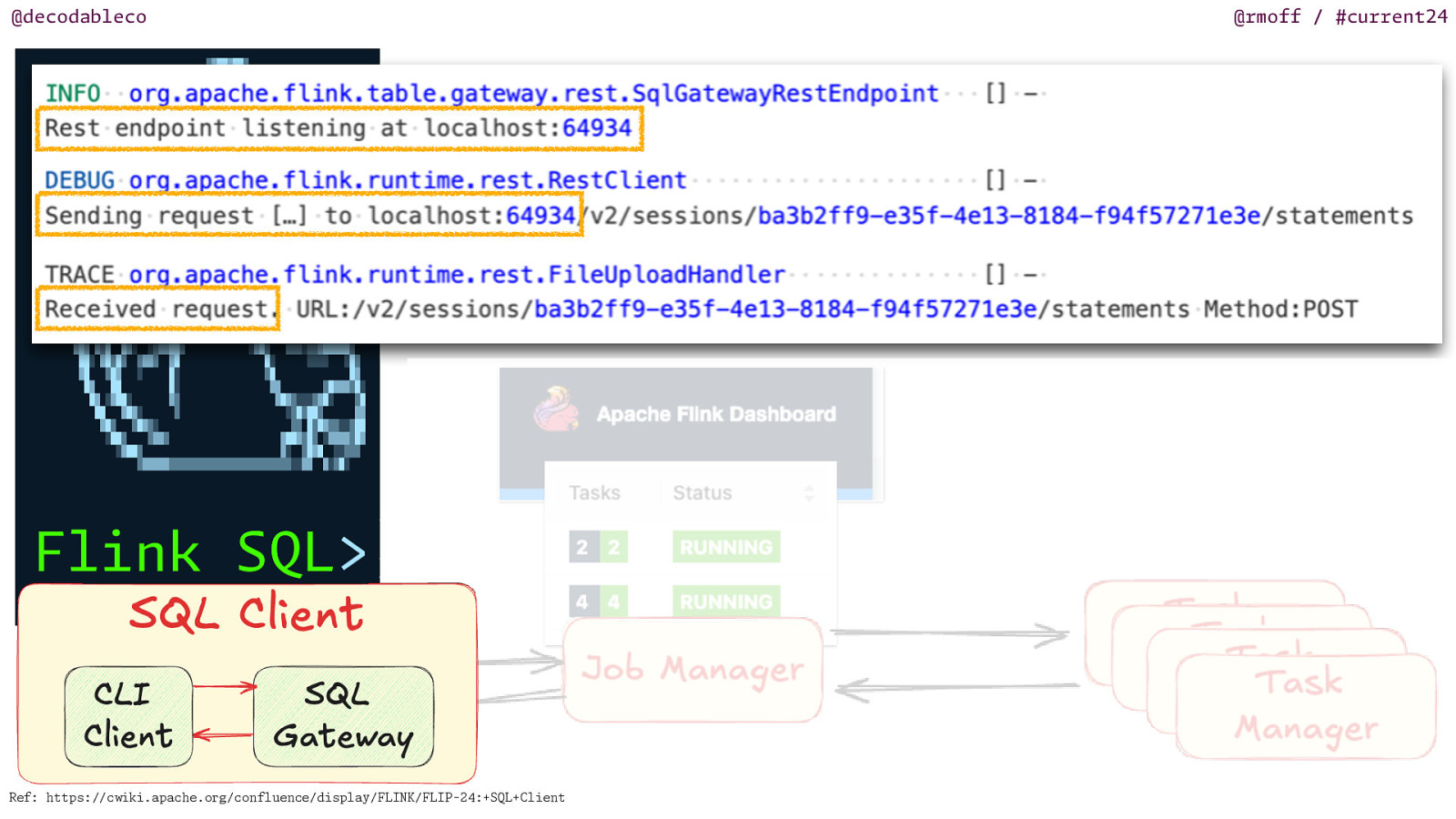

Ref: https://cwiki.apache.org/confluence/display/FLINK/FLIP-24:+SQL+Client

Slide 66


Slide 67

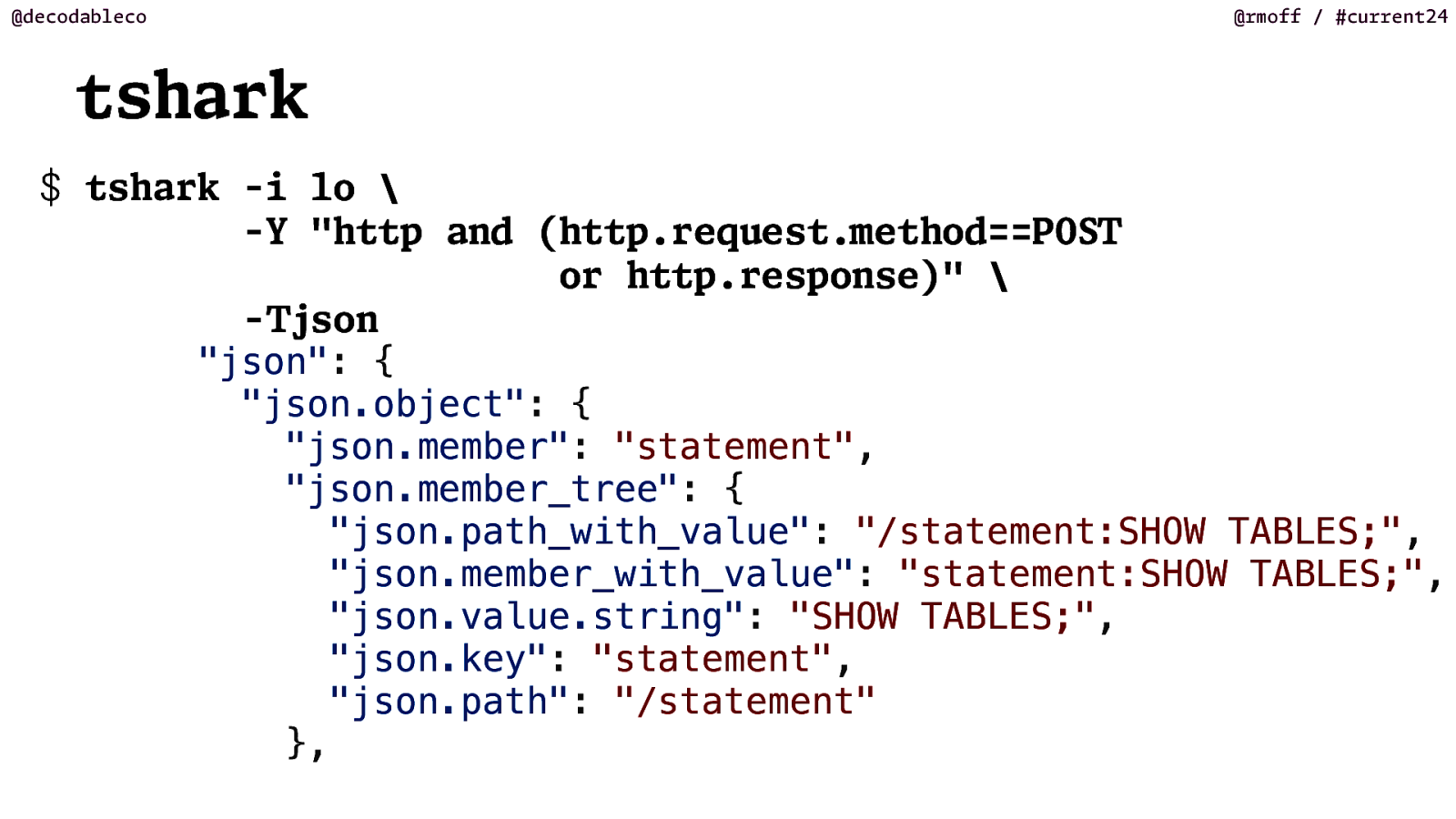

tshark

$ tshark -i lo \
    -Y "http and (http.request.method==POST or http.response)" \
    -T json

"json": {
  "json.object": {
    "json.member": "statement",
    "json.member_tree": {
      "json.path_with_value": "/statement:SHOW TABLES;",
      "json.member_with_value": "statement:SHOW TABLES;",
      "json.value.string": "SHOW TABLES;",
      "json.key": "statement",
      "json.path": "/statement"
    },

Slide 68

what about external stuff?

Slide 69

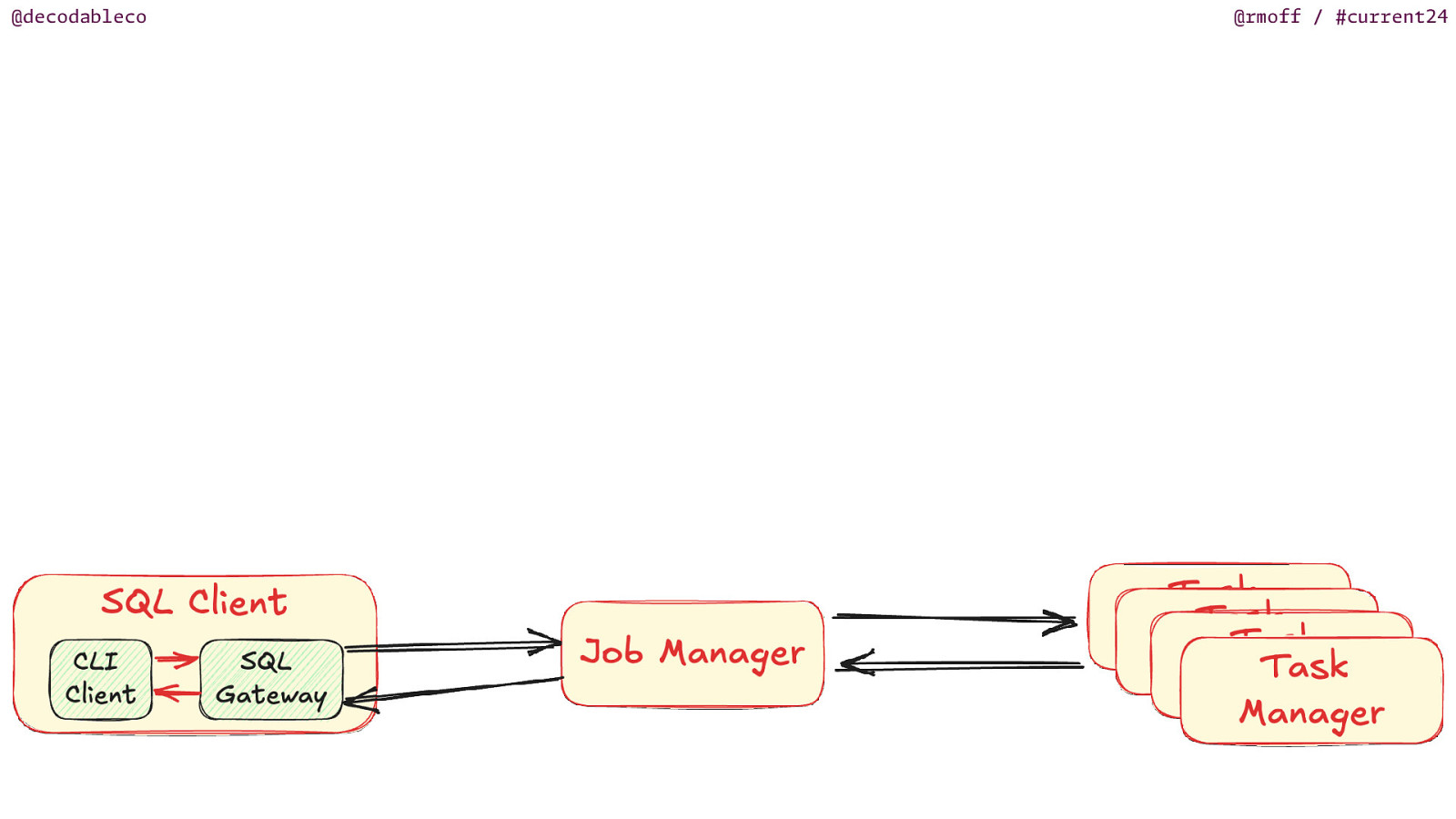


Slide 70


Slide 71


Slide 72


Slide 73

Uh oh…

Slide 74

💩 rises to the top…

Slide 75


Slide 76


Slide 77

$

Slide 78

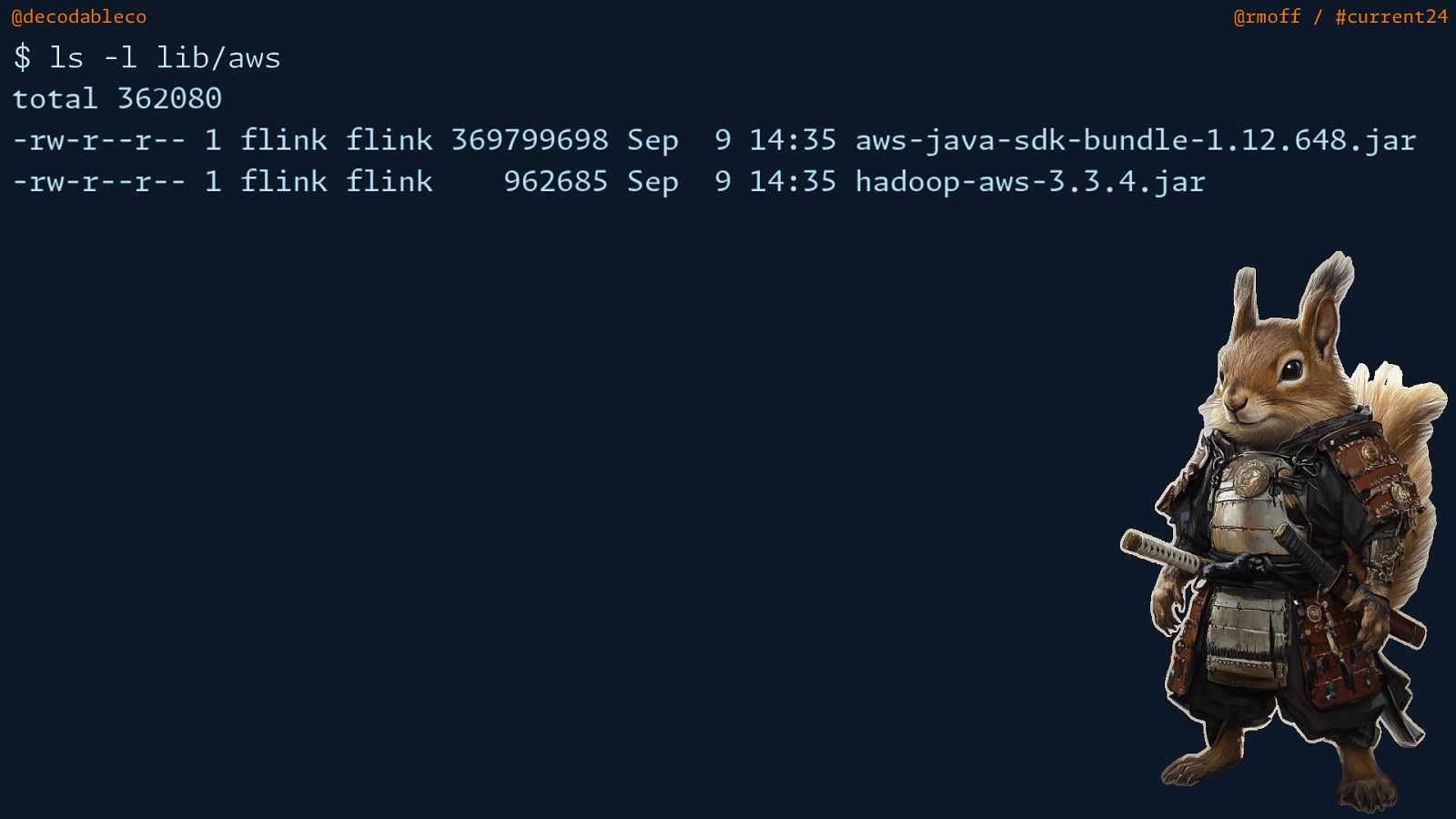

$ ls -l lib/aws

Slide 79

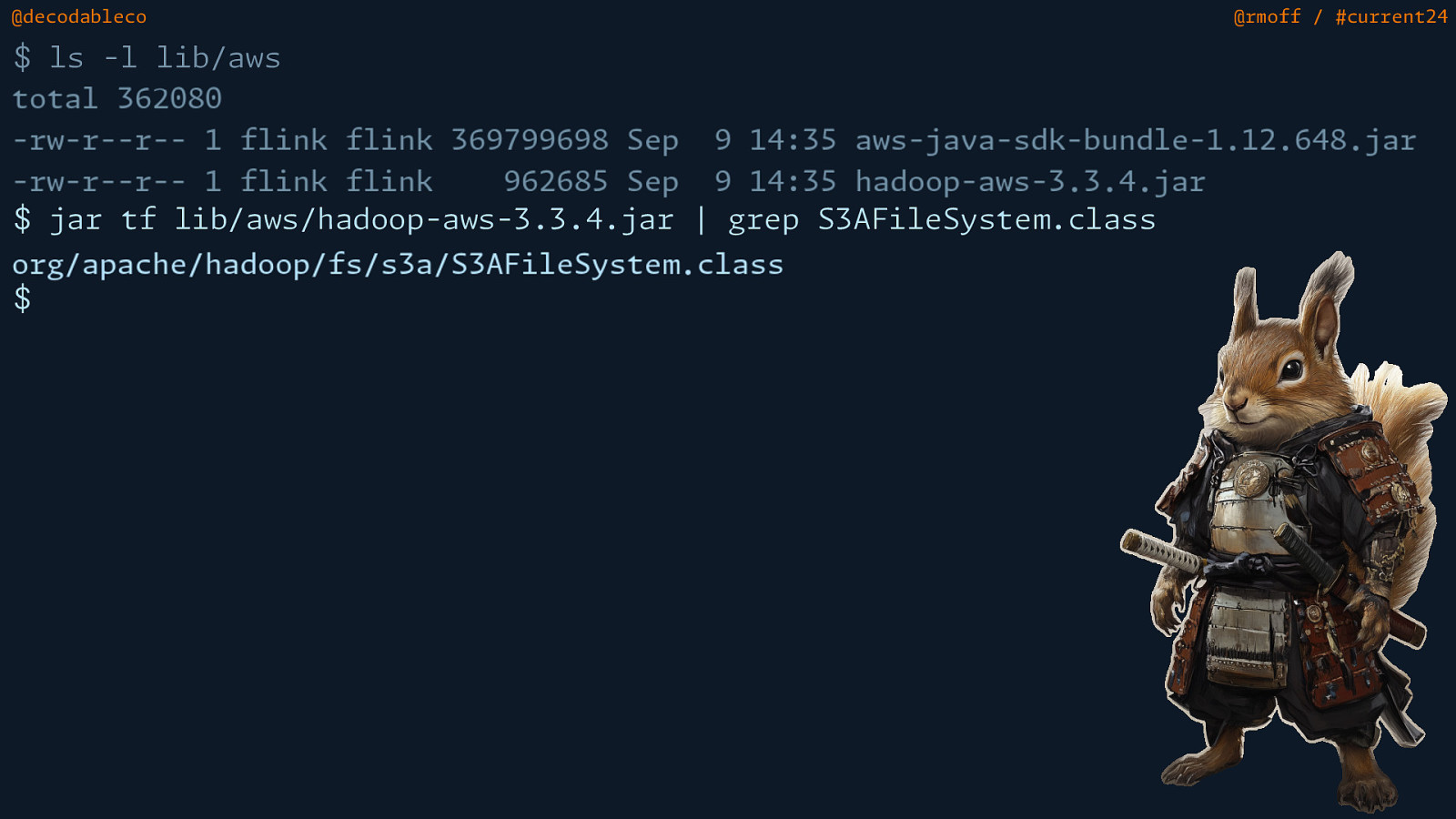

$ ls -l lib/aws
$ jar tf lib/aws/hadoop-aws-3.3.4.jar | grep S3AFileSystem.class
$

Slide 80


Slide 81

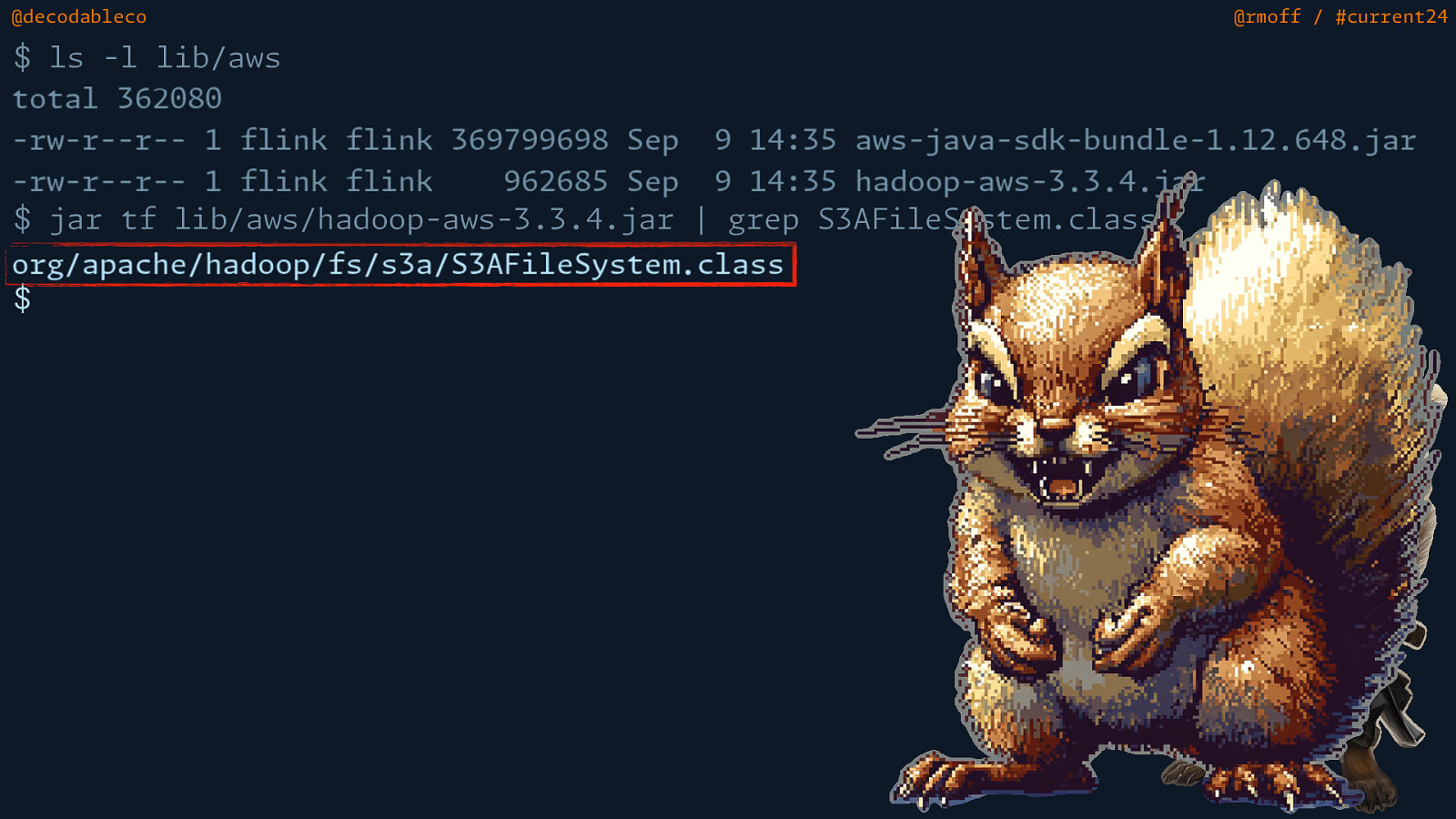

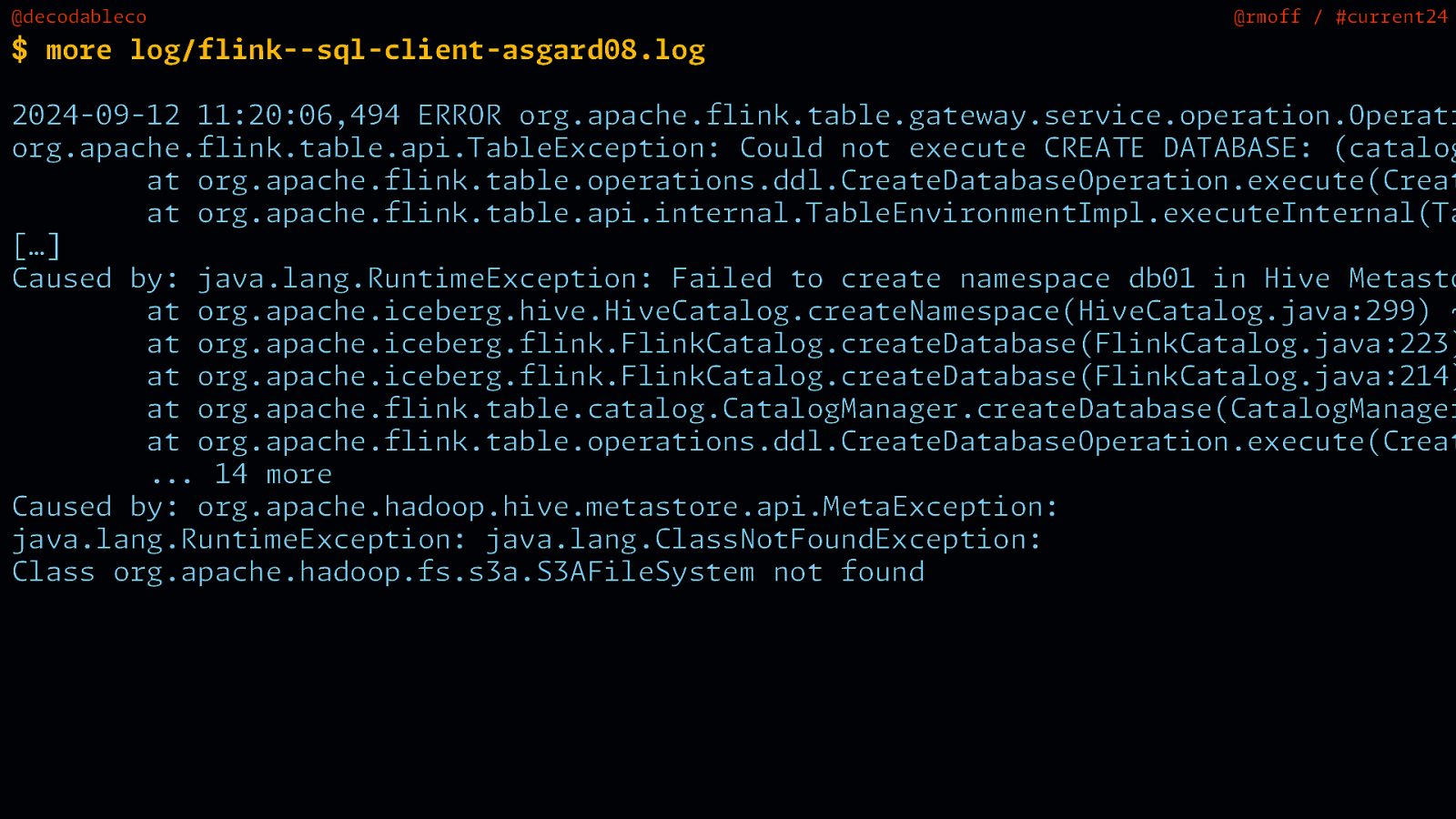

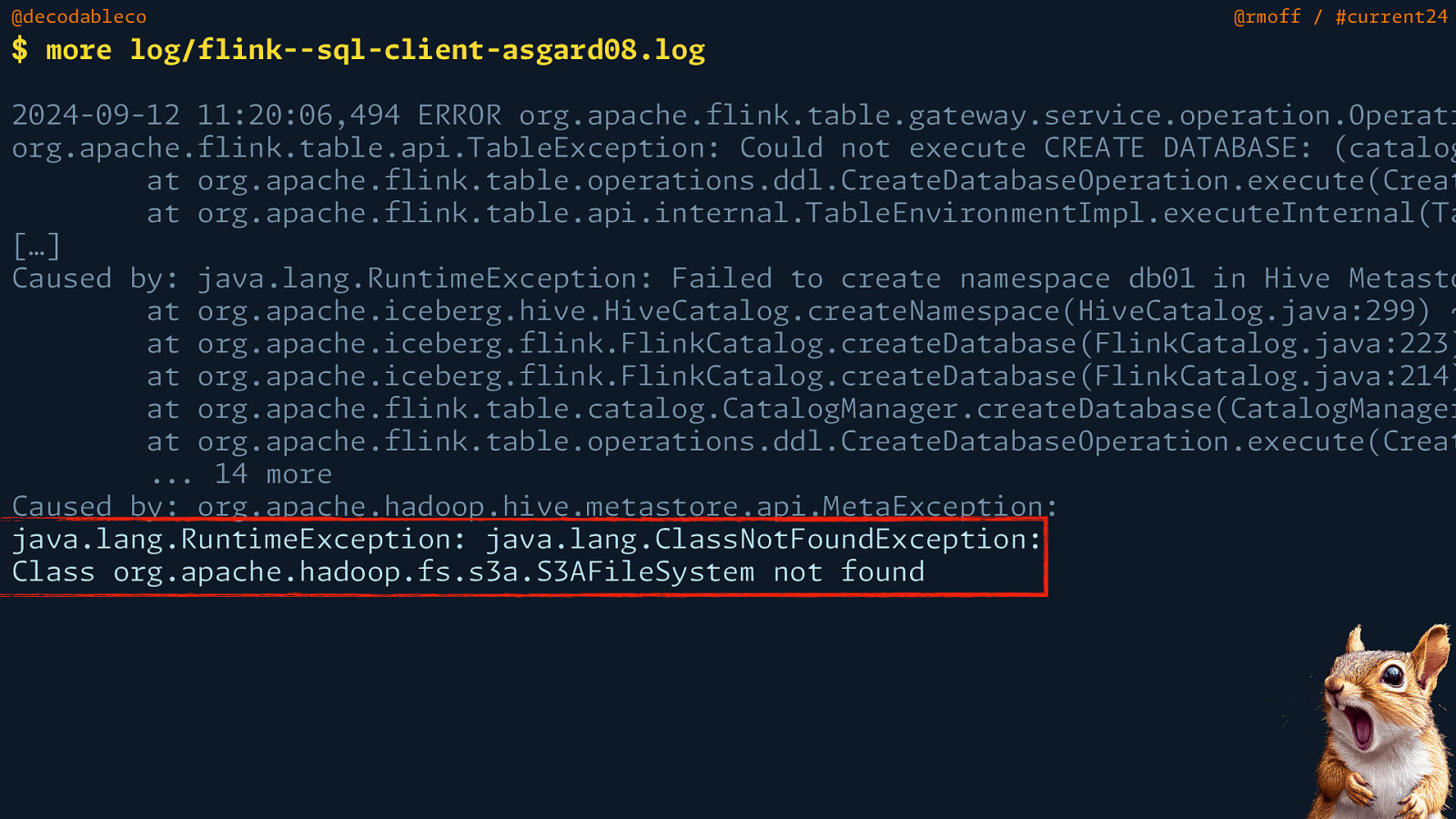

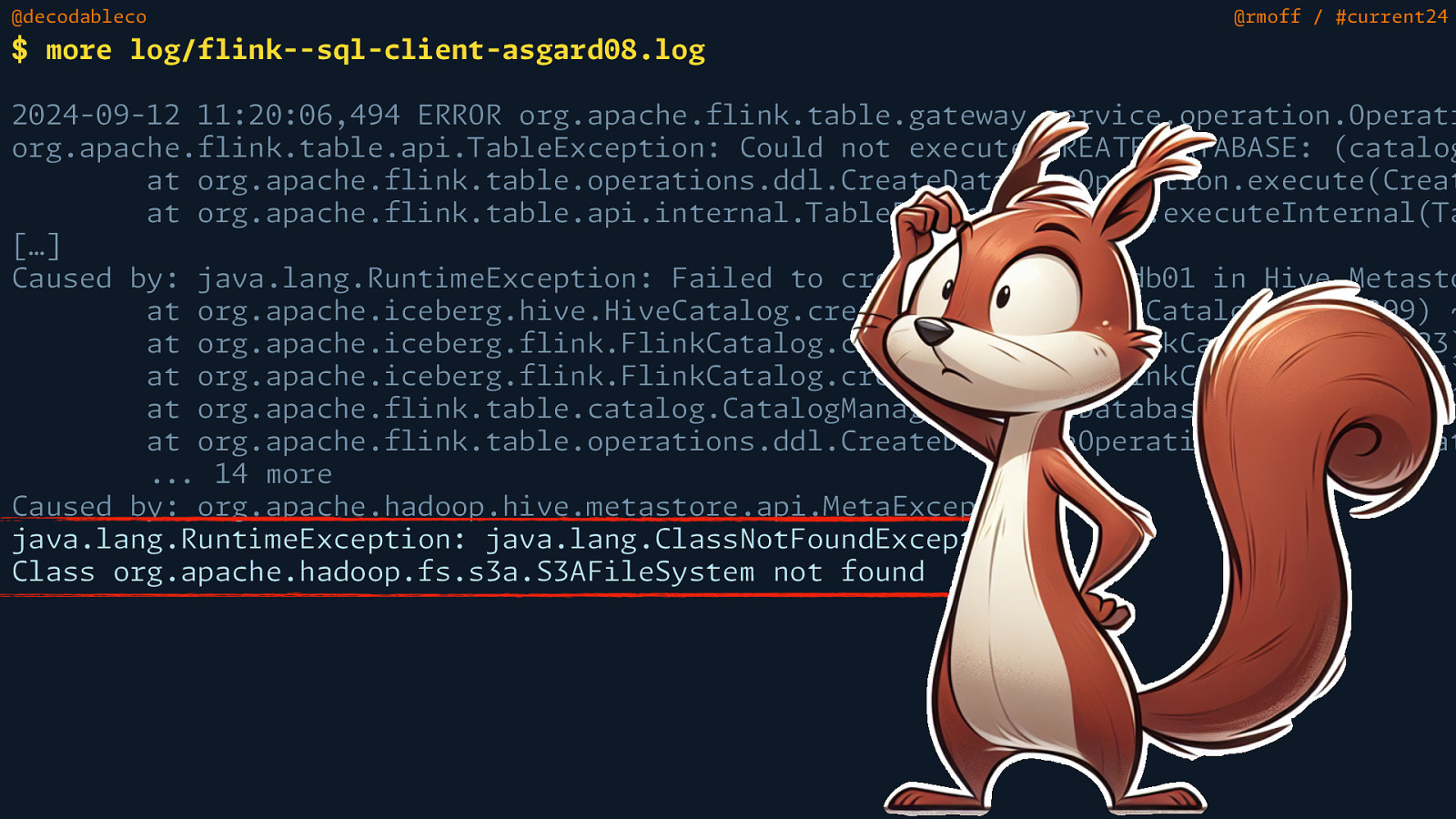

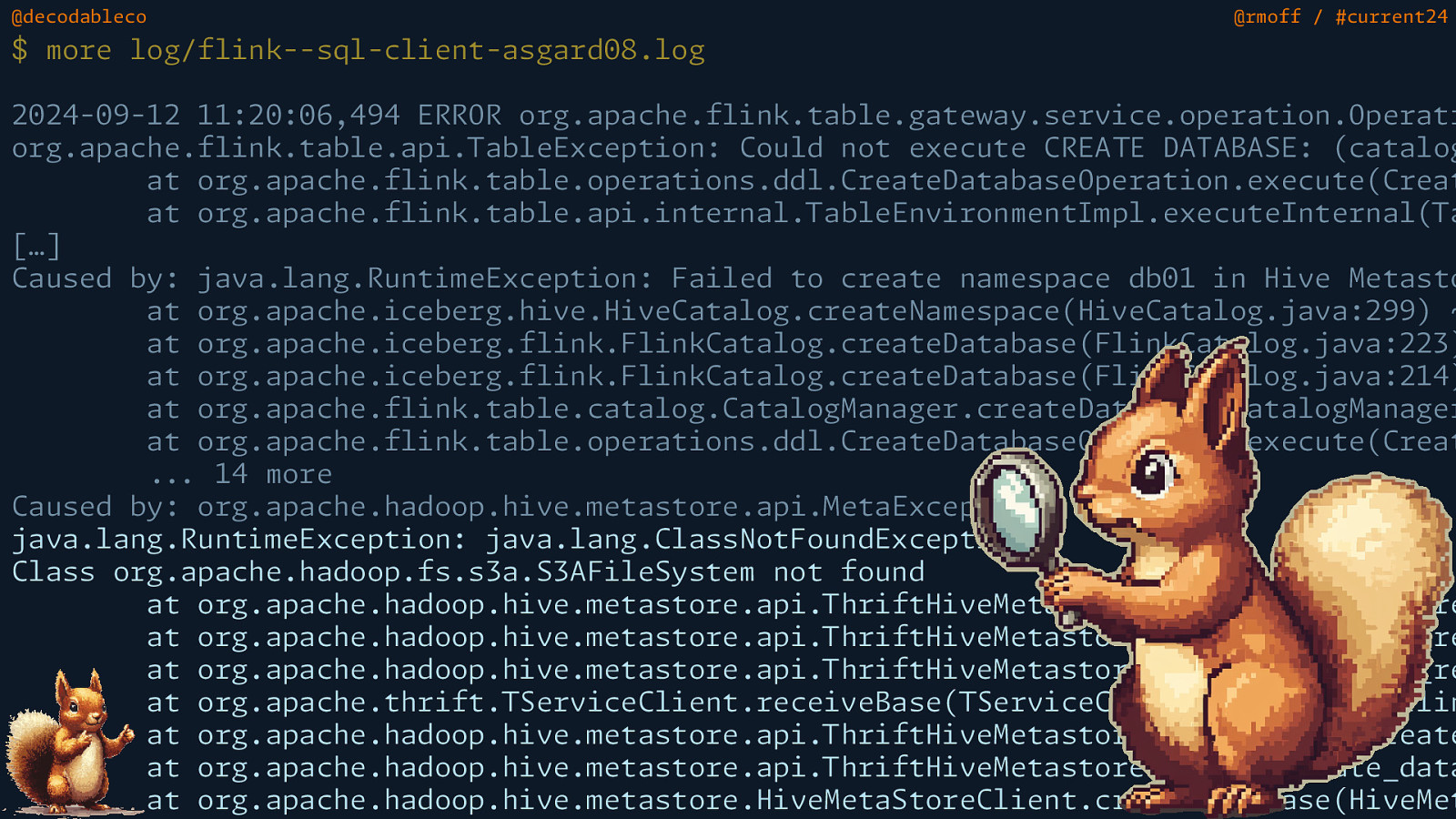

$ more log/flink--sql-client-asgard08.log
2024-09-12 11:20:06,494 ERROR org.apache.flink.table.gateway.service.operation.Operati
org.apache.flink.table.api.TableException: Could not execute CREATE DATABASE: (catalog
    at org.apache.flink.table.operations.ddl.CreateDatabaseOperation.execute(Creat
    at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeInternal(Ta
[…]
Caused by: java.lang.RuntimeException: Failed to create namespace db01 in Hive Metasto
    at org.apache.iceberg.hive.HiveCatalog.createNamespace(HiveCatalog.java:299) ~
    at org.apache.iceberg.flink.FlinkCatalog.createDatabase(FlinkCatalog.java:223)
    at org.apache.iceberg.flink.FlinkCatalog.createDatabase(FlinkCatalog.java:214)
    at org.apache.flink.table.catalog.CatalogManager.createDatabase(CatalogManager
    at org.apache.flink.table.operations.ddl.CreateDatabaseOperation.execute(Creat
    ... 14 more
Caused by: org.apache.hadoop.hive.metastore.api.MetaException: java.lang.RuntimeException: java.lang.ClassNotFoundException: Class org.apache.hadoop.fs.s3a.S3AFileSystem not found

Slide 82


Slide 83


Slide 84

$ more log/flink--sql-client-asgard08.log
[…]
Caused by: org.apache.hadoop.hive.metastore.api.MetaException: java.lang.RuntimeException: java.lang.ClassNotFoundException: Class org.apache.hadoop.fs.s3a.S3AFileSystem not found
    at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$create_database_re
    at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$create_database_re
    at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$create_database_re
    at org.apache.thrift.TServiceClient.receiveBase(TServiceClient.java:86) ~[flin
    at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.recv_create
    at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.create_data
    at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.createDatabase(HiveMet

Slide 85

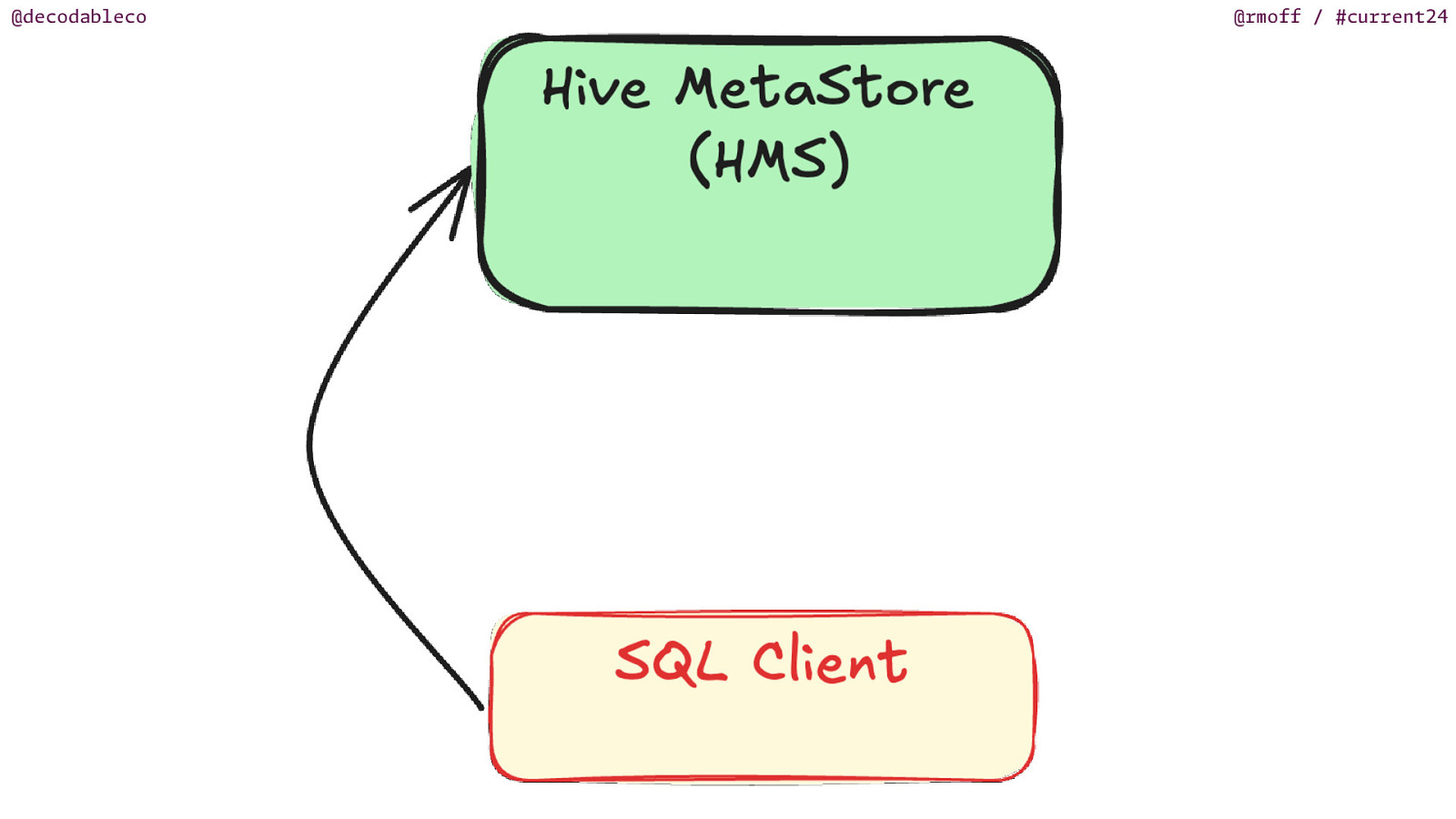


Slide 86


Slide 87

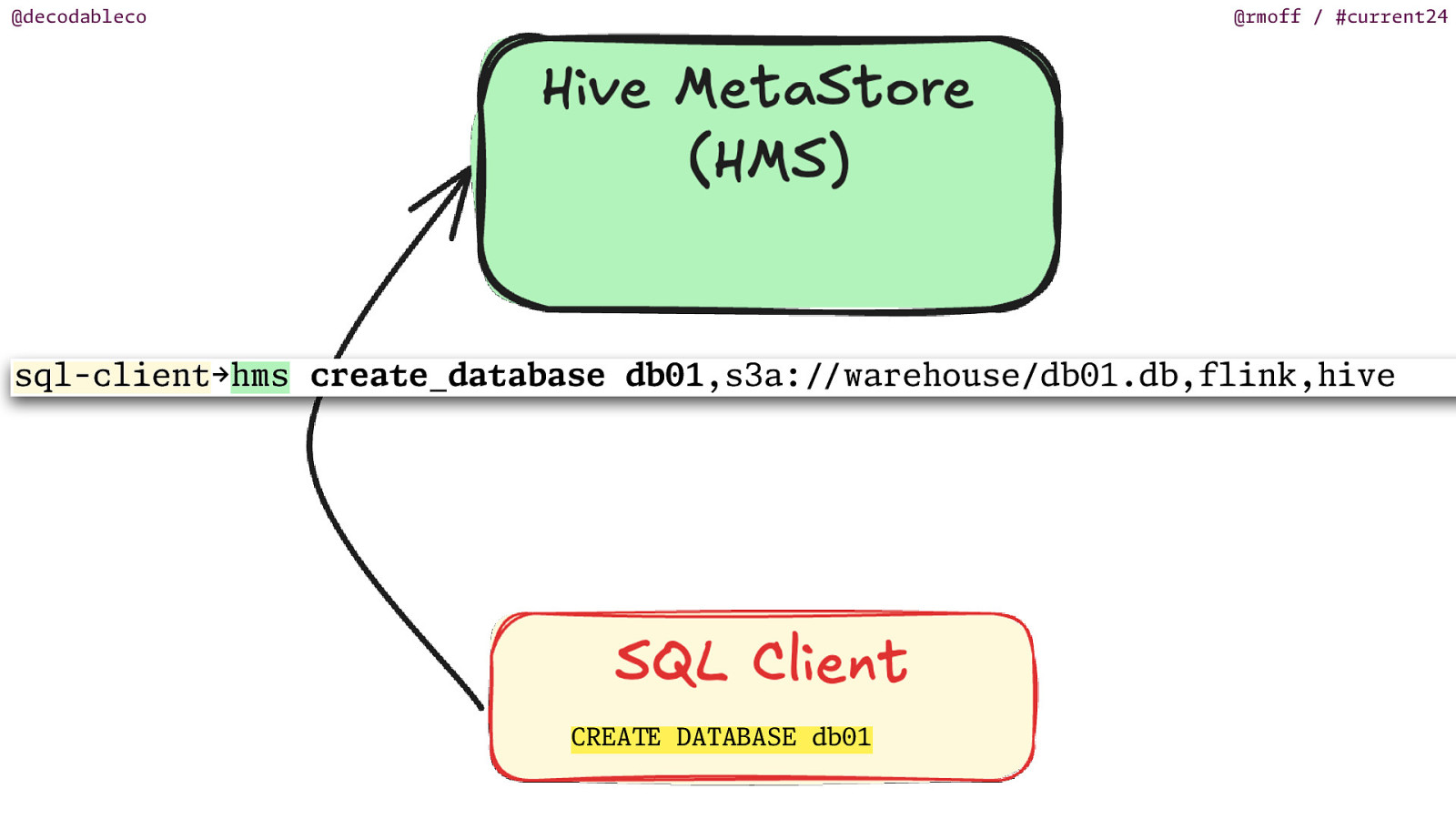

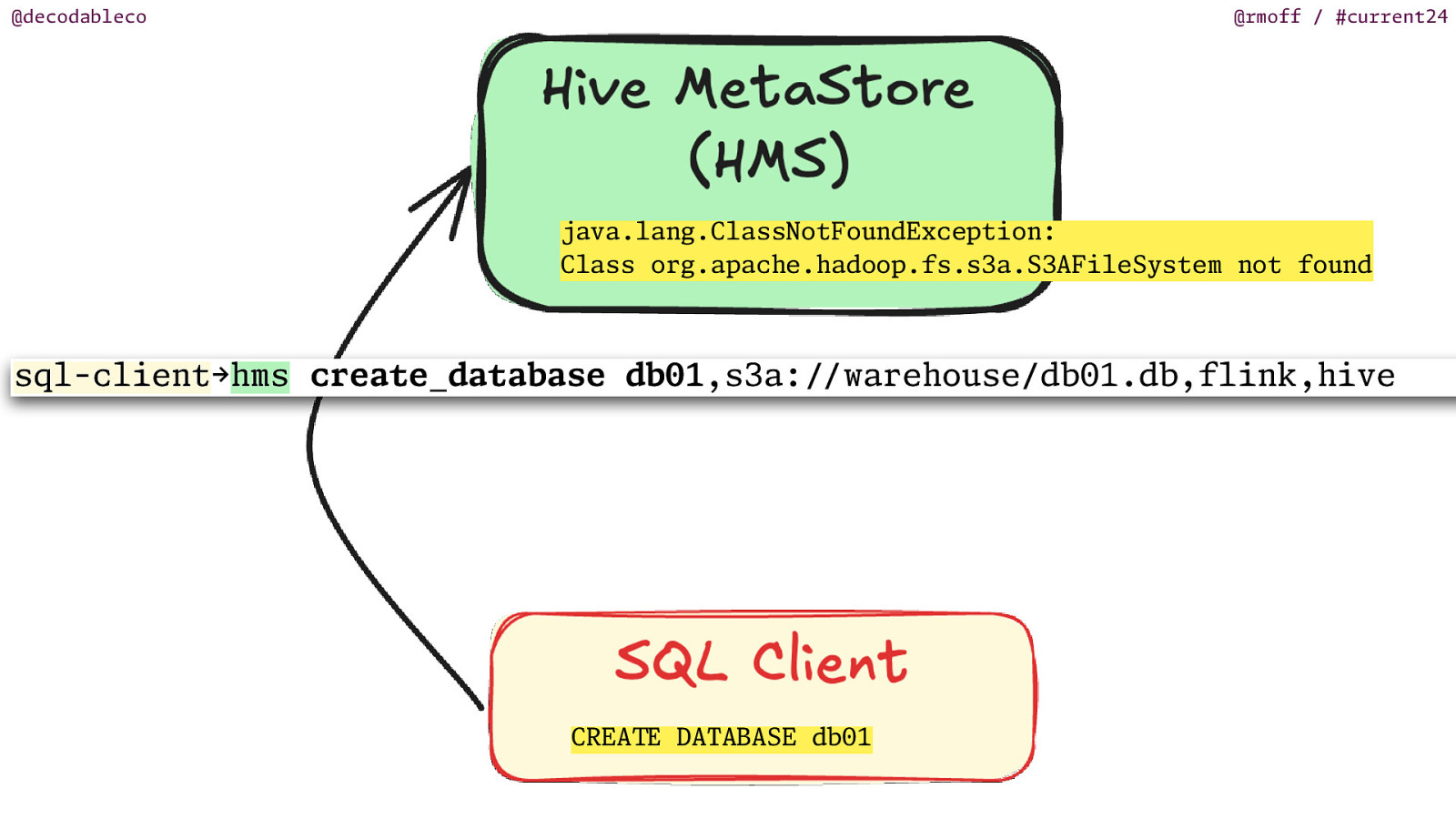

CREATE DATABASE db01

sql-client→hms create_database db01,s3a://warehouse/db01.db,flink,hive

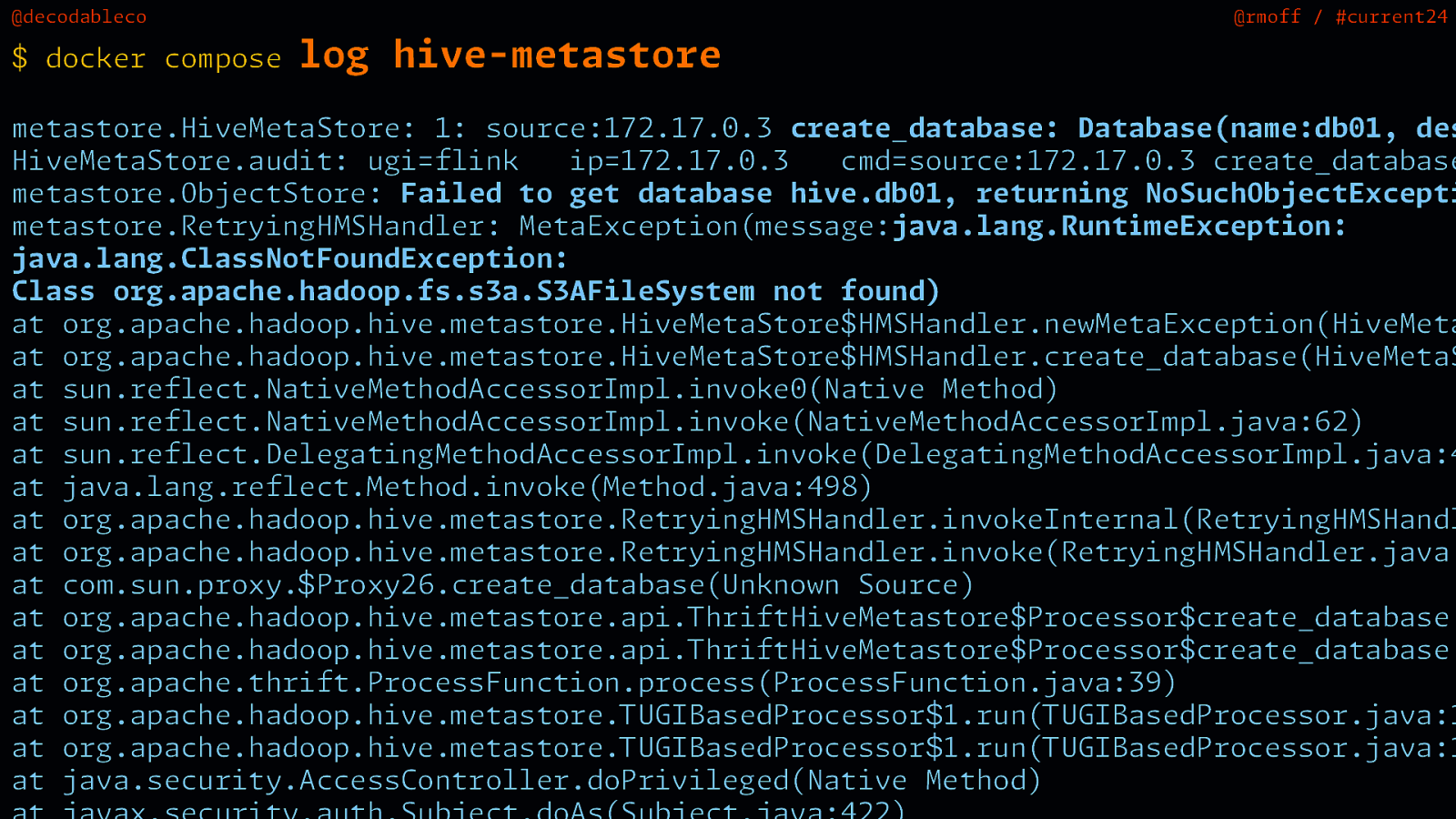

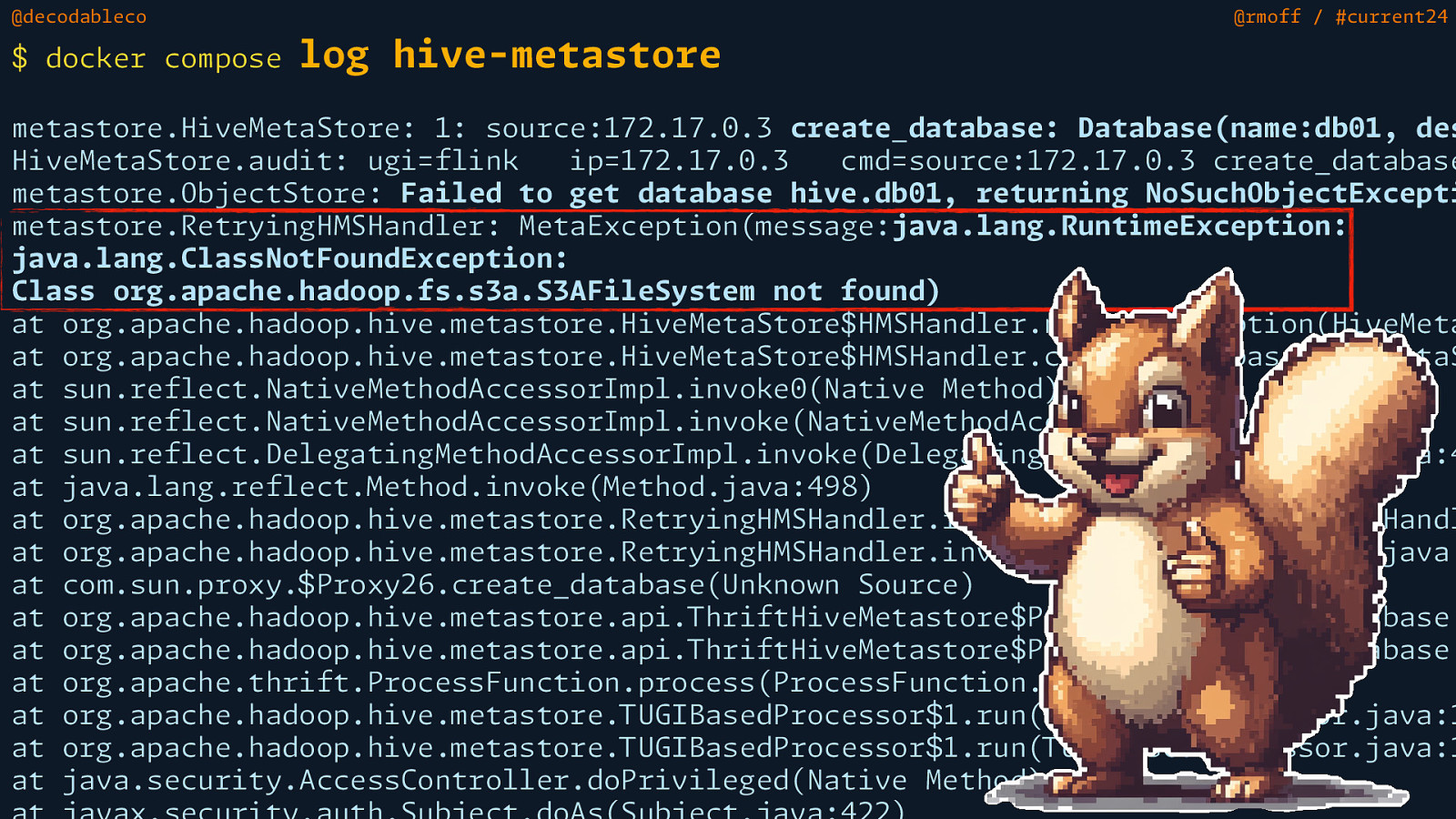

Slide 88

$ docker compose logs hive-metastore
metastore.HiveMetaStore: 1: source:172.17.0.3 create_database: Database(name:db01, des
HiveMetaStore.audit: ugi=flink ip=172.17.0.3 cmd=source:172.17.0.3 create_database
metastore.ObjectStore: Failed to get database hive.db01, returning NoSuchObjectExcepti
metastore.RetryingHMSHandler: MetaException(message:java.lang.RuntimeException: java.lang.ClassNotFoundException: Class org.apache.hadoop.fs.s3a.S3AFileSystem not found)
    at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.newMetaException(HiveMeta
    at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.create_database(HiveMetaS
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:4
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandl
    at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:
    at com.sun.proxy.$Proxy26.create_database(Unknown Source)
    at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$create_database.
    at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$create_database.
    at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39)
    at org.apache.hadoop.hive.metastore.TUGIBasedProcessor$1.run(TUGIBasedProcessor.java:1
    at org.apache.hadoop.hive.metastore.TUGIBasedProcessor$1.run(TUGIBasedProcessor.java:1
    at java.security.AccessController.doPrivileged(Native Method)

Slide 89


Slide 90

java.lang.ClassNotFoundException: Class org.apache.hadoop.fs.s3a.S3AFileSystem not found

CREATE DATABASE db01

sql-client→hms create_database db01,s3a://warehouse/db01.db,flink,hive

Slide 91

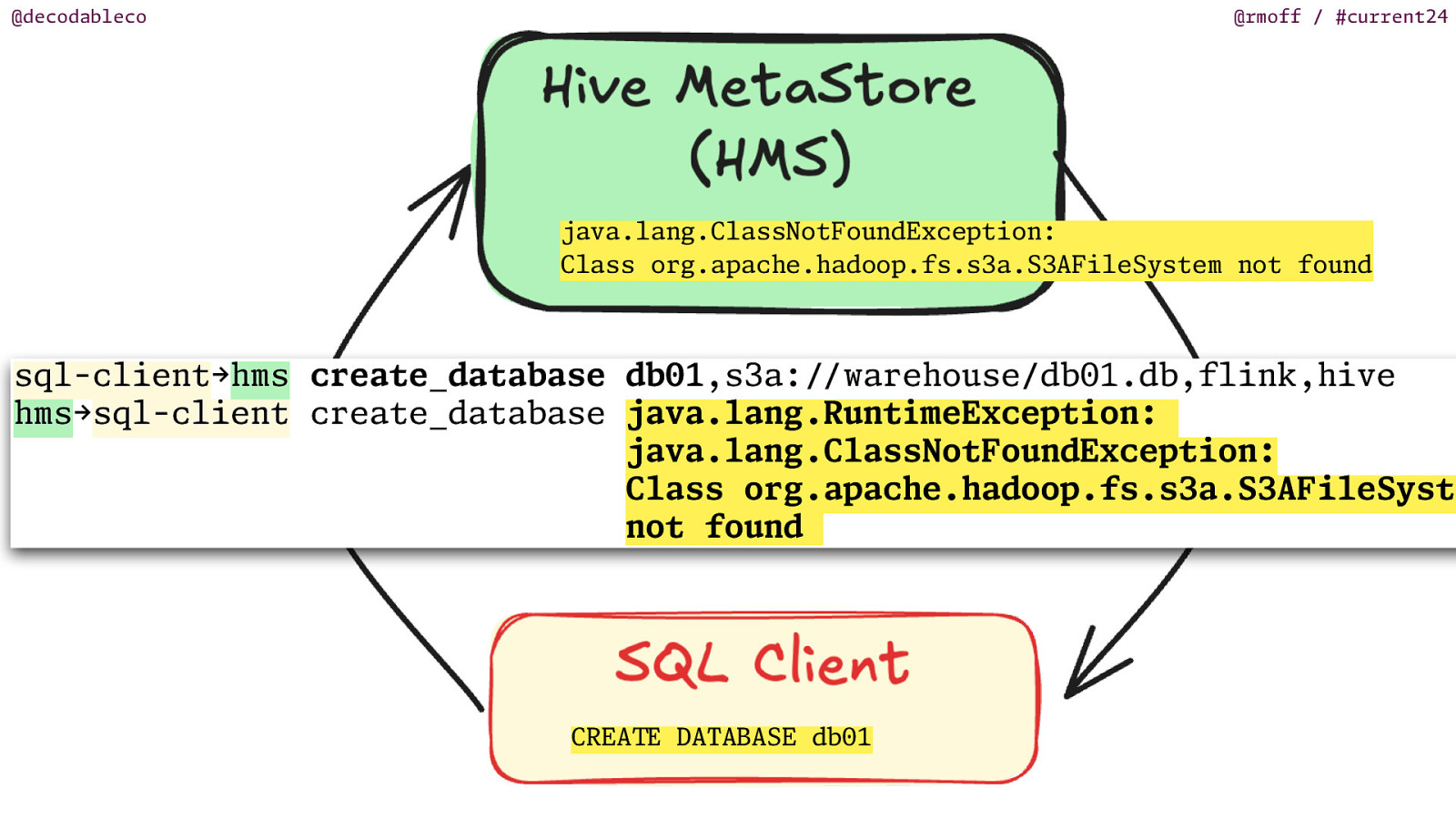

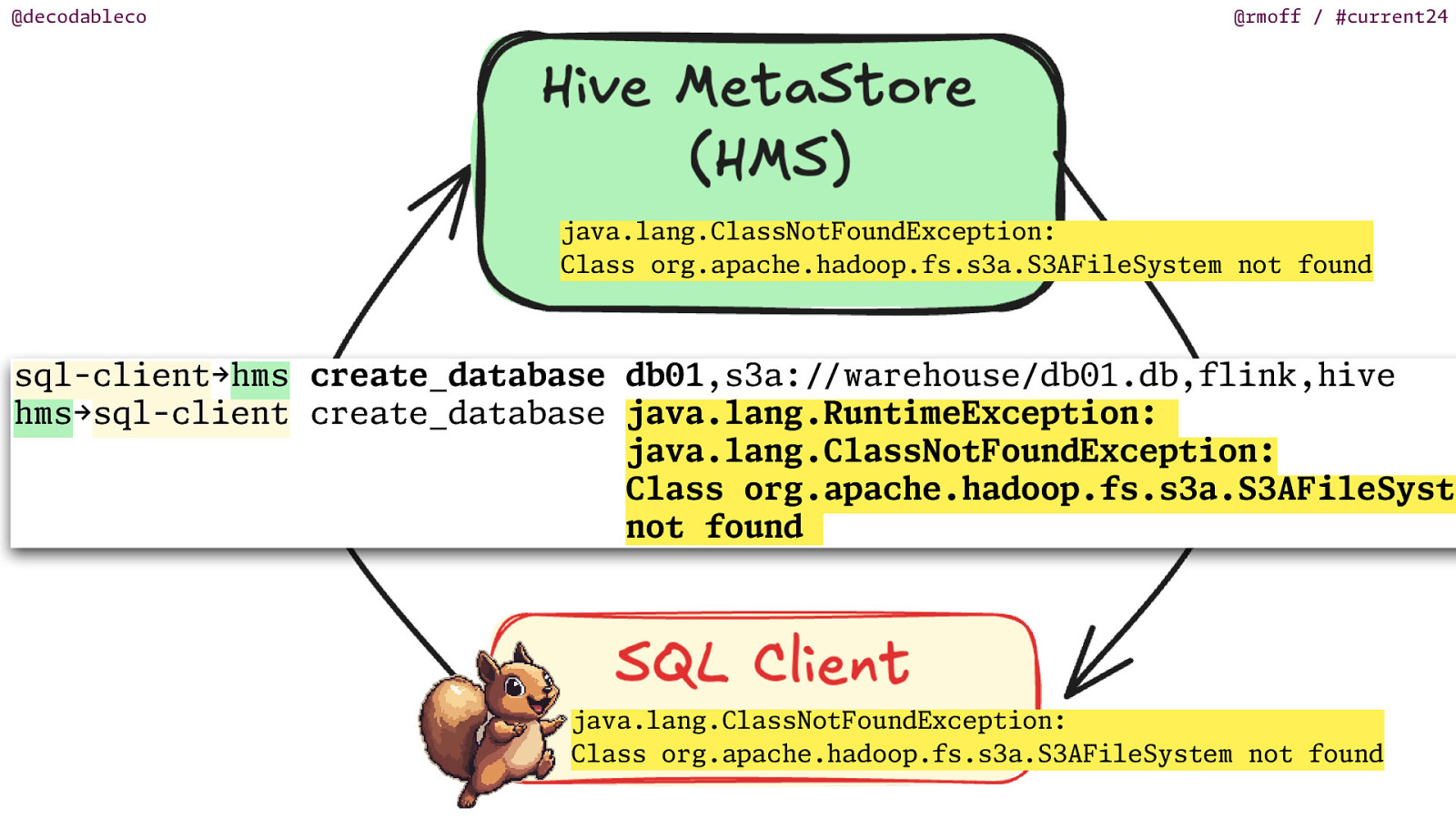

java.lang.ClassNotFoundException: Class org.apache.hadoop.fs.s3a.S3AFileSystem not found

CREATE DATABASE db01

Thrift protocol:
sql-client→hms create_database db01,s3a://warehouse/db01.db,flink,hive
hms→sql-client create_database java.lang.RuntimeException: java.lang.ClassNotFoundException: Class org.apache.hadoop.fs.s3a.S3AFileSystem not found

Slide 92

java.lang.ClassNotFoundException: Class org.apache.hadoop.fs.s3a.S3AFileSystem not found

Thrift protocol:
sql-client→hms create_database db01,s3a://warehouse/db01.db,flink,hive
hms→sql-client create_database java.lang.RuntimeException: java.lang.ClassNotFoundException: Class org.apache.hadoop.fs.s3a.S3AFileSystem not found

java.lang.ClassNotFoundException: Class org.apache.hadoop.fs.s3a.S3AFileSystem not found

Slide 93


Slide 94


Slide 95


Slide 96

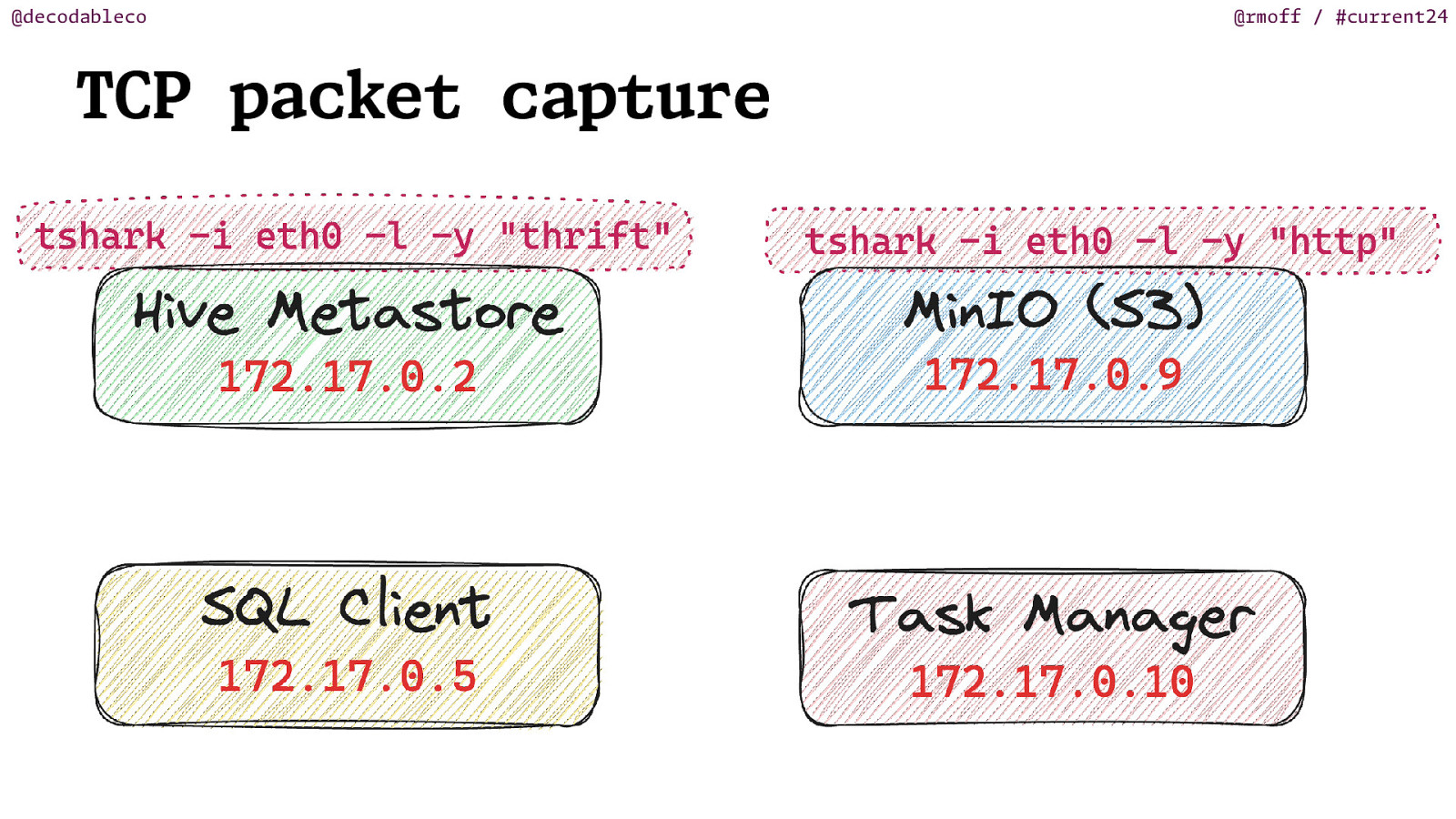

TCP packet capture

Slide 97

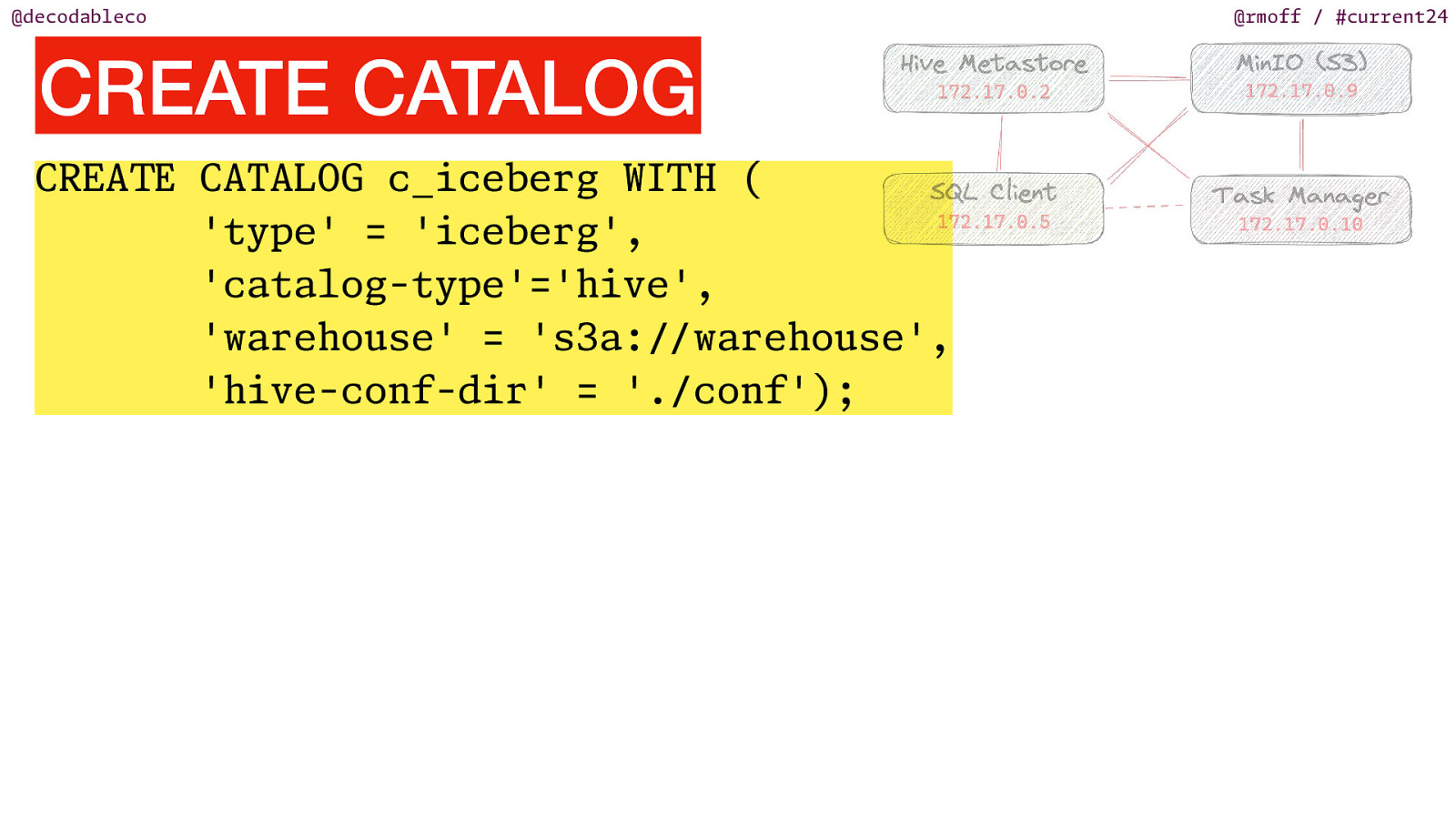

CREATE CATALOG

CREATE CATALOG c_iceberg WITH (
  'type' = 'iceberg',
  'catalog-type'='hive',
  'warehouse' = 's3a://warehouse',
  'hive-conf-dir' = './conf');

Slide 98

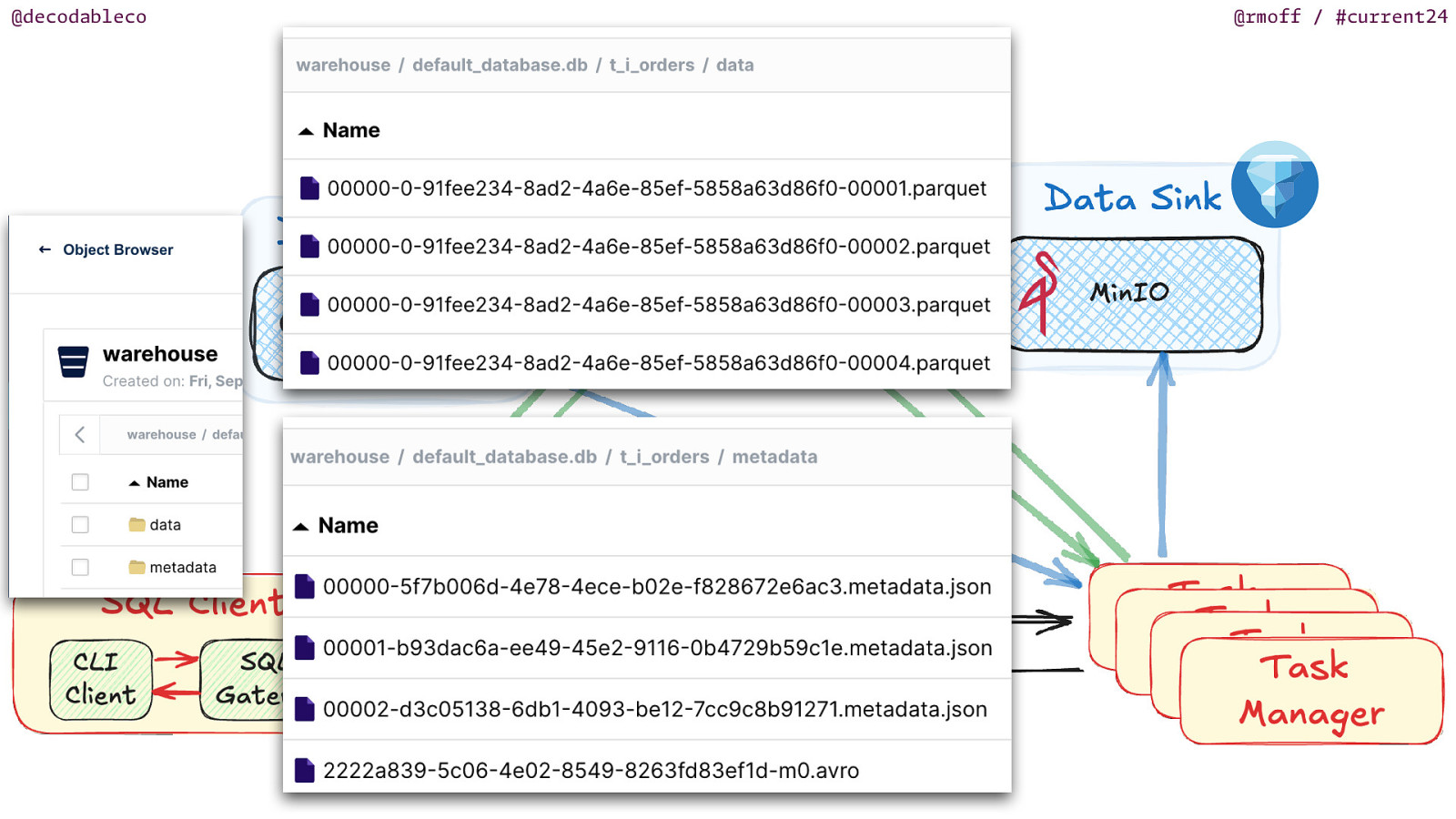

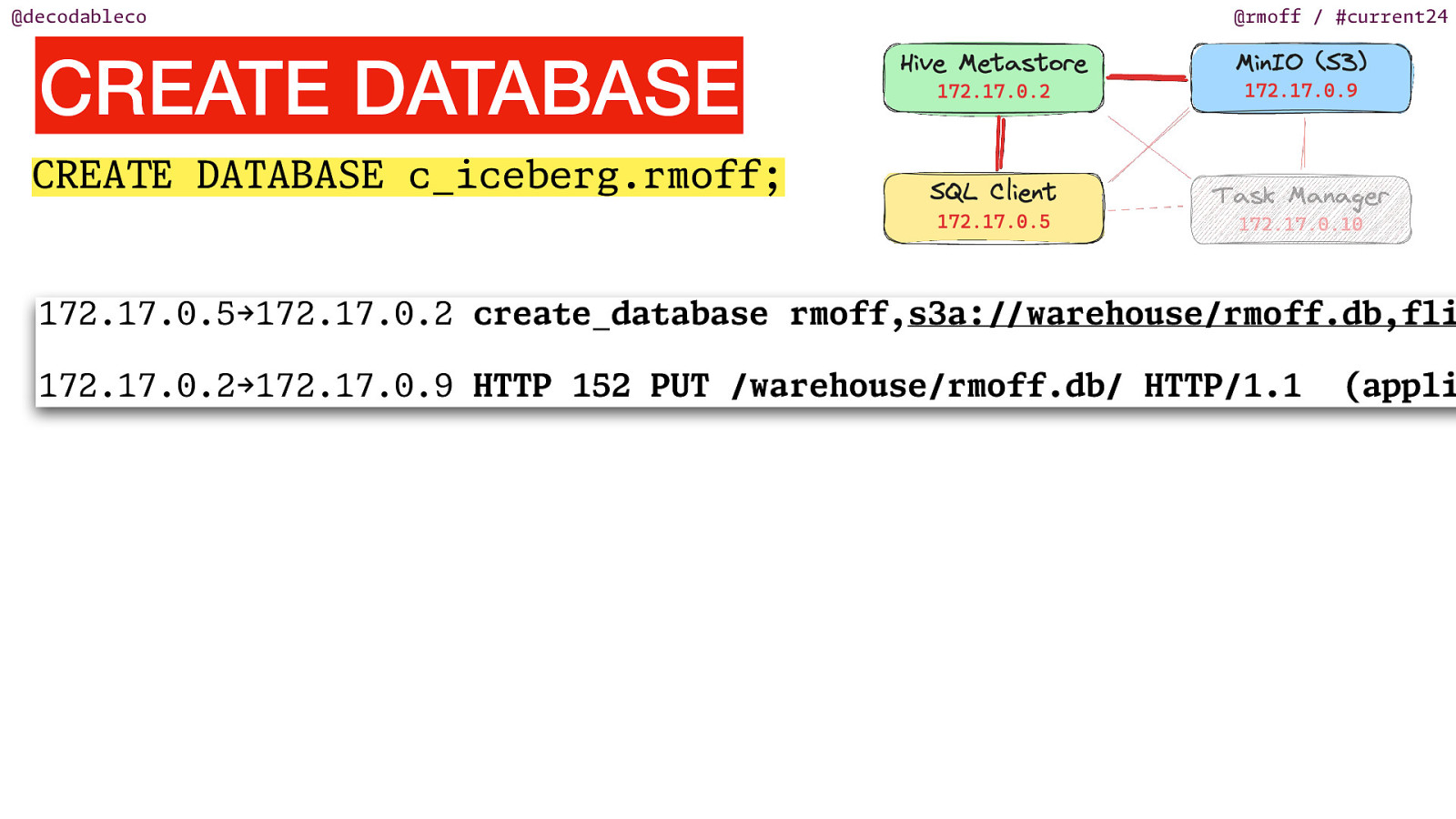

CREATE DATABASE

CREATE DATABASE c_iceberg.rmoff;

172.17.0.5 → 172.17.0.2 create_database rmoff,s3a://warehouse/rmoff.db,fli
172.17.0.2 → 172.17.0.9 HTTP 152 PUT /warehouse/rmoff.db/ HTTP/1.1 (appli

Slide 99

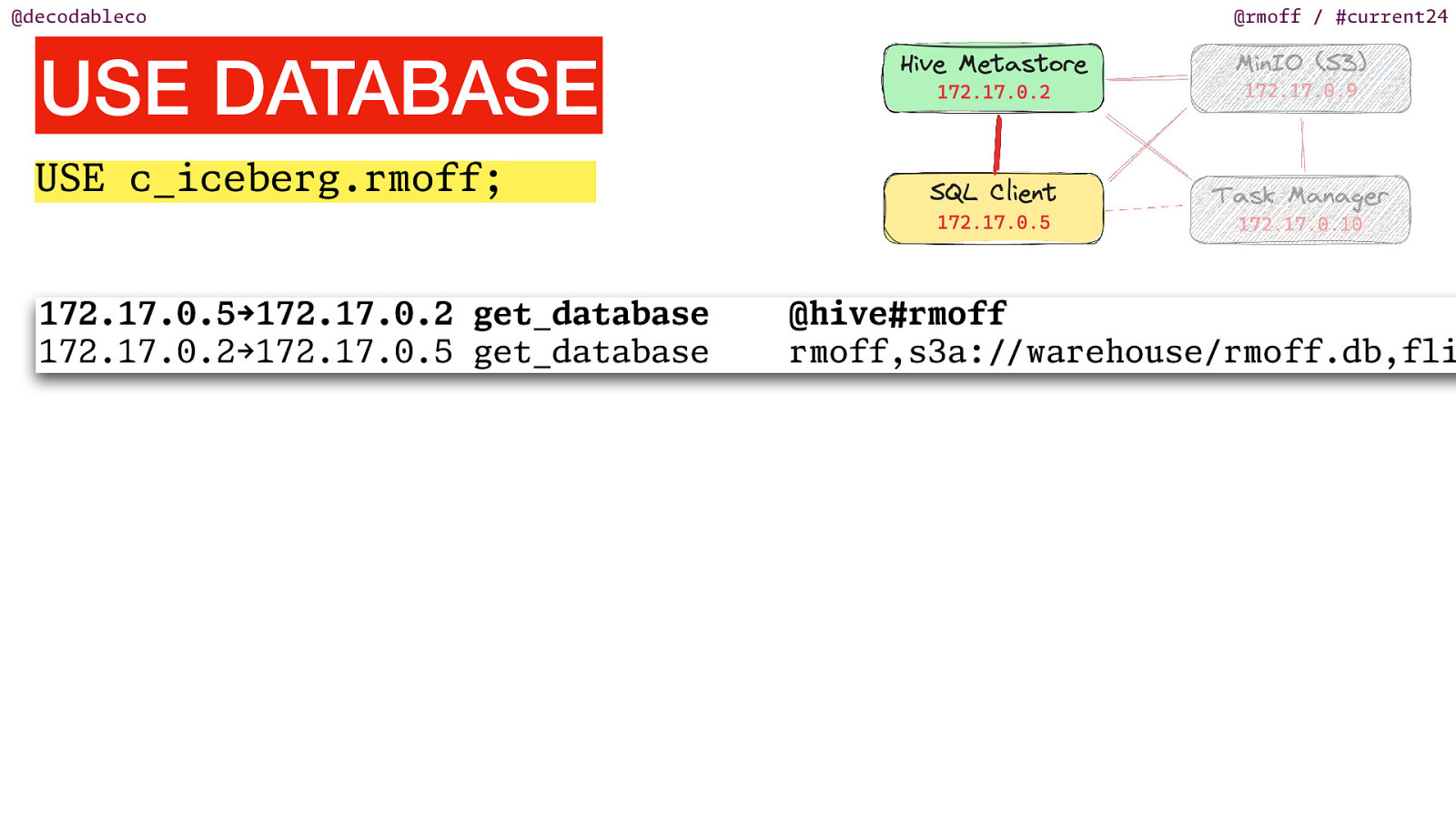

USE DATABASE

USE c_iceberg.rmoff;

172.17.0.5 → 172.17.0.2 get_database @hive#rmoff
172.17.0.2 → 172.17.0.5 get_database rmoff,s3a://warehouse/rmoff.db,fli

Slide 100

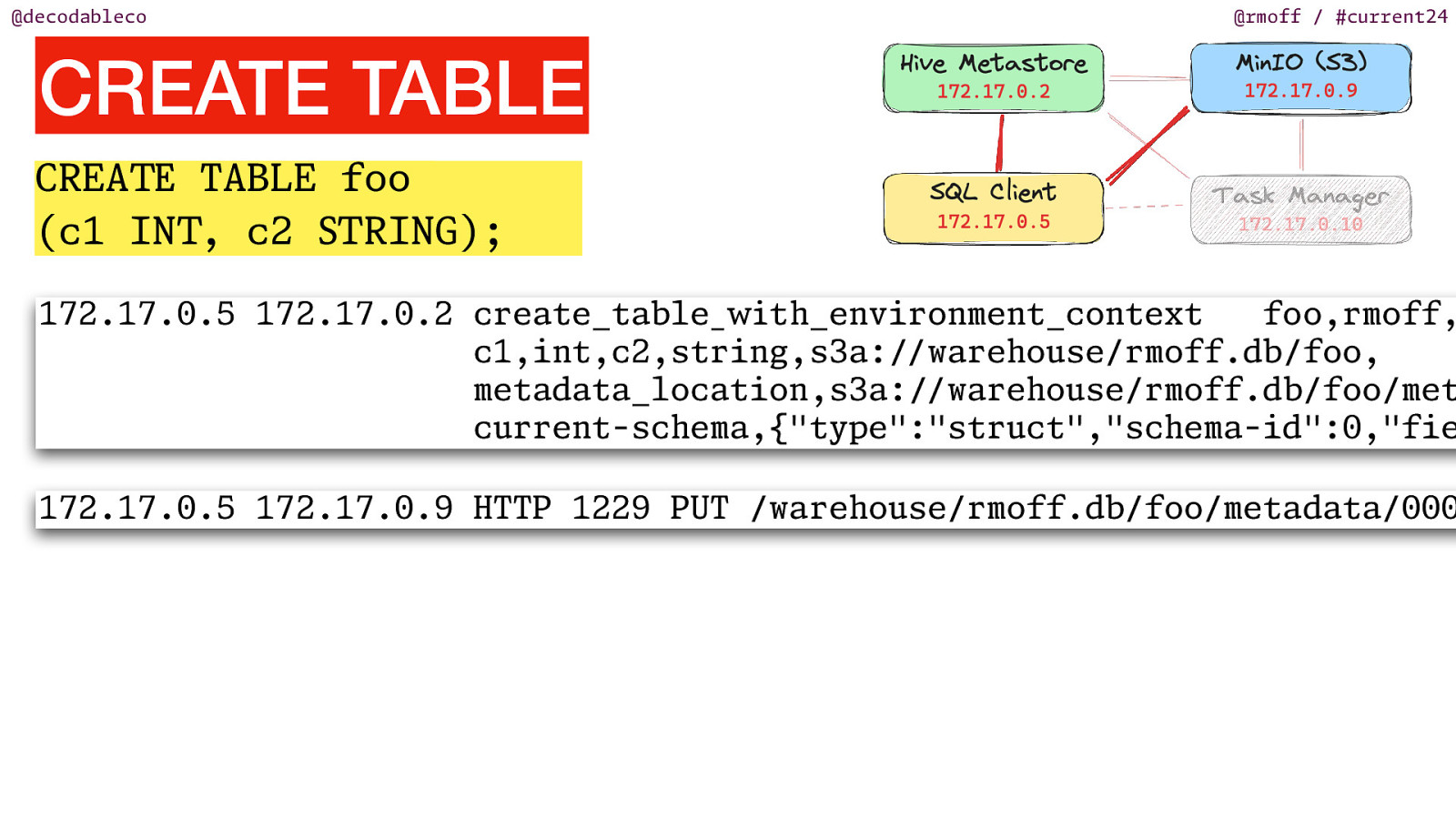

CREATE TABLE

CREATE TABLE foo (c1 INT, c2 STRING);

172.17.0.5 → 172.17.0.2 create_table_with_environment_context foo,rmoff,
    c1,int,c2,string,s3a://warehouse/rmoff.db/foo,
    metadata_location,s3a://warehouse/rmoff.db/foo/met
    current-schema,{"type":"struct","schema-id":0,"fie
172.17.0.5 → 172.17.0.9 HTTP 1229 PUT /warehouse/rmoff.db/foo/metadata/000

Slide 101

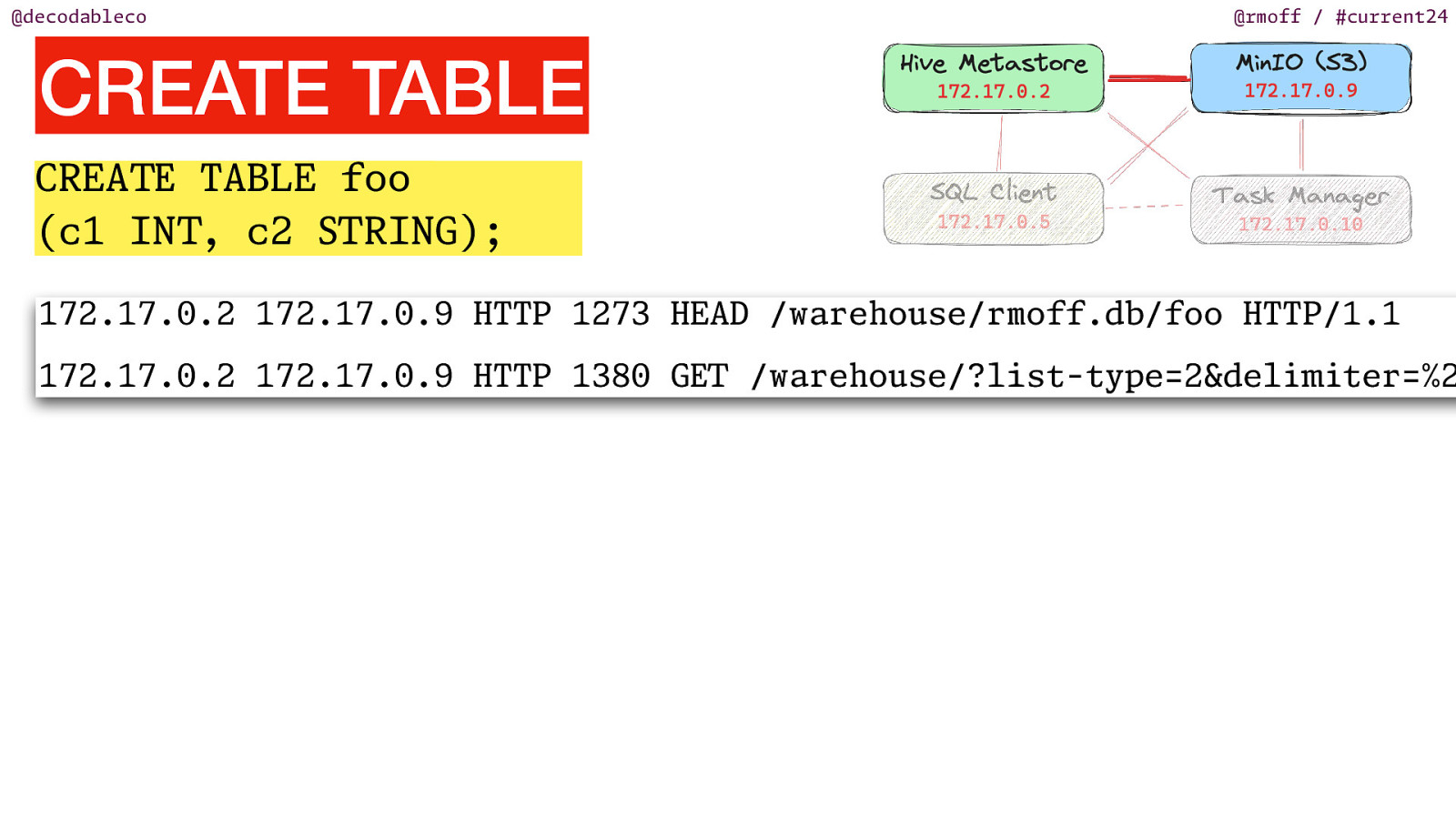

CREATE TABLE

CREATE TABLE foo (c1 INT, c2 STRING);

172.17.0.2 → 172.17.0.9 HTTP 1273 HEAD /warehouse/rmoff.db/foo HTTP/1.1
172.17.0.2 → 172.17.0.9 HTTP 1380 GET /warehouse/?list-type=2&delimiter=%2F&

Slide 102

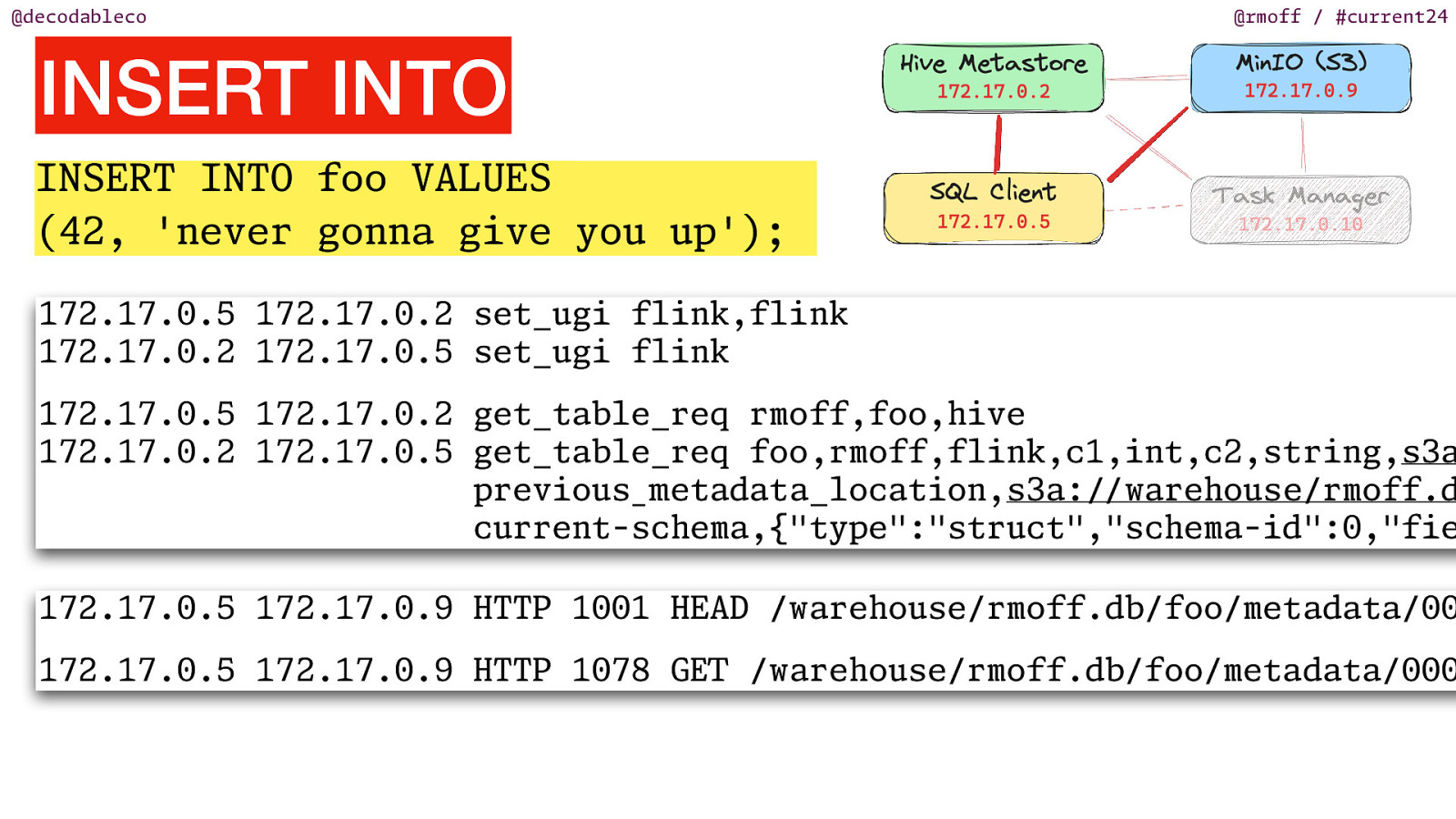

INSERT INTO

INSERT INTO foo VALUES (42, 'never gonna give you up');

172.17.0.5 → 172.17.0.2 set_ugi flink,flink
172.17.0.2 → 172.17.0.5 set_ugi flink
172.17.0.5 → 172.17.0.2 get_table_req rmoff,foo,hive
172.17.0.2 → 172.17.0.5 get_table_req foo,rmoff,flink,c1,int,c2,string,s3a
    previous_metadata_location,s3a://warehouse/rmoff.d
    current-schema,{"type":"struct","schema-id":0,"fie
172.17.0.5 → 172.17.0.9 HTTP 1001 HEAD /warehouse/rmoff.db/foo/metadata/00
172.17.0.5 → 172.17.0.9 HTTP 1078 GET /warehouse/rmoff.db/foo/metadata/000

Slide 103

INSERT INTO

INSERT INTO foo VALUES (42, 'never gonna give you up');

[INFO] Submitting SQL update statement to the cluster...
[INFO] SQL update statement has been successfully submitted to the clust
Job ID: cc43d32a6bb0e2faab5270e542c70499

Slide 104

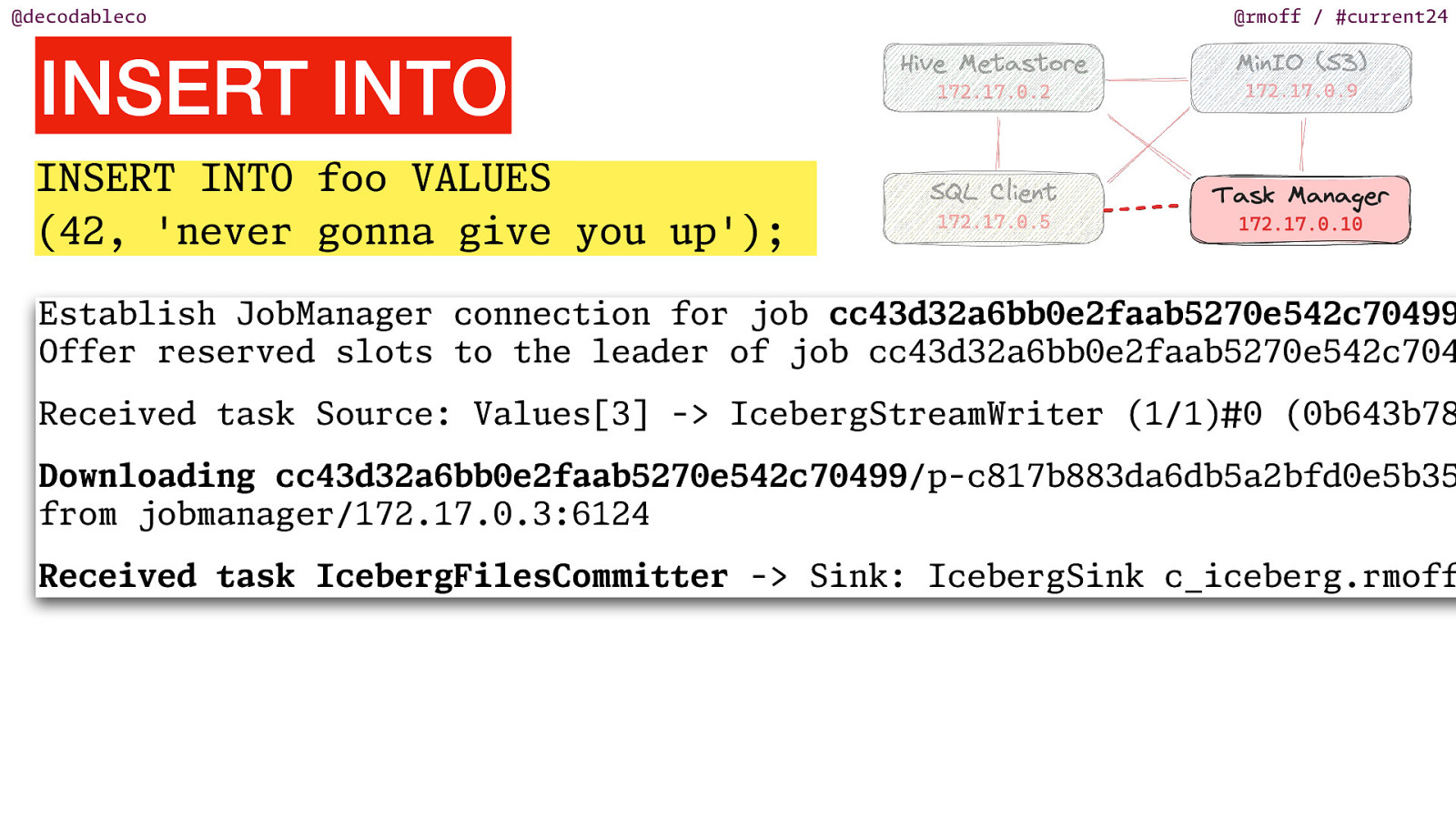

INSERT INTO

INSERT INTO foo VALUES (42, 'never gonna give you up');

Establish JobManager connection for job cc43d32a6bb0e2faab5270e542c70499
Offer reserved slots to the leader of job cc43d32a6bb0e2faab5270e542c704
Received task Source: Values[3] -> IcebergStreamWriter (1/1)#0 (0b643b78
Downloading cc43d32a6bb0e2faab5270e542c70499/p-c817b883da6db5a2bfd0e5b35 from jobmanager/172.17.0.3:6124
Received task IcebergFilesCommitter -> Sink: IcebergSink c_iceberg.rmoff

Slide 105

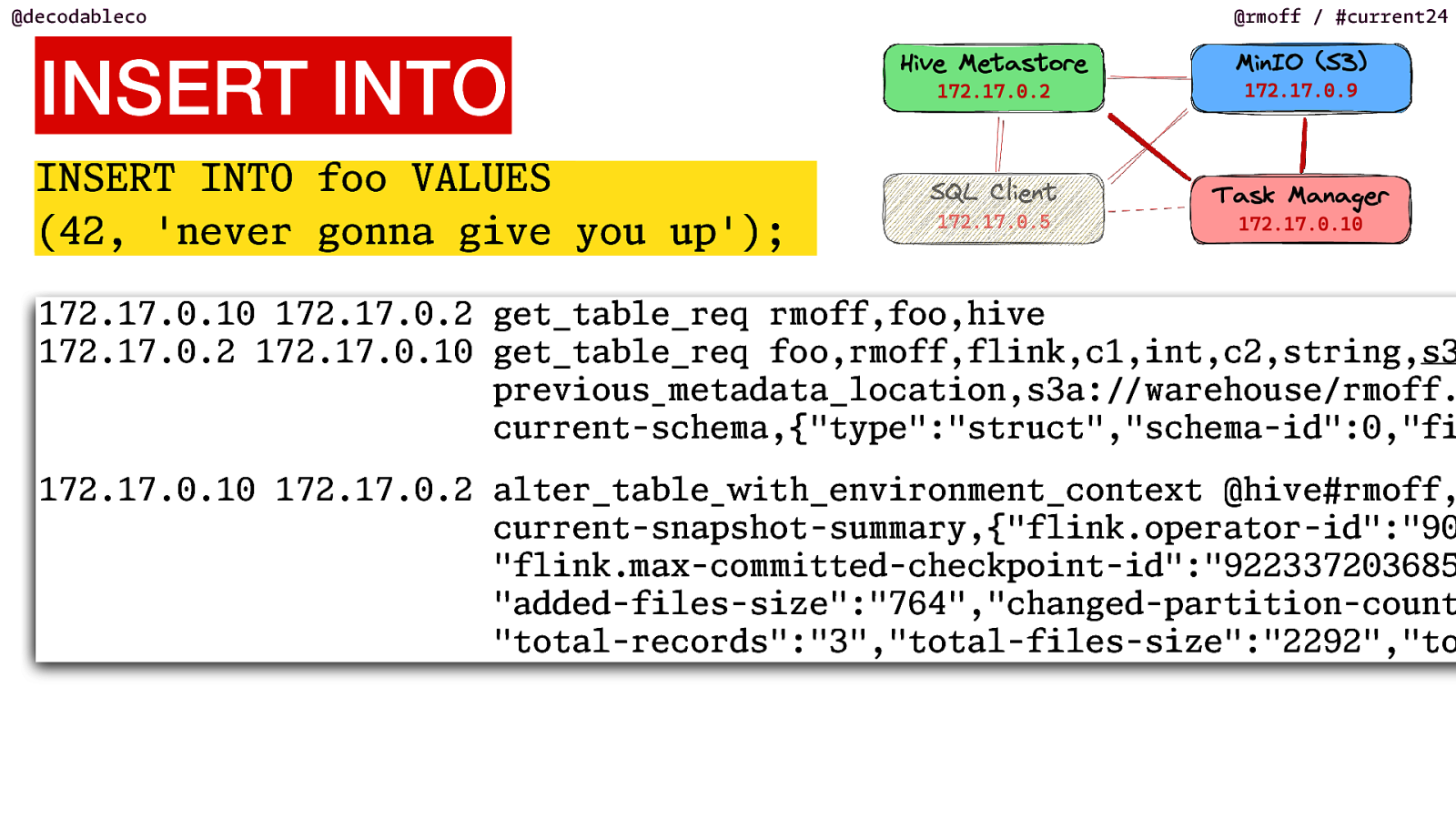

INSERT INTO

INSERT INTO foo VALUES (42, 'never gonna give you up');

172.17.0.10 → 172.17.0.2 get_table_req rmoff,foo,hive
172.17.0.2 → 172.17.0.10 get_table_req foo,rmoff,flink,c1,int,c2,string,s3
    previous_metadata_location,s3a://warehouse/rmoff.
    current-schema,{"type":"struct","schema-id":0,"fi
172.17.0.10 → 172.17.0.2 alter_table_with_environment_context @hive#rmoff,
    current-snapshot-summary,{"flink.operator-id":"90
    "flink.max-committed-checkpoint-id":"922337203685
    "added-files-size":"764","changed-partition-count
    "total-records":"3","total-files-size":"2292","to

Slide 106

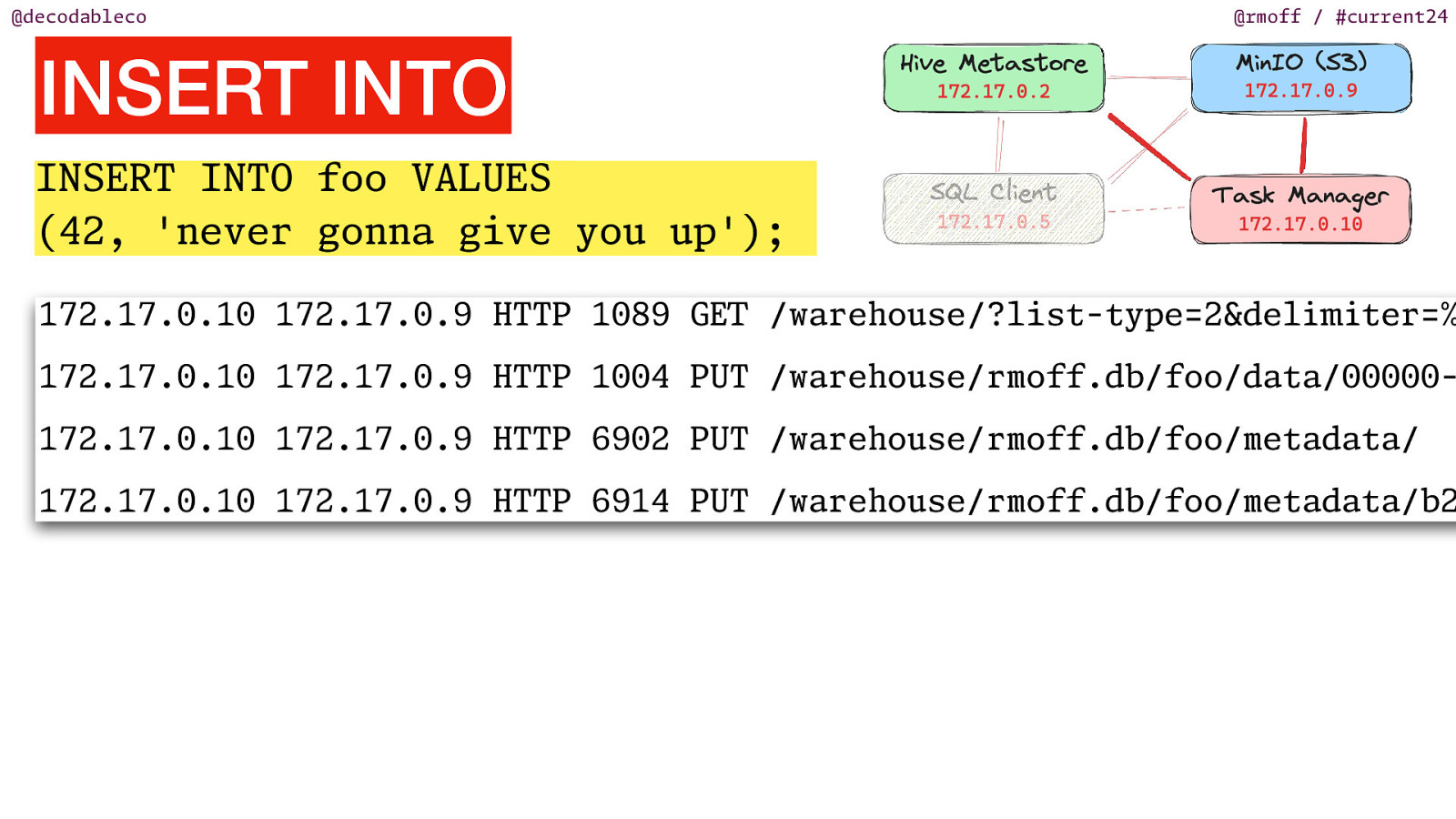

INSERT INTO

INSERT INTO foo VALUES (42, 'never gonna give you up');

172.17.0.10 → 172.17.0.9 HTTP 1089 GET /warehouse/?list-type=2&delimiter=%2F
172.17.0.10 → 172.17.0.9 HTTP 1004 PUT /warehouse/rmoff.db/foo/data/00000
172.17.0.10 → 172.17.0.9 HTTP 6902 PUT /warehouse/rmoff.db/foo/metadata/
172.17.0.10 → 172.17.0.9 HTTP 6914 PUT /warehouse/rmoff.db/foo/metadata/b2
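The packet captures on the preceding slides answer "what runs where" by showing which host talks to which. A rough sketch for summarising such a capture: the hypothetical filter `conversations` counts lines per source and destination pair, assuming each line starts with `<source> → <destination>` as in the tshark output above. Which IP belongs to which component is something you would have to look up in your own environment.

```shell
# Read tshark summary lines on stdin and count packets per "source → destination" pair.
# Assumption: fields 1-3 are the source address, the arrow and the destination address.
conversations() {
  awk '$2 == "→" { count[$1 " → " $3]++ }
       END { for (k in count) print count[k], k }' | LC_ALL=C sort -k2
}
```

A pair that never appears (for example, no traffic from a task manager to the object store) is as informative as one that does.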

Slide 107


Slide 108


Slide 109

What SQL runs where?

Slide 110


Slide 111


Slide 112

In conclusion…

Slide 113

Troubleshoot methodically

Slide 114

Understand the architecture

Slide 115

Get your JARs right

Slide 116

Get your toolbox ready :)

Slide 117

decodable.co/blog

Slide 118

EOF